Abstract

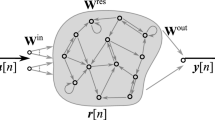

Echo state networks (ESNs), which belong to the wider family of reservoir computing methods, are a powerful tool for the analysis of dynamic data. In an ESN, the input signal is fed to a fixed (possibly large) pool of interconnected neurons, whose state is then read by an adaptable layer to provide the output. This last layer is generally trained via a regularized linear least-squares procedure. In this paper, we consider the more complex problem of training an ESN for classification in a semi-supervised setting, wherein only a subset of the input sequences is labeled with the desired response. To solve this problem, we combine the standard ESN with a semi-supervised support vector machine (S3VM) for training its adaptable connections. Additionally, we propose a novel algorithm for solving the resulting non-convex optimization problem, based on a series of successive approximations of the original problem. The resulting procedure is highly customizable and also admits a principled way of parallelizing training over multiple processors/computers. An extensive set of experimental evaluations on audio classification tasks supports the presented semi-supervised ESN as a practical tool for dynamic problems requiring the analysis of partially labeled data.
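To make the standard, fully supervised ESN baseline described above concrete, the following is a minimal NumPy sketch, not the semi-supervised S3VM procedure proposed in the paper. It assumes a tanh reservoir, uses the final reservoir state as the representation of each sequence, and trains the readout by ridge regression. All function names, the toy data, and the hyperparameter values (reservoir size, spectral radius, ridge factor) are illustrative assumptions and are not taken from the paper or its code repository.

```python
import numpy as np


def make_reservoir(n_inputs, n_reservoir, spectral_radius=0.9, seed=0):
    """Draw fixed (untrained) input and recurrent weights."""
    rng = np.random.default_rng(seed)
    W_in = rng.uniform(-0.5, 0.5, size=(n_reservoir, n_inputs))
    W = rng.uniform(-0.5, 0.5, size=(n_reservoir, n_reservoir))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius.
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    return W_in, W


def final_state(sequence, W_in, W):
    """Run one sequence (shape: time x n_inputs) through the reservoir."""
    h = np.zeros(W.shape[0])
    for x in sequence:
        h = np.tanh(W_in @ x + W @ h)
    return h


def train_readout(states, targets, ridge=1e-2):
    """Ridge regression: solve (S^T S + ridge * I) w = S^T y."""
    A = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(A, states.T @ targets)


# Toy data (assumed): two classes of noisy constant sequences.
rng = np.random.default_rng(1)
labels = np.repeat([1.0, -1.0], 20)
sequences = [y + 0.1 * rng.standard_normal((20, 1)) for y in labels]

W_in, W = make_reservoir(n_inputs=1, n_reservoir=50)
states = np.stack([final_state(s, W_in, W) for s in sequences])
w_out = train_readout(states, labels)
predictions = np.sign(states @ w_out)
```

Only `w_out` is learned here; `W_in` and `W` stay fixed after initialization, which is what keeps training a linear problem. In the semi-supervised setting the abstract describes, part of `labels` would be unavailable, and this least-squares step would no longer apply directly to the unlabeled sequences.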

Notes

https://www.jyu.fi/hum/laitokset/musiikki/en/research/coe/materials/mirtoolbox [last visited on November 10, 2016]

https://bitbucket.org/ispamm/semi-supervised-esn [last visited on November 10, 2016]

http://sound.natix.org/ [last visited on November 10, 2016]

http://learning.eng.cam.ac.uk/carl/code/minimize/ [last visited on November 10, 2016]

Ethics declarations

Conflict of Interests

The authors declare that they have no conflict of interest.

Ethical Approval

This article does not contain any studies with human or animal subjects performed by any of the authors.

About this article

Cite this article

Scardapane, S., Uncini, A. Semi-supervised Echo State Networks for Audio Classification. Cogn Comput 9, 125–135 (2017). https://doi.org/10.1007/s12559-016-9439-z

DOI: https://doi.org/10.1007/s12559-016-9439-z