Abstract

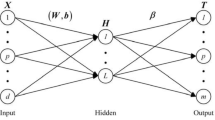

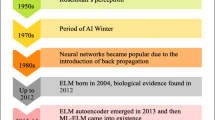

As a single-hidden-layer feed-forward neural network, the extreme learning machine (ELM) has been extensively studied for its short training time and good generalization ability. Recently, as deep learning has become a research hotspot, deep ELM algorithms such as the multi-layer extreme learning machine (ML-ELM) and the hierarchical extreme learning machine (H-ELM) have also been proposed. However, deep ELM algorithms have two main shortcomings: (1) when the model has few layers, the random feature mapping prevents the sample features from being fully learned and utilized; (2) when the model has many layers, the validity of the sample features decreases after repeated abstraction and generalization. To solve these problems, this paper proposes a densely connected deep ELM algorithm, dense-HELM (D-HELM). Benchmark data sets of different sizes are used to evaluate the performance of the D-HELM algorithm. Compared with the H-ELM algorithm on the benchmark data sets, the average test accuracy increases by 5.34% and the average training time decreases by 21.15%. On the NORB dataset, the proposed D-HELM algorithm still achieves the best classification results and the fastest training speed. By using a densely connected network structure, the D-HELM algorithm makes full use of the features learned by the hidden layers and effectively reduces the number of parameters. Compared with the H-ELM algorithm, the D-HELM algorithm significantly improves recognition accuracy and accelerates training.
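To make the idea of dense connectivity in an ELM-style network concrete, the following is a minimal sketch and not the authors' D-HELM implementation. It assumes plain random projections with a `tanh` activation for each hidden layer (H-ELM itself learns its feature layers with ELM autoencoders), and a ridge-regularized least-squares solve for the output weights, as in a standard ELM. The function names, the scaling of the random weights, and the `reg` parameter are all hypothetical choices made for illustration. The point it shows is only that each layer receives the concatenation of the input and all earlier layer outputs, and that the classifier sees every layer's features.

```python
import numpy as np


def dense_features(X, weights):
    """Propagate X through densely connected random layers.

    Each layer takes the concatenation of the raw input and all
    previous layer outputs (assumed form of dense connectivity).
    """
    feats = [X]
    for W in weights:
        Z = np.concatenate(feats, axis=1)
        feats.append(np.tanh(Z @ W))
    # The final representation keeps the features of every layer.
    return np.concatenate(feats, axis=1)


def fit_dense_elm(X, T, layer_sizes, reg=1e-2, seed=0):
    """Draw random hidden weights, then solve for output weights in closed form."""
    rng = np.random.default_rng(seed)
    weights = []
    in_dim = X.shape[1]
    for n_hidden in layer_sizes:
        # Random, untrained weights; scaled to keep tanh away from saturation.
        weights.append(rng.standard_normal((in_dim, n_hidden)) / np.sqrt(in_dim))
        in_dim += n_hidden  # the next layer also sees this layer's output
    H = dense_features(X, weights)
    # Ridge-regularized least squares: beta = (H^T H + reg * I)^(-1) H^T T
    beta = np.linalg.solve(H.T @ H + reg * np.eye(H.shape[1]), H.T @ T)
    return weights, beta


def predict_dense_elm(X, weights, beta):
    """Return class scores; take argmax over axis 1 for predicted labels."""
    return dense_features(X, weights) @ beta
```

With one-hot targets `T`, a call such as `fit_dense_elm(X, T, [8, 8])` on 4-dimensional inputs produces a 20-dimensional final representation (4 input features plus two layers of 8), which illustrates how dense connections reuse earlier features rather than discarding them.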

Funding

This work is partially supported by the Key Research and Development Program of China (2016YFC0301400) and the Natural Science Foundation of China (51379198, 51075377, and 31202036).

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Informed Consent

Informed consent was not required as no humans or animals were involved.

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Cite this article

Jiang, X.W., Yan, T.H., Zhu, J.J. et al. Densely Connected Deep Extreme Learning Machine Algorithm. Cogn Comput 12, 979–990 (2020). https://doi.org/10.1007/s12559-020-09752-2

DOI: https://doi.org/10.1007/s12559-020-09752-2