Abstract

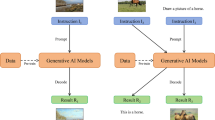

Because generative adversarial networks (GANs) can learn a data distribution and generate new samples from it, they have become a research hotspot in deep learning and cognitive computation. Training a GAN, however, depends heavily on a large set of training data, and in many real-world applications it is difficult to acquire as much data as needed. In this paper, we propose a novel generative adversarial network called ML-CGAN for generating authentic and diverse images from few training data. ML-CGAN consists of two modules: a conditional generative adversarial network (CGAN) backbone and a meta-learner structure. The CGAN backbone generates the images, while the meta-learner structure is an auxiliary network that provides the deconvolutional weights for the generator of the CGAN backbone. Qualitative and quantitative experimental results on the MNIST, Fashion MNIST, CelebA and CIFAR-10 data sets demonstrate the superiority of ML-CGAN over state-of-the-art models. Specifically, the results show that the meta-learner structure can learn prior knowledge and transfer it to new tasks, which helps generate authentic and diverse images in those tasks with few training data.
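The division of labour described above, with an auxiliary network supplying the deconvolutional weights that a conditional generator then uses, can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the abstract does not specify the architecture, so the linear `MetaLearner`, the `task_embedding` input, the single-layer generator, and all sizes below are hypothetical choices made only to show the data flow.

```python
import numpy as np


def conv_transpose2d(x, w, stride=1):
    """Transposed ("deconvolutional") layer without padding.

    x: (C_in, H, W) feature map; w: (C_in, C_out, K, K) kernel.
    Returns an array of shape (C_out, (H-1)*stride+K, (W-1)*stride+K).
    """
    c_in, h, wd = x.shape
    _, c_out, k, _ = w.shape
    out = np.zeros((c_out, (h - 1) * stride + k, (wd - 1) * stride + k))
    for i in range(h):
        for j in range(wd):
            # Each input pixel stamps a channel-weighted kernel onto the output.
            patch = np.tensordot(x[:, i, j], w, axes=(0, 0))  # (C_out, K, K)
            out[:, i * stride:i * stride + k, j * stride:j * stride + k] += patch
    return out


class MetaLearner:
    """Hypothetical auxiliary network: maps a task embedding to a kernel."""

    def __init__(self, task_dim, c_in, c_out, k, rng):
        self.shape = (c_in, c_out, k, k)
        # A single linear projection stands in for the real meta-learner.
        self.proj = rng.normal(0.0, 0.02, size=(task_dim, c_in * c_out * k * k))

    def __call__(self, task_embedding):
        return (task_embedding @ self.proj).reshape(self.shape)


def generate(z, label, n_classes, weights):
    """Conditional generator whose deconvolution kernel is supplied externally."""
    one_hot = np.eye(n_classes)[label]
    h = np.concatenate([z, one_hot])   # CGAN-style conditioning on the label
    x = h.reshape(-1, 1, 1)            # treat as a (C_in, 1, 1) feature map
    return np.tanh(conv_transpose2d(x, weights))


# Assumed sizes, chosen only for the illustration.
rng = np.random.default_rng(0)
z_dim, n_classes, task_dim, kernel = 8, 10, 16, 4

meta = MetaLearner(task_dim, z_dim + n_classes, c_out=1, k=kernel, rng=rng)
task_embedding = rng.normal(size=task_dim)   # placeholder for a task summary
weights = meta(task_embedding)               # weights come from the meta-learner
image = generate(rng.normal(size=z_dim), label=3, n_classes=n_classes, weights=weights)
```

In this sketch the generator owns no deconvolution parameters of its own, so anything learned across tasks has to live in the meta-learner's projection. That is one simple way to picture how prior knowledge could be carried over to a new task with few samples; training, the discriminator, and the real layer stack are omitted.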

Acknowledgements

This work was supported by the Major Project for New Generation of AI under Grant No. 2018AAA0100400; the National Key R&D Program of China under Grant No. 2016YFC1401004; the National Natural Science Foundation of China (NSFC) under Grant Nos. 41706010 and 61876155; the Joint Fund of the Equipment’s Pre-Research and Ministry of Education of China under Grant No. 6141A020337; the Natural Science Foundation of Jiangsu Province under Grant No. BK20181189; the Key Program Special Fund in XJTLU under Grant Nos. KSF-A-01, KSF-T-06, KSF-E-26, KSF-P-02 and KSF-A-10; the Project for Graduate Student Education Reformation and Research of Ocean University of China under Grant No. HDJG19001; and the Fundamental Research Funds for the Central Universities of China.

Ethics declarations

Conflicts of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Ma, Y., Zhong, G., Liu, W. et al. ML-CGAN: Conditional Generative Adversarial Network with a Meta-learner Structure for High-Quality Image Generation with Few Training Data. Cogn Comput 13, 418–430 (2021). https://doi.org/10.1007/s12559-020-09796-4

DOI: https://doi.org/10.1007/s12559-020-09796-4