Abstract

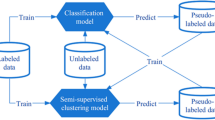

Co-clustering simultaneously clusters the sample (row) and feature (column) dimensions of a data matrix, so it can provide better insight into the data than traditional one-sided clustering. Adjustment learning extracts valuable information from chunklets for unsupervised cluster learning in specific scenarios, but it can easily be extended to semi-supervised and supervised settings. In this paper, we propose a novel co-clustering framework, named co-adjustment learning for co-clustering (CALCC), which can be used in unsupervised, semi-supervised and supervised settings alike. A novel co-adjustment learning (CAL) model is proposed to extract meaningful representations in both the sample space and the feature space for co-clustering. Under the guidance of chunklet information, CAL not only performs both sample projection and feature projection, but also transforms the original data into another space with improved separability. The row partition matrix and the column partition matrix are then obtained by clustering the representations learned by the CAL model. To demonstrate the effectiveness of the framework, an unsupervised instance of CALCC is compared extensively with several related methods (including classic co-clustering methods and state-of-the-art methods closely related to our work) on several image and other real-world data sets. The experimental results show the superior performance of the CAL model in discovering discriminative representations and demonstrate that the CALCC framework is more effective than the related methods. In addition, chunklet information can effectively enhance the expressive ability of the learned representations.
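The abstract does not state CAL's objective, so the following is only a minimal sketch under explicit assumptions of how chunklet-guided projections in both spaces could feed a co-clustering step. It swaps in the Relevant Component Analysis (RCA) whitening from adjustment learning (Shental et al., ECCV 2002): W = C^(-1/2), where C is the average within-chunklet covariance. It applies this whitening separately to the samples (rows) and to the features (columns), then runs k-means on each representation to read off a row partition matrix and a column partition matrix. The function names, the ridge parameter `reg`, the farthest-point k-means initialisation and the toy data are all hypothetical choices for illustration; this is not the CALCC algorithm itself.

```python
import numpy as np


def rca_whitening(X, chunklets, reg=0.1):
    """RCA-style whitening W = C^(-1/2) from chunklets over the rows of X.

    X: (m, p) array, one point per row. chunklets: list of row-index lists,
    each a group of points assumed to share a (possibly unknown) label.
    reg: ridge proportional to the mean eigenvalue of C (an assumption of
    this sketch) so C stays invertible when chunklets span few directions.
    """
    p = X.shape[1]
    C = np.zeros((p, p))
    n_points = 0
    for idx in chunklets:
        Z = X[idx] - X[idx].mean(axis=0)   # centre each chunklet on its own mean
        C += Z.T @ Z
        n_points += len(idx)
    C /= n_points                           # average within-chunklet covariance
    C += (reg * np.trace(C) / p + 1e-12) * np.eye(p)
    vals, vecs = np.linalg.eigh(C)          # C is symmetric positive definite
    return (vecs * vals ** -0.5) @ vecs.T   # symmetric inverse square root


def kmeans(Z, k, n_iter=100):
    """Plain Lloyd k-means with deterministic farthest-point initialisation."""
    centers = [Z[0]]
    for _ in range(1, k):
        d2 = np.min([((Z - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(Z[np.argmax(d2)])
    centers = np.array(centers)
    labels = None
    for _ in range(n_iter):
        dists = ((Z[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        new_labels = dists.argmin(axis=1)
        if labels is not None and np.array_equal(new_labels, labels):
            break                           # assignments stable: converged
        labels = new_labels
        centers = np.array([Z[labels == j].mean(axis=0) if np.any(labels == j)
                            else centers[j] for j in range(k)])
    return labels


def co_adjust_cocluster(X, row_chunklets, col_chunklets, k_rows, k_cols):
    """Hypothetical co-adjustment sketch: whiten both spaces, then cluster each."""
    W_r = rca_whitening(X, row_chunklets)     # acts on samples (rows of X)
    W_c = rca_whitening(X.T, col_chunklets)   # acts on features (columns of X)
    row_repr = X @ W_r                        # W_r is symmetric: row i -> W_r x_i
    col_repr = X.T @ W_c                      # column j -> W_c applied to column j
    row_labels = kmeans(row_repr, k_rows)
    col_labels = kmeans(col_repr, k_cols)
    R = np.eye(k_rows)[row_labels]            # n x k row partition (indicator) matrix
    Cp = np.eye(k_cols)[col_labels]           # d x l column partition matrix
    return R, Cp


# Toy 8 x 6 matrix with a planted 2 x 2 block structure plus Gaussian noise.
rng = np.random.default_rng(0)
blocks = np.array([[5.0, 0.0], [0.0, 5.0]])
row_truth = np.array([0, 0, 0, 0, 1, 1, 1, 1])
col_truth = np.array([0, 0, 0, 1, 1, 1])
X = blocks[row_truth][:, col_truth] + 0.2 * rng.standard_normal((8, 6))

# Hypothetical chunklets: rows {0,1} and {4,5}, columns {0,1} and {3,4}.
R, Cp = co_adjust_cocluster(X, [[0, 1], [4, 5]], [[0, 1], [3, 4]], 2, 2)
print("row clusters:", R.argmax(axis=1))
print("column clusters:", Cp.argmax(axis=1))
```

RCA gives relatively more weight to directions in which chunklet members vary little and less weight to directions in which they vary a lot, so the spread that k-means must tolerate inside a group is reduced. In a purely unsupervised setting the chunklets would have to come from some form of side information, which this sketch simply takes as given.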

Acknowledgements

This work is partially supported by the Key Program for International S&T Cooperation of Sichuan Province (No. 2019YFH0097) and the Science and Technology Support Project of Sichuan Province (Grant Nos. 2020YFG0045 and 2020YFG0238).

Ethics declarations

Conflicts of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

About this article

Cite this article

Zhang, J., Wang, H., Huang, S. et al. Co-Adjustment Learning for Co-Clustering. Cogn Comput 13, 504–517 (2021). https://doi.org/10.1007/s12559-021-09827-8

DOI: https://doi.org/10.1007/s12559-021-09827-8