Abstract

Background

Spiking neural networks (SNNs), often described as the next generation of neural networks inspired by the human brain, have shown promise for building energy-efficient systems because of their inherently dynamic representation and information-processing mechanisms. In deep reinforcement learning (DRL), however, SNNs usually perform worse than deep neural networks on high-dimensional continuous control. Related studies attribute this gap to insufficient model representation capacity and ineffective parameter training methods. To advance SNNs toward practical application, we target challenging high-dimensional control problems formulated as partially observable Markov decision processes (POMDPs).

Method

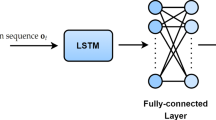

Based on the Twin Delayed Deep Deterministic Policy Gradient (TD3) algorithm, we propose the Spiking Memory TD3 algorithm (SM-TD3), a hybrid training framework that pairs a spiking Long Short-Term Memory (Spiking-LSTM) policy network with a deep critic network. The policy uses population encoding to improve input encoding precision, the Spiking-LSTM to provide memory, and spatio-temporal backpropagation to train its parameters.
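As an illustration of the population-encoding idea, the following is a minimal sketch and not the authors' implementation. It assumes Gaussian receptive fields with evenly spaced centers and Bernoulli spike sampling; the function names, the population size, the value range, `sigma`, and the number of time steps are all hypothetical choices made for the example.

```python
import math
import random


def population_encode(obs, pop_size=10, low=-3.0, high=3.0, sigma=0.5):
    """Return, per observation dimension, `pop_size` Gaussian receptive-field activations."""
    # Evenly spaced receptive-field centers shared by all dimensions (an assumption).
    step = (high - low) / (pop_size - 1)
    centers = [low + i * step for i in range(pop_size)]
    # Each activation lies in (0, 1] and peaks when the input equals a center.
    return [
        [math.exp(-0.5 * ((x - c) / sigma) ** 2) for c in centers]
        for x in obs
    ]


def to_spike_train(activations, timesteps=5, seed=0):
    """Sample Bernoulli spikes per time step, using each activation as a firing probability."""
    rng = random.Random(seed)
    return [
        [[1 if rng.random() < a else 0 for a in row] for row in activations]
        for _ in range(timesteps)
    ]


# Hypothetical 3-dimensional observation.
obs = [0.0, 1.5, -2.0]
activations = population_encode(obs)               # 3 dimensions x 10 neurons
spikes = to_spike_train(activations, timesteps=5)  # 5 steps x 3 dimensions x 10 neurons
```

Spreading each scalar input over a population of neurons lets several neurons with nearby centers respond to the same value, which is one common way to represent a continuous input with binary spikes at finer resolution than a single neuron allows.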

Results and Conclusions

We evaluate SM-TD3 on the PyBullet benchmark in three settings: the fully observable Markov decision process (MDP), random noise, and random sensor missing. The results show that SM-TD3, built on an energy-efficient SNN framework, solves POMDP problems in large-scale, high-dimensional tasks and reaches the same performance level as the deep LSTM-TD3 algorithm. SM-TD3 also remains competitively robust when transferred to the same environment under different conditions. Finally, we analyze energy consumption: SM-TD3 consumes only \(20\%\) to \(50\%\) of the energy of deep LSTM-TD3. For practical applications, the proposed SM-TD3 provides an effective solution that is both high-performing and energy-efficient.
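The two partially observable settings can be pictured as perturbations applied to a fully observed state vector. The sketch below is an assumption about how such perturbations might be implemented, not the benchmark's or the authors' code: it models random noise as additive zero-mean Gaussian noise and random sensor missing as independently zeroing each reading. The function names, `noise_std`, and `drop_prob` are hypothetical.

```python
import random


def add_random_noise(obs, noise_std=0.1, rng=random):
    """Return a copy of `obs` with zero-mean Gaussian noise added to every reading."""
    return [x + rng.gauss(0.0, noise_std) for x in obs]


def drop_random_sensors(obs, drop_prob=0.1, rng=random):
    """Return a copy of `obs` where each reading is zeroed with probability `drop_prob`."""
    # Zeroing a missing reading is an assumption; other placeholders are possible.
    return [0.0 if rng.random() < drop_prob else x for x in obs]


# Hypothetical fully observed state from a control task.
rng = random.Random(0)
full_obs = [0.5, -1.2, 0.3, 2.0]
noisy_obs = add_random_noise(full_obs, noise_std=0.1, rng=rng)
partial_obs = drop_random_sensors(full_obs, drop_prob=0.5, rng=rng)
```

Under either perturbation a single observation no longer determines the state, which is why a policy with memory, such as an LSTM-based one, is a natural fit for these settings.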

Acknowledgements

This work was supported in part by the National Key Research and Development Program of China under Grant 2017YFE0129700, in part by the National Natural Science Foundation of China (Key Program) under Grant 11932013, in part by the Tianjin Research Innovation Project for Postgraduate Students under Grant 2021YJSO2B01, and in part by the Tianjin Natural Science Foundation for Distinguished Young Scholars under Grant 18JCJQJC46100.

Ethics declarations

Ethical Standard

This article does not contain any studies with human participants performed by any of the authors.

Conflicts of Interest

All authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Cheng, H., Duan, F. & He, M. Spiking Memory Policy with Population-encoding for Partially Observable Markov Decision Process Problems. Cogn Comput 15, 1153–1166 (2023). https://doi.org/10.1007/s12559-022-10030-6

DOI: https://doi.org/10.1007/s12559-022-10030-6