Abstract

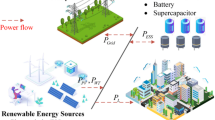

Interactions among schedulable equipment and the uncertainty inherent in microgrid (MG) systems make it increasingly difficult to establish accurate mathematical models for energy management. A data-driven energy management strategy is therefore needed to improve the stability and economy of MGs. In this paper, distributed generators (DGs) and an energy storage system (ESS) are taken as the control objects, and a data-driven energy management strategy based on prioritized experience replay soft actor-critic (PERSAC) is proposed for MGs. First, we construct an MG energy management model with the objective of minimizing the operation cost. Second, the energy management model is formulated as a Markov decision process (MDP), and the PERSAC algorithm is used to solve the MDP. Moreover, the sampling rule of the training process is optimized by using the prioritized experience replay (PER) method. Numerical examples demonstrate the effectiveness and practicability of the algorithm: by controlling the DGs and the ESS, the proposed algorithm achieves the lowest operation cost among the compared algorithms.
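To illustrate the sampling rule mentioned above, the following is a minimal sketch of a proportional prioritized experience replay buffer. It is not the implementation used in this paper: the class name `PrioritizedReplayBuffer`, the hyperparameter values `alpha`, `beta`, and `eps`, and the choice to normalize importance weights by the largest weight in the batch are all assumptions made for illustration.

```python
import random


class PrioritizedReplayBuffer:
    """Proportional prioritized experience replay (illustrative sketch).

    All names and default values here are assumptions, not taken from the paper.
    """

    def __init__(self, capacity, alpha=0.6, beta=0.4, eps=1e-6):
        self.capacity = capacity
        self.alpha = alpha    # how strongly priorities skew the sampling
        self.beta = beta      # strength of the importance-sampling correction
        self.eps = eps        # keeps every priority strictly positive
        self.data = []
        self.priorities = []
        self.pos = 0          # next slot to overwrite once the buffer is full

    def add(self, transition):
        # New transitions receive the current maximum priority,
        # so they are likely to be replayed soon after insertion.
        max_p = max(self.priorities, default=1.0)
        if len(self.data) < self.capacity:
            self.data.append(transition)
            self.priorities.append(max_p)
        else:
            self.data[self.pos] = transition
            self.priorities[self.pos] = max_p
        self.pos = (self.pos + 1) % self.capacity

    def sample(self, batch_size, rng=random):
        # Sampling probability P(i) = p_i^alpha / sum_k p_k^alpha.
        scaled = [p ** self.alpha for p in self.priorities]
        total = sum(scaled)
        probs = [s / total for s in scaled]
        idxs = rng.choices(range(len(self.data)), weights=probs, k=batch_size)
        # Importance weights w_i = (N * P(i))^(-beta), scaled by the batch maximum.
        n = len(self.data)
        weights = [(n * probs[i]) ** (-self.beta) for i in idxs]
        max_w = max(weights)
        weights = [w / max_w for w in weights]
        batch = [self.data[i] for i in idxs]
        return idxs, batch, weights

    def update_priorities(self, idxs, td_errors):
        # After a learning step, the priority becomes |TD error| + eps.
        for i, err in zip(idxs, td_errors):
            self.priorities[i] = abs(err) + self.eps
```

In an actor-critic training loop, the returned weights would typically multiply the per-sample critic loss, and `update_priorities` would be called with the new temporal-difference errors after each update. How these pieces are wired into the soft actor-critic updates in PERSAC is not specified in this section.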

Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

The authors would like to thank the associate editor and the reviewers for their detailed comments and valuable suggestions which considerably improved the presentation of the paper.

Funding

This work was jointly supported in part by the National Natural Science Foundation of China (61876097).

Ethics declarations

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed Consent

Informed consent was not required, as no humans or animals were involved.

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Bao, G., Xu, R. A Data-Driven Energy Management Strategy Based on Deep Reinforcement Learning for Microgrid Systems. Cogn Comput 15, 739–750 (2023). https://doi.org/10.1007/s12559-022-10106-3

DOI: https://doi.org/10.1007/s12559-022-10106-3