Abstract

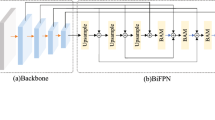

Image segmentation accuracy is critical in marine ecological monitoring with unmanned aerial vehicles (UAVs): by flying a drone over an area, we can quickly determine where a variety of species are located. However, in remote sensing images, objects from different classes often look remarkably similar, and many of the objects are small, so general-purpose segmentation networks perform poorly. Inspired by camouflaged object detection and by the way human attention guides the recognition of diverse objects, this research constructs attention networks that imitate the human cognitive system. We propose TriseNet, an attention-guided multi-scale fusion semantic segmentation network that addresses the high inter-class similarity and poor segmentation accuracy encountered in UAV scenes. First, we employ a bidirectional feature extraction network to extract low-level spatial information and high-level semantic information. Second, we use the attention-induced cross-level fusion module (ACFM) to build a new multi-scale fusion branch that performs cross-level learning and enhances the representation of similar-looking objects from different classes. Finally, we use the receptive field block (RFB) module to enlarge the receptive field, yielding richer features in specific layers. Inter-class similarity makes accurate segmentation considerably harder, and the three modules together improve feature representation and segmentation results. Experiments on our UAV dataset, UAV-OUC-SEG (55.61% MIoU), and on the public Cityscapes dataset (76.10% MIoU) demonstrate the efficacy of our approach. On both datasets, TriseNet delivers the best results compared with other prominent segmentation algorithms.
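The MIoU (mean intersection over union) scores quoted in the abstract average, over classes c, the per-class ratio IoU_c = TP_c / (TP_c + FP_c + FN_c). As a minimal sketch, assuming the common convention of accumulating one confusion matrix over all evaluated pixels and skipping an ignore label (255, as used for Cityscapes train IDs), the metric can be computed as below. The function names are hypothetical, and the exact evaluation protocol used for TriseNet is not stated in this section.

```python
from typing import List, Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     num_classes: int, ignore_index: int = 255) -> List[List[int]]:
    """Accumulate a num_classes x num_classes matrix (rows: ground truth, cols: prediction).

    Assumes every prediction is a valid class index in [0, num_classes).
    """
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        if t == ignore_index:
            continue  # assumed convention: pixels labelled "ignore" are not scored
        cm[t][p] += 1
    return cm


def mean_iou(cm: List[List[int]]) -> float:
    """Mean of per-class IoU = TP / (TP + FP + FN) over classes that occur."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                        # class-c pixels predicted as something else
        fp = sum(cm[r][c] for r in range(n)) - tp   # other pixels predicted as class c
        denom = tp + fp + fn
        if denom == 0:
            continue  # class absent from both labels and predictions: skipped (a design choice)
        ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    # Toy flattened "images": 3 classes, the last pixel carries the ignore label.
    gt = [0, 0, 1, 1, 2, 2, 255]
    pred = [0, 1, 1, 1, 2, 0, 0]
    cm = confusion_matrix(gt, pred, num_classes=3)
    print(f"MIoU = {mean_iou(cm):.2%}")  # prints MIoU = 50.00%
```

In the toy example the per-class IoUs are 1/3, 2/3 and 1/2, so their mean is 0.5. Summing one confusion matrix over the whole test set before dividing, rather than averaging per-image scores, is the usual choice because it weights every evaluated pixel equally.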

Data Availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Funding

This work was partially supported by the National Key Research and Development Program of China under Grant No. 2018AAA0100400, the Natural Science Foundation of Shandong Province under Grants No. ZR2020MF131 and No. ZR2021ZD19, and the Science and Technology Program of Qingdao under Grant No. 21-1-4-ny-19-nsh. We also thank the “Qingdao AI Computing Center” and the “Eco-Innovation Center” for providing inclusive computing power and MindSpore technical support during the completion of this paper.

Ethics declarations

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yao, F., Wang, S., Ding, L. et al. Attention-Guided Multi-Scale Fusion Network for Similar Objects Semantic Segmentation. Cogn Comput 16, 366–376 (2024). https://doi.org/10.1007/s12559-023-10206-8

DOI: https://doi.org/10.1007/s12559-023-10206-8