Abstract

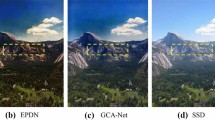

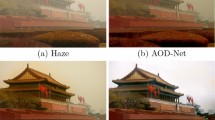

Haze poses a significant challenge in many computer vision applications, degrading the visual quality and perceptual clarity of captured scenes. The proposed AGD-Net is a U-Net style architecture built on an Attention-Guided Dense Inception encoder-decoder framework. Unlike existing methods that rely heavily on synthetic datasets generated with the CARLA simulator, our model is trained and evaluated exclusively on realistic data, which supports its effectiveness and reliability in practical scenarios. The key innovation of AGD-Net is its attention-guided mechanism, which lets the network focus on the most informative regions of a hazy image and suppress artifacts during dehazing. The dense inception modules further strengthen the representational capacity of the model, facilitating the extraction of intricate features from the input images. To assess the performance of AGD-Net, a detailed experimental analysis is conducted on four benchmark haze datasets. The results show that AGD-Net significantly outperforms state-of-the-art methods in terms of PSNR and SSIM, and a visual comparison of the dehazing results further supports these gains. By relying exclusively on realistic data, AGD-Net avoids the limitations associated with CARLA-based synthetic datasets and remains adaptable to real-world conditions. AGD-Net thus offers a robust and reliable solution for single-image dehazing and a significant advancement over existing methods.
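For readers unfamiliar with the PSNR metric named above, the following is a minimal sketch of how it is commonly computed between a haze-free reference image and a dehazed output. It is not the authors' evaluation code: the function name `psnr`, the flat pixel-list representation, and the 8-bit peak value of 255 are assumptions made for illustration.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], restored: Sequence[float],
         max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio (dB) between two equally sized flat pixel sequences.

    Assumption: pixels are given as flat sequences with peak value `max_value`
    (255 for 8-bit images); this is an illustrative helper, not the paper's code.
    """
    if len(reference) != len(restored):
        raise ValueError("images must have the same number of pixels")
    if not reference:
        raise ValueError("images must not be empty")
    # Mean squared error over all pixels.
    mse = sum((r - d) ** 2 for r, d in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images have unbounded PSNR
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    clean = [52, 55, 61, 59]    # hypothetical haze-free pixel values
    dehazed = [54, 55, 60, 57]  # hypothetical network output
    print(f"PSNR: {psnr(clean, dehazed):.2f} dB")
```

A higher PSNR indicates a dehazed image closer to the reference in the mean-squared-error sense. SSIM complements it by comparing local structure rather than per-pixel error.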

Data Availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

Funding

This submission was carried out without any external funding sources. The authors declare that they have no financial or non-financial interests related to this work.

Ethics declarations

Ethical Approval

This article contains no studies with human participants or animals performed by any of the authors.

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Vinay Chamola and Pratik Narang are senior members of IEEE.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chougule, A., Bhardwaj, A., Chamola, V. et al. AGD-Net: Attention-Guided Dense Inception U-Net for Single-Image Dehazing. Cogn Comput 16, 788–801 (2024). https://doi.org/10.1007/s12559-023-10244-2

DOI: https://doi.org/10.1007/s12559-023-10244-2