Abstract

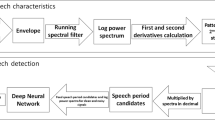

Recently, deep neural network (DNN)-based feature enhancement has been proposed for many speech applications, and DNN-enhanced features have achieved higher performance than raw features. However, phase information is discarded during most conventional DNN training. In this paper, we propose two joint phase- and magnitude-based feature (JPMF) enhancement methods for noise-robust voice activity detection (VAD): a DNN-based JPMF enhancement (JPMF with DNN) and a noise-aware training (NAT)-DNN-based JPMF enhancement (JPMF with NAT-DNN). Moreover, to further improve the performance of the proposed feature enhancement, the scores of the enhanced phase- and magnitude-based features are combined. Specifically, mel-frequency cepstral coefficients (MFCCs) and the mel-frequency delta phase (MFDP) are used as the magnitude and phase features, respectively. The experimental results show that the proposed feature enhancement significantly outperforms conventional magnitude-based feature enhancement. The proposed JPMF with NAT-DNN method achieves the best relative equal error rate (EER) compared with individual magnitude- and phase-based DNN speech enhancement. Moreover, the combined score of the enhanced MFCC and MFDP using JPMF with NAT-DNN further improves the VAD performance.
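The abstract mentions two evaluation ingredients without giving formulas: combining the scores of the magnitude (MFCC) and phase (MFDP) streams, and reporting the equal error rate. The sketch below shows one common way these could be computed. It is not the authors' implementation: the weighted linear fusion rule, the weight `alpha`, and the function names `combine_scores` and `equal_error_rate` are all assumptions introduced for illustration, and the per-frame scores are taken as given.

```python
import numpy as np

def combine_scores(mag_scores, phase_scores, alpha=0.5):
    """Weighted linear fusion of two per-frame score streams.

    alpha is a hypothetical fusion weight, not a value from the paper.
    """
    mag = np.asarray(mag_scores, dtype=float)
    phase = np.asarray(phase_scores, dtype=float)
    return alpha * mag + (1.0 - alpha) * phase

def equal_error_rate(scores, labels):
    """Approximate EER by sweeping a decision threshold over the scores.

    labels: 1 (speech) or 0 (non-speech) per frame; both classes must occur.
    Returns the error rate at the threshold where the false-acceptance and
    false-rejection rates are closest.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    thresholds = np.unique(scores)
    # Add one threshold above the maximum so "reject everything" is covered.
    thresholds = np.append(thresholds, thresholds[-1] + 1.0)
    best_gap, eer = np.inf, 1.0
    for t in thresholds:
        decisions = scores >= t              # frames classified as speech
        far = np.mean(decisions[~labels])    # non-speech accepted as speech
        frr = np.mean(~decisions[labels])    # speech rejected as non-speech
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2.0
    return eer
```

In use, the fused scores from `combine_scores` would be passed to `equal_error_rate` together with the frame-level speech/non-speech labels, and the weight would normally be tuned on held-out data.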

Acknowledgements

This work was partially supported by the JSPS KAKENHI Grant (No. 16K12461), the National Basic Research Program of China (No. 2013CB329301), and the National Natural Science Foundation of China (No. 61233009).

Cite this article

Phapatanaburi, K., Wang, L., Oo, Z. et al. Noise robust voice activity detection using joint phase and magnitude based feature enhancement. J Ambient Intell Human Comput 8, 845–859 (2017). https://doi.org/10.1007/s12652-017-0482-8

DOI: https://doi.org/10.1007/s12652-017-0482-8