Abstract

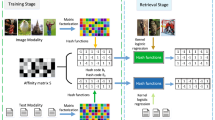

Cross-modal retrieval has attracted considerable attention in recent years. Recently, collective matrix factorization was proposed to learn common representations for cross-modal retrieval, based on the assumption that pairwise data from different modalities should share the same common semantic representation. However, this unified common representation can inherently sacrifice the modality-specific representation of each modality, because the distributions and representations of different modalities are inconsistent. To mitigate this problem, we propose Modality-specific Matrix Factorization Hashing (MsMFH) via alignment, which learns a modality-specific semantic representation for each modality and then aligns the representations using correlation information. Specifically, we factorize the original feature representations into individual latent semantic representations and then align the distributions of these individual latent semantic representations via an orthogonal transformation. We then embed the class labels into hash code learning via the latent semantic space and obtain the hash codes directly through an efficient optimization with a closed-form solution. Extensive experimental results on three public datasets demonstrate that the proposed method outperforms many existing cross-modal hashing methods by up to 3% in terms of mean average precision (mAP).
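
As a rough illustration of the alignment step, the sketch below aligns two sets of latent factors with an orthogonal transformation. It assumes the classical orthogonal Procrustes solution (via SVD), which is one standard way to obtain such a transformation; the abstract does not state that MsMFH uses exactly this formulation. The names `procrustes_rotation`, `v_image`, and `v_text`, the dimensions, and the synthetic data are all hypothetical.

```python
import numpy as np

def procrustes_rotation(source, target):
    """Return the orthogonal R that minimizes ||R @ source - target||_F."""
    # SVD of the cross-covariance between the two latent spaces.
    u, _, vt = np.linalg.svd(target @ source.T)
    return u @ vt

rng = np.random.default_rng(0)
k, n = 8, 200  # hypothetical latent dimension and number of paired samples

# Synthetic stand-ins for the modality-specific latent representations.
v_image = rng.standard_normal((k, n))
q, _ = np.linalg.qr(rng.standard_normal((k, k)))  # hidden orthogonal map
v_text = q @ v_image + 0.01 * rng.standard_normal((k, n))

r = procrustes_rotation(v_image, v_text)
aligned = r @ v_image  # image factors expressed in the text latent space
```

Because `r` is orthogonal, the alignment preserves distances and angles within the image latent space and only rotates or reflects it toward the text latent space.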

Acknowledgements

Weihua Ou is the corresponding author. This work was supported by the National Natural Science Foundation of China (No. 61762021, 61962010, 61976107, 61876093), the Natural Science Foundation of Guizhou Province (Grant No. [2017]1130, [2017]5726-32), the Excellent Young Scientific and Technological Talent of Guizhou Province ([2019]-5670), the National Natural Science Foundation of Jiangsu Province under Grant BK20181393, and in part by the China Scholarship Council under Grant 201908320072.

About this article

Cite this article

Xiong, H., Ou, W., Yan, Z. et al. Modality-specific matrix factorization hashing for cross-modal retrieval. J Ambient Intell Human Comput 13, 5067–5081 (2022). https://doi.org/10.1007/s12652-020-02177-7

DOI: https://doi.org/10.1007/s12652-020-02177-7