Abstract

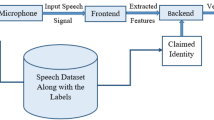

Nowadays, fingerprint and retina scans are the most reliable and widely used biometric authentication systems. Speech-based systems are another emerging biometric approach to authentication; however, they are more prone to spoofing attacks. In such malicious attacks, an impostor tries to present himself or herself as a legitimate user in order to gain an illegitimate advantage. These attacks, created by speech synthesis or voice conversion, therefore pose an enormous threat to the reliable functioning of automatic speaker verification (ASV) authentication systems. The work presented in this paper addresses this problem using deep learning (DL) methods and an ensemble of different neural networks. The first model combines time-distributed dense layers with long short-term memory (LSTM) layers. The other two deep neural networks (DNNs) are based on temporal convolution (TC) and spatial convolution (SC). Finally, an ensemble model comprising these three DNNs is also analysed. All these models are analysed with Mel frequency cepstral coefficients (MFCC), inverse Mel frequency cepstral coefficients (IMFCC) and constant Q cepstral coefficients (CQCC) at the front end, and the proposed ensemble performs best with CQCC features. The proposed work uses the ASVspoof 2015 and ASVspoof 2019 datasets for training and testing, with evaluation sets containing speech synthesis (SS) and voice conversion (VC) attacked utterances. The performance of the proposed system trained on the ASVspoof 2015 dataset degrades on the ASVspoof 2019 evaluation set, whereas the performance of the same system improves when training also includes the ASVspoof 2019 dataset. In addition, a joint ASVspoof 1519 dataset is created to add more variation into a single dataset, and the ensemble trained on this joint dataset performs even better when evaluated on the individual datasets.
This research promotes the development of systems that can cope with completely unknown data at test time. It was further observed that the models can achieve promising results, paving the way for future DL research in this domain.
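The abstract does not say how the three networks are combined into the ensemble. The following is a minimal sketch of one common option, score-level soft voting, assuming each DNN outputs a probability that an utterance is genuine. The fusion rule, the optional weights, the model keys and the 0.5 decision threshold are all illustrative assumptions, not details taken from the paper.

```python
from typing import Dict, Optional, Sequence


def ensemble_score(scores: Sequence[float],
                   weights: Optional[Sequence[float]] = None) -> float:
    """Fuse per-model genuine-speech probabilities by weighted averaging.

    Soft voting is an assumed fusion rule; the abstract does not specify one.
    """
    if len(scores) == 0:
        raise ValueError("need at least one model score")
    if weights is None:
        weights = [1.0] * len(scores)  # unweighted mean by default
    if len(weights) != len(scores):
        raise ValueError("need exactly one weight per model score")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(w * s for w, s in zip(weights, scores)) / total


def classify(scores: Dict[str, float], threshold: float = 0.5) -> str:
    """Label one utterance from its per-model scores.

    The 0.5 threshold is a hypothetical default, not a value from the paper.
    """
    fused = ensemble_score(list(scores.values()))
    return "genuine" if fused >= threshold else "spoof"


# Hypothetical per-utterance outputs of the three DNNs described in the abstract:
# time-distributed dense + LSTM, temporal convolution (TC), spatial convolution (SC).
utterance = {"dense_lstm": 0.82, "tc": 0.40, "sc": 0.71}
print(round(ensemble_score(list(utterance.values())), 4))  # 0.6433
print(classify(utterance))  # genuine
```

Averaging probabilities lets two fairly confident models outvote a less confident one, which is one reason ensembles tend to be more stable than their individual members. A weighted variant could favour the strongest model and feature pairing (for example, the CQCC-based models), with weights tuned on a development set rather than fixed by hand.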

Ethics declarations

Conflict of interest

The authors declare no conflict of interest related to the submitted work.

About this article

Cite this article

Dua, M., Jain, C. & Kumar, S. LSTM and CNN based ensemble approach for spoof detection task in automatic speaker verification systems. J Ambient Intell Human Comput 13, 1985–2000 (2022). https://doi.org/10.1007/s12652-021-02960-0

DOI: https://doi.org/10.1007/s12652-021-02960-0