Abstract

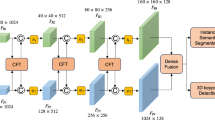

Precise six-degree-of-freedom (6D) object pose estimation is essential for many intelligent applications, such as robotic grasping, virtual reality, and autonomous driving. Because they lack depth information, traditional pose estimators that use only RGB cameras produce biased estimates of 3D rotation and translation. With the widespread adoption of RGB-D cameras, both the RGB image and the depth of the object relative to the camera can be captured directly. Most existing methods simply concatenate these two data sources, which does not fully exploit their complementary relationship. We therefore propose an efficient RGB-D fusion network for 6D pose estimation, called EFN6D, that exploits 2D and 3D features more thoroughly. Instead of using the raw single-channel depth map directly, we encode the depth information as a normal map and as point cloud data. To fuse the surface texture features and the geometric contour features of the object effectively, we feed the RGB image and the normal map into two separate ResNets. In addition, PSP (pyramid scene parsing) modules and skip connections are placed between the two ResNets, which both improves cross-modal fusion and strengthens the network's ability to handle objects at different scales. Finally, the fused features from the two ResNets and the point cloud features are densely fused point by point, further strengthening the fusion of 2D and 3D information at the per-pixel level. Experiments on the LINEMOD and YCB-Video datasets show that EFN6D outperforms state-of-the-art methods by a large margin.
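The abstract does not say how the normal map and point cloud are computed from the depth map, or how the per-point fusion is implemented. The sketch below illustrates the general idea under stated assumptions; it is not the authors' code. It assumes a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, estimates each normal from the cross product of horizontal and vertical finite differences between neighbouring back-projected points (a simple swapped-in estimator; the paper may use another, such as local plane fitting), and stands in for the dense fusion step with a plain per-pixel concatenation. All function names (`backproject`, `normal_map`, `dense_fuse`) are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def backproject(depth: Sequence[Sequence[float]], fx: float, fy: float,
                cx: float, cy: float) -> List[List[Vec3]]:
    """Lift each depth pixel (u, v, z) to a camera-frame 3D point (pinhole model)."""
    return [[((u - cx) * z / fx, (v - cy) * z / fy, z) for u, z in enumerate(row)]
            for v, row in enumerate(depth)]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(a: Vec3) -> Vec3:
    n = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return (0.0, 0.0, 0.0) if n == 0 else (a[0] / n, a[1] / n, a[2] / n)


def normal_map(points: List[List[Vec3]]) -> List[List[Vec3]]:
    """Estimate a unit normal per pixel from neighbouring 3D points.

    Assumption: normals come from the cross product of horizontal and vertical
    finite differences (backward differences on the last row/column), so the
    map must be at least 2x2.
    """
    h, w = len(points), len(points[0])
    normals = []
    for v in range(h):
        row = []
        for u in range(w):
            u2 = u + 1 if u + 1 < w else u - 1
            v2 = v + 1 if v + 1 < h else v - 1
            du = _sub(points[v][u2], points[v][u])
            dv = _sub(points[v2][u], points[v][u])
            n = _normalize(_cross(du, dv))
            # Orient every normal toward the camera, which looks along +z.
            if n[2] > 0:
                n = (-n[0], -n[1], -n[2])
            row.append(n)
        normals.append(row)
    return normals


def dense_fuse(color_feats: Sequence[Sequence[float]],
               geo_feats: Sequence[Sequence[float]]) -> List[List[float]]:
    """Concatenate the i-th 2D feature with the i-th point feature (per-pixel pairing)."""
    if len(color_feats) != len(geo_feats):
        raise ValueError("need exactly one 2D feature per 3D point")
    return [list(c) + list(g) for c, g in zip(color_feats, geo_feats)]


if __name__ == "__main__":
    # A fronto-parallel plane one unit in front of a toy 3x3 camera.
    depth = [[1.0] * 3 for _ in range(3)]
    pts = backproject(depth, fx=1.0, fy=1.0, cx=1.0, cy=1.0)
    nrm = normal_map(pts)
    print(pts[0][0], nrm[1][1])
    # Pair each pixel's normal (a stand-in for a learned 2D feature) with its point.
    fused = dense_fuse([n for r in nrm for n in r], [p for r in pts for p in r])
    print(len(fused), len(fused[0]))
```

Because the normal map and the point cloud are both derived from the same depth pixels, pixel (u, v) in one lines up with point (u, v) in the other; that alignment is what makes point-by-point fusion possible. In the network described above, the 2D side would be learned ResNet features rather than raw normals.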

Acknowledgements

This work is supported by the following projects: National Natural Science Foundation of China (NSFC) Essential project, Nos. U2033218 and 61831018; NSFC No. 61772328.

About this article

Cite this article

Wang, Y., Jiang, X., Fujita, H. et al. EFN6D: an efficient RGB-D fusion network for 6D pose estimation. J Ambient Intell Human Comput 15, 75–88 (2024). https://doi.org/10.1007/s12652-022-03874-1

DOI: https://doi.org/10.1007/s12652-022-03874-1