Abstract

Cognitive concept learning aims to learn concepts from a given clue by simulating human thought processes such as perception, attention and thinking. In recent years, it has attracted much attention from the communities of formal concept analysis, cognitive computing and granular computing. However, classical cognitive concept learning approaches cannot handle incomplete information. Motivated by this problem, this study focuses on cognitive concept learning from incomplete information. Specifically, we put forward a pair of approximate cognitive operators to derive concepts from incomplete information. Then, we propose an approximate cognitive computing system that transforms granular concepts from one state to the next as incomplete information is updated periodically. Moreover, cognitive processes are simulated based on three types of similarities. Finally, numerical experiments are conducted to evaluate the proposed cognitive concept learning methods.


1 Introduction

Cognitive computing is viewed as the development of computer systems modeled on the human brain [37]. It aims to simulate human thought processes, such as thinking, learning, perception and attention, on a computer. Cognitive learning, a useful mathematical tool for realizing cognitive computing, simulates cognitive processes including learning, thinking and remembering. As it has developed, cognitive learning has absorbed many effective methods from psychology, information theory and mathematics [36].

As is well known, a concept is the basic unit of human cognition [39]. Currently, many kinds of concepts [18, 34, 45, 46] have been developed to meet different requirements in the real world. It should be pointed out that these concepts have been applied in many fields such as rough analysis, rule acquisition and knowledge discovery [2, 4, 5, 12, 16, 19, 25, 27, 28, 38]. Moreover, granular computing [21–24, 41, 44, 48, 49] has been incorporated into concept learning to improve the learning efficiency [10, 20, 40].

Cognitive concept learning aims to learn concepts from a given clue by simulating human thought processes. It was first investigated by Zhang and Xu [51], Wang [36] and Yao [47]. In recent years, there has been growing interest in this topic. For instance, Xu et al. [43] discussed how to obtain sufficient and necessary information granules from an arbitrary information granule. Li et al. [14] put forward three cognitive concept learning methods from the perspectives of philosophy and cognitive psychology, and they [11] also designed a cognitive concept learning framework for big data. Moreover, the theory of three-way decisions has been incorporated into cognitive concept learning [15]. In addition, Aswani Kumar et al. [3] used formal concept analysis to explore the cognitive functionalities of bidirectional associative memory. Very recently, Xu and Li [42] reconsidered cognitive concept learning in a fuzzy environment.

However, as research on cognitive concept learning has deepened, the classical methods have turned out to be too restrictive for many applications. For example, they cannot handle incomplete information, which is often encountered in the real world [13]. The main theme of this paper is to address this issue. Specifically, a pair of approximate cognitive operators is proposed to learn concepts from incomplete information. Then, an approximate cognitive computing system is established to transform granular concepts from one state to the next as incomplete information is updated periodically. In addition, cognitive processes are simulated based on three types of similarities. Finally, we conduct numerical experiments to evaluate the proposed cognitive concept learning methods.

The remainder of this paper is organized as follows. Section 2 analyzes the cognitive mechanism of learning approximate concepts from incomplete information. Section 3 establishes an approximate cognitive computing system. Section 4 simulates cognitive processes based on similarity. Section 5 reports numerical experiments that assess the proposed concept learning methods. The paper is concluded in Sect. 6 with a brief summary and an outlook for further research.

2 Cognitive mechanism of learning approximate concepts from incomplete information

We begin this section with an example.

Example 1

Table 1 depicts a dataset of four patients who suffer from severe acute respiratory syndrome (SARS) [14]. In the table, Fever, Cough, Headache and Difficulty breathing are four symptoms observed in these patients.

As time goes on, however, more SARS patients will appear, and new symptoms such as Diarrhea, Muscle aches, and Nausea and vomiting may be observed in them. Suppose that the updated information is shown in Table 2.

From Table 2, note that the values of patients 1, 2, 3 and 4 under the new symptoms (Diarrhea, Muscle aches, and Nausea and vomiting) are missing. This is not surprising, because the earlier patients may have left and could no longer be contacted once new technologies made it possible to test these symptoms.

Unfortunately, for such a SARS dataset with incomplete information, the classical cognitive concept learning methods are no longer applicable, since they do not allow missing values. Therefore, it is necessary to develop new cognitive concept learning methods that can handle incomplete information.

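To facilitate our subsequent discussions, let U be a nonempty object set and A be an attribute set. Moreover, \(2^{U}\) and \(2^{A}\) denote the power sets of U and A, respectively. Then, a partial order relation is defined on the Cartesian product \(2^{A}\times 2^{A}\) as

\[(B_1,C_1)\le (B_2,C_2)\iff B_1\subseteq B_2\ \text{and}\ C_1\subseteq C_2,\]

and the intersection and union are respectively given by

\[(B_1,C_1)\cap (B_2,C_2)=(B_1\cap B_2,\,C_1\cap C_2),\qquad (B_1,C_1)\cup (B_2,C_2)=(B_1\cup B_2,\,C_1\cup C_2).\]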
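A minimal Python sketch of the order, intersection and union on \(2^{A}\times 2^{A}\), assuming they act componentwise (on the B parts and on the C parts separately). Pairs are modelled as tuples of frozensets; the helper names `pair`, `leq`, `meet` and `join` are our own illustrative choices.

```python
from typing import FrozenSet, Tuple

# A pair (B, C) of attribute sets; assumption: order and operations are componentwise.
Pair = Tuple[FrozenSet[str], FrozenSet[str]]


def pair(b: str, c: str) -> Pair:
    """Build a pair from compact strings, e.g. pair("ac", "ace") for (ac, ace)."""
    return (frozenset(b), frozenset(c))


def leq(p: Pair, q: Pair) -> bool:
    """(B1, C1) <= (B2, C2) iff B1 is a subset of B2 and C1 is a subset of C2."""
    return p[0] <= q[0] and p[1] <= q[1]


def meet(p: Pair, q: Pair) -> Pair:
    """Componentwise intersection of two pairs."""
    return (p[0] & q[0], p[1] & q[1])


def join(p: Pair, q: Pair) -> Pair:
    """Componentwise union of two pairs."""
    return (p[0] | q[0], p[1] | q[1])


p, q = pair("ac", "ace"), pair("c", "ce")
print(leq(q, p), leq(p, q))              # True False
print(meet(p, q) == q, join(p, q) == p)  # True True
```

The order is only partial: for instance, (ab, ab) and (ac, ac) are incomparable.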

Definition 1

Let \(f: 2^{U}\rightarrow 2^{A}\times 2^{A}\) and \(g: 2^{A}\times 2^{A}\rightarrow 2^{U}\) be two mappings. For \(X_{1},X_{2}\subseteq U\) and \((B,C)\in 2^{A}\times 2^{A}\), if the following properties hold:

(i) \(X_{1}\subseteq X_{2}\Rightarrow f(X_{1})\ge f(X_{2})\),

(ii) \(f(X_{1}\cup X_{2})\ge f(X_{1})\cap f(X_{2})\),

(iii) \(g(B,C)=\{x\in U\mid (B,C)\le f(\{x\})\}\),

then f and g are called approximate-concept-forming cognitive operators (or simply approximate cognitive operators).

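By (i) of Definition 1, \(f(X_1\cup X_2)\le f(X_1)\) and \(f(X_1\cup X_2)\le f(X_2)\), i.e., \(f(X_1\cup X_2)\le f(X_1)\cap f(X_2)\); together with (ii), this gives \(f(X_1\cup X_2)=f(X_1)\cap f(X_2)\). Hence, for any nonempty finite \(X\subseteq U\),

\[f(X)=\bigcap_{x\in X}f(\{x\}). \tag{3}\]

Moreover, Definition 1 yields the following result.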

Theorem 1

Let f and g be approximate cognitive operators. Then, they form a Galois connection between \(2^U\) and \(2^A\times 2^A\).

Proof

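From (iii) of Definition 1, any x satisfying \((B_2,C_2)\le f(\{x\})\) also satisfies \((B_1,C_1)\le f(\{x\})\) whenever \((B_1,C_1)\le (B_2,C_2)\). Hence, it is easy to get

\[(B_1,C_1)\le (B_2,C_2)\Rightarrow g(B_1,C_1)\supseteq g(B_2,C_2). \tag{4}\]

Note that \(g(f(X))=\{x\in U\mid f(X)\le f(\{x\})\}\), and any \(x\in X\) satisfies \(f(X)\le f(\{x\})\) based on (i) of Definition 1. So, we obtain

\[X\subseteq g(f(X)). \tag{5}\]

Let \(X_0=g(B,C)\) be the set of objects x satisfying \((B,C)\le f(\{x\})\). Then, by (iii) of Definition 1 and Eq. (3), it follows

\[f(g(B,C))=f(X_0)=\bigcap_{x\in X_0}f(\{x\})\ge (B,C). \tag{6}\]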

Combining (i) of Definition 1 with Eqs. (4), (5) and (6), we conclude that f and g form a Galois connection between \(2^U\) and \(2^A\times 2^A\). \(\Box\)
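Theorem 1 can be sanity-checked by brute force on a small example. The sketch below uses hypothetical values for \(f(\{x\})\), extends them to \(f(X)\) by componentwise intersection (with \(f(\emptyset)=(A,A)\), an assumption that makes (i) and (ii) hold), defines g by (iii), and then checks the Galois condition \(X\subseteq g(B,C)\iff (B,C)\le f(X)\) for every X and every pair. It illustrates the theorem on toy data and is not a proof.

```python
from itertools import chain, combinations

A = frozenset("abc")
U = (1, 2, 3)
# Hypothetical values of f({x}) for each object x.
F_SINGLE = {
    1: (frozenset("ab"), frozenset("abc")),
    2: (frozenset("b"), frozenset("bc")),
    3: (frozenset(), frozenset("ac")),
}


def subsets(items):
    """All subsets of a finite collection, as frozensets."""
    items = list(items)
    return [frozenset(s) for s in chain.from_iterable(
        combinations(items, r) for r in range(len(items) + 1))]


def leq(p, q):
    """Componentwise order on pairs of attribute sets."""
    return p[0] <= q[0] and p[1] <= q[1]


def f(X):
    """Componentwise intersection of f({x}) over x in X; f(empty set) = (A, A)."""
    B, C = A, A
    for x in X:
        B, C = B & F_SINGLE[x][0], C & F_SINGLE[x][1]
    return (B, C)


def g(B, C):
    """Property (iii) of Definition 1: objects x with (B, C) <= f({x})."""
    return frozenset(x for x in U if leq((B, C), F_SINGLE[x]))


def definition1_holds():
    """Check properties (i) and (ii) of Definition 1 on all pairs of object sets."""
    objs = subsets(U)
    for X1 in objs:
        for X2 in objs:
            if X1 <= X2 and not leq(f(X2), f(X1)):
                return False
            lower = (f(X1)[0] & f(X2)[0], f(X1)[1] & f(X2)[1])
            if not leq(lower, f(X1 | X2)):
                return False
    return True


def galois_holds():
    """Check X <= g(B, C)  <=>  (B, C) <= f(X) for all X and all pairs (B, C)."""
    pairs = [(B, C) for B in subsets(A) for C in subsets(A)]
    return all((X <= g(B, C)) == leq((B, C), f(X))
               for X in subsets(U) for (B, C) in pairs)


print(definition1_holds(), galois_holds())  # True True
```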

Definition 2

Let f and g be approximate cognitive operators. For \(X\subseteq U\) and \((B,C)\in 2^{A}\times 2^{A}\), if \(f(X)=(B,C)\) and \(g(B,C)=X\), then (X, (B, C)) is called a concept of the approximate cognitive operators f and g (or simply approximate cognitive concept). In this case, X and (B, C) are referred to as the extent and intent of the approximate cognitive concept (X, (B, C)), respectively.

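Moreover, the infimum (\(\bigwedge\)) and supremum (\(\bigvee\)) of a set of approximate cognitive concepts \(\{(X_t,(B_t,C_t))\mid t\in T\}\) are respectively given by

\[\begin{aligned}\bigwedge_{t\in T}(X_t,(B_t,C_t))&=\Big(\bigcap_{t\in T}X_t,\ fg\Big(\bigcup_{t\in T}(B_t,C_t)\Big)\Big),\\ \bigvee_{t\in T}(X_t,(B_t,C_t))&=\Big(gf\Big(\bigcup_{t\in T}X_t\Big),\ \bigcap_{t\in T}(B_t,C_t)\Big),\end{aligned}\tag{7}\]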

where \(fg(\cdot )\) and \(gf(\cdot )\) denote the composite mappings \(f(g(\cdot ))\) and \(g(f(\cdot ))\), respectively.

In what follows, we show how to derive approximate cognitive concepts from incomplete information. Before embarking on this issue, we need to construct a special pair of approximate cognitive operators f and g.

Let I be a “three-valued” mapping from \(U\times A\) to \(\{1,0,?\}\) for describing incomplete information. Specifically, \(I(x,a)=1\) indicates that the object x possesses the attribute a, \(I(x,a)=0\) indicates that the object x does not possess the attribute a, and \(I(x,a)=?\) indicates that it is unknown whether the object x possesses the attribute a. Then, for any \(X\subseteq U\), two mappings \(\pi (\cdot )\) and \(\theta (\cdot )\) are respectively defined as

\[\pi(X)=\{a\in A\mid \forall x\in X,\ I(x,a)=1\},\qquad \theta(X)=\{a\in A\mid \forall x\in X,\ I(x,a)\in\{1,?\}\}. \tag{8}\]

In a similar manner, for any \(B\subseteq A\), we define

\[\pi(B)=\{x\in U\mid \forall a\in B,\ I(x,a)=1\},\qquad \theta(B)=\{x\in U\mid \forall a\in B,\ I(x,a)\in\{1,?\}\}. \tag{9}\]

Furthermore, \(\pi (\cdot )\) and \(\theta (\cdot )\) are used to construct a special pair of approximate cognitive operators f and g:

\[f(X)=(\pi(X),\theta(X)),\qquad g(B,C)=\pi(B)\cap\theta(C). \tag{10}\]
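The following sketch implements π and θ on object sets and on attribute sets, and the operators f and g built from them, for a hypothetical three-valued table (not the paper's Table 2, whose entries are not reproduced here). It assumes that π collects what is certainly possessed (value 1 throughout) and θ what is possibly possessed (value 1 or ?), with \(f(X)=(\pi(X),\theta(X))\) and \(g(B,C)=\pi(B)\cap\theta(C)\), which agrees with (iii) of Definition 1. The pair ({2,4}, (ac, ace)) below plays the same role as (45, (ac, ace)) in Example 2, but on made-up data.

```python
UNKNOWN = "?"

# Hypothetical three-valued table (not the paper's data): object -> {attribute: 1, 0 or "?"}.
CTX = {
    1: {"a": 1, "b": 1, "c": 0, "e": UNKNOWN},
    2: {"a": 1, "b": 0, "c": 1, "e": UNKNOWN},
    3: {"a": 0, "b": 1, "c": 1, "e": 1},
    4: {"a": 1, "b": 0, "c": 1, "e": 1},
}
OBJS = frozenset(CTX)
ATTRS = frozenset("abce")


def certain(v):
    return v == 1


def possible(v):
    return v == 1 or v == UNKNOWN


def pi_obj(X):
    """Attributes that every object in X certainly possesses."""
    return frozenset(a for a in ATTRS if all(certain(CTX[x][a]) for x in X))


def theta_obj(X):
    """Attributes that every object in X possibly possesses (value 1 or ?)."""
    return frozenset(a for a in ATTRS if all(possible(CTX[x][a]) for x in X))


def pi_attr(B):
    """Objects that certainly possess every attribute in B."""
    return frozenset(x for x in OBJS if all(certain(CTX[x][a]) for a in B))


def theta_attr(C):
    """Objects that possibly possess every attribute in C."""
    return frozenset(x for x in OBJS if all(possible(CTX[x][a]) for a in C))


def f(X):
    return (pi_obj(X), theta_obj(X))


def g(B, C):
    return pi_attr(B) & theta_attr(C)


def is_concept(X, B, C):
    """Definition 2: f(X) = (B, C) and g(B, C) = X."""
    X, B, C = frozenset(X), frozenset(B), frozenset(C)
    return f(X) == (B, C) and g(B, C) == X


print(is_concept({2, 4}, "ac", "ace"))  # True
print(is_concept({2}, "ac", "ace"))     # False, since g(f({2})) = {2, 4}
```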

Example 2

Continuing Example 1, for convenience we use a, b, c, d, e, f and g in Table 2 to represent the symptoms Fever, Cough, Headache, Difficulty breathing, Diarrhea, Muscle aches, and Nausea and vomiting, respectively. Moreover, “Yes” is replaced by 1, “No” by 0, and the question mark “?” is kept. Then, combining Eqs. (8)–(10) with Definition 2, we can verify that (45, (ac, ace)) is an approximate cognitive concept, where 45, ac and ace are the abbreviations of \(\{4,5\}\), \(\{a,c\}\) and \(\{a,c,e\}\), respectively.

3 An approximate cognitive computing system

In the real world, objects and attributes are updated dynamically, often together with incomplete information. Hence, the approximate cognitive concepts obtained so far need to be updated from time to time. To address this problem, an approximate cognitive computing system is proposed in this section.

3.1 Information granules and granular concepts

We first put forward the notion of information granules of approximate cognitive operators f and g. For brevity, hereinafter, \(f(\{x\})\) and \(\pi (\{a\})\) are rewritten as f(x) and \(\pi (a)\), and \(\pi (\pi (\cdot ))\), \(\theta (\pi (\cdot ))\) and \(f(\pi (\cdot ))\) are abbreviated as \(\pi \pi (\cdot )\), \(\theta \pi (\cdot )\) and \(f\pi (\cdot )\), respectively.

Definition 3

Let f and g be approximate cognitive operators. Then, \(f^{G}=\{\{x\}\mapsto f(x)|x\in U\}\) and \(g^{G}=\{f\pi (a)\mapsto gf\pi (a)|a\in A\}\) are called information granules of f and g, respectively.

The main purpose of introducing information granules is to simplify the description of approximate cognitive operators: the information granules carry the same information as the operators f and g themselves. See the following theorem for details.

Theorem 2

Let \(f^{G}\) and \(g^{G}\) be the information granules of approximate cognitive operators f and g. For \(X\subseteq U\) and \((B,C)\in 2^A\times 2^A\) with \(B=\bigcup \nolimits _{a\in B}\pi \pi (a)\) and \(C=\bigcup \nolimits _{a\in B}\theta \pi (a)\), we have

\[f(X)=\bigcap_{x\in X}f^{G}(x),\qquad g(B,C)=\bigcap_{a\in B}g^{G}f\pi(a). \tag{11}\]

Proof

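By Eq. (3), it follows \(f(X)=\bigcap \limits _{x\in X}f^{G}(x)\) directly. Besides, based on Eqs. (8), (9) and (10), we get

\[g(B,C)=\pi(B)\cap\theta(C)=\pi\Big(\bigcup_{a\in B}\pi\pi(a)\Big)\cap\theta\Big(\bigcup_{a\in B}\theta\pi(a)\Big)=\bigcap_{a\in B}\big(\pi\pi\pi(a)\cap\theta\theta\pi(a)\big)=\bigcap_{a\in B}gf\pi(a)=\bigcap_{a\in B}g^{G}f\pi(a).\]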

\(\square\)
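As a randomized sanity check (not a proof), the sketch below tests both identities of Theorem 2 on randomly generated three-valued tables. It assumes the same reading of π, θ, f and g as certainly/possibly possessed attributes and objects; the helper names and the random data are hypothetical. The second identity is only tested for attribute sets B with \(B=\bigcup_{a\in B}\pi\pi(a)\), as the theorem requires.

```python
import random
from functools import reduce
from itertools import combinations

UNKNOWN = "?"


def random_context(n_objects, attrs, seed):
    """Hypothetical random three-valued table: object -> {attribute: 1, 0 or '?'}."""
    rng = random.Random(seed)
    return {x: {a: rng.choice([1, 0, UNKNOWN]) for a in attrs} for x in range(n_objects)}


def operators(ctx, attrs):
    """Return pi/theta on objects, pi on attributes, and f, g for one table."""
    objs = frozenset(ctx)

    def pi_o(X):
        return frozenset(a for a in attrs if all(ctx[x][a] == 1 for x in X))

    def th_o(X):
        return frozenset(a for a in attrs if all(ctx[x][a] in (1, UNKNOWN) for x in X))

    def pi_a(B):
        return frozenset(x for x in objs if all(ctx[x][a] == 1 for a in B))

    def th_a(C):
        return frozenset(x for x in objs if all(ctx[x][a] in (1, UNKNOWN) for a in C))

    def f(X):
        return (pi_o(X), th_o(X))

    def g(B, C):
        return pi_a(B) & th_a(C)

    return pi_o, th_o, pi_a, f, g


def theorem2_holds(ctx, attrs):
    pi_o, th_o, pi_a, f, g = operators(ctx, attrs)
    objs = sorted(ctx)
    # First identity: f(X) is the componentwise intersection of the granules f({x}).
    for r in range(1, len(objs) + 1):
        for X in combinations(objs, r):
            grans = [f({x}) for x in X]
            meet = (reduce(frozenset.__and__, (p[0] for p in grans)),
                    reduce(frozenset.__and__, (p[1] for p in grans)))
            if f(X) != meet:
                return False
    # Second identity: g(B, C) is the intersection of g^G f pi(a) over a in B.
    for r in range(1, len(attrs) + 1):
        for B in map(frozenset, combinations(sorted(attrs), r)):
            if frozenset().union(*(pi_o(pi_a({a})) for a in B)) != B:
                continue  # Theorem 2 assumes B is the union of pi pi(a), a in B
            C = frozenset().union(*(th_o(pi_a({a})) for a in B))
            rhs = reduce(frozenset.__and__, (g(*f(pi_a({a}))) for a in B))
            if g(B, C) != rhs:
                return False
    return True


ATTRS = frozenset("abcd")
print(all(theorem2_holds(random_context(6, sorted(ATTRS), s), ATTRS) for s in range(20)))  # True
```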

Theorem 3

Let f and g be approximate cognitive operators. Then, for any \(x\in U\) and \(a\in A\), both \(\left( gf^G(x),f^G(x)\right)\) and \(\left( g^Gf\pi (a),f\pi (a)\right)\) are approximate cognitive concepts.

Proof

The proof is obvious from Definition 2. \(\Box\)

Definition 4

Let f and g be approximate cognitive operators. For any \(x\in U\) and \(a\in A\), we say that \(\left( gf^G(x),f^G(x)\right)\) and \(\left( g^Gf\pi (a),f\pi (a)\right)\) are granular concepts.

It follows from Definition 3 and Theorem 2 that granular concepts can easily be computed from the information granules of f and g.

Theorem 4

Let f and g be approximate cognitive operators, and (X, (B, C)) be an approximate cognitive concept. Then,

-

(i)

\((X,(B,C))=\bigvee \limits _{x\in X}\left( gf^G(x),f^G(x)\right)\);

-

(ii)

\((X,(B,C))=\bigwedge \limits _{a\in B}\left( g^Gf\pi (a),f\pi (a)\right)\) if for any \(c\in C\backslash B\), there exists \(b\in B\) such that \(\pi (b)\subseteq \theta (c)\).

Proof

Note that \((B,C)=f(X)=f\left( \bigcup \nolimits _{x\in X}\{x\}\right) =\bigcap \limits _{x\in X}f^G(x)\). Based on Eq. (7), we know that (X, (B, C)) and \(\bigvee \limits _{x\in X}\left( gf^G(x),f^G(x)\right)\) have the same intent. As a result, they are the same approximate cognitive concept. That is, (i) is proved. The remainder is to prove (ii).

Firstly, we show \(B=\bigcup \nolimits _{a\in B}\pi \pi (a)\). Note that \(B\subseteq \bigcup \nolimits _{a\in B}\pi \pi (a)\) due to \(a\in \pi \pi (a)\). For any \(b\in \bigcup \nolimits _{a\in B}\pi \pi (a)\), there exists \(a_0\in B\) such that \(b\in \pi \pi (a_0)\). Since \(a_0\in B\Rightarrow \pi (a_0)\supseteq \pi (B)\supseteq X\Rightarrow \pi \pi (a_0)\subseteq \pi (X)=B\), it follows \(b\in B\), yielding \(\bigcup \nolimits _{a\in B}\pi \pi (a)\subseteq B\). To sum up, \(B=\bigcup \nolimits _{a\in B}\pi \pi (a)\) is true.

Secondly, we prove \(C=\bigcup \nolimits _{a\in B}\theta \pi (a)\). On one hand, for any \(c\in C\), if \(c\in B\), then \(c\in \bigcup \nolimits _{a\in B}\theta \pi (a)\) due to \(a\in \theta \pi (a)\); otherwise, \(c\in C\backslash B\). In this case, based on the assumption that there exists \(b\in B\) such that \(\pi (b)\subseteq \theta (c)\), we have \(c\in \theta \pi (b)\). That is, \(c\in \bigcup \nolimits _{a\in B}\theta \pi (a)\) is also true. As a result, \(C\subseteq \bigcup \nolimits _{a\in B}\theta \pi (a)\). On the other hand, for any \(c\in \bigcup \nolimits _{a\in B}\theta \pi (a)\), there exists \(a_0\in B\) such that \(c\in \theta \pi (a_0)\), i.e., \(\pi (a_0)\subseteq \theta (c)\). Since \(X\subseteq \pi (B)\subseteq \pi (a_0)\), it follows \(X\subseteq \theta (c)\). Note that \(c\in \theta \theta (c)\subseteq \theta (X)=C\). Then, we conclude \(\bigcup \nolimits _{a\in B}\theta \pi (a)\subseteq C\). In summary, \(C=\bigcup \nolimits _{a\in B}\theta \pi (a)\) is proved.

According to Theorem 2, we obtain \(X=g(B,C)=\bigcap \limits _{a\in B}g^Gf\pi (a)\). Then, we know from Eq. (7) that (X, (B, C)) and \(\bigwedge \limits _{a\in B}\left( g^Gf\pi (a),f\pi (a)\right)\) have the same extent. As a result, they are the same approximate cognitive concept. Consequently, (ii) is true. \(\Box\)

Theorem 4 indicates that any approximate cognitive concept can be induced by granular concepts. So, among all approximate cognitive concepts, granular concepts are the basic information granules. For convenience, we denote the set of all granular concepts by

\[G_{fg}=\big\{\big(gf^{G}(x),f^{G}(x)\big)\mid x\in U\big\}\cup\big\{\big(g^{G}f\pi(a),f\pi(a)\big)\mid a\in A\big\}. \tag{12}\]

In what follows, we discuss how to compute \(G_{fg}\) when objects and attributes are updated in batches.
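A minimal sketch of how the set of granular concepts could be enumerated directly from a three-valued table, assuming the certain/possible reading of π and θ and a hypothetical toy table (not the paper's data). Each object contributes \((gf^{G}(x),f^{G}(x))\) and each attribute contributes \((g^{G}f\pi(a),f\pi(a))\); duplicates collapse because the result is a set.

```python
UNKNOWN = "?"

# Hypothetical three-valued table (not the paper's data).
CTX = {
    1: {"a": 1, "b": 1, "c": 0, "e": UNKNOWN},
    2: {"a": 1, "b": 0, "c": 1, "e": UNKNOWN},
    3: {"a": 0, "b": 1, "c": 1, "e": 1},
    4: {"a": 1, "b": 0, "c": 1, "e": 1},
}
OBJS = frozenset(CTX)
ATTRS = frozenset("abce")


def pi_obj(X):
    return frozenset(a for a in ATTRS if all(CTX[x][a] == 1 for x in X))


def theta_obj(X):
    return frozenset(a for a in ATTRS if all(CTX[x][a] in (1, UNKNOWN) for x in X))


def pi_attr(B):
    return frozenset(x for x in OBJS if all(CTX[x][a] == 1 for a in B))


def theta_attr(C):
    return frozenset(x for x in OBJS if all(CTX[x][a] in (1, UNKNOWN) for a in C))


def f(X):
    return (pi_obj(X), theta_obj(X))


def g(B, C):
    return pi_attr(B) & theta_attr(C)


def granular_concepts():
    """Object-induced (g f^G(x), f^G(x)) plus attribute-induced (g^G f pi(a), f pi(a))."""
    G = set()
    for x in OBJS:
        intent = f({x})                 # f^G(x)
        G.add((g(*intent), intent))
    for a in ATTRS:
        intent = f(pi_attr({a}))        # f pi(a)
        G.add((g(*intent), intent))     # g^G f pi(a) = g(f pi(a))
    return G


G = granular_concepts()
print(len(G))  # 8
```

Each element of the result is an approximate cognitive concept, in line with Theorem 3.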

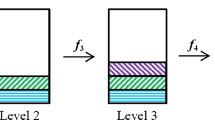

3.2 A cognitive computing system for learning granular concepts

To facilitate our subsequent discussions, n object sets \(U_1\subseteq U_2\subseteq \cdots \subseteq U_n\) are denoted as \(\{U_t\}^{\uparrow }\), and n attribute sets \(A_1\subseteq A_2\subseteq \cdots \subseteq A_{n}\) are denoted as \(\{A_t\}^{\uparrow }\). Let \(\Delta U_{i-1}=U_i-U_{i-1}\) and \(\Delta A_{i-1}=A_i-A_{i-1}\).

To express and process different types of incomplete information, let \(I_{i-1}\) be a “three-valued” mapping from \(U_{i-1}\times A_{i-1}\) to \(\{1,0,?\}\), \(I_{\Delta U_{i-1}}\) be a “three-valued” mapping from \(\Delta U_{i-1}\times A_{i-1}\) to \(\{1,0,?\}\), \(I_{\Delta A_{i-1}}\) be a “three-valued” mapping from \(U_i\times \Delta A_{i-1}\) to \(\{1,0,?\}\), and \(I_i\) be a “three-valued” mapping from \(U_i\times A_i\) to \(\{1,0,?\}\).

Then, we define sixteen mappings

Furthermore, four pairs of approximate cognitive operators are defined as

where

In fact, the above approximate cognitive operators: (i) \(f_{i-1},g_{i-1}\), (ii) \(f_{\Delta U_{i-1}},\) \(g_{\Delta U_{i-1}}\), (iii) \(f_{\Delta A_{i-1}},g_{\Delta A_{i-1}}\) and (iv) \(f_i,g_i\) are formed by adding objects and attributes in sequence. In order to establish a cognitive computing system for updating granular concepts, we need to further clarify their inner relationships.

Theorem 5

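Let (i) \(f_{i-1},g_{i-1}\), (ii) \(f_{\Delta U_{i-1}},g_{\Delta U_{i-1}}\), (iii) \(f_{\Delta A_{i-1}},g_{\Delta A_{i-1}}\) and (iv) \(f_i,g_i\) be the approximate cognitive operators specified in Eqs. (13)–(16). Then, we have

\[f_i^{G}(x)=\begin{cases} f_{i-1}^{G}(x)\cup f_{\Delta A_{i-1}}^{G}(x), & x\in U_{i-1},\\ f_{\Delta U_{i-1}}^{G}(x)\cup f_{\Delta A_{i-1}}^{G}(x), & x\in \Delta U_{i-1}.\end{cases} \tag{17}\]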

Proof

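By Eq. (16), it follows \(f_i^G(x)=(\pi _i(x),\theta _i(x))\). If \(x\in U_{i-1}\), then, since \(A_i=A_{i-1}\cup \Delta A_{i-1}\), we obtain

\[\pi_i(x)=\pi_{i-1}(x)\cup\pi_{\Delta A_{i-1}}(x),\qquad \theta_i(x)=\theta_{i-1}(x)\cup\theta_{\Delta A_{i-1}}(x).\]

Therefore, we get

\[f_i^{G}(x)=\big(\pi_{i-1}(x)\cup\pi_{\Delta A_{i-1}}(x),\ \theta_{i-1}(x)\cup\theta_{\Delta A_{i-1}}(x)\big)=f_{i-1}^{G}(x)\cup f_{\Delta A_{i-1}}^{G}(x).\]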

The conclusion \(f_i^G(x)=f_{\Delta U_{i-1}}^G(x)\cup f_{\Delta A_{i-1}}^G(x)\) can be proved in a similar manner when \(x\in \Delta U_{i-1}\). \(\Box\)
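The merging step of Theorem 5 can be illustrated on single objects. In the sketch below (hypothetical rows and attribute names), the granule of an object over the old attributes (playing the role of \(f_{i-1}^G(x)\) or \(f_{\Delta U_{i-1}}^G(x)\)) is combined by componentwise union with its granule over the new attributes (\(f_{\Delta A_{i-1}}^G(x)\)), and the result is compared with the granule computed over all attributes at once.

```python
UNKNOWN = "?"


def granule(row, attrs):
    """Object granule (pi(x), theta(x)) of one object, restricted to the attributes attrs."""
    return (frozenset(a for a in attrs if row[a] == 1),
            frozenset(a for a in attrs if row[a] in (1, UNKNOWN)))


def union(p, q):
    """Componentwise union of two pairs of attribute sets."""
    return (p[0] | q[0], p[1] | q[1])


OLD_ATTRS, NEW_ATTRS = "abcd", "efg"  # hypothetical A_{i-1} and Delta A_{i-1}
# Hypothetical rows: an earlier object whose new attributes could not be observed,
# and a newly added object observed on all attributes.
old_obj = {"a": 1, "b": 0, "c": 1, "d": UNKNOWN, "e": UNKNOWN, "f": UNKNOWN, "g": UNKNOWN}
new_obj = {"a": 0, "b": 1, "c": UNKNOWN, "d": 1, "e": 1, "f": 0, "g": UNKNOWN}

for row in (old_obj, new_obj):
    incremental = union(granule(row, OLD_ATTRS), granule(row, NEW_ATTRS))
    assert incremental == granule(row, OLD_ATTRS + NEW_ATTRS)

print(sorted(granule(old_obj, NEW_ATTRS)[1]))  # ['e', 'f', 'g']
```

For the earlier object, the new attributes are all unknown, so they enter only the possible part θ, mirroring the SARS situation of Example 1.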

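Theorem 5 shows how to obtain \(f_i^G(x)\) (\(x\in U_i\)). In fact, they can further be used to compute

\[g_if_i^{G}(x)=\{y\in U_i\mid f_i^{G}(x)\le f_i^{G}(y)\}. \tag{18}\]

That is, we find an approach to generate the granular concepts \(\left( g_if_i^G(x),f_i^G(x)\right)\). At the same time, \(f_i^G(x)\) (\(x\in U_i\)) can be used to compute

\[f_i\pi_i(a)=\bigcap_{x\in \pi_i(a)}f_i^{G}(x),\quad \text{where } \pi_i(a)=\{x\in U_i\mid a\in \pi_i(x)\}, \tag{19}\]

and

\[g_i^{G}f_i\pi_i(a)=\{y\in U_i\mid f_i\pi_i(a)\le f_i^{G}(y)\}. \tag{20}\]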

That is to say, we also find an approach to generate the granular concepts \(\left( g_i^Gf_i\pi _i(a),f_i\pi _i(a)\right)\) (\(a\in A_i\)). To sum up, we have the following theorem.
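The point of the passage above is that both kinds of granular concepts can be rebuilt from the object granules alone. A minimal sketch, assuming hypothetical granule values and using (iii) of Definition 1 for extents and componentwise intersection for \(f\pi(a)\) (with the top element (A, A) when no object certainly has a):

```python
from functools import reduce

# Hypothetical object granules f^G(x) = (pi(x), theta(x)) over the attributes a, b, c, e.
ATTRS = frozenset("abce")
GRANULES = {
    1: (frozenset("ab"), frozenset("abe")),
    2: (frozenset("ac"), frozenset("ace")),
    3: (frozenset("bce"), frozenset("bce")),
    4: (frozenset("ace"), frozenset("ace")),
}


def leq(p, q):
    return p[0] <= q[0] and p[1] <= q[1]


def extent(intent):
    """g(B, C) via (iii): all objects whose granule lies above (B, C)."""
    return frozenset(y for y, gy in GRANULES.items() if leq(intent, gy))


def meet_of(objects):
    """Componentwise intersection of the granules of `objects`; (ATTRS, ATTRS) if empty."""
    return reduce(lambda p, q: (p[0] & q[0], p[1] & q[1]),
                  (GRANULES[x] for x in objects), (ATTRS, ATTRS))


def granular_concepts_from_granules():
    G = {(extent(gx), gx) for gx in GRANULES.values()}          # (g f^G(x), f^G(x))
    for a in ATTRS:
        pi_a = [x for x, (P, _) in GRANULES.items() if a in P]   # objects certainly having a
        intent = meet_of(pi_a)                                   # f pi(a)
        G.add((extent(intent), intent))                          # (g^G f pi(a), f pi(a))
    return G


G = granular_concepts_from_granules()
print(len(G))  # 8
```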

Theorem 6

Let (i) \(f_{i-1},g_{i-1}\), (ii) \(f_{\Delta U_{i-1}},g_{\Delta U_{i-1}}\), (iii) \(f_{\Delta A_{i-1}},g_{\Delta A_{i-1}}\) and (iv) \(f_i,g_i\) be the approximate cognitive operators specified in Eqs. (13)–(16). Then, the granular concepts \(G_{f_ig_i}\) of \(f_i\) and \(g_i\) can be computed by combining \(G_{f_{i-1}g_{i-1}}\) with \(f^G_{\Delta U_{i-1}}\) and \(f^G_{\Delta A_{i-1}}\).

Proof

It is immediate from Eqs. (17), (18), (19) and (20). \(\Box\)

Definition 5

Let (i) \(f_{i-1},g_{i-1}\), (ii) \(f_{\Delta U_{i-1}},g_{\Delta U_{i-1}}\), (iii) \(f_{\Delta A_{i-1}},g_{\Delta A_{i-1}}\) and (iv) \(f_i,g_i\) be the approximate cognitive operators specified in Eqs. (13)–(16). We say that \(\mathcal {AS}_{f_ig_i}=(G_{f_{i-1}g_{i-1}},f^G_{\Delta U_{i-1}},f^G_{\Delta A_{i-1}})\) is an approximate cognitive computing state. Furthermore, \(\mathcal {AS}=\bigcup \nolimits _{i=2}^{n}\{\mathcal {AS}_{f_ig_i}\}\) is said to be an approximate cognitive computing system.

It can be seen from Definition 5 that an approximate cognitive computing system consists of a series of approximate cognitive computing states, each of which corresponds to one batch update of objects and attributes. This agrees with common sense. Since the information is updated dynamically, the granular concepts of the whole system cannot be obtained at once; we only know how to pass from one state to the next. Hence, we adopt a recursive strategy to compute the granular concepts \(G_{f_1g_1}\), \(G_{f_2g_2}\), \(\cdots\), \(G_{f_ng_n}\) in sequence.

Based on the above discussion, we are ready to propose a procedure (called Algorithm 1) to compute the granular concepts \(G_{f_ng_n}\) of an approximate cognitive computing system \(\mathcal {AS}=\bigcup \nolimits _{i=2}^{n}\{\mathcal {AS}_{f_ig_i}\}\).

The correctness of Algorithm 1 is guaranteed by Eqs. (17), (18), (19) and (20). Its time complexity is analyzed as follows. Based on Eq. (12), running Step 1 takes \(O((|U_1|+|A_1|)|U_1||A_1|)\). Similarly, each pass through Steps 3–18 takes \(O((|U_i|+|A_i|)|U_i||A_i|)\), which is bounded by \(O((|U_n|+|A_n|)|U_n||A_n|)\) because \(U_i\subseteq U_n\) and \(A_i\subseteq A_n\). So, the time complexity of Algorithm 1 is \(O(n(|U_n|+|A_n|)|U_n||A_n|)\), where n is the number of approximate cognitive computing states. That is, Algorithm 1 runs in polynomial time.
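Algorithm 1 itself is not reproduced in this text. The following is a hypothetical Python sketch of the recursive strategy described above, not the authors' Algorithm 1: object granules are updated state by state (new objects get their granule over \(A_{i-1}\); every object then absorbs its granule over \(\Delta A_{i-1}\) by componentwise union, as in Theorem 5), and granular concepts are rebuilt from the granules. On random toy data, the result is compared with a from-scratch computation over the final state.

```python
import random
from functools import reduce

UNKNOWN = "?"


def granule(row, attrs):
    """(pi(x), theta(x)) of one object over the attribute set attrs."""
    return (frozenset(a for a in attrs if row[a] == 1),
            frozenset(a for a in attrs if row[a] in (1, UNKNOWN)))


def union(p, q):
    return (p[0] | q[0], p[1] | q[1])


def concepts_from_granules(gran, attrs):
    """Granular concepts computed from object granules only."""
    def extent(intent):
        return frozenset(y for y, (P, T) in gran.items()
                         if intent[0] <= P and intent[1] <= T)
    G = {(extent(gx), gx) for gx in gran.values()}
    for a in attrs:
        intent = reduce(lambda p, q: (p[0] & q[0], p[1] & q[1]),
                        (gx for gx in gran.values() if a in gx[0]),
                        (frozenset(attrs), frozenset(attrs)))
        G.add((extent(intent), intent))
    return G


def incremental(ctx, stages):
    """Hypothetical incremental pass over states (U_1, A_1), ..., (U_n, A_n)."""
    U1, A1 = stages[0]
    gran = {x: granule(ctx[x], A1) for x in U1}            # state 1
    for (U_prev, A_prev), (U_i, A_i) in zip(stages, stages[1:]):
        for x in U_i - U_prev:                              # new objects on A_{i-1}
            gran[x] = granule(ctx[x], A_prev)
        dA = A_i - A_prev
        for x in U_i:                                       # Theorem 5: add granule on Delta A
            gran[x] = union(gran[x], granule(ctx[x], dA))
    return concepts_from_granules(gran, stages[-1][1])


def batch(ctx, U, A):
    """Reference computation over the final state, from scratch."""
    return concepts_from_granules({x: granule(ctx[x], A) for x in U}, A)


rng = random.Random(1)
ATTRS = "abcdefg"
ctx = {x: {a: rng.choice([1, 0, UNKNOWN]) for a in ATTRS} for x in range(10)}
stages = [(frozenset(range(4)), frozenset("abc")),
          (frozenset(range(7)), frozenset("abcde")),
          (frozenset(range(10)), frozenset(ATTRS))]
print(incremental(ctx, stages) == batch(ctx, stages[-1][0], stages[-1][1]))  # True
```

At each state, the sketch only reads the values on \(\Delta U_{i-1}\times A_{i-1}\) and \(U_i\times \Delta A_{i-1}\), which is what makes the update incremental.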

Finally, we use an example to illustrate Algorithm 1.

Example 3

Continuing Example 2, let \(U_1=\{1,2,3,4\}\) and \(A_1=\{a,b,c,d\}\). Then, we have the following equalities:

Based on Eq. (12), the granular concepts of \(f_1\) and \(g_1\) are as follows:

Take \(\Delta U_1=\{5,6,7,8,9\}\). We have

Set \(\Delta A_1=\{e,f,g\}\). It follows

Furthermore, let \(U_2=\{1,2,3,4,5,6,7,8,9\}\) and \(A_2=\{a,b,c,d,e,f,g\}\). By Eq. (17), we obtain

According to Eq. (18), we get

Based on Eq. (19), we conclude

Then, it follows from Eq. (20) that

Consequently, the granular concepts of the approximate cognitive computing system \(\mathcal {AS}=\mathcal {AS}_{f_2g_2}\) are

4 Cognitive processes

In Sect. 3, granular concepts were generated by an approximate cognitive computing system. From the viewpoint of cognitive computing, granular concepts are the basic information granules stored in the human brain. They are reactivated when a clue comes to mind, a procedure often called a cognitive process.

Since a clue may consist of objects, attributes or both, cognitive processes are investigated in this section from three aspects: a) learning a granular concept from an object set; b) learning a granular concept from a pair of attribute sets; c) learning a granular concept from both.

4.1 Granular concept learning from an object set based on similarity

In cognitive computing, similarity is commonly used to recall something when a clue is provided. Many methods for measuring concept similarity exist; we omit the details here and refer interested readers to [6, 35].

In this subsection, the clue is assumed to be a nonempty object set \(X_0\). According to the above discussion, the granular concepts \(G_{f_ng_n}\) of an approximate cognitive computing system \(\mathcal {AS}=\bigcup \nolimits _{i=2}^{n}\{\mathcal {AS}_{f_ig_i}\}\) are the basic knowledge used to accomplish the learning task.

In what follows, we first introduce the notion of similarity between two nonempty object sets.

Definition 6

The similarity between two nonempty object sets \(X_1,X_2\subseteq U_n\) is defined as

According to Definition 6, we have the following proposition.

Proposition 1

For nonempty object sets \(X,X_1,X_2\subseteq U_n\), both \(s_u(X,X)=1\) and \(s_u(X_1,X_2)=s_u(X_2,X_1)\) are true.

Then, based on this similarity, granular concept learning from a nonempty object set \(X_0\) is to select the granular concept \((X,(B,C))\in G_{f_ng_n}\) which satisfies

\[s_u(X,X_0)=\max_{(Y,(D,E))\in G_{f_ng_n}}s_u(Y,X_0). \tag{22}\]
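The explicit formula of Definition 6 is not reproduced in this text. The sketch below assumes that \(s_u\) is the mean of the two inclusion ratios \(|X_1\cap X_2|/|X_1|\) and \(|X_1\cap X_2|/|X_2|\); this assumption satisfies Proposition 1 and reproduces the value 7/8 reported in Example 4. The selection step then returns a granular concept whose extent maximizes \(s_u\) against the clue (ties are broken by list order). The concept list and the helper names are hypothetical.

```python
from fractions import Fraction


def s_u(X1, X2):
    """Assumed object-set similarity: mean of |X1 & X2|/|X1| and |X1 & X2|/|X2|."""
    X1, X2 = frozenset(X1), frozenset(X2)
    if not X1 or not X2:
        raise ValueError("s_u is defined for nonempty object sets only")
    common = len(X1 & X2)
    return Fraction(common, 2 * len(X1)) + Fraction(common, 2 * len(X2))


def learn_from_objects(concepts, X0):
    """Select a granular concept whose extent is most similar to the clue X0."""
    return max(concepts, key=lambda concept: s_u(concept[0], X0))


def concept(ext, b, c):
    return (frozenset(ext), (frozenset(b), frozenset(c)))


# Hypothetical granular concepts (toy data, not from the paper's tables).
CONCEPTS = [concept({1}, "ab", "abe"), concept({2, 4}, "ac", "ace"),
            concept({3}, "bce", "bce"), concept({4}, "ace", "ace"),
            concept({1, 2, 4}, "a", "ae"), concept({1, 3}, "b", "be"),
            concept({2, 3, 4}, "c", "ce"), concept({3, 4}, "ce", "ce")]

print(s_u({3, 4, 5, 9}, {4, 5, 9}))                   # 7/8
print(sorted(learn_from_objects(CONCEPTS, {2, 3})[0]))  # [2, 3, 4]
```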

Example 4

Continuing Example 3, suppose that the given clue is \(X_0=\{4,5,9\}\). Then, based on Eq. (21), it follows

Similarly, we obtain the following equalities:

That is, \((X_{12},(B_{12},C_{12}))=(3459,(c,ce))\) with the similarity \(s_u(X_{12},X_0)=\frac{7}{8}\) is the best granular concept which matches the given clue.

4.2 Granular concept learning from a pair of attribute sets based on similarity

Similarly, learning a granular concept from a pair of attribute sets requires a similarity between pairs of attribute sets.

Definition 7

Let \((B_1,C_1),(B_2,C_2)\in 2^{A_n}\times 2^{A_n}\) and \(\lambda \in (0,1)\). Then, the similarity between \((B_1,C_1)\) and \((B_2,C_2)\) is defined as

where \(s_a(\cdot ,\cdot )\) is the similarity between attribute sets defined analogously to the one in Eq. (21).

Note that in Definition 7, \(\lambda\) is a weight parameter used to adjust the significance of the certain and possible parts. Furthermore, we have the following proposition.

Proposition 2

For \((B,C),(B_1,C_1),(B_2,C_2)\in 2^{A_n}\times 2^{A_n}\), we have \(s_{aa}((B,C),(B,C))=1\) and \(s_{aa}((B_1,C_1),(B_2,C_2))=s_{aa}((B_2,C_2),(B_1,C_1))\).

Then, based on this similarity, granular concept learning from a pair of attribute sets \((B_0,C_0)\) is to select the granular concept \((X,(B,C))\in G_{f_ng_n}\) which satisfies

\[s_{aa}((B,C),(B_0,C_0))=\max_{(Y,(D,E))\in G_{f_ng_n}}s_{aa}((D,E),(B_0,C_0)). \tag{24}\]
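A sketch of Definition 7 and the selection step, under stated assumptions: \(s_a\) is taken to be the same mean-of-inclusion-ratios similarity as assumed for objects, λ weights the certain parts B and 1 − λ the possible parts C, and (our own convention) two empty sets have similarity 1 while exactly one empty set gives 0. With λ = 3/5, these assumptions reproduce the value 51/60 of Example 5. The concept list and helper names are hypothetical.

```python
from fractions import Fraction


def overlap(S1, S2):
    """Assumed set similarity: mean of |S1 & S2|/|S1| and |S1 & S2|/|S2|.
    Convention (assumption): two empty sets -> 1, exactly one empty set -> 0."""
    S1, S2 = frozenset(S1), frozenset(S2)
    if not S1 and not S2:
        return Fraction(1)
    if not S1 or not S2:
        return Fraction(0)
    k = len(S1 & S2)
    return Fraction(k, 2 * len(S1)) + Fraction(k, 2 * len(S2))


def s_aa(p1, p2, lam):
    """Assumed form of Eq. (23): lam weights the certain parts, 1 - lam the possible parts."""
    (B1, C1), (B2, C2) = p1, p2
    return lam * overlap(B1, B2) + (1 - lam) * overlap(C1, C2)


def learn_from_attributes(concepts, clue, lam):
    """Select a granular concept whose intent is most similar to the clue (B0, C0)."""
    return max(concepts, key=lambda concept: s_aa(concept[1], clue, lam))


def concept(ext, b, c):
    return (frozenset(ext), (frozenset(b), frozenset(c)))


# Hypothetical granular concepts (toy data, not from the paper's tables).
CONCEPTS = [concept({1}, "ab", "abe"), concept({2, 4}, "ac", "ace"),
            concept({3}, "bce", "bce"), concept({4}, "ace", "ace"),
            concept({1, 2, 4}, "a", "ae"), concept({1, 3}, "b", "be"),
            concept({2, 3, 4}, "c", "ce"), concept({3, 4}, "ce", "ce")]

lam = Fraction(3, 5)
print(s_aa(("c", "ce"), ("ac", "ce"), lam))  # 17/20, i.e. 51/60
best = learn_from_attributes(CONCEPTS, ("ac", "ce"), lam)
print(sorted(best[0]))                       # [2, 4]
```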

Example 5

Continuing Example 3, suppose that the given clue is \((B_0,C_0)=(ac,ce)\) and \(\lambda =\frac{3}{5}\). Then, based on Eq. (23), it follows

Similarly, we obtain the following equalities:

That is, \((X_{12},(B_{12},C_{12}))=(3459,(c,ce))\) with the similarity \(s_{aa}((B_{12},C_{12}),(B_0,C_0))=\frac{51}{60}\) is the best granular concept which matches the given clue.

4.3 Granular concept learning from an object set and a pair of attribute sets based on similarity

Definition 8

Let \(X_1,X_2\subseteq U_n\), \((B_1,C_1),(B_2,C_2)\in 2^{A_n}\times 2^{A_n}\) and \(\mu \in (0,1)\). Then, the similarity between \((X_1,(B_1,C_1))\) and \((X_2,(B_2,C_2))\) is defined as

Note that in Definition 8, \(\mu\) is a weight parameter used to adjust the significance of an object set and a pair of attribute sets. Furthermore, we have the following proposition.

Proposition 3

Let \(X,X_1,X_2\subseteq U_n\) and \((B,C),(B_1,C_1),(B_2,C_2)\in 2^{A_n}\times 2^{A_n}\). Then, \(s((X,(B,C)),(X,(B,C)))=1\) and \(s((X_1,(B_1,C_1)),(X_2,(B_2,C_2)))=s((X_2,(B_2,C_2)),(X_1,(B_1,C_1)))\).

Then, based on this similarity, granular concept learning from an object set \(X_0\) and a pair of attribute sets \((B_0,C_0)\) is to select the granular concept \((X,(B,C))\in G_{f_ng_n}\) which satisfies

\[s\big((X,(B,C)),(X_0,(B_0,C_0))\big)=\max_{(Y,(D,E))\in G_{f_ng_n}}s\big((Y,(D,E)),(X_0,(B_0,C_0))\big).\]
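The formula of Definition 8 is not reproduced in this text. The sketch below assumes \(s=\mu\, s_{aa}+(1-\mu)\, s_u\), i.e. μ weights the attribute part; with \(\mu=\lambda=\frac{3}{5}\) this assignment reproduces the value 43/50 of Example 6, whereas the opposite assignment would give 173/200. The underlying set similarity, the empty-set convention, the concept list and the helper names are assumptions made for illustration.

```python
from fractions import Fraction


def overlap(S1, S2):
    """Assumed set similarity: mean of the two inclusion ratios (empty-set convention assumed)."""
    S1, S2 = frozenset(S1), frozenset(S2)
    if not S1 and not S2:
        return Fraction(1)
    if not S1 or not S2:
        return Fraction(0)
    k = len(S1 & S2)
    return Fraction(k, 2 * len(S1)) + Fraction(k, 2 * len(S2))


def s_aa(p1, p2, lam):
    """Assumed pair similarity: lam on the certain parts, 1 - lam on the possible parts."""
    (B1, C1), (B2, C2) = p1, p2
    return lam * overlap(B1, B2) + (1 - lam) * overlap(C1, C2)


def s(c1, c2, lam, mu):
    """Assumed form of Definition 8: mu weights the attribute part, 1 - mu the object part."""
    (X1, p1), (X2, p2) = c1, c2
    return mu * s_aa(p1, p2, lam) + (1 - mu) * overlap(X1, X2)


def learn_from_both(concepts, clue, lam, mu):
    """Select a granular concept most similar to the clue (X0, (B0, C0))."""
    return max(concepts, key=lambda concept: s(concept, clue, lam, mu))


def concept(ext, b, c):
    return (frozenset(ext), (frozenset(b), frozenset(c)))


# Hypothetical granular concepts (toy data, not from the paper's tables).
CONCEPTS = [concept({1}, "ab", "abe"), concept({2, 4}, "ac", "ace"),
            concept({3}, "bce", "bce"), concept({4}, "ace", "ace"),
            concept({1, 2, 4}, "a", "ae"), concept({1, 3}, "b", "be"),
            concept({2, 3, 4}, "c", "ce"), concept({3, 4}, "ce", "ce")]

lam = mu = Fraction(3, 5)
print(s(({3, 4, 5, 9}, ("c", "ce")), ({4, 5, 9}, ("ac", "ce")), lam, mu))  # 43/50
best = learn_from_both(CONCEPTS, ({2, 3}, ("ac", "ce")), lam, mu)
print(sorted(best[0]))  # [2, 3, 4]
```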

Example 6

Continuing Example 3, suppose that the given clue consists of \(X_0=\{4,5,9\}\) and \((B_0,C_0)=(ac,ce)\). Take \(\mu =\lambda =\frac{3}{5}\). Then, it follows from Definition 8 that

Similarly, we obtain the following equalities:

That is, \((X_{12},(B_{12},C_{12}))=(3459,(c,ce))\) with the similarity \(s((X_{12},(B_{12},C_{12})),(X_0,(B_0,C_0)))=\frac{43}{50}\) is the best granular concept which matches the given clue.

5 Numerical experiments

In this section, we conduct some numerical experiments to evaluate the proposed cognitive concept learning algorithms.

In the experiments, we selected five data sets from the UCI Machine Learning Repository [7]: the Post-Operative Patient, Hepatitis, Meta-data, Breast Cancer Wisconsin (Original) and Mushroom data sets. Since none of them can be used directly as a standard data set for evaluating the proposed concept learning algorithms, different data pre-processing techniques were required to convert them. The details are as follows.

(1) Post-Operative Patient data set contains 90 instances (each representing a patient) and 8 attributes. The eighth attribute has 3 missing values, and we divided its values into three categories: less than or equal to 7, between 7 and 14, and greater than or equal to 14. Each of the other attributes has three values, except the third one, which has four. Then, the scaling approach [8] was applied to generate a standard data set, denoted by Data set 1.

(2) Hepatitis data set has 155 instances and 19 attributes. In this data set, the fifth, sixth and seventh attributes each have 1 missing value, the sixteenth has 4, the tenth, eleventh, twelfth and thirteenth each have 5, the fourteenth has 6, the eighth has 9, the ninth has 10, the seventeenth has 16, the fifteenth has 27, and the eighteenth has 62 missing values. In the experiments, each attribute was binarized. The resulting standard data set is denoted by Data set 2.

(3) Meta-data data set contains 528 instances and 22 attributes. Since the attributes differ greatly, we selected only eleven of them, namely the fourth to the fourteenth. Among these, both the eleventh and thirteenth attributes have 38 missing values. To generate a standard data set, each attribute was binarized. We denote this standard data set by Data set 3.

(4) Breast Cancer Wisconsin (Original) data set contains 699 instances (each representing a patient) and 10 attributes. In this data set, the sixth attribute has 16 missing values. Once again, each attribute was binarized to generate a standard data set, denoted by Data set 4.

(5) Mushroom data set consists of 8124 instances and 22 attributes. The twelfth attribute contains 2480 missing values. In the experiments, we split the values of each attribute, in increasing order, into three pairwise disjoint intervals of equal length. Then, the data set was converted by the scaling approach into a standard data set, denoted by Data set 5.
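The scaling approach [8] is referenced above but not detailed. A minimal sketch of nominal scaling into a three-valued table could look as follows. It assumes that every observed value v of an attribute m becomes a binary attribute "m=v", and that a missing value yields "?" in every attribute derived from m; how missing values were actually propagated in the experiments is an assumption here. `nominal_scale` and the records are hypothetical.

```python
from typing import Dict, List, Optional, Union

UNKNOWN = "?"
Value = Union[int, str]


def nominal_scale(records: List[Dict[str, Optional[str]]]) -> List[Dict[str, Value]]:
    """Turn multi-valued records into a three-valued table by nominal scaling.

    Each attribute m with observed value v becomes a binary attribute "m=v".
    Assumption: a missing value (None) yields "?" for every attribute derived from m.
    """
    domains: Dict[str, List[str]] = {}
    for rec in records:
        for attr, val in rec.items():
            values = domains.setdefault(attr, [])
            if val is not None and val not in values:
                values.append(val)
    table: List[Dict[str, Value]] = []
    for rec in records:
        row: Dict[str, Value] = {}
        for attr, values in domains.items():
            val = rec.get(attr)
            for v in values:
                row[f"{attr}={v}"] = UNKNOWN if val is None else int(val == v)
        table.append(row)
    return table


# Hypothetical records with one missing value.
records = [{"temp": "low"}, {"temp": None}, {"temp": "high"}]
for row in nominal_scale(records):
    print(row)
# {'temp=low': 1, 'temp=high': 0}
# {'temp=low': '?', 'temp=high': '?'}
# {'temp=low': 0, 'temp=high': 1}
```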

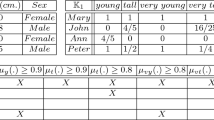

Furthermore, Data sets 1, 2, 3, 4 and 5 were divided into segments for designing their corresponding approximate cognitive computing systems: \(\mathcal {AS}^{(1)}=\bigcup \nolimits _{i=2}^{4}\{\mathcal {AS}_{f_ig_i}^{(1)}\}\), \(\mathcal {AS}^{(2)}=\bigcup \nolimits _{i=2}^{4}\{\mathcal {AS}_{f_ig_i}^{(2)}\}\), \(\mathcal {AS}^{(3)}=\bigcup \nolimits _{i=2}^{4}\{\mathcal {AS}_{f_ig_i}^{(3)}\}\), \(\mathcal {AS}^{(4)}=\bigcup \nolimits _{i=2}^{4}\{\mathcal {AS}_{f_ig_i}^{(4)}\}\) and \(\mathcal {AS}^{(5)}=\bigcup \nolimits _{i=2}^{4}\{\mathcal {AS}_{f_ig_i}^{(5)}\}\). See Table 3 for the details, where ACCS is the abbreviation of “Approximate cognitive computing system”. In the table, \(U_i=\{x\sim y\}\) means that \(U_i\) consists of the x-th to the y-th objects (endpoints included), and \(A_i\) is specified in the same way.

For convenience, we denote by Algorithms 2, 3 and 4 the processes of learning granular concepts from an object set, a pair of attribute sets, and both, respectively. Moreover, we set the weight parameters \(\lambda =\mu =0.6\). Then, Algorithms 1, 2, 3 and 4 were applied to Data sets 1, 2, 3, 4 and 5. The running times are reported in Table 4, where |U| is the cardinality of the object set, |A| is that of the attribute set, and n is the number of approximate cognitive computing states. Note that for each of Algorithms 2, 3 and 4, we generated 100 clues randomly, so Table 4 reports their average running times. It can be observed from the table that all the algorithms are reasonably efficient on the five chosen data sets.

Note that the time complexity of Algorithm 1 is \(O(n(|U_n|+|A_n|)|U_n||A_n|)\), while those of Algorithms 2, 3 and 4 are \(O(|U_n|^2+|U_n||A_n|+|A_n|^2)\). That is, in theory, the time complexity of Algorithm 1 is much higher than those of Algorithms 2, 3 and 4. This was also indirectly confirmed by Data set 5 in the experiments.

6 Final remarks

Cognitive concept learning has become a hot topic in recent years and has attracted much attention from the communities of formal concept analysis, granular computing and cognitive computing. However, it is still at an early stage: its theoretical frameworks, methods and potential applications need further development.

Our current work mainly focuses on cognitive concept learning from incomplete information. Specifically, we have proposed a pair of approximate cognitive operators and an approximate cognitive computing system to form and update granular concepts. Moreover, cognitive processes have been simulated based on three types of similarities to learn granular concepts from given clues. In addition, we have conducted numerical experiments to evaluate the effectiveness of the proposed concept learning methods.

Compared to existing work, the main contributions of our paper are as follows: (1) new cognitive operators have been defined for an incomplete information environment; (2) an approximate cognitive computing system has been designed to fuse incomplete information that is updated periodically; (3) three types of similarities have been presented and used to learn granular concepts from given clues.

It should also be pointed out that our work differs from that in [13], although both discuss concept learning from incomplete information. Our work learns only part of the concepts (the granular concepts), whereas [13] learns all of them. In addition, concept learning in our work is realized by an axiomatic method, while in [13] it is done by a constructive method.

Note that learning cognitive concepts from incomplete information is a challenging task. Although our work has put forward some theoretical frameworks and methods to address this problem, much remains to be done. For example, how can they be applied in the real world? This involves the semantic explanation of approximate cognitive concepts, the assignment of the parameters \(\lambda\) and \(\mu\), the evaluation of the learnt granular concepts, and the improvement of learning efficiency [17]. Moreover, cognitive logic [26, 50] should be incorporated into cognitive concept learning, and the uncertainty [29] inherent in incomplete information needs to be considered. Besides, learning cognitive concepts from fuzzy data [31–33] also deserves investigation. Approaches to cognitive concept learning from incomplete information cannot be directly extended to fuzzy information, since knowledge representation and information measures differ substantially between the two cases [1, 9, 30]. These issues will be studied in our future work.

References

Ashfaq RAR, Wang XZ, Huang JZX et al (2016) Fuzziness based semi-supervised learning approach for intrusion detection system. Inf Sci. doi:10.1016/j.ins.2016.04.019

Aswani Kumar Ch (2012) Fuzzy clustering-based formal concept analysis for association rules mining. Appl Artif Intell 26(3):274–301

Aswani Kumar Ch, Ishwarya MS, Loo CK (2015) Formal concept analysis approach to cognitive functionalities of bidirectional associative memory. Biol Inspired Cognit Architect 12:20–33

Chen JK, Li JJ, Lin YJ, Lin GP, Ma ZM (2015) Relations of reduction between covering generalized rough sets and concept lattices. Inf Sci 304:16–27

Dias SM, Vieira NJ (2013) Applying the JBOS reduction method for relevant knowledge extraction. Expert Syst Appl 40(5):1880–1887

Formica A (2008) Concept similarity in formal concept analysis: an information content approach. Knowl Based Syst 21:80–87

Frank A, Asuncion A (2010) UCI repository of machine learning databases. Tech. Rep., Univ. California, Sch. Inform. Comp. Sci., Irvine, CA. http://archive.ics.uci.edu/ml

Ganter B, Wille R (1999) Formal concept analysis: mathematical foundations. Springer, Berlin

He YL, Wang XZ, Huang JZX (2016) Fuzzy nonlinear regression analysis using a random weight network. Inf Sci. doi:10.1016/j.ins.2016.01.037

Kang XP, Li DY, Wang SG, Qu KS (2012) Formal concept analysis based on fuzzy granularity base for different granulations. Fuzzy Sets Syst 203:33–48

Li JH, Huang CC, Xu WH, Qian YH, Liu WQ (2015) Cognitive concept learning via granular computing for big data. In: Proceedings of the 2015 international conference on machine learning and cybernetics. Guangzhou, pp 289–294

Li JH, Huang CC, Qi JJ, Qian YH, Liu WQ (2016) Three-way cognitive concept learning via multi-granularity. Inf Sci. doi:10.1016/j.ins.2016.04.051

Li JH, Mei CL, Lv YJ (2013) Incomplete decision contexts: approximate concept construction, rule acquisition and knowledge reduction. Int J Approx Reas 54(1):149–165

Li JH, Mei CL, Xu WH, Qian YH (2015) Concept learning via granular computing: a cognitive viewpoint. Inf Sci 298:447–467

Li JH, Ren Y, Mei CL, Qian YH, Yang XB (2016) A comparative study of multigranulation rough sets and concept lattices via rule acquisition. Knowl Based Syst 91:152–164

Li LF, Zhang JK (2010) Attribute reduction in fuzzy concept lattices based on T implication. Knowl Based Syst 23(6):497–503

Lu SX, Wang XZ, Zhang GQ, Zhou X (2015) Effective algorithms of the Moore–Penrose inverse matrices for extreme learning machine. Intell Data Anal 19(4):743–760

Qi JJ, Qian T, Wei L (2016) The connections between three-way and classical concept lattices. Knowl Based Syst 91:143–151

Ma JM, Zhang WX (2013) Axiomatic characterizations of dual concept lattices. Int J Approx Reas 54(5):690–697

Ma JM, Zhang WX, Leung Y, Song XX (2007) Granular computing and dual Galois connection. Inf Sci 177(23):5365–5377

Pedrycz W (2013) Granular computing: analysis and design of intelligent systems. CRC Press/Taylor & Francis, Boca Raton

Qian YH, Liang JY, Pedrycz W, Dang CY (2010) Positive approximation: an accelerator for attribute reduction in rough set theory. Artif Intell 174:597–618

Qian YH, Liang JY, Yao YY, Dang CY (2010) MGRS: a multi-granulation rough set. Inf Sci 180(6):949–970

Salehi S, Selamat A, Fujita H (2015) Systematic mapping study on granular computing. Knowl Based Syst 80:78–97

Shao MW, Leung Y, Wu WZ (2014) Rule acquisition and complexity reduction in formal decision contexts. Int J Approx Reas 55(1):259–274

She YH (2014) On the rough consistency measures of logic theories and approximate reasoning in rough logic. Int J Approx Reas 55(1):486–499

Singh PK, Aswani Kumar Ch (2014) Bipolar fuzzy graph representation of concept lattice. Inf Sci 288:437–448

Tan AH, Li JJ, Lin GP (2015) Connections between covering-based rough sets and concept lattices. Int J Approx Reas 56:43–58

Wang XZ (2015) Uncertainty in learning from big data-editorial. J Intell Fuzzy Syst 28(5):2329–2330

Wang XZ, Ashfaq RAR, Fu AM (2015) Fuzziness based sample categorization for classifier performance improvement. J Intell Fuzzy Syst 29(3):1185–1196

Wang XZ, Dong CR (2009) Improving generalization of fuzzy if-then rules by maximizing fuzzy entropy. IEEE Trans Fuzzy Syst 17(3):556–567

Wang XZ, Dong LC, Yan JH (2012) Maximum ambiguity based sample selection in fuzzy decision tree induction. IEEE Trans Knowl Data Eng 24(8):1491–1505

Wang XZ, Xing HJ, Li Y et al (2014) A study on relationship between generalization abilities and fuzziness of base classifiers in ensemble learning. IEEE Trans Fuzzy Syst 23:1638–1654

Wang LD, Liu XD (2008) Concept analysis via rough set and AFS algebra. Inf Sci 178(21):4125–4137

Wang LD, Liu XD (2008) A new model of evaluating concept similarity. Knowl Based Syst 21:842–846

Wang Y (2008) On concept algebra: a denotational mathematical structure for knowledge and software modelling. Int J Cognit Inf Nat Intell 2(2):1–19

Wang Y (2009) On cognitive computing. Int J Softw Sci Comput Intell 1(3):1–15

Wei L, Wan Q (2016) Granular transformation and irreducible element judgment theory based on pictorial diagrams. IEEE Trans Cybernet 46(2):380–387

Wille R (1982) Restructuring lattice theory: an approach based on hierarchies of concepts. In: Rival I (ed) Ordered sets. Reidel, Dordrecht, pp 445–470

Wu WZ, Leung Y, Mi JS (2009) Granular computing and knowledge reduction in formal contexts. IEEE Trans Knowl Data Eng 21(10):1461–1474

Wu WZ, Leung Y (2011) Theory and applications of granular labelled partitions in multi-scale decision tables. Inf Sci 181(18):3878–3897

Xu WH, Li WT (2016) Granular computing approach to two-way learning based on formal concept analysis in fuzzy datasets. IEEE Trans Cybernet 46(2):366–379

Xu WH, Pang JZ, Luo SQ (2014) A novel cognitive system model and approach to transformation of information granules. Int J Approx Reas 55(3):853–866

Yang XB, Qi Y, Yu HL, Song XN, Yang JY (2014) Updating multigranulation rough approximations with increasing of granular structures. Knowl Based Syst 64:59–69

Yao YY (2004) Concept lattices in rough set theory. In: Proceedings of the 2004 annual meeting of the North American Fuzzy Information Processing Society. IEEE Computer Society, Washington, D.C., pp 796–801

Yao YY (2004) A comparative study of formal concept analysis and rough set theory in data analysis. In: Proceedings of 4th international conference on rough sets and current trends in computing (RSCTC 2004), Uppsala, Sweden, pp 59–68

Yao YY (2009) Interpreting concept learning in cognitive informatics and granular computing. IEEE Trans Syst Man Cybern Part B Cybern 39(4):855–866

Yao YY (2016) A triarchic theory of granular computing. Granul Comput 1(2):145–157

Zadeh LA (1997) Towards a theory of fuzzy information granulation and its centrality in human reasoning and fuzzy logic. Fuzzy Sets Syst 90:111–117

Zhai YH, Li DY, Qu KS (2015) Decision implication canonical basis: a logical perspective. J Comput Syst Sci 81(1):208–218

Zhang WX, Xu WH (2007) Cognitive model based on granular computing. Chin J Eng Math 24(6):957–971

Acknowledgments

This work was supported by the National Natural Science Foundation of China (Nos. 61305057, 61562050 and 61573173) and Key Laboratory of Oceanographic Big Data Mining & Application of Zhejiang Province (No. OBDMA201502).

Cite this article

Zhao, Y., Li, J., Liu, W. et al. Cognitive concept learning from incomplete information. Int. J. Mach. Learn. & Cyber. 8, 159–170 (2017). https://doi.org/10.1007/s13042-016-0553-8
