Abstract

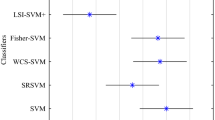

Support vector machines (SVMs) are well-known machine learning algorithms; however, they may fail to capture the intrinsic manifold structure of data and can yield lower classification accuracy when learning from structured data sets. To mitigate this deficiency, in this article we propose a novel method termed the locality similarity and dissimilarity preserving support vector machine (LSDPSVM). LSDPSVM inherits the characteristics of SVMs and, in addition, exploits the intrinsic manifold structure of the data from both the inter-class and the intra-class perspectives to improve classification accuracy. In LSDPSVM, a squared loss function is used to reduce the complexity of the model, and an algorithm based on the concave-convex procedure (CCCP) is used to solve the resulting optimization problem. Experimental results on UCI benchmark datasets and the Extended Yale Face datasets demonstrate that LSDPSVM performs better than other similar methods.
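The abstract names two technical ingredients without spelling them out: locality information drawn from both intra-class and inter-class neighborhoods, and a CCCP-based solver. The sketch below illustrates each in isolation under explicit assumptions. The k-nearest-neighbor heat-kernel graphs and the Laplacian quadratic forms follow the style of locality preserving projections and are only a guess at the kind of terms involved, not the actual LSDPSVM objective. The CCCP routine is applied to a toy double-well function, not to the LSDPSVM problem. All function names and the parameters `k` (neighborhood size) and `t` (heat-kernel width) are hypothetical.

```python
import numpy as np


def locality_graphs(X, y, k=3, t=1.0):
    """Hypothetical intra-class (similarity) and inter-class (dissimilarity)
    k-NN heat-kernel graphs in the style of locality preserving projections.
    Illustrative assumption only, not the graph definition used by LSDPSVM."""
    n = X.shape[0]
    # Pairwise squared Euclidean distances, shape (n, n).
    sq = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    order = np.argsort(sq, axis=1)
    knn = np.zeros((n, n), dtype=bool)
    for i in range(n):
        # The k nearest neighbors of point i, excluding i itself.
        neighbors = [j for j in order[i] if j != i][:k]
        knn[i, neighbors] = True
    knn = knn | knn.T  # symmetrize: i ~ j if either is a neighbor of the other
    heat = np.exp(-sq / t)
    same = y[:, None] == y[None, :]
    W_within = np.where(knn & same, heat, 0.0)    # same-class neighbors
    W_between = np.where(knn & ~same, heat, 0.0)  # different-class neighbors
    return W_within, W_between


def laplacian(W):
    """Graph Laplacian L = D - W, where D is the diagonal degree matrix."""
    return np.diag(W.sum(axis=1)) - W


def locality_terms(w, X, W_within, W_between):
    """Quadratic forms z^T L z with z = X w, one per graph. For a symmetric W,
    z^T L z = 0.5 * sum_ij W_ij (z_i - z_j)^2, so the within-class term is
    small when same-class neighbors project close together and the
    between-class term is large when different-class neighbors move apart."""
    z = X @ w
    return z @ laplacian(W_within) @ z, z @ laplacian(W_between) @ z


def cccp_double_well(x0, iters=50):
    """Generic concave-convex procedure on the toy f(x) = x**4 - 2*x**2,
    split as u(x) = x**4 (convex) minus v(x) = 2*x**2 (convex). Each step
    minimizes the convex surrogate u(x) - v'(x_t) * x, whose optimality
    condition 4x^3 = 4x_t gives x_{t+1} = cbrt(x_t)."""
    xs = [float(x0)]
    for _ in range(iters):
        xs.append(float(np.cbrt(xs[-1])))
    return xs
```

A classifier built on such terms would typically penalize the within-class quantity and reward the between-class quantity alongside the usual margin and loss terms; the exact combination used by LSDPSVM is not given in this abstract. The CCCP toy also shows the method's guarantee and its limit: the objective never increases, and starting from 0.5 or -0.5 the iterates reach the minima at 1 or -1, but a start at 0 stays at the stationary point 0, which is a local maximum.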

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Nos. 11371365 and 11671032).

About this article

Cite this article

Zhang, J., Hou, Q., Zhen, L. et al. Locality similarity and dissimilarity preserving support vector machine. Int. J. Mach. Learn. & Cyber. 9, 1663–1674 (2018). https://doi.org/10.1007/s13042-017-0671-y