Abstract

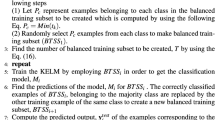

Many real-world applications are imbalanced classification problems, in which one class has significantly fewer samples than another. The classes with the larger and smaller proportions of samples are called the majority and minority classes, respectively. The weighted extreme learning machine (WELM) was designed to handle the class imbalance problem. Several works, such as boosting WELM (BWELM) and ensemble WELM, extended WELM by using ensemble methods. All these variants use WELM with sigmoid nodes to handle the class imbalance problem effectively. WELM with sigmoid nodes suffers from performance fluctuation due to the random initialization of the weights between the input and hidden layers. Hybrid artificial bee colony optimization-based WELM extends WELM by using the artificial bee colony optimization algorithm to find the optimal weights between the input and hidden layers. The computational cost of the kernelized ELM is directly proportional to the number of kernel functions. This work therefore proposes a novel ensemble that uses reduced kernelized WELM as the base classifier to solve the class imbalance problem more effectively. The proposed work uses random undersampling to design balanced training subsets, which act as the centroids of the reduced kernelized WELM classifier. The proposed ensemble generates the base classifiers sequentially. The majority class samples misclassified by the first base classifier, along with all of the minority class samples, act as the centroids of the second base classifier; that is, the base classifiers differ in the choice of centroids for the kernel functions. The proposed work also has a lower computational cost than BWELM. The proposed method is evaluated on benchmark real-world imbalanced datasets taken from the KEEL dataset repository. It was also tested on binary synthetic datasets to analyze its robustness. The experimental results demonstrate the superiority of the proposed algorithm over other state-of-the-art methods for class imbalance learning, which is also confirmed by the statistical tests conducted.
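The centroid-selection idea can be illustrated with a minimal Python sketch. It is not the authors' implementation: the Gaussian kernel, the weighting scheme (each sample weighted by the inverse of its class size, as commonly used in WELM), the regularized closed-form solution, and all function names and parameter values (`C`, `sigma`, the synthetic data) are assumptions made for illustration only.

```python
import numpy as np

def rbf_kernel(A, B, sigma=1.0):
    """Gaussian kernel matrix between the rows of A and the rows of B."""
    sq = (A**2).sum(1)[:, None] + (B**2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))

def balanced_centroids(X, y, rng):
    """Random undersampling: all minority samples plus an equally sized
    random draw (without replacement) from the majority class."""
    classes, counts = np.unique(y, return_counts=True)
    minority = classes[np.argmin(counts)]
    min_idx = np.flatnonzero(y == minority)
    maj_idx = np.flatnonzero(y != minority)
    keep = rng.choice(maj_idx, size=min_idx.size, replace=False)
    return X[np.concatenate([min_idx, keep])]

def fit_reduced_kwelm(X, y, centroids, C=1.0, sigma=1.0):
    """Assumed solution: beta = (I/C + K^T W K)^{-1} K^T W y,
    where K = k(X, centroids) and W holds 1/class-size weights."""
    K = rbf_kernel(X, centroids, sigma)
    classes, counts = np.unique(y, return_counts=True)
    w = 1.0 / counts[np.searchsorted(classes, y)]
    KtW = K.T * w  # scales column i of K^T by w[i], i.e. K^T W
    A = np.eye(centroids.shape[0]) / C + KtW @ K
    return np.linalg.solve(A, KtW @ y)

def predict(X, centroids, beta, sigma=1.0):
    """Label +1 or -1 from the sign of the kernel expansion."""
    score = rbf_kernel(X, centroids, sigma) @ beta
    return np.where(score >= 0, 1.0, -1.0)

def next_centroids(X, y, pred, minority=1.0):
    """Centroids for the following base classifier: misclassified
    majority samples together with all minority samples."""
    wrong_majority = (y != minority) & (pred != y)
    return X[(y == minority) | wrong_majority]

# Hypothetical synthetic data: 200 majority (-1) and 20 minority (+1) points.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (200, 2)), rng.normal(4.0, 1.0, (20, 2))])
y = np.concatenate([-np.ones(200), np.ones(20)])

centroids = balanced_centroids(X, y, rng)
beta = fit_reduced_kwelm(X, y, centroids)
pred = predict(X, centroids, beta)
second = next_centroids(X, y, pred)
```

Because the kernel matrix has one column per centroid rather than one per training sample, the linear system solved here is 40 by 40 instead of 220 by 220, which is the cost reduction a reduced kernel formulation aims for.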


Cite this article

Raghuwanshi, B.S., Shukla, S. Classifying imbalanced data using ensemble of reduced kernelized weighted extreme learning machine. Int. J. Mach. Learn. & Cyber. 10, 3071–3097 (2019). https://doi.org/10.1007/s13042-019-01001-9

DOI: https://doi.org/10.1007/s13042-019-01001-9