Abstract

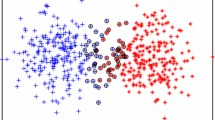

As a state-of-the-art multi-class supervised novelty detection method, the supervised novelty detection support vector machine (SND-SVM) extends the one-class support vector machine (OC-SVM). However, it requires solving a more time-consuming quadratic programming (QP) problem whose scale is the number of training samples multiplied by the number of normal classes. To speed up SND-SVM learning, we propose a down sampling framework for SND-SVM. First, the learning result of SND-SVM is determined only by the small subset of samples that have non-zero Lagrange multipliers, and we point out that the samples likely to have non-zero Lagrange multipliers are located in the boundary regions of each class. Second, the samples located in boundary regions can be found by a boundary detector. Therefore, any boundary detector can be incorporated into the proposed down sampling framework. In this paper, we use a classical boundary detector, the local outlier factor (LOF), to illustrate the effectiveness of the framework. Experiments conducted on several benchmark and synthetic datasets show that SND-SVM trains much faster after down sampling.
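The two steps described above (score every sample with a boundary detector, then keep only the likely boundary samples before solving the QP) can be sketched as follows. This is a minimal, stdlib-only illustration under stated assumptions, not the authors' implementation. The abstract does not give the exact selection rule, so the sketch assumes that, within each class, the samples with the highest LOF scores are the boundary candidates. The names `lof_downsample`, `qp_variables`, `keep_ratio` and `k` are hypothetical. Training SND-SVM itself requires a QP solver and is not shown.

```python
import math
from collections import defaultdict


def knn(points, i, k):
    """Return the k nearest neighbours of points[i] as (distance, index) pairs."""
    dists = sorted(
        (math.dist(points[i], points[j]), j) for j in range(len(points)) if j != i
    )
    return dists[:k]


def lof_scores(points, k):
    """Brute-force O(n^2) local outlier factor; ties at the k-distance are
    broken by index instead of enlarging the neighbourhood."""
    n = len(points)
    neigh = [knn(points, i, k) for i in range(n)]
    k_dist = [neigh[i][-1][0] for i in range(n)]  # distance to k-th neighbour
    lrd = []
    for i in range(n):
        # reach-dist(p, o) = max(k-distance(o), d(p, o))
        reach = [max(k_dist[j], d) for d, j in neigh[i]]
        # small epsilon guards against duplicate points (zero reach distance)
        lrd.append(1.0 / (sum(reach) / k + 1e-12))
    # LOF(p) = mean over neighbours o of lrd(o) / lrd(p)
    return [sum(lrd[j] for _, j in neigh[i]) / (k * lrd[i]) for i in range(n)]


def lof_downsample(X, labels, keep_ratio=0.3, k=5):
    """Hypothetical rule: per class, keep the ceil(keep_ratio * n_c) samples
    with the highest within-class LOF, treated here as boundary candidates."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    kept = []
    for idxs in by_class.values():
        if len(idxs) < 2:  # too few points to score; keep them all
            kept.extend(idxs)
            continue
        pts = [X[i] for i in idxs]
        scores = lof_scores(pts, min(k, len(pts) - 1))
        n_keep = max(1, math.ceil(keep_ratio * len(pts)))
        order = sorted(range(len(pts)), key=lambda t: scores[t], reverse=True)
        kept.extend(idxs[t] for t in order[:n_keep])
    return sorted(kept)


def qp_variables(n_samples, n_classes):
    """QP scale as stated in the abstract: samples x normal classes."""
    return n_samples * n_classes


# Usage: two 5x5 grids, one per normal class.
grid = [(float(a), float(b)) for a in range(5) for b in range(5)]
X = grid + [(a + 10.0, b) for a, b in grid]
labels = [0] * 25 + [1] * 25
kept = lof_downsample(X, labels, keep_ratio=0.3, k=4)
print(len(kept), qp_variables(len(X), 2), qp_variables(len(kept), 2))
# prints: 16 100 32
```

On these toy grids the corner points receive the highest LOF and the grid centres the lowest, so the retained samples lie on each class's border. Under the abstract's sizing (samples × normal classes), the QP shrinks from 100 to 32 variables. A library detector such as scikit-learn's `LocalOutlierFactor` could replace the hand-written scorer.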

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Nos. 61602221, 61806126, 61976118, 41661083 and 71661015), the Natural Science Foundation of Jiangxi Province (No. 20171BAB212009), the Provincial Key Research and Development Program of Jiangxi (No. 20181ACE50030), and the Teaching Reform Project of Colleges and Universities in Jiangxi Province (No. JXJG-19-2-24).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations

About this article

Cite this article

Yi, Y., Shi, Y., Wang, W. et al. Combining Boundary Detector and SND-SVM for Fast Learning. Int. J. Mach. Learn. & Cyber. 12, 689–698 (2021). https://doi.org/10.1007/s13042-020-01196-2

DOI: https://doi.org/10.1007/s13042-020-01196-2