Abstract

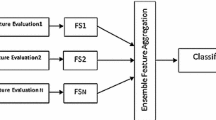

This paper models ensemble feature selection as a Multi-Criteria Decision-Making (MCDM) process for the first time. We use VIKOR, a well-known MCDM algorithm, to rank the features, treating the evaluations of several feature selection methods as the decision-making criteria. The proposed method, EFS-MCDM, first builds a decision matrix from the ranks that the various rankers assign to every feature. The VIKOR approach then assigns a score to each feature based on this decision matrix. Finally, the method outputs a rank vector of the features, from which the user can select the desired number of features. To illustrate the optimality and efficiency of the proposed method, we compare it with several ensemble feature selection methods that use a feature ranking strategy and with basic feature selection algorithms. The results show that our approach outperforms the other similar methods in terms of accuracy, F-score, and algorithm run-time.
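The pipeline described in the abstract (decision matrix of ranks, VIKOR scores, final rank vector) can be illustrated with a minimal sketch. This is not the authors' implementation: it follows the textbook VIKOR formulation and assumes that each ranker is a cost criterion (rank 1 is best), that all rankers carry equal weight, and that the VIKOR trade-off parameter is `v = 0.5`. The names `vikor_scores` and `rank_features` and the toy decision matrix are hypothetical.

```python
import numpy as np

def vikor_scores(decision, weights=None, v=0.5):
    """Textbook VIKOR score Q per feature (lower is better).

    decision: (n_features, n_rankers) array; entry [i, j] is the rank that
    ranker j gives to feature i (assumption: rank 1 is the best rank).
    weights:  criterion weights (assumption: equal weights if omitted).
    v:        trade-off between group utility S and individual regret R.
    """
    decision = np.asarray(decision, dtype=float)
    n_rankers = decision.shape[1]
    if weights is None:
        weights = np.full(n_rankers, 1.0 / n_rankers)

    best = decision.min(axis=0)    # ideal value per ranker (lowest rank)
    worst = decision.max(axis=0)   # anti-ideal value per ranker
    span = np.where(worst > best, worst - best, 1.0)  # avoid division by zero

    # Weighted, normalized distance of every feature from the ideal value.
    dist = weights * (decision - best) / span
    s = dist.sum(axis=1)   # group utility S_i
    r = dist.max(axis=1)   # individual regret R_i

    def scale(x):
        rng = x.max() - x.min()
        return (x - x.min()) / rng if rng > 0 else np.zeros_like(x)

    return v * scale(s) + (1 - v) * scale(r)

def rank_features(decision, **kwargs):
    """Feature indices ordered from best to worst VIKOR score."""
    return np.argsort(vikor_scores(decision, **kwargs), kind="stable")

# Toy decision matrix (hypothetical): 4 features ranked by 3 rankers.
decision = np.array([
    [1, 2, 1],
    [2, 1, 3],
    [3, 4, 2],
    [4, 3, 4],
])
order = rank_features(decision)
top_k = order[:2]  # the user keeps the desired number of features
print(order, top_k)
```

Because the output is a full ordering rather than a fixed subset, the number of selected features stays a free choice for the user, as the abstract describes.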

Cite this article

Hashemi, A., Dowlatshahi, M.B. & Nezamabadi-pour, H. Ensemble of feature selection algorithms: a multi-criteria decision-making approach. Int. J. Mach. Learn. & Cyber. 13, 49–69 (2022). https://doi.org/10.1007/s13042-021-01347-z

DOI: https://doi.org/10.1007/s13042-021-01347-z