Abstract

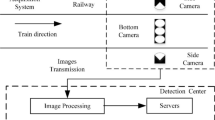

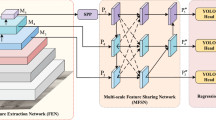

Faults in train mechanical parts pose a significant safety hazard to railway transportation. Although some image detection methods have replaced manual fault detection of train mechanical parts, their performance on small mechanical parts under low-illumination conditions remains unsatisfactory. To improve the accuracy and efficiency of train fault detection across different environments, we propose a multi-mode aggregation feature enhanced network (MAFENet) based on a single-stage detector (SSD). The network adopts a coarse-to-fine two-step adjustment structure and uses the K-means algorithm to design anchors. A receptive field enhancement module (RFEM) is designed to fuse features from different receptive fields, and an attention-guided detail feature enhancement module (ADEM) is designed to supplement deep-level feature maps with detailed features. In addition, the complete intersection over union (CIoU) loss is used to obtain more accurate bounding boxes. Experimental results on the train mechanical parts fault (TMPF) dataset show that MAFENet outperforms other SSD models. With an input size of 320 × 320 pixels, MAFENet achieves a mean average precision (mAP) of 0.9787 and a detection speed of 33 frames per second (FPS), which indicates that it can perform real-time detection, is robust to images captured under different environmental conditions, and can improve the efficiency of detecting faulty train parts.
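The abstract names the CIoU loss but does not spell it out. The sketch below follows the commonly used definition of CIoU (IoU penalized by a normalized center distance and an aspect-ratio term) and is not taken from the MAFENet implementation. The function name `ciou_loss`, the `(x1, y1, x2, y2)` corner box format, and the `eps` stabilizer are assumptions made for illustration.

```python
import math


def ciou_loss(box_pred, box_gt, eps=1e-9):
    """CIoU loss for two axis-aligned boxes in (x1, y1, x2, y2) format.

    Illustrative only: box format and eps are assumptions, not the
    paper's code.
    """
    px1, py1, px2, py2 = box_pred
    gx1, gy1, gx2, gy2 = box_gt

    # Widths and heights of the predicted and ground-truth boxes.
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Plain IoU: intersection area over union area.
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    union = pw * ph + gw * gh - inter
    iou = inter / (union + eps)

    # Squared distance between the two box centers.
    rho2 = (((px1 + px2) - (gx1 + gx2)) ** 2
            + ((py1 + py2) - (gy1 + gy2)) ** 2) / 4.0

    # Squared diagonal of the smallest box enclosing both boxes.
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term v and its trade-off weight alpha.
    v = (4.0 / math.pi ** 2) * (
        math.atan(gw / (gh + eps)) - math.atan(pw / (ph + eps))
    ) ** 2
    alpha = v / ((1.0 - iou) + v + eps)

    # Loss = 1 - CIoU = 1 - IoU + center penalty + aspect-ratio penalty.
    return 1.0 - iou + rho2 / c2 + alpha * v
```

Unlike a plain IoU loss, the center-distance term still varies when the boxes do not overlap, so the loss keeps distinguishing near misses from far misses. In a training framework the same arithmetic would be written with tensor operations so that it is differentiable and batched.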

Acknowledgements

We thank China University of Mining and Technology (Beijing) for providing the experimental hardware platform. This work was supported by the National Natural Science Foundation of China (No. 52075027), the Fundamental Research Funds for the Central Universities (2020XJJD03), and the State Key Laboratory of Coal Mining and Clean Utilization, China (2021-CMCU-KF012).

Author information

Contributions

The overall research objectives and technical routes were formulated by YT. ZJ conducted the investigations and experiments and wrote the first draft of the manuscript. ZZ-h, ZY, ZF-q, and GX-z assisted in writing the first draft.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have influenced the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Tao, Y., Jun, Z., Zhi-hao, Z. et al. Fault detection of train mechanical parts using multi-mode aggregation feature enhanced convolution neural network. Int. J. Mach. Learn. & Cyber. 13, 1781–1794 (2022). https://doi.org/10.1007/s13042-021-01488-1

DOI: https://doi.org/10.1007/s13042-021-01488-1