Abstract

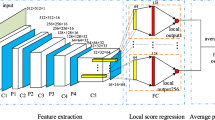

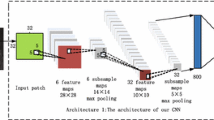

Convolutional neural networks (CNNs) have been widely applied to image quality assessment (IQA), but the limited size of IQA databases severely restricts the performance of CNN-based IQA models. In previous work, the most popular way to enlarge a database has been to divide each image into patches. However, the human visual system (HVS) perceives the quality of the objects in an image rather than the quality of its patches. Motivated by this fact, we propose a CNN-based algorithm for no-reference image quality assessment (NR-IQA) based on object detection. The network has three parts: an object detector, an image quality prediction network, and a self-correction measurement (SCM) network. First, the object detector detects objects in the input image. Second, a ResNet-18 network extracts features from the input image, and a fully connected (FC) layer follows to estimate the image quality. Third, another ResNet-18 network extracts features from both the image and its detected objects, and the features of each object are concatenated with the features of the image. Another FC layer then computes a correction value for each object. Finally, the predicted image quality is amended by the SCM values. Experimental results demonstrate that the proposed NR-IQA model achieves state-of-the-art performance. In addition, cross-database evaluation indicates that the proposed model generalizes well.
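The data flow described above can be sketched in a few lines of plain Python. This is only an illustrative sketch, not the authors' implementation: the names `fc` and `assess` are hypothetical, the detector and the two ResNet-18 feature extractors are passed in as stand-in callables, and the abstract does not say how the per-object correction values are combined, so the sketch assumes their mean is added to the base score.

```python
from typing import Callable, List, Sequence


def fc(features: Sequence[float], weights: Sequence[float], bias: float = 0.0) -> float:
    """Single-output fully connected layer: dot product plus bias."""
    if len(features) != len(weights):
        raise ValueError("feature and weight lengths differ")
    return sum(f * w for f, w in zip(features, weights)) + bias


def assess(
    image,
    detect: Callable,        # stand-in for the object detector
    backbone_q: Callable,    # stand-in for the quality-prediction ResNet-18
    backbone_scm: Callable,  # stand-in for the SCM ResNet-18
    w_q: Sequence[float],    # weights of the quality FC layer
    w_scm: Sequence[float],  # weights of the SCM FC layer
) -> float:
    # Step 1: detect objects in the input image.
    objects = detect(image)

    # Step 2: base quality score from whole-image features.
    base = fc(backbone_q(image), w_q)
    if not objects:
        return base  # nothing to correct with

    # Step 3: concatenate image features with each object's features
    # and compute one correction value per object.
    image_features: List[float] = list(backbone_scm(image))
    corrections = [
        fc(image_features + list(backbone_scm(obj)), w_scm) for obj in objects
    ]

    # Step 4 (assumption): amend the base score by the mean correction.
    return base + sum(corrections) / len(corrections)
```

Passing the networks in as callables keeps the sketch independent of any deep learning framework; in practice each stand-in would be replaced by a trained model.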

Acknowledgements

This work was supported in part by the Hong Kong Innovation and Technology Commission (InnoHK Project CIMDA), in part by the General Research Fund-Research Grants Council (GRF-RGC) under Grant 9042816 (CityU 11209819) and Grant 9042958 (CityU 11203820), in part by the National Natural Science Foundation of China under Grants 62176160, 62006158 and 61732011, in part by the Guangdong Basic and Applied Basic Research Foundation under Grant 2022A1515010791, in part by the Natural Science Foundation of Shenzhen (University Stability Support Program) under Grants 20200804193857002 and 20200810150732001, and in part by the Interdisciplinary Innovation Team of Shenzhen University.

Cite this article

Cao, J., Wu, W., Wang, R. et al. No-reference image quality assessment by using convolutional neural networks via object detection. Int. J. Mach. Learn. & Cyber. 13, 3543–3554 (2022). https://doi.org/10.1007/s13042-022-01611-w

DOI: https://doi.org/10.1007/s13042-022-01611-w