Abstract

Pseudo-labeled data is used to address data shortage in few-shot learning, where the quality of the pseudo-labels and the selection of pseudo-labeled data determine classification performance. To obtain enhanced pseudo-labels, we feed diverse inputs to the label network, encouraging it to learn invariant and robust representations and improving its generalization ability. At the same time, a depthwise over-parameterized convolutional layer and a group residual connection with shared parameters accelerate network training and offset the extra training time caused by the diverse inputs. We then propose graded pseudo-labeled data selection, which chooses how much pseudo-labeled data to use according to the label network's performance level; this improves classification accuracy and avoids the high cost of using all the pseudo-labeled data. Finally, we apply the proposed method to the data shortage in food recognition. Experiments show that our method achieves better classification accuracy and generalization ability on few-shot benchmark datasets and on food recognition with few samples.
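The idea of graded selection can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not state the grading rule, so the grade table `GRADES`, the helper names `keep_fraction` and `select_pseudo_labeled`, and the use of the maximum class probability as a confidence score are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

# Hypothetical grade table: (minimum label-network accuracy, fraction of
# pseudo-labeled samples to keep). The values are illustrative assumptions,
# not taken from the paper.
GRADES: Tuple[Tuple[float, float], ...] = ((0.8, 0.8), (0.6, 0.5), (0.0, 0.2))


def keep_fraction(label_net_accuracy: float) -> float:
    """Map the label network's accuracy to the fraction of samples to keep."""
    for min_accuracy, fraction in GRADES:
        if label_net_accuracy >= min_accuracy:
            return fraction
    return 0.0


def select_pseudo_labeled(
    probs: Sequence[Sequence[float]], label_net_accuracy: float
) -> List[Tuple[int, int]]:
    """Return (sample_index, pseudo_label) pairs for the most confident samples.

    `probs` holds one row of class probabilities per unlabeled sample.
    """
    scored = []
    for index, row in enumerate(probs):
        # The pseudo-label is the most probable class; its probability is
        # used as the confidence score (an assumption of this sketch).
        label = max(range(len(row)), key=row.__getitem__)
        scored.append((row[label], index, label))
    # Most confident first; ties are broken by the original sample order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    n_keep = int(len(scored) * keep_fraction(label_net_accuracy))
    return [(index, label) for _, index, label in scored[:n_keep]]


if __name__ == "__main__":
    demo_probs = [[0.9, 0.1], [0.4, 0.6], [0.55, 0.45], [0.2, 0.8], [0.5, 0.5]]
    print(select_pseudo_labeled(demo_probs, label_net_accuracy=0.65))
```

Under these assumed grades, a stronger label network contributes a larger share of its pseudo-labeled samples, while a weaker one contributes only its most confident predictions.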

Data availability statement

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Gao F, Cai L, Yang Z, Song S, Wu C (2022) Multi-distance metric network for few-shot learning. Int J Mach Learn Cybern 13(9):2495–2506

Wang K, Wang X, Zhang T, Cheng Y (2022) Few-shot learning with deep balanced network and acceleration strategy. Int J Mach Learn Cybern 13(1):133–144

Finn C, Abbeel P, Levine S (2017) Model-agnostic meta-learning for fast adaptation of deep networks. In: Proceedings of the International Conference on Machine Learning (ICML), Sydney, AUSTRALIA, pp 1126–1135

Nichol A, Achiam J, Schulman J (2018) On first-order meta-learning algorithms. arXiv:1803.02999

Rusu AA, Rao D, Sygnowski J, et al (2018) Meta-learning with latent embedding optimization. arXiv:1807.05960

Santoro A, Bartunov S, Botvinick M, Wierstra D, Lillicrap T (2016) Meta-learning with memory-augmented neural netowrks. In: Proceedings of the International Conference on Machine Learning (ICML), New York City, NY, USA, pp 1842–1850

Munkhdalai T, Yuan X, Mehri, S et al (2018) Rapid adaptation with conditionally shifted neurons. In: Proceedings of the International Conference on Machine Learning (ICML), Stockholm, SWEDEN, pp 3661–3670

Ravi S, Larochelle H (2017) Optimization as a model for few-shot learning. In: Proceedings of the International Conference on Learning Representations (ICLR), Toulon, FRANCE, https://openreview.net/forum?id=rJY0-Kcll

Li S, Chen D, Liu B, et al (2019) Memory-based neighbourhood embedding for visual recognition. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Koera (South), pp 6101–6110

Vinyals O, Blundell C, Lillicrap T, et al (2016) Matching networks for one shot learning. In: Proceedings of the Advances in Neural Information Processing Systems (NIPS), Barcelona, SPAIN, pp 3630–3638

Snell J, Swersky K, Zemel RS (2017) Prototypical networks for few-shot learning. In: Proceedings of the Advances in Neural Information Processing Systems (NIPS), Long Beach, CA, USA, pp 4077–4087

Sung F, Yang Y, Zhang L, et al (2018) Learning to compare: relation network for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, pp 1199–1208

Oreshkin BN, Rodriguez P, Lacoste A (2018) Tadam: task dependent adaptive metric for improved few-shot learning. In: Proceedings of the Advances in Neural Information Processing Systems (NIPS), Montréal , CANADA, pp 719–729

Antoniou A, Storkey A, Edwards H (2017) Data augmentation generative adversarial networks. arXiv: 1711.04340

Zhang R, Che T, Ghahramani Z et al (2018) Metagan: an adversarial approach to few-shot learning. In: Proceedings of the Advances in Neural Information Processing Systems (NIPS), Montréal, CANADA, pp 2371–2380

Xu W, Guo D, Qian Y, Ding W (2022) Two-way concept-cognitive learning method: a fuzzy-based progressive learning. IEEE Trans Fuzzy Syst. https://doi.org/10.1109/TFUZZ.2022.3216110

Xu W, Li W (2016) Granular computing approach to two-way learning based on formal concept analysis in fuzzy datasets. IEEE Trans Cybern 46(2):366–379

Li K, Zhang Y, Li K, Fu, Y (2020) Adversarial feature hallucination networks for few-shot learning. arXiv: 2003.13193

Kim J, Kim H, Kim G (2020) Model-agnostic boundary-adversarial sampling for test-time generalization in few-shot learning. In: Proceedings of the European Conference on Computer Vision (ECCV), ELECTR NETWORK, pp 599–6175

Chen Z, Fu Y, Wang Y, Ma L, Liu W, Hebert M (2019) Image deformation meta-networks for one-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, pp 8672–8681

Zhang H, Zhang J, Koniusz P (2019) Few-shot learning via saliency-guided hallucination of samples. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, pp 2765–2774

Wang Y, Xu C, Liu C, Zhang L, Fu Y (2020) Instance credibility inference for few-shot learning. arXiv: 2003.11853

Lee K, Lee K, Shin J, Lee H (2019) Network randomization: a simple technique for generalization in deep reinforcement learning. arXiv: 1910.05396v3

Cao J, Li Y, Sun M, et al (2020) DO-Conv: depthwise over-parameterized convolutional layer. arXiv: 2006.12030

Wang X, Yu S (2020) Tied block convolution: leaner and better CNNs with shared thinner filters. arXiv: 2009.12021

Bertinetto L, Henriques J, Torr P, Vedaldi A (2018) Meta-learning with differentiable closed-form solvers. arXiv: 1805.08136

Ren M, Triantafillou E, Ravi S, Snell J, Swersky K, Tenenbaum J, Larochelle H, Zemel R (2018) Meta-learning for semi-supervised few-shot classification. arXiv: 1803.00676

Liu Y, Lee J, Park M, Kim S, Yang E, Hwang S, Yang L (2018) Learning to propagate labels: transductive propagation network for few-shot learning. arXiv: 1805.10002

Simon C, Koniusz P, Nock R, Harandi M (2020) Adaptive subspaces for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), ELECTR NETWORK, pp 4135–4144

Lee H, Hwang S, Shin J (2019) Self-supervised label augmentation via input transformations. arXiv: 1910.05872

Khrulkov V, Mirvakhabova L, Ustinova E, Oseledets I, Lempitsky V. (2019) Hyperbolic image embeddings. arXiv: 1904.02239

Luo Q, Wang L, Lv J, Xiang S, Pan C (2021) Few-shot learning via feature hallucination with variational inference. In: Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), ELECTR NETWORK, pp 3962–3971

Majumder O, Ravichandran A, Maji S, Polito M, Soatto S (2021) Revisiting contrastive learning for few-shot classification. arXiv: 2101.11058

Chen W, Liu Y, Kira Z, Wang Y, Huang J (2019) A closer look at few-shot classification. arXiv: 1904.04232

Zhang Y, Huang S, Peng X, Yang D (2021) Dizygotic conditional variational autoencoder for multi-modal and partial modality absent few-shot learning. arXiv: 2106.14467

Kang D, Kwon H, Min J, Cho M (2021) Relational embedding for few-shot classification. arXiv: 2108.09666

Afrasiyabi A, Lalonde JF, Gagné C (2020) Mixture-based feature space learning for few-shot image classification. arXiv: 2011.11872

Ye H, Ming L, Zhan D, Chao W (2021) Few-shot learning with a strong teacher. arXiv: 2107.00197

Yuan M, Wang W, Wang T, Cai C, Xu Q, Lu, T (2021) Learning class-level prototypes for few-shot learning. arXiv: 2108.11072

Dhillon G, Chaudhari P, Ravichandran A, Soatto S (2019) A baseline for few-shot image classification. arXiv: 1909.02729

Lee E, Huang C, Lee C. (2021) Few-shot and continual learning with attentive independent mechanisms. arXiv: 2107.14053

Schwarcz S, Rambhatla S, Chellappa R (2021) Self-denoising neural networks for few shot learning. arXiv: 2110.13386

Li W, Wang L, Huo J, Shi Y, Gao Y, Luo J (2020) Asymmetric distribution measure for few-shot learning. arXiv: 2002.00153

Yu Z, Raschka S (2020) Looking back to lower-level information in few-shot learning. arXiv: 2005.13638

Lee K, Maij S, Ravichandran A, Soatto S (2019) Meta-learning with differentiable convex optimization. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, pp 10649–10657

Ren M, Liao R, Fetaya E, Zemel R. (2018) Incremental few-shot learning with attention attractor networks. arXiv: 1810.07218

Qiao L, Shi Y, Li J, Tian Y, Huang T, Wang Y (2019) Transductive episodic-wise adaptive metric for few-shot learning. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea (South), pp 3602–3611

Ravichandran A, Bhotika R, Soatto S (2019) Few-shot learning with embedded class models and shot-free meta training. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea (South), pp 331–339

Tian Y, Wang Y, Krishnan D, Tenenbau J, Isola P (2020) Rethinking few-shot image classification: a good embedding is all you need? In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), ELECTR NETWORK, pp 266–282

Xu W, Xu Y, Wang H, Tu Z (2021) Attentional constellation nets for few-shot learning. In: Proceedings of the International Conference on Learning Representations (ICLR), ELECTR NETWORK, https://openreview.net/forum?id=vujTf_I8Kmc

Lazarou M, Avrithis Y, Stathaki T (2021) Few-shot learning via tensor hallucination. arXiv: 2104.09467

Sun Q, Liu Y, Chen Z, Tat-Seng C, Bernt S (2022) Meta-transfer learning through hard tasks. IEEE Trans Pattern Anal Mach Intell 44(3):1443–1456

Lifchitz Y, Avrithis Y, Picard S, Bursuc A (2019) Dense classification and implanting for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, pp 9250–9259

Afrasiyabi A, Lalonde JF, Gagne C (2020) Associative alignment for few-shot image classification. In: Proceedings of the European Conference on Computer Vision (ECCV), ELECTR NETWORK, pp 18–35

Wah C, Branson S, Welinder P, Perona P, Belongie S (2011) “The caltech-ucsd birds-200–2011 dataset. Computation& Neural Systems Technical Report. CNS-TR-2011–001

Hilliard N, Phillips L, Howland S, Yankov A, Corley C, Hodas N (2018) Few-shot learning with metric-agnostic conditional embeddings. arXiv: 1802.04376

Patacchiola M, Turner J, Crowley E, O’Boyle M, Storkey A (2019) Bayesian meta-learning for the few-shot setting via deep kernels. arXiv: 1910.05199

Lazarou M, Avrithis Y, Stathaki T (2021) Tensor feature hallucination for few-shot learning. arXiv: 2106.05321

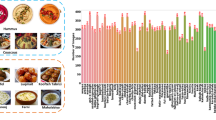

Bossard L, Guillaumin M, Gool L (2014) Food-101–mining discriminative components withrandom forests,” In: Proceedings of the European Conference on Computer Vision (ECCV), Zurich, SWITZERLAND, pp 446–461

Chen X, Zhu Y, Zhou H, Diao L, Wang D (2017) ChineseFoodNet: a large-scale image dataset for chinese food recognition. arXiv: 1705.02743

Jiang S, Min W, Lv Y, Liu L (2020) Few-shot food recognition via multi-view representation learning. ACM Trans Multimed Comput Commun Appl 16(3):1–20

Funding

This work was supported by the National Natural Science Foundation of China under Grant 62176259 and Grant 61976215.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, K., Wang, X. & Cheng, Y. Few-shot learning based on enhanced pseudo-labels and graded pseudo-labeled data selection. Int. J. Mach. Learn. & Cyber. 14, 1783–1795 (2023). https://doi.org/10.1007/s13042-022-01727-z

DOI: https://doi.org/10.1007/s13042-022-01727-z