Abstract

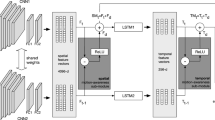

Action recognition, an active topic in video understanding, aims to recognize human actions in videos. The critical step is to model spatio-temporal information and extract key action cues. To this end, we propose a simple and efficient network, dubbed ESTI, which consists of two core modules: the Local Motion Extraction module highlights short-term temporal context, while the Global Multi-scale Feature Enhancement module strengthens spatio-temporal and channel features to model long-term information. By appending ESTI to a 2D ResNet backbone, our network can reason about different kinds of actions with various amplitudes in videos. The network is implemented with Python 3.7 and PyTorch 1.8 on two GeForce RTX 3090 GPUs. Extensive experiments on five mainstream datasets verify the effectiveness of our network: ESTI outperforms most state-of-the-art methods in terms of accuracy, computational cost, and model size. In addition, we visualize the feature representations of our model with Grad-CAM to qualitatively examine its predictions.
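The abstract does not specify how the Local Motion Extraction module computes its short-term cue. As a generic illustration only, and not the ESTI module itself, the sketch below computes differences between adjacent frames, the kind of short-term motion signal used by difference-based methods such as TDN (Wang et al. 2021). The function name `temporal_difference` and the (T, C, H, W) frame layout are assumptions made for this example.

```python
import numpy as np


def temporal_difference(frames: np.ndarray) -> np.ndarray:
    """Return differences between consecutive frames (hypothetical helper).

    frames: array of shape (T, C, H, W) holding T frames of one clip.
    Returns an array of shape (T - 1, C, H, W) whose entry t is
    frames[t + 1] - frames[t], a crude short-term motion cue.
    This is an illustrative sketch, not the ESTI Local Motion Extraction module.
    """
    if frames.ndim != 4:
        raise ValueError("expected frames with shape (T, C, H, W)")
    if frames.shape[0] < 2:
        raise ValueError("need at least two frames")
    # Cast to float so that uint8 pixel values do not wrap around on subtraction.
    frames = frames.astype(np.float32)
    return frames[1:] - frames[:-1]


# Toy clip: 4 frames, 1 channel, 2x2 pixels; one bright pixel moves right, then down, then stays.
clip = np.zeros((4, 1, 2, 2), dtype=np.uint8)
clip[0, 0, 0, 0] = 255
clip[1, 0, 0, 1] = 255
clip[2, 0, 1, 1] = 255
clip[3, 0, 1, 1] = 255  # static between frames 2 and 3

diffs = temporal_difference(clip)
print(diffs.shape)  # (3, 1, 2, 2)
# Per-step motion energy: 510, 510 and 0, i.e. motion only in the first two steps.
print(np.abs(diffs).sum(axis=(1, 2, 3)))
```

Static regions cancel out while moving regions produce large responses, which is why frame differencing is a cheap stand-in for optical flow when modeling short-term temporal context.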

Data Availability

The raw/processed data required to reproduce these findings will be shared once this paper has been accepted.



Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Jiang, Z., Zhang, Y. & Hu, S. ESTI: an action recognition network with enhanced spatio-temporal information. Int. J. Mach. Learn. & Cyber. 14, 3059–3070 (2023). https://doi.org/10.1007/s13042-023-01820-x


DOI: https://doi.org/10.1007/s13042-023-01820-x