Abstract

Probabilistic error/loss performance evaluation instruments that were originally used for regression and time series forecasting are also applied to some binary-class or multi-class classifiers, such as artificial neural networks. This study aims to systematically assess probabilistic instruments for binary classification performance evaluation using a proposed two-stage benchmarking method called BenchMetrics Prob. The method employs five criteria and fourteen simulation cases based on hypothetical classifiers on synthetic datasets. The goal is to reveal specific weaknesses of performance instruments and to identify the most robust instrument in binary classification problems. The BenchMetrics Prob method was tested on 31 instruments and instrument variants, and the results identified four instruments as the most robust in a binary classification context: Sum Squared Error (SSE), Mean Squared Error (MSE), Root Mean Squared Error (RMSE, a variant of MSE), and Mean Absolute Error (MAE). Because SSE is less interpretable owing to its [0, ∞) range, MAE, which lies in [0, 1], is the most convenient and robust probabilistic metric for generic purposes. In classification problems where large errors matter more than small errors, RMSE may be a better choice. Additionally, the results showed that instrument variants with summarization functions other than the mean (e.g., median and geometric mean), LogLoss, and the regression error instruments with relative, percentage, or symmetric-percentage subtypes, such as Mean Absolute Percentage Error (MAPE), Symmetric MAPE (sMAPE), and Mean Relative Absolute Error (MRAE), were less robust and should be avoided. These findings suggest that researchers should employ robust probabilistic metrics when measuring and reporting performance in binary classification problems.

Data availability

The datasets generated and/or analyzed during the current study are available in the GitHub repository, https://github.com/gurol/BenchMetricsProb.

Notes

For ten negative samples (e.g., i = 1, …, 10), ci = 0; with an example score pi = 0.49, |ci − pi| = 0.49. For the remaining ten positive samples (e.g., i = 11, …, 20), ci = 1; with an example score pi = 0.51, |ci − pi| = 0.49. Hence, MAE = 0.49.
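The arithmetic in this note can be checked with a short Python sketch. It assumes scores in [0, 1], a decision threshold θ = 0.5, and that a score at or above θ is predicted positive; the variable names are illustrative and not taken from the BenchMetrics Prob tool. Alongside MAE, it computes SSE, MSE, and RMSE for the same predictions, plus the crisp accuracy after thresholding.

```python
import math

# Note example: ten negatives scored 0.49 and ten positives scored 0.51.
labels = [0] * 10 + [1] * 10
scores = [0.49] * 10 + [0.51] * 10
theta = 0.5  # assumed decision threshold (midpoint of [0, 1])

abs_errors = [abs(c - p) for c, p in zip(labels, scores)]
sq_errors = [(c - p) ** 2 for c, p in zip(labels, scores)]
n = len(labels)

mae = sum(abs_errors) / n  # mean absolute error, bounded by [0, 1]
sse = sum(sq_errors)       # sum squared error, grows with n
mse = sse / n              # mean squared error, bounded by [0, 1]
rmse = math.sqrt(mse)      # root mean squared error, bounded by [0, 1]

# Crisp accuracy after thresholding the same scores.
hard = [1 if p >= theta else 0 for p in scores]
acc = sum(h == c for h, c in zip(hard, labels)) / n

print(f"MAE={mae:.4f} SSE={sse:.4f} MSE={mse:.4f} RMSE={rmse:.4f} ACC={acc}")
# prints: MAE=0.4900 SSE=4.8020 MSE=0.2401 RMSE=0.4900 ACC=1.0
```

Every sample lies on the correct side of θ, so the crisp accuracy is 1.0 while MAE is 0.49. Repeating the same twenty predictions twice would double SSE to about 9.604 but leave MAE, MSE, and RMSE unchanged, which illustrates why SSE's [0, ∞) range is harder to interpret.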

Also known as Measurement Error, Observational Error, or Mean Bias Error (MBE).

MdSE: from 1 to 0 with three unique values (five 1s, one 0.5, and five 0s); MdAE: from 1 to 0 with three unique values (five 1s, one 0.5, and five 0s); and MdRAE: from 2 to 0 with three unique values (five 2s, one 1, and five 0s).
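The pattern in this note (five 1s, one 0.5, five 0s) is what median aggregation produces when every per-sample error is either 0 or 1. The sketch below assumes, purely for illustration, a hypothetical case with ten samples in which the number of fully misclassified samples (error 1) falls from 10 to 0; the note itself does not state the simulation setup. Because squaring leaves 0 and 1 unchanged, MdSE equals MdAE here, whereas the mean-based MAE changes at every step.

```python
from statistics import median

n = 10  # hypothetical number of samples
mdae_values, mae_values = [], []
for wrong in range(n, -1, -1):  # 10, 9, ..., 0 fully misclassified samples
    errors = [1.0] * wrong + [0.0] * (n - wrong)  # |c_i - p_i| per sample
    mdae_values.append(median(errors))  # median aggregation (MdAE, also MdSE here)
    mae_values.append(sum(errors) / n)  # mean aggregation (MAE)

print(mdae_values)  # [1.0, 1.0, 1.0, 1.0, 1.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0]
print(mae_values)   # [1.0, 0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.0]
```

With an even number of samples, the median is the average of the two middle errors, which is where the single 0.5 comes from.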

References

Japkowicz N, Shah M (2011) Evaluating learning algorithms: a classification perspective. Cambridge University Press, Cambridge

Abdualgalil B, Abraham S (2020) Applications of machine learning algorithms and performance comparison: a review. In: International Conference on Emerging Trends in Information Technology and Engineering, ic-ETITE 2020. pp 1–6

Qi J, Du J, Siniscalchi SM et al (2020) On mean absolute error for deep neural network based vector-to-vector regression. IEEE Signal Process Lett 27:1485–1489. https://doi.org/10.1109/LSP.2020.3016837

Karunasingha DSK (2022) Root mean square error or mean absolute error? Use their ratio as well. Inf Sci (Ny) 585:609–629. https://doi.org/10.1016/j.ins.2021.11.036

Pham-Gia T, Hung TL (2001) The mean and median absolute deviations. Math Comput Model 34:921–936. https://doi.org/10.1016/S0895-7177(01)00109-1

Zhang Z, Ding S, Sun Y (2020) A support vector regression model hybridized with chaotic krill herd algorithm and empirical mode decomposition for regression task. Neurocomputing 410:185–201. https://doi.org/10.1016/j.neucom.2020.05.075

Atsalakis GS, Valavanis KP (2009) Surveying stock market forecasting techniques—part II: soft computing methods. Expert Syst Appl 36:5932–5941. https://doi.org/10.1016/j.eswa.2008.07.006

Ru Y, Li B, Liu J, Chai J (2018) An effective daily box office prediction model based on deep neural networks. Cogn Syst Res 52:182–191. https://doi.org/10.1016/j.cogsys.2018.06.018

Zhang X, Zhang T, Young AA, Li X (2014) Applications and comparisons of four time series models in epidemiological surveillance data. PLoS ONE 9:1–16. https://doi.org/10.1371/journal.pone.0088075

Huang C-J, Chen Y-H, Ma Y, Kuo P-H (2020) Multiple-Input deep convolutional neural network model for COVID-19 Forecasting in China (preprint). medRxiv. https://doi.org/10.1101/2020.03.23.20041608

Fan Y, Xu K, Wu H et al (2020) Spatiotemporal modeling for nonlinear distributed thermal processes based on KL decomposition, MLP and LSTM network. IEEE Access 8:25111–25121. https://doi.org/10.1109/ACCESS.2020.2970836

Hmamouche Y, Lakhal L, Casali A (2021) A scalable framework for large time series prediction. Knowl Inf Syst. https://doi.org/10.1007/s10115-021-01544-w

Shakhari S, Banerjee I (2019) A multi-class classification system for continuous water quality monitoring. Heliyon 5:e01822. https://doi.org/10.1016/j.heliyon.2019.e01822

Sumaiya Thaseen I, Aswani Kumar C (2017) Intrusion detection model using fusion of chi-square feature selection and multi class SVM. J King Saud Univ - Comput Inf Sci 29:462–472. https://doi.org/10.1016/j.jksuci.2015.12.004

Ling QH, Song YQ, Han F et al (2019) An improved learning algorithm for random neural networks based on particle swarm optimization and input-to-output sensitivity. Cogn Syst Res 53:51–60. https://doi.org/10.1016/j.cogsys.2018.01.001

Pwasong A, Sathasivam S (2016) A new hybrid quadratic regression and cascade forward backpropagation neural network. Neurocomputing 182:197–209. https://doi.org/10.1016/j.neucom.2015.12.034

Chen T (2014) Combining statistical analysis and artificial neural network for classifying jobs and estimating the cycle times in wafer fabrication. Neural Comput Appl 26:223–236. https://doi.org/10.1007/s00521-014-1739-1

Cano JR, Gutiérrez PA, Krawczyk B et al (2019) Monotonic classification: An overview on algorithms, performance measures and data sets. Neurocomputing 341:168–182. https://doi.org/10.1016/j.neucom.2019.02.024

Jiao J, Zhao M, Lin J, Liang K (2020) A comprehensive review on convolutional neural network in machine fault diagnosis. Neurocomputing 417:36–63. https://doi.org/10.1016/j.neucom.2020.07.088

Cecil D, Campbell-Brown M (2020) The application of convolutional neural networks to the automation of a meteor detection pipeline. Planet Space Sci 186:104920. https://doi.org/10.1016/j.pss.2020.104920

Banan A, Nasiri A, Taheri-Garavand A (2020) Deep learning-based appearance features extraction for automated carp species identification. Aquac Eng 89:102053. https://doi.org/10.1016/j.aquaeng.2020.102053

Afan HA, Ibrahem Ahmed Osman A, Essam Y et al (2021) Modeling the fluctuations of groundwater level by employing ensemble deep learning techniques. Eng Appl Comput Fluid Mech 15:1420–1439. https://doi.org/10.1080/19942060.2021.1974093

Lu Z, Lv W, Cao Y et al (2020) LSTM variants meet graph neural networks for road speed prediction. Neurocomputing 400:34–45. https://doi.org/10.1016/j.neucom.2020.03.031

Canbek G, Taskaya Temizel T, Sagiroglu S (2022) PToPI: a comprehensive review, analysis, and knowledge representation of binary classification performance measures/metrics. SN Comput Sci 4:1–30. https://doi.org/10.1007/s42979-022-01409-1

Armstrong JS (2001) Principles of forecasting: a handbook for researchers and practitioners. Springer, Boston

Canbek G, Taskaya Temizel T, Sagiroglu S (2021) BenchMetrics: A systematic benchmarking method for binary-classification performance metrics. Neural Comput Appl 33:14623–14650. https://doi.org/10.1007/s00521-021-06103-6

Cohen J (1960) A coefficient of agreement for nominal scales. Educ Psychol Meas 20:37–46. https://doi.org/10.1177/001316446002000104

Matthews BW (1975) Comparison of the predicted and observed secondary structure of T4 phage lysozyme. BBA Protein Struct 405:442–451. https://doi.org/10.1016/0005-2795(75)90109-9

Hodson TO, Over TM, Foks SS (2021) Mean squared error, deconstructed. J Adv Model Earth Syst 13:1–10. https://doi.org/10.1029/2021MS002681

Ferri C, Hernández-Orallo J, Modroiu R (2009) An experimental comparison of performance measures for classification. Pattern Recognit Lett 30:27–38. https://doi.org/10.1016/j.patrec.2008.08.010

Shen F, Zhao X, Li Z et al (2019) A novel ensemble classification model based on neural networks and a classifier optimisation technique for imbalanced credit risk evaluation. Phys A Stat Mech Appl. https://doi.org/10.1016/j.physa.2019.121073

Reddy CK, Park JH (2011) Multi-resolution boosting for classification and regression problems. Knowl Inf Syst 29:435–456. https://doi.org/10.1007/s10115-010-0358-0

Smucny J, Davidson I, Carter CS (2021) Comparing machine and deep learning-based algorithms for prediction of clinical improvement in psychosis with functional magnetic resonance imaging. Hum Brain Mapp 42:1197–1205. https://doi.org/10.1002/hbm.25286

Zammito F (2019) What’s considered a good Log Loss in Machine Learning? https://medium.com/@fzammito/whats-considered-a-good-log-loss-in-machine-learning-a529d400632d. Accessed 15 Jul 2020

Baldwin B (2010) Evaluating with Probabilistic Truth: Log Loss vs. 0/1 Loss. http://lingpipe-blog.com/2010/11/02/evaluating-with-probabilistic-truth-log-loss-vs-0-1-loss/. Accessed 20 May 2020

Sokolova M, Lapalme G (2009) A systematic analysis of performance measures for classification tasks. Inf Process Manag 45:427–437. https://doi.org/10.1016/j.ipm.2009.03.002

Pereira RB, Plastino A, Zadrozny B, Merschmann LHC (2018) Correlation analysis of performance measures for multi-label classification. Inf Process Manag 54:359–369. https://doi.org/10.1016/j.ipm.2018.01.002

Kolo B (2011) Binary and multiclass classification. Weatherford Press

Carbonero-Ruz M, Martínez-Estudillo FJ, Fernández-Navarro F et al (2017) A two dimensional accuracy-based measure for classification performance. Inf Sci (Ny) 382–383:60–80. https://doi.org/10.1016/j.ins.2016.12.005

Madjarov G, Gjorgjevikj D, Dimitrovski I, Džeroski S (2016) The use of data-derived label hierarchies in multi-label classification. J Intell Inf Syst 47:57–90. https://doi.org/10.1007/s10844-016-0405-8

Hossin M, Sulaiman MN (2015) A review on evaluation metrics for data classification evaluations. Int J Data Min Knowl Manag Process 5:1–11. https://doi.org/10.5121/ijdkp.2015.5201

Tavanaei A, Maida A (2019) BP-STDP: approximating backpropagation using spike timing dependent plasticity. Neurocomputing 330:39–47. https://doi.org/10.1016/j.neucom.2018.11.014

Mostafa SA, Mustapha A, Mohammed MA et al (2019) Examining multiple feature evaluation and classification methods for improving the diagnosis of Parkinson’s disease. Cogn Syst Res 54:90–99. https://doi.org/10.1016/j.cogsys.2018.12.004

Di Nardo F, Morbidoni C, Cucchiarelli A, Fioretti S (2021) Influence of EMG-signal processing and experimental set-up on prediction of gait events by neural network. Biomed Signal Process Control 63:102232. https://doi.org/10.1016/j.bspc.2020.102232

Alharthi H, Inkpen D, Szpakowicz S (2018) A survey of book recommender systems. J Intell Inf Syst 51:139–160. https://doi.org/10.1007/s10844-017-0489-9

Pakdaman Naeini M, Cooper GF (2018) Binary classifier calibration using an ensemble of piecewise linear regression models. Knowl Inf Syst 54:151–170. https://doi.org/10.1007/s10115-017-1133-2

Botchkarev A (2019) A new typology design of performance metrics to measure errors in machine learning regression algorithms. Interdiscip J Inform Knowledge Manag 14:45–79. https://doi.org/10.28945/4184

Hyndman RJ, Koehler AB (2006) Another look at measures of forecast accuracy. Int J Forecast 22:679–688. https://doi.org/10.1016/j.ijforecast.2006.03.001

Tofallis C (2015) A better measure of relative prediction accuracy for model selection and model estimation. J Oper Res Soc 66:1352–1362. https://doi.org/10.1057/jors.2014.103

Shin Y (2017) Time series analysis in the social sciences: the fundamentals. University of California Press, Oakland, pp 90–105

Flach P (2019) Performance evaluation in machine learning: The good, the bad, the ugly and the way forward. In: 33rd AAAI Conference on Artificial Intelligence. Honolulu, Hawaii

Kline DM, Berardi VL (2005) Revisiting squared-error and cross-entropy functions for training neural network classifiers. Neural Comput Appl 14:310–318. https://doi.org/10.1007/s00521-005-0467-y

Ghosh A, Himanshu Kumar B, Sastry PS (2017) Robust loss functions under label noise for deep neural networks. In: Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence (AAAI-17). Association for the Advancement of Artificial Intelligence, San Francisco, California USA, pp 1919–1925

Kumar H, Sastry PS (2019) Robust loss functions for learning multi-class classifiers. In: Proceedings - 2018 IEEE International Conference on Systems, Man, and Cybernetics, SMC 2018. Institute of Electrical and Electronics Engineers Inc., pp 687–692

Canbek G, Sagiroglu S, Temizel TT, Baykal N (2017) Binary classification performance measures/metrics: A comprehensive visualized roadmap to gain new insights. In: 2017 International Conference on Computer Science and Engineering (UBMK). IEEE, Antalya, Turkey, pp 821–826

Kim S, Kim H (2016) A new metric of absolute percentage error for intermittent demand forecasts. Int J Forecast 32:669–679. https://doi.org/10.1016/j.ijforecast.2015.12.003

Ayzel G, Heistermann M, Sorokin A et al (2019) All convolutional neural networks for radar-based precipitation nowcasting. In: Procedia Computer Science. Elsevier B.V., pp 186–192

Xu B, Ouenniche J (2012) Performance evaluation of competing forecasting models: a multidimensional framework based on MCDA. Expert Syst Appl 39:8312–8324. https://doi.org/10.1016/j.eswa.2012.01.167

Khan A, Yan X, Tao S, Anerousis N (2012) Workload characterization and prediction in the cloud: A multiple time series approach. In: Proceedings of the 2012 IEEE Network Operations and Management Symposium, NOMS 2012. pp 1287–1294

Gwanyama PW (2004) The HM-GM-AM-QM inequalities. Coll Math J 35:47–50

Prestwich S, Rossi R, Armagan Tarim S, Hnich B (2014) Mean-based error measures for intermittent demand forecasting. Int J Prod Res 52:6782–6791. https://doi.org/10.1080/00207543.2014.917771

Luque A, Carrasco A, Martín A, de las Heras A (2019) The impact of class imbalance in classification performance metrics based on the binary confusion matrix. Pattern Recognit 91:216–231. https://doi.org/10.1016/j.patcog.2019.02.023

Trevisan V (2022) Comparing robustness of MAE, MSE and RMSE. In: Towar. Data Sci. https://towardsdatascience.com/comparing-robustness-of-mae-mse-and-rmse-6d69da870828. Accessed 6 Feb 2023

Hodson TO (2022) Root-mean-square error (RMSE) or mean absolute error (MAE): when to use them or not. Geosci Model Dev 15:5481–5487. https://doi.org/10.5194/gmd-15-5481-2022

Tabataba FS, Chakraborty P, Ramakrishnan N et al (2017) A framework for evaluating epidemic forecasts. BMC Infect Dis. https://doi.org/10.1186/s12879-017-2365-1

Gong M (2021) A novel performance measure for machine learning classification. Int J Manag Inf Technol 13:11–19. https://doi.org/10.5121/ijmit.2021.13101

Ethics declarations

Conflict of interest

The author declares that he has no conflicts of interest or competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Preliminaries

Classification and Binary Classification: Classification is a specific problem in machine learning in which a classifier (i.e. a computer program) improves its performance by learning from experience. In a supervised approach, the experience is gained from labeled examples (i.e. a training dataset) of one or more classes with common properties or characteristics. In binary (two-class) classification, a classifier assigns a given example to one of two classes, generally named positive (e.g., malicious software or spam) and negative (e.g., benign software or non-spam).

Classification Performance and Confusion Matrix: The performance of the trained classifier (i.e. the degree to which it predicts the labels of known examples) is then improved or evaluated on different labeled examples (i.e. validation or test datasets). At this stage, the classifier is expected to be ready to predict the class of new, unlabeled instances. The binary classification performance on training, validation, or test datasets is presented by a confusion matrix, also known as a “2 × 2 contingency table” or “four-fold table” (i.e. the numbers of correct and incorrect classifications for the positive and negative classes).
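As a minimal sketch of how the four cells of a binary confusion matrix are counted, the Python below thresholds prediction scores into hard labels and tallies true positives (TP), false negatives (FN), false positives (FP), and true negatives (TN). The threshold θ = 0.5 and the convention that a score equal to θ is predicted positive are assumptions for illustration.

```python
def confusion_matrix(labels, scores, theta=0.5):
    """Return (TP, FN, FP, TN) for 0/1 labels and scores thresholded at theta."""
    tp = fn = fp = tn = 0
    for c, p in zip(labels, scores):
        predicted_positive = p >= theta  # assumed tie convention: p == theta is positive
        if c == 1 and predicted_positive:
            tp += 1
        elif c == 1:
            fn += 1
        elif predicted_positive:
            fp += 1
        else:
            tn += 1
    return tp, fn, fp, tn

labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.3, 0.6, 0.2, 0.7, 0.4]
print(confusion_matrix(labels, scores))  # (2, 1, 1, 2)
```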

1.1 Confusion-matrix-derived instruments

Confusion-matrix-derived instruments are a convenient, familiar, and frequently used instrument category. Along with well-known metrics such as accuracy (ACC), true positive rate (TPR), and F1, more specific metrics such as Cohen’s Kappa (CK) [27] and the Matthews Correlation Coefficient (MCC) [28] have been used to evaluate crisp classifiers, which assign each instance definitively to either the positive (value: one) or the negative (value: zero) class (also known as a “hard label”) [24, 55]. The performance measured by these instruments can be interpreted as follows:

External: They present observed results without an explicit connection to internal design parameters. The classifier is modeled with a single/final optimum configuration (i.e. a model threshold).

Production-ready: They provide an estimate of the classifier’s performance in a production environment for the intended problem domain when compared to other classifiers.

Kinetic: They summarize a classifier’s performance on one specific application to the samples of a dataset (e.g., the ACC or MSE value measured in the first run or iteration of k-fold cross-validation).
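For reference, the crisp metrics named above can be computed from the four confusion-matrix counts alone. The sketch below uses their standard definitions; returning NaN when a denominator is zero is an assumed convention, since implementations differ on how undefined ratios are reported.

```python
import math

def crisp_metrics(tp, fn, fp, tn):
    """ACC, TPR, F1, Cohen's kappa (CK) and MCC from confusion-matrix counts."""
    n = tp + fn + fp + tn
    acc = (tp + tn) / n
    tpr = tp / (tp + fn) if tp + fn else math.nan
    f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else math.nan
    # Chance agreement for kappa, from the predicted and actual class marginals.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    ck = (acc - pe) / (1 - pe) if pe != 1 else math.nan
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else math.nan
    return {"ACC": acc, "TPR": tpr, "F1": f1, "CK": ck, "MCC": mcc}

metrics = crisp_metrics(tp=2, fn=1, fp=1, tn=2)
print(metrics)  # ACC = TPR = F1 = 2/3, CK = MCC = 1/3
```

Because these metrics see only the four counts, any scores that produce the same hard labels (for example 0.51 and 0.99 for a positive instance) yield identical values, which is what evaluating a classifier as crisp means in practice.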

1.2 Graphical-based instruments

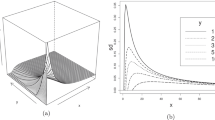

Graphical-based performance instruments are not based on a single instance of confusion-matrix elements obtained from a specific application. Instead, they present a panorama of a classifier’s performance by varying a decision threshold (i.e. over the full operating range of a classifier) in terms of metric pairs that involve a trade-off (e.g., x: FPR and y: TPR for the ROC, receiver operating characteristic, curve) [1]. A graph is used to visualize this variation, and the area under the curve provides a single value that summarizes it (e.g., AUCROC, Area Under the ROC Curve) [66]. These instruments represent the classifiers’ internal capability across different possible settings and provide insight into the classifiers’ potential during model development. However, since a classifier is eventually deployed with a single decision threshold in a production environment, a confusion-matrix-derived instrument (e.g., ACC) and/or a probabilistic error/loss instrument (e.g., MSE) should be used and reported to represent the final performance. A graphical-based instrument (e.g., AUCPR) can also be included to show the classifiers' potential under different decision thresholds.
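To make the threshold sweep concrete, the sketch below computes ROC points by treating each distinct score as a threshold (a score at or above the threshold is predicted positive) and integrates them with the trapezoidal rule. It is a from-scratch illustration that is quadratic in the number of samples and assumes both classes are present; in practice a library routine such as scikit-learn's `roc_curve` and `roc_auc_score` would normally be used.

```python
def roc_points(labels, scores):
    """(FPR, TPR) pairs obtained by sweeping the threshold over each distinct score."""
    pos = sum(labels)
    neg = len(labels) - pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for c, p in zip(labels, scores) if p >= t and c == 1)
        fp = sum(1 for c, p in zip(labels, scores) if p >= t and c == 0)
        points.append((fp / neg, tp / pos))
    return points

def auc_trapezoid(points):
    """Area under a piecewise-linear curve given as (x, y) points sorted by x."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
pts = roc_points(labels, scores)
print(pts)                 # [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
print(auc_trapezoid(pts))  # 0.75
```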

Appendix B

2.1 Probabilistic error/loss instruments’ equations

See Table 10.

Appendix C

3.1 Probabilistic error instruments aggregation and error function frequency distribution

Table 11 lists the frequency distribution of the aggregation (g) and error (ei) functions described in Table 1. The most frequently used aggregation functions are the mean and the square(d) mean, and the most frequently used error functions (underlined) are absolute and percentage errors.
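The decomposition of an instrument into an error function ei and an aggregation function g can be sketched by composing the two. The function names below are illustrative assumptions, since Table 1 is not reproduced here; the sketch covers only absolute and squared errors combined with mean, median, and sum aggregation, with RMSE taken as the square root of MSE.

```python
import math
from statistics import median

def absolute_error(c, p):
    return abs(c - p)

def squared_error(c, p):
    return (c - p) ** 2

def mean(errors):
    return sum(errors) / len(errors)

def instrument(aggregate, error, labels, scores):
    """Apply the error function per instance, then aggregate into one figure."""
    return aggregate([error(c, p) for c, p in zip(labels, scores)])

labels = [0, 1, 1, 0]
scores = [0.2, 0.7, 0.4, 0.1]

mae = instrument(mean, absolute_error, labels, scores)     # mean of |c - p|
mdae = instrument(median, absolute_error, labels, scores)  # median of |c - p|
sse = instrument(sum, squared_error, labels, scores)       # sum of (c - p)^2
mse = instrument(mean, squared_error, labels, scores)      # mean of (c - p)^2
rmse = math.sqrt(mse)                                      # square root of MSE
print(round(mae, 4), round(mdae, 4), round(sse, 4), round(mse, 4), round(rmse, 4))
# prints: 0.3 0.25 0.5 0.125 0.3536
```

Swapping either argument of `instrument` yields another cell of the aggregation × error grid, which is how instrument variants such as MdAE arise from MAE.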

Appendix D

4.1 Introduction to BenchMetrics Prob calculator and simulation tool

BenchMetrics Prob, depicted in Fig. 2, is a spreadsheet-based tool designed to prepare cases for evaluating the robustness of probabilistic error/loss instruments. The tool is available online at https://github.com/gurol/BenchMetricsProb. Its user interface is divided into nine parts:

I. Class label/prediction score values settings

II. Ground truth/prediction input method settings

III. Synthetic dataset instances

IV. Hypothetical classifier predictions

V. Classification examples/outputs/confusions

VI. Confusion matrix and other measures

VII. Performance metrics/measure results

VIII. Different error function results

IX. Probabilistic error/loss performance instrument results

Part I. Class label/prediction score values settings

The first part of the tool allows users to define the class label and prediction score values. By default, the tool is set up for conventional binary classification, as shown in Fig. 5a, where the minimum prediction score (min(pi)) for the negative class is set to 0 and the maximum prediction score (max(pi)) for the positive class is set to 1.

The decision threshold θ (class-decision boundary) is set to the middle of the [0, 1] prediction score interval (θ = 0.5). However, users can change these values by editing the cells with blue text/background color. For example, to avoid division-by-zero errors (e.g., in percentage error instruments, whose denominator is the class label ci), users can set min(pi) for the negative class to 1, max(pi) for the positive class to 2, and θ = 1.5.
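The effect of this setting can be sketched as follows. The Python below assumes a percentage error of the form |ci − pi| / |ci| averaged and multiplied by 100 (a common MAPE definition; the paper's exact equation is in its own tables), shows that it fails for 0/1 labels, and then shifts labels, scores, and θ by one. The data are made up for illustration.

```python
def mape(labels, scores):
    """Mean absolute percentage error: |c - p| / |c|, averaged, times 100."""
    return 100 * sum(abs(c - p) / abs(c) for c, p in zip(labels, scores)) / len(labels)

def mae(labels, scores):
    return sum(abs(c - p) for c, p in zip(labels, scores)) / len(labels)

labels = [0, 1, 1, 0]
scores = [0.2, 0.7, 0.4, 0.1]

try:
    mape(labels, scores)
except ZeroDivisionError:
    print("MAPE undefined: a negative-class label of 0 appears as a denominator")

# Shift labels and scores from [0, 1] to [1, 2] and the threshold from 0.5 to 1.5.
shifted_labels = [c + 1 for c in labels]
shifted_scores = [p + 1 for p in scores]
theta, shifted_theta = 0.5, 1.5

print(round(mape(shifted_labels, shifted_scores), 2))  # 18.75
# Hard decisions are unchanged by the shift.
print([p >= theta for p in scores] == [p >= shifted_theta for p in shifted_scores])  # True
```

The shift leaves absolute and squared errors unchanged, since ci − pi is invariant when the same constant is added to both, but percentage errors now depend on the chosen offset.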

Part II. Ground truth/prediction input method settings

In the second part of the tool, shown in Fig. 5b, users can choose between “manual” and “random” input methods for the ground truth and prediction values. If the random input method is selected, the tool generates values according to the class label and prediction score values defined in Part I. Users can also control the classifier’s predictions by setting TPR and/or TNR to be above a given value. Setting these values to 0.5 makes the tool generate purely random values, whereas setting a higher value makes the classifier predict stratified random values. Additionally, users can specify the number of samples (Sn) by defining the starting (e.g., 21) and ending (e.g., 40) row numbers in the sheet. Note that recalculating the sheet with the SHIFT+F9 shortcut changes the random values.
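The spreadsheet's own formulas are not given here, so the Python below is only one hypothetical way to generate random ground truth together with a hypothetical classifier whose per-class hit probability is controlled by a parameter: 0.5 gives purely random predictions and 1.0 places every score on the correct side of θ. The function `simulate` and its parameters `tpr_target`, `tnr_target`, and `seed` are invented for illustration; the fixed seed makes a draw repeatable, whereas recalculating the sheet redraws it.

```python
import random

def simulate(n, tpr_target=0.5, tnr_target=0.5, theta=0.5, seed=None):
    """Hypothetical generator of random 0/1 labels and [0, 1] prediction scores.

    Each positive lands on the correct side of theta with probability
    tpr_target and each negative with probability tnr_target, so 0.5 gives
    purely random predictions and 1.0 gives a perfect crisp classifier.
    """
    rng = random.Random(seed)  # a fixed seed makes the draw repeatable
    labels, scores = [], []
    for _ in range(n):
        c = rng.randint(0, 1)  # random ground-truth label
        hit_rate = tpr_target if c == 1 else tnr_target
        correct = rng.random() < hit_rate
        predict_positive = (c == 1) == correct
        # Positive predictions get a score in [theta, 1], negative ones below theta.
        p = rng.uniform(theta, 1.0) if predict_positive else rng.uniform(0.0, theta)
        labels.append(c)
        scores.append(p)
    return labels, scores

# Rows 21 to 40 in the sheet correspond to Sn = 40 - 21 + 1 = 20 samples.
labels, scores = simulate(40 - 21 + 1, tpr_target=0.8, tnr_target=0.8, seed=1)
```

In this sketch the targets are probabilities, so the realized TPR and TNR of a small sample will scatter around them rather than being guaranteed to exceed them.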

Part III. Synthetic dataset instances

Part III of the BenchMetrics Prob tool, shown in Fig. 6, generates the synthetic dataset instances for evaluation. The dataset instances (“i”) are numbered sequentially in the first column, starting from 1. In the cells with blue text/background color in the second column, users can manually enter the class label of each instance when the ground truth input method is set to “Manual”. For default binary classification problems, the values should be either 0 or 1. When the ground truth input method is set to “Random”, automatically generated random class labels are displayed in the third column; users should not change these values. The total number of generated instances can be changed in Part II by adjusting the “Number of samples (Sn)” parameter, in which case the formulas must be pasted into the rows for the new instances.

Part IV. Hypothetical classifier predictions

The same approach as in Part III is applied to the hypothetical classifier’s predictions for the corresponding synthetic dataset instances. Figure 7 shows the predictions alongside the dataset instances, where pi values are either entered manually in the fourth column (shown in blue text/background) or generated automatically in the last column. Note that the tool uses the columns that match the current “random”/“manual” settings shown in Fig. 5b.

Part V. Classification examples/outputs/confusions

Having generated the synthetic dataset examples and the hypothetical classifier’s corresponding prediction outputs, Part V summarizes the ground truth, predictions, and confusion status. Figure 8 shows all possible confusions (e.g., the first instance is “positive” but predicted as “outcome negative”, so it is classified as a “false negative”).
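The per-instance confusion status can be sketched as a small function. The Python below assumes θ = 0.5 with scores at or above θ predicted positive, and its first made-up instance mirrors the example in the text: a positive instance predicted as outcome negative, hence a false negative.

```python
def confusion_status(c, p, theta=0.5):
    """Label one instance as TP, FN, FP or TN (scores >= theta predicted positive)."""
    if c == 1:
        return "TP" if p >= theta else "FN"
    return "FP" if p >= theta else "TN"

labels = [1, 0, 0, 1]
scores = [0.3, 0.8, 0.1, 0.6]
for i, (c, p) in enumerate(zip(labels, scores), start=1):
    print(i,
          "positive" if c == 1 else "negative",
          "outcome positive" if p >= 0.5 else "outcome negative",
          confusion_status(c, p))
# 1 positive outcome negative FN
# 2 negative outcome positive FP
# 3 negative outcome negative TN
# 4 positive outcome positive TP
```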

Part VI. Confusion matrix and other measures

Part VI shown in Fig. 9 provides the confusion matrix and other measures based on the matrix (total of 15 measures). The values summarize the current case’s classification performance as a crisp classifier.

Part VII. Performance metrics/measure results

In Part VII, shown in Fig. 10, the tool provides various performance metrics and measures derived from the confusion matrix, 21 instruments in total. The zero–one loss metrics described in Sect. 2.4 are shown in red text. In addition, two model-selection criteria that penalize classifier model complexity are calculated based on the number of model parameters (k):

Akaike Information Criterion (AIC): A measure of the quality of a model that penalizes models for the number of parameters used. Lower AIC values indicate a better model fit.

Bayesian Information Criterion (BIC): Similar to AIC, BIC also penalizes models for the number of parameters used. However, BIC has a stronger penalty for model complexity than AIC. Lower BIC values indicate a better model fit.

The AIC and BIC values can be used to compare different models and select the one with the best fit.
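The tool's exact AIC and BIC formulas are not given in this description. A common formulation, assumed here, uses the maximized log-likelihood ln L̂: AIC = 2k − 2 ln L̂ and BIC = k ln n − 2 ln L̂. For a probabilistic binary classifier, one natural (also assumed) choice of ln L̂ is the Bernoulli log-likelihood of its scores. Since ln n > 2 whenever n ≥ 8, BIC's per-parameter penalty exceeds AIC's for all but very small samples. The scores and parameter counts below are made up.

```python
import math

def bernoulli_log_likelihood(labels, scores, eps=1e-15):
    """Sum of c*ln(p) + (1 - c)*ln(1 - p); scores are clipped to avoid log(0)."""
    total = 0.0
    for c, p in zip(labels, scores):
        p = min(max(p, eps), 1 - eps)
        total += c * math.log(p) + (1 - c) * math.log(1 - p)
    return total

def aic(log_likelihood, k):
    return 2 * k - 2 * log_likelihood

def bic(log_likelihood, k, n):
    return k * math.log(n) - 2 * log_likelihood

labels = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
scores = [0.9, 0.2, 0.7, 0.6, 0.4, 0.1, 0.8, 0.3, 0.55, 0.35]
ll = bernoulli_log_likelihood(labels, scores)
for k in (2, 5):  # hypothetical numbers of model parameters
    print(k, round(aic(ll, k), 3), round(bic(ll, k, len(labels)), 3))
```

For the same data, each extra parameter adds 2 to AIC but ln n (about 2.303 for n = 10) to BIC, which is the stronger complexity penalty described above.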

Part VIII. Error function results

Part VIII, shown in Fig. 11, shows the results of the error functions based on class labels (ci) and prediction scores (pi). Probabilistic error/loss instruments summarize these errors, listed in rows, into a single figure (in Part IX) according to their aggregation functions.
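The row-wise errors and their aggregation into a single figure can be sketched per instance. Below, absolute, squared, and log-loss errors are computed for each row and then averaged, while one positive instance is made increasingly confidently wrong. The clipping constant `eps` is an assumption (implementations differ), and the data are made up for illustration.

```python
import math

def per_instance_errors(c, p, eps=1e-15):
    """Absolute, squared and log-loss error of one prediction.

    The score is clipped to [eps, 1 - eps] only for the logarithm, an assumed
    convention that keeps log-loss finite for scores of exactly 0 or 1.
    """
    q = min(max(p, eps), 1 - eps)
    return {
        "abs": abs(c - p),
        "sq": (c - p) ** 2,
        "log": -(c * math.log(q) + (1 - c) * math.log(1 - q)),
    }

labels = [1, 0, 0, 1]
results = []
for last_score in (0.4, 0.1, 0.01, 0.0001):  # the last positive grows more confidently wrong
    scores = [0.8, 0.3, 0.2, last_score]
    rows = [per_instance_errors(c, p) for c, p in zip(labels, scores)]  # one row per instance
    mae = sum(r["abs"] for r in rows) / len(rows)
    log_loss = sum(r["log"] for r in rows) / len(rows)
    results.append((last_score, mae, log_loss))
    print(f"p_last={last_score} MAE={mae:.4f} LogLoss={log_loss:.4f}")
```

MAE stays within [0, 1] (rising only from 0.325 to about 0.425), whereas LogLoss rises from about 0.43 to about 2.50 and has no upper bound as the score approaches 0.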

Part IX. Probabilistic error/loss performance instrument results

Part IX, shown in Fig. 12, lists the results of the probabilistic performance instruments for the predictions made on the dataset instances. The instruments are grouped into subtypes (shown as text on a black background).

Note that the current instrument outputs, including the confusion-matrix-based ones, and the configuration settings are listed in the sixth row of a separate worksheet (‘simulation cases’). You can copy and paste this row into another row to create a simulation case for your own analysis. The benchmarking results for seven cases are already provided in this sheet.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Canbek, G. BenchMetrics Prob: benchmarking of probabilistic error/loss performance evaluation instruments for binary classification problems. Int. J. Mach. Learn. & Cyber. 14, 3161–3191 (2023). https://doi.org/10.1007/s13042-023-01826-5

DOI: https://doi.org/10.1007/s13042-023-01826-5