Abstract

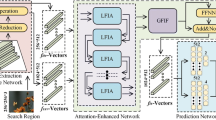

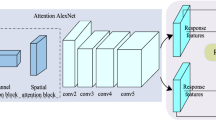

Visual tracking is widely used in industrial systems, such as visual servo systems, and in intelligent robots. However, most tracking algorithms are designed without balancing efficiency against accuracy, which makes them poorly suited to deployment in real systems. This paper proposes a Siamese global location-aware object tracking algorithm (SiamGLA) to address this issue. First, because efficient lightweight backbone networks have limited representational performance, this study designs an internal feature combination (IFC) module that improves feature representation with almost no additional parameters. Second, a global-aware (GA) attention module is proposed to improve foreground-background classification, which is especially important for trackers. Finally, a location-aware (LA) attention module is designed to improve the regression performance of the tracking framework. Comprehensive experiments show that SiamGLA is effective and overcomes the drawbacks of poor robustness and weak generalization ability. While reaching state-of-the-art performance, SiamGLA requires fewer computations and parameters, which makes it better suited to practical application.
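For readers unfamiliar with Siamese trackers, the matching step that such frameworks generally build on can be sketched as follows. This is a minimal illustration under stated assumptions, not the SiamGLA implementation: the IFC, GA, and LA modules are not modeled, random arrays stand in for backbone features, cosine-normalized correlation is used for simplicity, and the names `cross_correlate` and `locate_peak` are hypothetical.

```python
import numpy as np


def cross_correlate(template, search, eps=1e-12):
    """Slide template features (C, h, w) over search features (C, H, W).

    Returns a response map of shape (H - h + 1, W - w + 1). Each entry is the
    cosine similarity between the template and one search window
    (an assumption made here to keep the example simple).
    """
    _, h, w = template.shape
    _, H, W = search.shape
    t_norm = np.linalg.norm(template)
    response = np.empty((H - h + 1, W - w + 1))
    for i in range(H - h + 1):
        for j in range(W - w + 1):
            window = search[:, i:i + h, j:j + w]
            denom = t_norm * np.linalg.norm(window) + eps
            response[i, j] = np.sum(window * template) / denom
    return response


def locate_peak(response):
    """Return the (row, col) index of the highest response."""
    row, col = np.unravel_index(np.argmax(response), response.shape)
    return int(row), int(col)


# Stand-in features: a random search region and a template cropped from it.
rng = np.random.default_rng(0)
search = rng.normal(size=(4, 16, 16))
template = search[:, 5:9, 7:11].copy()

response = cross_correlate(template, search)
peak = locate_peak(response)
print(response.shape, peak)
```

In this toy setting the peak of the response map falls at the offset the template was cropped from. A real tracker would compute both feature maps with a shared backbone and feed the correlation output to classification and regression heads.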

Data availability

The datasets generated and analysed during the current study are available from the corresponding author on reasonable request.

Acknowledgements

This work was supported in part by the Beijing Natural Science Foundation under Grant L211017, in part by the General Program of Beijing Municipal Education Commission under Grant KM202110005027, in part by the National Natural Science Foundation of China under Grants 61971016 and 61701011, and in part by the Beijing Municipal Education Commission Cooperation Beijing Natural Science Foundation under Grant KZ201910005077.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, J., Li, B., Ding, G. et al. Siamese global location-aware network for visual object tracking. Int. J. Mach. Learn. & Cyber. 14, 3607–3620 (2023). https://doi.org/10.1007/s13042-023-01853-2

DOI: https://doi.org/10.1007/s13042-023-01853-2