Abstract

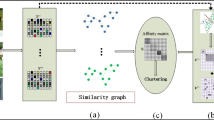

Conventional graph-based dimensionality reduction methods treat graph learning and subspace learning as two separate steps and keep the graph fixed during subspace learning. However, a graph obtained from the original data may not be optimal, because the original high-dimensional data contain redundant information and noise, and this can degrade the subsequent subspace learning that relies on the graph. In this paper, we propose a model called adaptive affinity matrix learning (AAML) for unsupervised dimensionality reduction. Unlike traditional graph-based methods, we integrate the two steps into a unified framework and adaptively adjust the learned graph. To obtain an ideal neighbor assignment, we impose a rank constraint on the Laplacian matrix of the affinity matrix, so that the number of connected components of the graph is exactly equal to the number of classes. By approximating two low-dimensional subspaces, the affinity matrix captures the original neighbor structure from the similarity matrix, and the projection matrix obtains low-rank information from the affinity matrix, so that a distinctive subspace can be learned. Moreover, we propose an efficient algorithm to solve the optimization problem of AAML. Experimental results on four data sets show the effectiveness of the proposed model.
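The link between the rank of the Laplacian and the number of connected components can be checked numerically. The sketch below is not the AAML algorithm; it only illustrates the standard spectral fact that, for a nonnegative symmetric affinity matrix with n nodes and c connected components, the Laplacian has exactly c zero eigenvalues and therefore rank n - c. The helper names `laplacian` and `num_components`, the tolerance, and the toy matrix are assumptions made for illustration.

```python
import numpy as np

def laplacian(S):
    """Unnormalized graph Laplacian L = D - W of the symmetrized affinity matrix."""
    W = (S + S.T) / 2.0
    return np.diag(W.sum(axis=1)) - W

def num_components(S, tol=1e-8):
    """Count connected components as the number of (near-)zero Laplacian eigenvalues."""
    eigvals = np.linalg.eigvalsh(laplacian(S))
    return int(np.sum(eigvals < tol))

# Toy affinity matrix (hypothetical data): nodes {0, 1, 2} and {3, 4} form two blocks.
S = np.zeros((5, 5))
S[0, 1] = S[1, 0] = 1.0
S[1, 2] = S[2, 1] = 0.5
S[3, 4] = S[4, 3] = 0.8

n = S.shape[0]
c = num_components(S)                         # number of connected components
rank_L = np.linalg.matrix_rank(laplacian(S))  # equals n - c for this graph

# Adding a single weak edge between the blocks merges them into one component.
S_linked = S.copy()
S_linked[2, 3] = S_linked[3, 2] = 0.1
c_linked = num_components(S_linked)
```

In this toy case, constraining the rank of the Laplacian to n - c is what forces the graph to split into exactly c groups; how AAML enforces that constraint during optimization is described in the paper itself and is not reproduced here.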


Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 62176065, Grant 62202107, and Grant 62006048, in part by the Science and Technology Planning Project of Guangdong Province, China, under Grant 2019B110210002, and in part by the school-level scientific research project of Guangdong Polytechnic Normal University under Grant 2021SDKYA013.



About this article

Cite this article

He, J., Fang, X., Kang, P. et al. Adaptive affinity matrix learning for dimensionality reduction. Int. J. Mach. Learn. & Cyber. 14, 4063–4077 (2023). https://doi.org/10.1007/s13042-023-01881-y


DOI: https://doi.org/10.1007/s13042-023-01881-y