Abstract

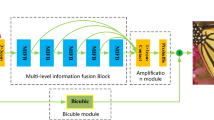

In recent years, deep convolutional neural networks have made significant progress in single-image super-resolution (SISR). However, the high-resolution (HR) images produced by most SISR methods still suffer from blurred edges and distorted textures. To address this issue, we propose a two-stage feature enhancement network (TFEN) for SISR that learns a nonlinear mapping from low-resolution (LR) images to HR images. In the first stage, an initial feature reconstruction module (IFRM), which combines a feature attention enhancement block with multiple convolution layers that simulate the degradation and reconstruction operations, reconstructs a coarse HR image. In the second stage, multiple residual attention modules (RAMs), each consisting of the proposed spatial feature enhancement blocks (SFEBs) and an attention interaction block (AIB), are cascaded to generate the final HR image from the features extracted and the coarse HR image reconstructed in the first stage. Within each RAM, the SFEB adopts dilated convolutions to learn more refined spatial features for reconstruction, and the AIB constructs multi-directional attention maps to enhance the important features learned by the RAMs. Extensive experiments show that the proposed method outperforms several current state-of-the-art SISR networks.
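The effect of the dilated convolutions used in the SFEB can be illustrated with a minimal single-channel sketch. This is not the authors' implementation: the names `dilated_conv2d` and `receptive_field` are hypothetical, and the sketch assumes an odd square kernel, zero padding that preserves the spatial size, and the cross-correlation convention used by CNN libraries. A k×k kernel with dilation d spans k + (k − 1)(d − 1) pixels per side, so a 3×3 kernel with dilation 2 covers a 5×5 window with the same nine weights.

```python
from typing import List

Matrix = List[List[float]]


def dilated_conv2d(image: Matrix, kernel: Matrix, dilation: int = 1) -> Matrix:
    """Single-channel 2D dilated convolution (cross-correlation, as in CNN
    libraries) with zero padding chosen so the output keeps the input size.

    Hypothetical illustration; assumes an odd-sized square kernel.
    """
    k = len(kernel)
    assert k % 2 == 1 and all(len(row) == k for row in kernel), "odd square kernel expected"
    assert dilation >= 1, "dilation must be a positive integer"
    h, w = len(image), len(image[0])
    # Distance from the kernel centre to its outermost tap once dilated.
    reach = (k // 2) * dilation
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for u in range(k):
                for v in range(k):
                    # Kernel taps are spaced `dilation` pixels apart.
                    y = i - reach + u * dilation
                    x = j - reach + v * dilation
                    if 0 <= y < h and 0 <= x < w:  # zero padding outside the image
                        acc += kernel[u][v] * image[y][x]
            out[i][j] = acc
    return out


def receptive_field(kernel_size: int, dilation: int) -> int:
    """Side length covered by one dilated kernel: k + (k - 1)(d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


if __name__ == "__main__":
    # A 7x7 impulse image probed with an all-ones 3x3 kernel.
    impulse = [[0.0] * 7 for _ in range(7)]
    impulse[3][3] = 1.0
    ones = [[1.0] * 3 for _ in range(3)]
    for d in (1, 2):
        print(f"dilation={d}, receptive field={receptive_field(3, d)}")
        for row in dilated_conv2d(impulse, ones, dilation=d):
            print(row)
```

With dilation 1 the impulse response fills the central 3×3 block; with dilation 2 the same nine weights respond at rows and columns 1, 3, and 5, which shows how dilation widens the spatial context seen by each layer without adding parameters.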

Data availability statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Funding

This work is supported by the National Natural Science Foundation of China (Nos. 62072218 and 61862030), the Natural Science Foundation of Jiangxi Province (Nos. 20182BCB22006, 20181BAB202010, 20192ACB20002, and 20192ACBL21008), and the Talent Project of the Jiangxi Thousand Talents Program (No. jxsq2019201056).

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Huang, S., Lai, H., Yang, Y. et al. TFEN: two-stage feature enhancement network for single-image super-resolution. Int. J. Mach. Learn. & Cyber. 15, 605–619 (2024). https://doi.org/10.1007/s13042-023-01928-0

DOI: https://doi.org/10.1007/s13042-023-01928-0