Abstract

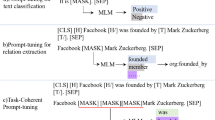

Relation extraction aims to extract semantic relations between predefined entities from text. Recently, prompt tuning has achieved promising results in relation extraction; its core idea is to insert a template into the input and cast relation extraction as a masked language modeling (MLM) problem. However, existing prompt tuning approaches ignore the rich semantic information between entities and relations, resulting in suboptimal performance. In addition, since an MLM task can identify only one relation at a time, the widespread problem of entity overlap in relation extraction cannot be solved. To this end, we propose a novel Context-Aware Generative Prompt Tuning (CAGPT) method, which ensures the comprehensiveness of triplet extraction by modeling relation extraction as a generative task and outputs all triplets related to the same entity at one time to overcome the entity overlap problem. Moreover, we connect entities and relations with natural language and inject entity and relation information into the designed template, which makes full use of the rich semantic information between entities and relations. Extensive experimental results on four benchmark datasets demonstrate the effectiveness of the proposed method.
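The idea of emitting all triplets that share an entity in one natural-language target can be illustrated with a minimal sketch. This is not the CAGPT implementation: the template wording, the `" ; "` separator, the `VERBALIZERS` mapping, and the helper names `build_prompt`, `linearize`, and `parse` are all assumptions made here for illustration, since the abstract does not specify them.

```python
from collections import defaultdict

# Hypothetical verbalizations (relation label -> natural-language phrase).
# The actual template and relation wording used by CAGPT are not given in the abstract.
VERBALIZERS = {"Work_For": "works for", "Live_In": "lives in"}


def build_prompt(sentence, head):
    """Append an assumed template that injects the head entity into the input."""
    return f"{sentence} Relations of {head}:"


def linearize(triplets):
    """Group (head, label, tail) triplets by head entity and render one
    natural-language target string per head, so that overlapping triplets
    are produced together rather than one relation at a time."""
    grouped = defaultdict(list)
    for head, label, tail in triplets:
        grouped[head].append(f"{head} {VERBALIZERS[label]} {tail}")
    return {head: " ; ".join(clauses) for head, clauses in grouped.items()}


def parse(target):
    """Recover (head, label, tail) triplets from a target string by matching
    the known relation phrases in each clause."""
    triplets = []
    for clause in target.split(" ; "):
        for label, phrase in VERBALIZERS.items():
            marker = f" {phrase} "
            if marker in clause:
                head, tail = clause.split(marker, 1)
                triplets.append((head, label, tail))
                break
    return triplets


sentence = "John Smith works for Acme Corp and lives in Boston."
triplets = [
    ("John Smith", "Work_For", "Acme Corp"),
    ("John Smith", "Live_In", "Boston"),
]
prompt = build_prompt(sentence, "John Smith")
targets = linearize(triplets)
recovered = parse(targets["John Smith"])
```

In this toy setting, a sequence-to-sequence model would be trained to map `prompt` to `targets["John Smith"]`; because both triplets share the head entity, a single generated string covers them, which is the behavior an MLM formulation with one masked slot cannot provide.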

Data availability

All datasets used in the experiments are available for public access, and the links are given in the corresponding references.

References

Liu P, Yuan W, Fu J, Jiang Z, Hayashi H, Neubig G (2023) Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Comput Surv 55(9):1–35

Brown T, Mann B, Ryder N, Subbiah M, Kaplan JD, Dhariwal P, Neelakantan A, Shyam P, Sastry G, Askell A et al (2020) Language models are few-shot learners. Adv Neural Inf Process Syst 33:1877–1901

Han X, Zhao W, Ding N, Liu Z, Sun M (2022) Ptr: Prompt tuning with rules for text classification. AI Open 3:182–192

Chen X, Zhang N, Xie X, Deng S, Yao Y, Tan C, Huang F, Si L, Chen H (2022) Knowprompt: Knowledge-aware prompt-tuning with synergistic optimization for relation extraction. In: Proceedings of the ACM Web Conference 2022, pp. 2778–2788

Huffman SB (1995) Learning information extraction patterns from examples. In: International Joint Conference on Artificial Intelligence, pp. 246–260. Springer

Zeng D, Liu K, Chen Y, Zhao J (2015) Distant supervision for relation extraction via piecewise convolutional neural networks. In: Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, pp. 1753–1762

Zhou P, Shi W, Tian J, Qi Z, Li B, Hao H, Xu B (2016) Attention-based bidirectional long short-term memory networks for relation classification. In: Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (volume 2: Short Papers), pp. 207–212

Zhang Y, Zhong V, Chen D, Angeli G, Manning CD (2017) Position-aware attention and supervised data improve slot filling. In: Conference on Empirical Methods in Natural Language Processing

Zhang J, Hong Y, Zhou W, Yao J, Zhang M (2020) Interactive learning for joint event and relation extraction. Int J Mach Learn Cybern 11:449–461

Zhang Y, Qi P, Manning CD (2018) Graph convolution over pruned dependency trees improves relation extraction. arXiv preprint arXiv:1809.10185

Guo Z, Zhang Y, Lu W (2019) Attention guided graph convolutional networks for relation extraction. arXiv preprint arXiv:1906.07510

Guo Z, Nan G, Lu W, Cohen SB (2021) Learning latent forests for medical relation extraction. In: Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence, pp. 3651–3657

Lin Y, Ji H, Huang F, Wu L (2020) A joint neural model for information extraction with global features. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pp. 7999–8009

Wang Z, Wen R, Chen X, Huang S-L, Zhang N, Zheng Y (2020) Finding influential instances for distantly supervised relation extraction. arXiv preprint arXiv:2009.09841

Li J, Wang R, Zhang N, Zhang W, Yang F, Chen H (2020) Logic-guided semantic representation learning for zero-shot relation classification. arXiv preprint arXiv:2010.16068

Zheng H, Wen R, Chen X, Yang Y, Zhang Y, Zhang Z, Zhang N, Qin B, Xu M, Zheng Y (2021) Prgc: Potential relation and global correspondence based joint relational triple extraction. arXiv preprint arXiv:2106.09895

Ye H, Zhang N, Deng S, Chen M, Tan C, Huang F, Chen H (2021) Contrastive triple extraction with generative transformer. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, pp. 14257–14265

Zhang N, Chen X, Xie X, Deng S, Tan C, Chen M, Huang F, Si L, Chen H (2021) Document-level relation extraction as semantic segmentation. arXiv preprint arXiv:2106.03618

Wu S, He Y (2019) Enriching pre-trained language model with entity information for relation classification. In: Proceedings of the 28th ACM International Conference on Information and Knowledge Management, pp. 2361–2364

Joshi M, Chen D, Liu Y, Weld DS, Zettlemoyer L, Levy O (2020) Spanbert: Improving pre-training by representing and predicting spans. Transactions of the Association for Computational Linguistics 8:64–77

Yu D, Sun K, Cardie C, Yu D (2020) Dialogue-based relation extraction. arXiv preprint arXiv:2004.08056

Ma Y, Hiraoka T, Okazaki N (2022) Named entity recognition and relation extraction using enhanced table filling by contextualized representations. Journal of Natural Language Processing 29(1):187–223

Zeng D, Xu L, Jiang C, Zhu J, Chen H, Dai J, Jiang L (2023) Sequence tagging with a rethinking structure for joint entity and relation extraction. Int J Mach Learn Cybern, pp. 1–13

Eberts M, Ulges A (2019) Span-based joint entity and relation extraction with transformer pre-training. arXiv preprint arXiv:1909.07755

Yu H, Zhang N, Deng S, Ye H, Zhang W, Chen H (2020) Bridging text and knowledge with multi-prototype embedding for few-shot relational triple extraction. arXiv preprint arXiv:2010.16059

Dong B, Yao Y, Xie R, Gao T, Han X, Liu Z, Lin F, Lin L, Sun M (2020) Meta-information guided meta-learning for few-shot relation classification. In: Proceedings of the 28th International Conference on Computational Linguistics, pp. 1594–1605

Ben-David E, Oved N, Reichart R (2021) Pada: A prompt-based autoregressive approach for adaptation to unseen domains. arXiv preprint arXiv:2102.12206

Lester B, Al-Rfou R, Constant N (2021) The power of scale for parameter-efficient prompt tuning. arXiv preprint arXiv:2104.08691

Reynolds L, McDonell K (2021) Prompt programming for large language models: Beyond the few-shot paradigm. In: Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, pp. 1–7

Lu Y, Bartolo M, Moore A, Riedel S, Stenetorp P (2021) Fantastically ordered prompts and where to find them: Overcoming few-shot prompt order sensitivity. arXiv preprint arXiv:2104.08786

Ding N, Chen Y, Han X, Xu G, Xie P, Zheng H-T, Liu Z, Li J, Kim H-G (2021) Prompt-learning for fine-grained entity typing. arXiv preprint arXiv:2108.10604

Schick T, Schmid H, Schütze H (2020) Automatically identifying words that can serve as labels for few-shot text classification. arXiv preprint arXiv:2010.13641

Gao T, Fisch A, Chen D (2020) Making pre-trained language models better few-shot learners. arXiv preprint arXiv:2012.15723

Shin T, Razeghi Y, Logan IV RL, Wallace E, Singh S (2020) Autoprompt: Eliciting knowledge from language models with automatically generated prompts. arXiv preprint arXiv:2010.15980

Chen Y, Shi B, Xu K (2024) Ptcas: Prompt tuning with continuous answer search for relation extraction. Inf Sci 659:120060

Wei C, Chen Y, Wang K, Qin Y, Huang R, Zheng Q (2024) Apre: Annotation-aware prompt-tuning for relation extraction. Neural Process Lett 56(2):62

Roth D, Yih W-t (2004) A linear programming formulation for global inference in natural language tasks. In: Proceedings of the Eighth Conference on Computational Natural Language Learning (CoNLL-2004) at HLT-NAACL 2004, pp. 1–8

Gurulingappa H, Rajput AM, Roberts A, Fluck J, Hofmann-Apitius M, Toldo L (2012) Development of a benchmark corpus to support the automatic extraction of drug-related adverse effects from medical case reports. J Biomed Inform 45(5):885–892

Hendrickx I, Kim SN, Kozareva Z, Nakov P, Séaghdha DO, Padó S, Pennacchiotti M, Romano L, Szpakowicz S (2019) Semeval-2010 task 8: Multi-way classification of semantic relations between pairs of nominals. arXiv preprint arXiv:1911.10422

Cabot P-LH, Navigli R (2021) Rebel: Relation extraction by end-to-end language generation. In: Findings of the Association for Computational Linguistics: EMNLP 2021, pp. 2370–2381

Crone P (2020) Deeper task-specificity improves joint entity and relation extraction. arXiv preprint arXiv:2002.06424

Zheng C, Cai Y, Xu J, Leung H, Xu G (2019) A boundary-aware neural model for nested named entity recognition. In: Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Association for Computational Linguistics

Yu N, Liu J, Shi Y (2023) Span-based fine-grained entity-relation extraction via sub-prompts combination. Appl Sci 13(2):1159

Acknowledgements

This study was supported by the National Natural Science Foundation of China (Grant Nos. 62172113 and 62006049), the Ministry of Education of Humanities and Social Science Project (Grant No. 18JDGC012), the Guangdong Science and Technology Project (Grant Nos. KTP20210197 and 2017A040403068), the Guangdong Basic and Applied Basic Research Foundation (Grant No. 2023A1515010939), the Project of the Education Department of Guangdong Province (Grant Nos. 2022KTSCX068 and 2021ZDZX1079), and the Guangzhou Science and Technology Planning Project (Grant No. 2023A04J0364).

Author information

Contributions

Xiaoyong Liu: Conceptualization, Methodology, Funding acquisition, Supervision, Writing - review and editing. Handong Wen: Methodology, Data curation, Writing - original draft. Chunlin Xu: Conceptualization, Writing - review and editing, Funding acquisition. Zhiguo Du: Formal analysis, Writing - review and editing. Huihui Li: Supervision, Formal analysis, Funding acquisition. Miao Hu: Writing - review and editing.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

About this article

Cite this article

Liu, X., Wen, H., Xu, C. et al. Context-aware generative prompt tuning for relation extraction. Int. J. Mach. Learn. & Cyber. 15, 5495–5508 (2024). https://doi.org/10.1007/s13042-024-02255-8

DOI: https://doi.org/10.1007/s13042-024-02255-8