Abstract

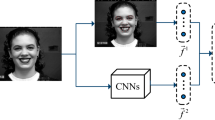

Designing an emotion-based music player is an interdisciplinary problem spanning computer vision and psychology. Music can lift a listener's mood and plays a significant role in soothing people's emotions. Emotions can be predicted from facial expressions using vision-based methods. However, challenges such as environmental variation and the complexity of expressions make it difficult to attain a good recognition rate. We therefore propose a deep dual domain joint feature framework based on linear discriminant analysis for facial emotion recognition. First, we detect the human face and learn emotion patterns using two popular, complementary deep networks, EfficientNet and ResNet50. The learned deep dual domain feature space is projected onto a linear discriminant space to obtain a joint discriminant feature space. The recognition rate of the proposed joint discriminant feature framework is analyzed using a support vector machine (SVM). To demonstrate the efficacy of the proposed framework, we validated it on two benchmark datasets, FER2013 and CK48+, on which it achieved recognition rates of 99% and 98.6%, respectively. Experiments on our EmDe dataset yielded an accuracy of 99%, suggesting that the deep dual domain joint discriminant framework is a promising pipeline for an emotion-based music player system.
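The fusion, projection and classification stages described in the abstract can be illustrated with a minimal NumPy sketch. It rests on several assumptions. The EfficientNet and ResNet50 embeddings are replaced by hypothetical synthetic Gaussian vectors, and no network weights are loaded. "Dual domain" fusion is assumed to be plain concatenation. The SVM is a linear one-vs-rest SVM trained with Pegasos-style subgradient descent on the hinge loss. This section does not state the paper's actual fusion rule, SVM kernel or hyperparameters, so the code is not the authors' implementation.

```python
import numpy as np

def fuse_features(feat_a, feat_b):
    """Joint vector per sample: concatenation of two backbones' embeddings (assumed fusion rule)."""
    return np.concatenate([feat_a, feat_b], axis=1)

def fit_lda(X, y, n_components, ridge=1e-3):
    """Multi-class Fisher LDA. Returns the global mean and a projection matrix."""
    mean_all = X.mean(axis=0)
    d = X.shape[1]
    Sw = ridge * np.eye(d)                     # small ridge keeps Sw positive definite
    Sb = np.zeros((d, d))
    for c in np.unique(y):
        Xc = X[y == c]
        mc = Xc.mean(axis=0)
        Sw += (Xc - mc).T @ (Xc - mc)          # within-class scatter
        diff = (mc - mean_all)[:, None]
        Sb += len(Xc) * (diff @ diff.T)        # between-class scatter
    # Solve Sb w = lambda * Sw w by whitening with Sw^(-1/2), then a symmetric eigenproblem.
    s, U = np.linalg.eigh(Sw)
    Sw_inv_sqrt = U @ np.diag(1.0 / np.sqrt(s)) @ U.T
    M = Sw_inv_sqrt @ Sb @ Sw_inv_sqrt
    _, evecs = np.linalg.eigh((M + M.T) / 2)
    top = evecs[:, ::-1][:, :n_components]     # eigh sorts ascending; keep the largest
    return mean_all, Sw_inv_sqrt @ top

def lda_project(X, mean_all, W):
    return (X - mean_all) @ W

def train_linear_svm(Z, y, lam=0.01, epochs=50, seed=0):
    """One-vs-rest linear SVM via Pegasos subgradient steps on the hinge loss."""
    rng = np.random.default_rng(seed)
    classes = np.unique(y)
    Zb = np.hstack([Z, np.ones((len(Z), 1))])  # append a constant bias feature
    W = np.zeros((len(classes), Zb.shape[1]))
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(Zb)):
            t += 1
            eta = 1.0 / (lam * t)
            targets = np.where(classes == y[i], 1.0, -1.0)
            violated = targets * (W @ Zb[i]) < 1   # margin checked before the update
            W *= 1.0 - eta * lam                   # shrink step from the L2 regulariser
            W[violated] += (eta * targets[violated])[:, None] * Zb[i]
    return classes, W

def predict(classes, W, Z):
    Zb = np.hstack([Z, np.ones((len(Z), 1))])
    return classes[np.argmax(Zb @ W.T, axis=1)]

# Hypothetical stand-ins for EfficientNet / ResNet50 embeddings of detected face crops.
rng = np.random.default_rng(42)
n_classes, n_per_class = 3, 60
y = np.repeat(np.arange(n_classes), n_per_class)
feat_a = rng.normal(0, 3, (n_classes, 16))[y] + rng.normal(size=(y.size, 16))
feat_b = rng.normal(0, 3, (n_classes, 12))[y] + rng.normal(size=(y.size, 12))

X = fuse_features(feat_a, feat_b)
perm = rng.permutation(y.size)
train, test = perm[:126], perm[126:]

mean_all, W_lda = fit_lda(X[train], y[train], n_components=n_classes - 1)
Z_train = lda_project(X[train], mean_all, W_lda)
Z_test = lda_project(X[test], mean_all, W_lda)

classes, W_svm = train_linear_svm(Z_train, y[train])
accuracy = np.mean(predict(classes, W_svm, Z_test) == y[test])
print(f"test accuracy on synthetic data: {accuracy:.2f}")
```

In a real system, `feat_a` and `feat_b` would be taken from the penultimate layers of the two pretrained networks. The LDA step yields at most (number of classes minus 1) dimensions, which is what keeps the joint discriminant space compact before the SVM.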

Data availability

Data and material will be made available to readers upon reasonable request.

Abbreviations

- DNN: Deep neural network
- ML: Machine learning
- SIFT: Scale-invariant feature transform
- BRIEF: Binary robust independent elementary features
- ASM: Active shape model
- FAST: Features from accelerated segment test
- FER: Facial expression recognition
- CNN: Convolutional neural network
- PSO: Particle swarm optimization
- ALO: Ant lion optimization
- EN: EfficientNet
- LDA: Linear discriminant analysis
- PCA: Principal component analysis

Acknowledgements

The authors would like to express their gratitude to the anonymous reviewers.

Funding

No funding was obtained for this work from any agency.

Author information

Contributions

A. Sasithradevi formulated the problem and methodology. Ravi Teja, Siva Saketh and Saketh Chakka carried out the simulations and experiments under the guidance and supervision of D. ArumugaPerumal and P. Prakash.

Ethics declarations

Conflict of interest

The authors have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Sasithradevi, A., Challa, R.T., Saketh, S. et al. Deep dual domain joint discriminant feature framework for emotion based music player. Int J Syst Assur Eng Manag 15, 3854–3868 (2024). https://doi.org/10.1007/s13198-024-02382-z

DOI: https://doi.org/10.1007/s13198-024-02382-z