Abstract

The attention mechanism is an important component of cross-modal research. It can improve the performance of convolutional neural networks (CNNs) by distinguishing the informative parts of the feature map from the uninformative ones. Recent studies have proposed various kinds of attention, each using a distinct division method to weight the parts of the feature map. In this paper, we propose the full-dimension attention module (FDAM), a lightweight, fully interactive 3-D attention mechanism. FDAM generates 3-D attention maps for the spatial and channel dimensions in parallel and then multiplies them with the feature map. Obtaining a discriminative value for each attention-map cell under channel interaction is difficult at a low computational cost. Therefore, we adapt a generalized Elo rating mechanism to generate cell-level attention maps. We store historical information in a small number of non-trainable parameters, which spreads the computation over the training iterations. The proposed module can be seamlessly integrated into the end-to-end training of a CNN framework. Experiments demonstrate that it outperforms many existing attention mechanisms on different network structures and datasets for computer vision tasks such as image classification and object detection.
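For readers unfamiliar with Elo ratings, the following is a minimal sketch of the classic two-player Elo update (Elo 1978), not the generalized variant used in FDAM, which the abstract does not specify. The function names, the scale of 400 and the K-factor of 32 are conventional chess defaults chosen here for illustration only.

```python
def expected_score(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Classic Elo expected score of player A against player B (between 0 and 1)."""
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / scale))


def elo_update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return both ratings after one game.

    score_a is the observed result for A: 1.0 (win), 0.5 (draw) or 0.0 (loss).
    The update is zero-sum: whatever A gains, B loses.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    # Two equally rated players; A wins, so A gains k/2 points and B loses k/2.
    print(elo_update(1500.0, 1500.0, 1.0))
```

The ratings act as a running summary of past outcomes, which is one way to read the abstract's remark about storing historical information outside the trainable parameters.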

References

Berg A (2020) Statistical analysis of the elo rating system in chess. Chance 33(3):31–38

Cao Y, Xu J, Lin S, et al (2019) Gcnet: non-local networks meet squeeze-excitation networks and beyond. In: Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, pp 0–0

Chen H, Ding G, Liu X, et al (2020) Imram: iterative matching with recurrent attention memory for cross-modal image-text retrieval. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 12655–12663

Chen K, Wang J, Pang J, et al (2019) Mmdetection: open mmlab detection toolbox and benchmark. arXiv preprint arXiv:1906.07155

Chen LC, Papandreou G, Kokkinos I et al (2017) Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans Pattern Anal Mach Intell 40(4):834–848

Elo AE (1978) The rating of chessplayers, past and present. BT Batsford Limited, London

Goyal P, Dollár P, Girshick R, et al (2017) Accurate, large minibatch sgd: training imagenet in 1 hour. arXiv preprint arXiv:1706.02677

Guo MH, Xu TX, Liu JJ, et al (2021) Attention mechanisms in computer vision: a survey. arXiv preprint arXiv:2111.07624

Guo Y, Liu Y, Georgiou T et al (2018) A review of semantic segmentation using deep neural networks. Int J Multimedia Inf Retriev 7(2):87–93

He K, Zhang X, Ren S, et al (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

He K, Zhang X, Ren S et al (2016) Identity mappings in deep residual networks. European conference on computer vision. Springer, Cham, pp 630–645

Hou Q, Zhou D, Feng J (2021) Coordinate attention for efficient mobile network design. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp 13713–13722

Hu J, Shen L, Sun G (2018) Squeeze-and-excitation networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7132–7141

Huang G, Liu Z, Van Der Maaten L, et al (2017) Densely connected convolutional networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 4700–4708

Huang G, Liu S, Van der Maaten L, et al (2018) Condensenet: an efficient densenet using learned group convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 2752–2761

Huddar MG, Sannakki SS, Rajpurohit VS (2020) Multi-level context extraction and attention-based contextual inter-modal fusion for multimodal sentiment analysis and emotion classification. Int J Multimedia Inf Retriev 9(2):103–112

Krizhevsky A, Hinton G, et al (2009) Learning multiple layers of features from tiny images

Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. Adv Neural Inf Process Syst 25:1097–1105

LeCun Y, Bottou L, Bengio Y et al (1998) Gradient-based learning applied to document recognition. Proc IEEE 86(11):2278–2324

Lee CY, Xie S, Gallagher P, et al (2015) Deeply-supervised nets. In: Artificial intelligence and statistics. PMLR, pp 562–570

Li J, Guo Y, Lao S et al (2022) Few2decide: towards a robust model via using few neuron connections to decide. Int J Multimedia Inf Retriev 11(2):189–198

Li Z, Tang J, Mei T (2018) Deep collaborative embedding for social image understanding. IEEE Trans Pattern Anal Mach Intell 41(9):2070–2083

Lin TY, Maire M, Belongie S et al (2014) Microsoft coco: common objects in context. European conference on computer vision. Springer, Cham, pp 740–755

Lin TY, Dollár P, Girshick R, et al (2017) Feature pyramid networks for object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 2117–2125

Liu W, Anguelov D, Erhan D et al (2016) Ssd: single shot multibox detector. European conference on computer vision. Springer, Cham, pp 21–37

Park J, Woo S, Lee JY, et al (2018) Bam: bottleneck attention module. arXiv preprint arXiv:1807.06514

Pelánek R (2016) Applications of the elo rating system in adaptive educational systems. Comput Educ 98:169–179

Peng Z, Li Z, Zhang J, et al (2019) Few-shot image recognition with knowledge transfer. In: Proceedings of the IEEE/CVF international conference on computer vision. pp 441–449

Qin Z, Zhang P, Wu F, et al (2021) Fcanet: frequency channel attention networks. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 783–792

Ren S, He K, Girshick R, et al (2015) Faster r-cnn: towards real-time object detection with region proposal networks. Adva Neural Inf Process Syst 28

Sandler M, Howard A, Zhu M, et al (2018) Mobilenetv2: inverted residuals and linear bottlenecks. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 4510–4520

Shojaei G, Razzazi F (2019) Semi-supervised domain adaptation for pedestrian detection in video surveillance based on maximum independence assumption. Int J Multimedia Inf Retriev 8(4):241–252

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. Comput Sci

Szczecinski L, Djebbi A (2020) Understanding draws in elo rating algorithm. J Quant Anal Sports 16(3):211–220

Szegedy C, Liu W, Jia Y, et al (2015) Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 1–9

Wang F, Jiang M, Qian C, et al (2017) Residual attention network for image classification. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 3156–3164

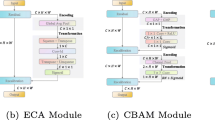

Wang Q, Wu B, Zhu P, et al (2020) Eca-net: efficient channel attention for deep convolutional neural networks, 2020 ieee. In: CVF conference on computer vision and pattern recognition (CVPR). IEEE

Wang X, Girshick R, Gupta A, et al (2018) Non-local neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 7794–7803

Woo S, Park J, Lee JY, et al (2018) Cbam: convolutional block attention module. In: Proceedings of the European conference on computer vision (ECCV). pp 3–19

Xie J, Ma Z, Chang D, et al (2021) Gpca: a probabilistic framework for gaussian process embedded channel attention. IEEE Trans Pattern Anal Mach Intell

Xie S, Girshick R, Dollár P, et al (2017) Aggregated residual transformations for deep neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 1492–1500

Yang L, Zhang RY, Li L, et al (2021) Simam: a simple, parameter-free attention module for convolutional neural networks. In: International conference on machine learning. PMLR, pp 11863–11874

Yang Z, Zhu L, Wu Y, et al (2020) Gated channel transformation for visual recognition. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp 11794–11803

Yuan Y, Chen X, Wang J (2020) Object-contextual representations for semantic segmentation. In: Computer vision–ECCV 2020: 16th European conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part VI 16. Springer, pp 173–190

Zagoruyko S, Komodakis N (2016) Wide residual networks. arXiv preprint arXiv:1605.07146

Zeiler MD, Fergus R (2014) Visualizing and understanding convolutional networks. European conference on computer vision. Springer, Cham, pp 818–833

Acknowledgements

The authors acknowledge the financial support provided by the Fundamental Research Funds for the Provincial Universities of Zhejiang (Grant No. GK219909299001-015), the Natural Science Foundation of China (Grant No. 62206082), the National Undergraduate Training Program for Innovation and Entrepreneurship (Grant No. 202110336042) and the Planted talent plan (Grant No. 2022R407A002).

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

About this article

Cite this article

Cai, S., Wang, C., Ding, J. et al. FDAM: full-dimension attention module for deep convolutional neural networks. Int J Multimed Info Retr 11, 599–610 (2022). https://doi.org/10.1007/s13735-022-00248-3

DOI: https://doi.org/10.1007/s13735-022-00248-3