Abstract

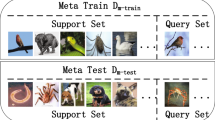

Few-shot learning aims to classify novel classes from extremely few labeled samples. Existing metric-learning-based approaches tend to employ off-the-shelf CNN models for feature extraction and conventional clustering algorithms for feature matching. These methods neglect the importance of image regions and may become trapped in over-fitting during feature clustering. In this work, we propose a novel MHA-WoML framework for few-shot learning, which adaptively focuses on semantically dominant regions and substantially relieves the over-fitting problem. Specifically, we first design a hierarchical multi-head attention (MHA) module, which consists of three functional heads (i.e., a rare head, a syntactic head and a positional head) with masks, to extract comprehensive image features and screen out invalid features. The MHA module performs better than current transformers in few-shot recognition. Then, we incorporate optimal transport theory into the Wasserstein distance and propose a Wasserstein-OT metric learning (WoML) module for category clustering. The WoML module focuses on computing an appropriately approximate barycenter, which avoids overly accurate sub-stage fitting that may threaten the global fitting and thus alleviates over-fitting during training. Experimental results show that our approach outperforms current state-of-the-art methods by about 3% in accuracy across four benchmark datasets: MiniImageNet, TieredImageNet, CIFAR-FS and CUB200.
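To make the optimal-transport idea behind category clustering more concrete, the following is a minimal sketch of entropic-regularized optimal transport solved with Sinkhorn iterations, used to softly assign query features to class centers. It is not the WoML module itself: the abstract does not specify the cost function, regularization, or barycenter update, so the squared Euclidean cost, the uniform marginals, the `eps` value, the toy data and the helper names `sinkhorn` and `squared_distance` are all assumptions made for illustration.

```python
import math


def sinkhorn(cost, row_mass, col_mass, eps=0.1, n_iters=200):
    """Entropic-regularized OT plan between two discrete distributions.

    cost[i][j] is the cost of moving mass from source i to target j.
    Returns a plan whose row sums approximate row_mass and whose
    column sums approximate col_mass.
    """
    n, m = len(cost), len(cost[0])
    # Gibbs kernel K = exp(-cost / eps).
    kernel = [[math.exp(-cost[i][j] / eps) for j in range(m)] for i in range(n)]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [row_mass[i] / sum(kernel[i][j] * v[j] for j in range(m))
             for i in range(n)]
        v = [col_mass[j] / sum(kernel[i][j] * u[i] for i in range(n))
             for j in range(m)]
    return [[u[i] * kernel[i][j] * v[j] for j in range(m)] for i in range(n)]


def squared_distance(a, b):
    """Squared Euclidean distance (an assumed cost, not taken from the paper)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


# Toy data (hypothetical): four 2-D query features and two class centers.
queries = [[0.0, 0.1], [0.2, 0.0], [1.0, 0.9], [0.9, 1.1]]
centers = [[0.0, 0.0], [1.0, 1.0]]

cost = [[squared_distance(q, c) for c in centers] for q in queries]
plan = sinkhorn(cost, row_mass=[0.25] * 4, col_mass=[0.5] * 2)

# Soft assignment: each query goes to the class that receives most of its mass.
labels = [max(range(len(centers)), key=lambda j: row[j]) for row in plan]
```

In this sketch the column marginals force each class to receive an equal share of the query mass, which is one simple way a transport-based assignment can differ from nearest-center matching. A smaller `eps` gives a sharper plan but can underflow in this naive form, so a log-domain variant would be needed for large costs.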

Data Availability Statement

All data included in this study are available upon request from the corresponding author.

Ethics declarations

Funding

This research was supported in part by the Ministry of Science and Technology of China under grant No. 2020AAA0108800. The authors have no financial or proprietary interests in any material discussed in this article.

Conflicts of Interest

The authors declare that they have no conflicts of interest, and this paper does not contain any studies with human participants or animals performed by any of the authors.

About this article

Cite this article

Yang, J., Jiang, J. & Guo, Y. MHA-WoML: Multi-head attention and Wasserstein-OT for few-shot learning. Int J Multimed Info Retr 11, 681–694 (2022). https://doi.org/10.1007/s13735-022-00254-5

DOI: https://doi.org/10.1007/s13735-022-00254-5