Abstract

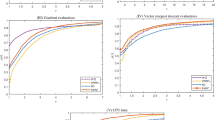

Conjugate gradient methods for vector optimization problems offer an alternative to scalarization techniques, one that does not require assigning weights to the individual objective functions. This paper proposes a hybrid conjugate gradient method defined as a convex combination of modified Liu–Storey and Dai–Yuan conjugate gradient methods. This approach guarantees a sufficient descent property for the vector search direction and satisfies the Dai–Liao vector conjugacy condition independent of any line search. Global convergence is established using the Wolfe line search without regular restart and assuming convexity on the objective functions. Numerical experiments illustrate the implementation of the proposed hybrid method and its effectiveness compared with the Liu–Storey and Dai–Yuan conjugate gradient methods.
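To make the idea of a convex combination concrete, the following is a minimal sketch for the single-objective case only, using the classical Liu–Storey and Dai–Yuan parameters rather than the modified vector-valued versions proposed in the paper. The function names `hybrid_beta` and `next_direction`, and the treatment of the mixing weight `theta` as a user-supplied number in [0, 1], are assumptions made for illustration; the abstract does not state how the weight is chosen.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def hybrid_beta(g_new, g_old, d_old, theta):
    """Convex combination of the classical LS and DY parameters.

    Assumption: theta is supplied by the caller; the paper's own rule
    for choosing the weight is not given in the abstract.
    """
    if not 0.0 <= theta <= 1.0:
        raise ValueError("theta must lie in [0, 1]")
    # Gradient difference y_k = g_{k+1} - g_k
    y = [a - b for a, b in zip(g_new, g_old)]
    # Classical Liu-Storey parameter: g_{k+1}^T y_k / (-d_k^T g_k)
    beta_ls = dot(g_new, y) / (-dot(d_old, g_old))
    # Classical Dai-Yuan parameter: ||g_{k+1}||^2 / (d_k^T y_k)
    beta_dy = dot(g_new, g_new) / dot(d_old, y)
    return (1.0 - theta) * beta_ls + theta * beta_dy


def next_direction(g_new, d_old, beta):
    """Conjugate gradient update d_{k+1} = -g_{k+1} + beta * d_k."""
    return [-g + beta * d for g, d in zip(g_new, d_old)]


# Illustrative data (hypothetical): previous direction is steepest descent.
g_old = [2.0, 0.0]
d_old = [-2.0, 0.0]
g_new = [1.0, 1.0]

beta = hybrid_beta(g_new, g_old, d_old, theta=0.5)
d_new = next_direction(g_new, d_old, beta)
```

With `theta = 0` the sketch reduces to the Liu–Storey update and with `theta = 1` to the Dai–Yuan update, which is the sense in which a hybrid of this kind interpolates between the two methods.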

Data availability

Not applicable.

Acknowledgements

The authors gratefully acknowledge the financial support provided by the Center of Excellence in Theoretical and Computational Science (TaCS-CoE), King Mongkut’s University of Technology Thonburi (KMUTT). This research was funded by the NSRF through the Program Management Unit for Human Resources & Institutional Development, Research, and Innovation [grant number B41G67002]. The first author also acknowledges the support provided by the Petchra Pra Jom Klao PhD scholarship of KMUTT (Contract No. 23/2565). In addition, we sincerely thank the anonymous reviewers and the associate editor for their invaluable feedback, which has greatly improved the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interest.

About this article

Cite this article

Yahaya, J., Kumam, P. New hybrid conjugate gradient algorithm for vector optimization problems. Comp. Appl. Math. 44, 163 (2025). https://doi.org/10.1007/s40314-025-03101-5

DOI: https://doi.org/10.1007/s40314-025-03101-5