Abstract

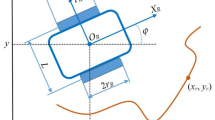

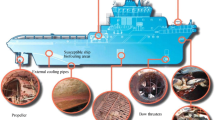

Working in collaboration with an autonomous underwater vehicle (AUV) is a new working method for divers. Having the diver give instructions through gestures is a simple and effective mode of underwater human–robot interaction (HRI). In this paper, a gesture tracking method for underwater human–robot interaction based on fuzzy control is proposed. First, four object recognition algorithms are compared on the gesture recognition task. YOLOv4-tiny showed very high performance and is used as the gesture area recognition algorithm. We then propose a model based on a Siamese network for gesture classification. Finally, a gesture tracking method based on fuzzy control is proposed, which analyzes the image from the AUV's front camera to establish a 3D fuzzy rule set. This method enables the AUV to regulate itself and keep the diver's gestures in the camera view. The experimental results demonstrate the efficiency of the proposed method.
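The tracking idea in the abstract can be illustrated with a minimal sketch. It is not the rule set from the paper: the three inputs (horizontal offset, vertical offset, and relative size of the detected gesture box), the three linguistic terms, the singleton outputs, the sign conventions, and the names `tri`, `fuzzy_axis`, and `track_command` are all assumptions chosen for illustration, and each axis is handled independently for simplicity.

```python
def tri(x, a, b, c):
    """Triangular membership function with feet at a and c and peak at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def fuzzy_axis(error):
    """Map a normalized error in [-1, 1] to a command in [-1, 1].

    Zero-order Sugeno inference with three hypothetical terms
    (negative, zero, positive) and singleton outputs.
    """
    e = max(-1.0, min(1.0, error))
    weights = (
        tri(e, -2.0, -1.0, 0.0),  # negative
        tri(e, -1.0, 0.0, 1.0),   # zero
        tri(e, 0.0, 1.0, 2.0),    # positive
    )
    outputs = (-1.0, 0.0, 1.0)  # assumed singleton consequents
    # The three terms always sum to 1 on [-1, 1], so total is never zero.
    total = sum(weights)
    return sum(w * o for w, o in zip(weights, outputs)) / total


def track_command(box, frame_w, frame_h, target_area=0.1):
    """Turn a gesture bounding box (x, y, w, h) in pixels into AUV commands.

    Assumed conventions: yaw > 0 turns right, heave > 0 descends
    (image y grows downward), surge > 0 moves toward the diver.
    """
    x, y, w, h = box
    ex = (x + w / 2 - frame_w / 2) / (frame_w / 2)
    ey = (y + h / 2 - frame_h / 2) / (frame_h / 2)
    area = (w * h) / (frame_w * frame_h)
    ez = (target_area - area) / target_area  # box too small -> positive
    return {
        "yaw": fuzzy_axis(ex),
        "heave": fuzzy_axis(ey),
        "surge": fuzzy_axis(ez),
    }


if __name__ == "__main__":
    # Gesture box to the right of center in a 640x480 frame.
    print(track_command((400, 160, 192, 160), 640, 480))
```

With these particular membership functions the mapping is close to proportional control; a practical rule base would typically use more terms and coupled rules across the three inputs, which is presumably what the 3D rule set in the paper provides.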

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grants 51679105, 51809112, and 51939003, and in part by the Science-Technology Development Plan Project of Jilin Province of China under Grants 20170101081JC, 20190303006SF, and 20190302107GX.

Cite this article

Jiang, Y., Zhao, M., Wang, C. et al. A Method for Underwater Human–Robot Interaction Based on Gestures Tracking with Fuzzy Control. Int. J. Fuzzy Syst. 23, 2170–2181 (2021). https://doi.org/10.1007/s40815-021-01086-x

DOI: https://doi.org/10.1007/s40815-021-01086-x