Abstract

Classification of data with imbalanced characteristics is an essential research problem, as the data from most real-world applications follow non-uniform class proportions. Solutions to class imbalance depend on the relative importance of individual data points. Directed data sampling and data-level cost-sensitive methods use this importance information to sample from the dataset so that the essential data points are retained and possibly oversampled. In this paper, we propose a novel topic modeling-based weighting framework that assigns importance to the data points in an imbalanced dataset based on the topic posterior probabilities estimated using the latent Dirichlet allocation (LDA) and probabilistic latent semantic analysis (PLSA) models. We also propose TOMBoost, a topic-modeled boosting scheme built on this weighting framework and tuned specifically for learning with class imbalance. In an empirical study spanning 40 datasets, we show that TOMBoost, on average, wins or ties on 37 datasets against other boosting and sampling methods. We also show empirically that TOMBoost minimizes model bias faster than other popular boosting methods for class imbalance learning.
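The abstract states that importance weights are derived from topic posterior probabilities but does not give the exact mapping. The sketch below is one hypothetical instantiation under our own assumptions, not the weighting used in TOMBoost. Given a document-topic matrix `theta` (assumed to come from an already-fitted LDA or PLSA model, with rows summing to one), it estimates \(P(c \mid k) \propto \sum_i \theta_{ik}\,\mathbb{1}[y_i = c]\), approximates each point's probability of its own label as \(\hat{p}_i = \sum_k \theta_{ik}\,P(y_i \mid k)\), and sets \(w_i \propto 1 - \hat{p}_i\), so that points whose topic mixture is dominated by other classes receive more weight. The function name `topic_posterior_weights` and the \(1 - \hat{p}_i\) rule are illustrative assumptions.

```python
import numpy as np


def topic_posterior_weights(theta, y, eps=1e-12):
    """Hypothetical importance weights from topic posteriors (illustrative only).

    theta: (n_samples, n_topics) array; row i approximates P(topic k | x_i),
           e.g. the document-topic output of a fitted LDA or PLSA model.
    y:     (n_samples,) integer class labels in 0..n_classes-1.
    Returns a weight vector that sums to one.
    """
    theta = np.asarray(theta, dtype=float)
    y = np.asarray(y, dtype=int)
    n_classes = int(y.max()) + 1
    onehot = np.eye(n_classes)[y]  # (n_samples, n_classes)

    # P(class c | topic k): share of topic k's posterior mass held by class c.
    class_topic = theta.T @ onehot  # (n_topics, n_classes)
    class_topic /= np.maximum(class_topic.sum(axis=1, keepdims=True), eps)

    # Approximate P(y_i | x_i) by mixing the per-topic class distributions.
    p_class = theta @ class_topic  # (n_samples, n_classes)
    p_own = p_class[np.arange(len(y)), y]

    # Assumed rule: points whose topics favor other classes get more weight.
    raw = 1.0 - p_own + eps
    return raw / raw.sum()


if __name__ == "__main__":
    # Toy posteriors for 4 points over 2 topics; point 3 is labelled class 1
    # but its topic mixture resembles that of class 0.
    theta = np.array([[0.9, 0.1], [0.8, 0.2], [0.2, 0.8], [0.7, 0.3]])
    y = np.array([0, 0, 1, 1])
    print(topic_posterior_weights(theta, y).round(3))
```

In the toy example, the class-1 point whose topics resemble class 0 receives the largest weight. Under the same assumptions, such a vector could serve as the initial distribution of a boosting learner, for example through the `sample_weight` argument of scikit-learn's `AdaBoostClassifier.fit`; TOMBoost's own per-round weight update is not reproduced here.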

References

Kubat, M., Holte, R.C., Matwin, S.: Machine learning for the detection of oil spills in satellite radar images. Mach. Learn. 30(2–3), 195–215 (1998)

Branco, P., Torgo, L., Ribeiro, R.P.: A survey of predictive modeling on imbalanced domains. ACM Comput. Surv. 49(2), 1–50 (2016)

Guo, H., et al.: Learning from class-imbalanced data: review of methods and applications. Expert Syst. Appl. 73, 220–239 (2017). https://doi.org/10.1016/j.eswa.2016.12.035

Leevy, J.L., Khoshgoftaar, T.M., Bauder, R.A., Seliya, N.: A survey on addressing high-class imbalance in big data. J. Big Data 5(1), 42 (2018). https://doi.org/10.1186/s40537-018-0151-6

Johnson, J.M., Khoshgoftaar, T.M.: Survey on deep learning with class imbalance. J. Big Data (2019). https://doi.org/10.1186/s40537-019-0192-5

Mease, D., Wyner, A., Buja, A.: Boosted classification trees and class probability/quantile estimation. J. Mach. Learn. Res. 8, 409–439 (2007)

Lopez, V., Fernandez, A., García, S., Palade, V., Herrera, F.: An insight into classification with imbalanced data: empirical results and current trends on using data intrinsic characteristics. Inf. Sci. 250, 113–141 (2013). https://doi.org/10.1016/j.ins.2013.07.007

Lopez, V., Fernandez, A., Moreno-Torres, J.G., Herrera, F.: Analysis of preprocessing vs. cost-sensitive learning for imbalanced classification. Open problems on intrinsic data characteristics. Expert Syst. Appl. 39(7), 6585–6608 (2012). https://doi.org/10.1016/j.eswa.2011.12.043

He, H., Ma, Y.: Imbalanced Learning: Foundations, Algorithms, and Applications, 1st edn. Wiley-IEEE Press (2013)

Guo, H., et al.: Learning from class-imbalanced data: review of methods and applications. Expert Syst. Appl. 73, 220–239 (2017)

Agrawal, A., Viktor, H.L., Paquet, E.: SCUT: multi-class imbalanced data classification using SMOTE and cluster-based undersampling. In: Fred, A.L.N., Dietz, J.L.G., Aveiro, D., Liu, K., Filipe, J. (eds.) KDIR, pp. 226–234. SciTePress (2015)

Hofmann, T.: Probabilistic Latent Semantic Analysis, pp. 289–296. Morgan Kaufmann Publishers Inc. (1999)

Kim, Y.-M., Pessiot, J.-F., Amini, M.-R., Gallinari, P.: An extension of PLSA for document clustering. In: Shanahan, J.G., et al. (eds.) CIKM, pp. 1345–1346. ACM (2008). http://dblp.uni-trier.de/db/conf/cikm/cikm2008.html#KimPAG08

Wang, L., Li, X., Tu, Z., Jia, J.: Discriminative clustering via generative feature mapping, pp. 1–7 (2012). https://www.aaai.org/ocs/index.php/AAAI/AAAI12/paper/view/5034

Santhosh, K.K., Dogra, D.P., Roy, P.P.: Temporal unknown incremental clustering model for analysis of traffic surveillance videos. IEEE Trans. Intell. Transp. Syst. 20(5), 1762–1773 (2019). https://doi.org/10.1109/TITS.2018.2834958

Griffiths, A.J., Gelbart, W.M., Lewontin, R.C., Miller, J.H.: Modern Genetic Analysis: Integrating Genes and Genomes, vol. 1. Macmillan (2002)

Pritchard, J.K., Stephens, M., Donnelly, P.: Inference of population structure using multilocus genotype data. Genetics 155, 945–959 (2000)

Santhosh, K.K., Dogra, D.P., Roy, P.P., Chaudhuri, B.B.: Trajectory-based scene understanding using Dirichlet process mixture model. IEEE Trans. Cybern. 51(8), 4148–4161 (2021). https://doi.org/10.1109/TCYB.2019.2931139

Kennedy, T.F., et al.: Topic Models for RFID Data Modeling and Localization, pp. 1438–1446 (2017)

Chen, X., Huang, K., Jiang, H.: Detecting changes in the spatiotemporal pattern of bike sharing: a change-point topic model. IEEE Trans. Intell. Transp. Syst. (2022). https://doi.org/10.1109/TITS.2022.3161623

Freund, Y., Schapire, R.E.: A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 55(1), 119–139 (1997). https://doi.org/10.1006/jcss.1997.1504

Sun, Y., Kamel, M.S., Wang, Y.: Boosting for learning multiple classes with imbalanced class distribution, pp. 592–602. IEEE Computer Society (2006). http://dblp.uni-trier.de/db/conf/icdm/icdm2006.html#SunKW06

Schapire, R.E.: Boosting: Foundations and Algorithms (2013)

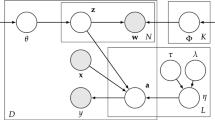

Blei, D., Ng, A., Jordan, M.: Latent Dirichlet allocation. J. Mach. Learn. Res. 3, 993–1022 (2003)

Drummond, C., Holte, R.: C4.5, class imbalance, and cost sensitivity: why under-sampling beats over-sampling, pp. 1–8 (2003). http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.68.6858&rep=rep1&type=pdf

Holte, R.C., Acker, L., Porter, B.W.: Concept learning and the problem of small disjuncts. In: Sridharan, N.S. (ed.) IJCAI, pp. 813–818. Morgan Kaufmann (1989). http://dblp.uni-trier.de/db/conf/ijcai/ijcai89.html#HolteAP89

Chawla, N., Bowyer, K., Hall, L., Kegelmeyer, W.: SMOTE: synthetic minority over-sampling technique. J. Artif. Intell. Res. 16, 321–357 (2002)

Han, H., Wang, W., Mao, B.: Borderline-SMOTE: a new over-sampling method in imbalanced data sets learning. In: Huang, D.-S., Zhang, X.-P., Huang, G.-B. (eds.) ICIC (1), Lecture Notes in Computer Science, vol. 3644, pp. 878–887. Springer (2005). http://dblp.uni-trier.de/db/conf/icic/icic2005-1.html#HanWM05

Barua, S., Islam, M.M., Yao, X., Murase, K.: MWMOTE-majority weighted minority oversampling technique for imbalanced data set learning. IEEE Trans. Knowl. Data Eng. 26(2), 405–425 (2014)

Chen, E., Lin, Y., Xiong, H., Luo, Q., Ma, H.: Exploiting probabilistic topic models to improve text categorization under class imbalance. Inf. Process. Manag. 47(2), 202–214 (2011). https://doi.org/10.1016/j.ipm.2010.07.003

Barredo Arrieta, A., et al.: Explainable artificial intelligence (XAI): concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fus. (2020). https://doi.org/10.1016/j.inffus.2019.12.012, arXiv:1910.10045

Bellinger, C., Drummond, C., Japkowicz, N.: Manifold-based synthetic oversampling with manifold conformance estimation. Mach. Learn. 107(3), 605–637 (2018). https://doi.org/10.1007/s10994-017-5670-4

Santhiappan, S., Chelladurai, J., Ravindran, B.: A novel topic modeling based weighting framework for class imbalance learning. In: CoDS-COMAD’18, pp. 20–29. ACM, New York (2018). https://doi.org/10.1145/3152494.3152496

Peng, Y.: Adaptive sampling with optimal cost for class-imbalance learning. In: Bonet, B., Koenig, S. (eds.) AAAI, pp. 2921–2927. AAAI Press (2015). http://dblp.uni-trier.de/db/conf/aaai/aaai2015.html#Peng15

Nekooeimehr, I., Lai-Yuen, S.K.: Adaptive semi-unsupervised weighted oversampling (A-SUWO) for imbalanced datasets. Expert Syst. Appl. 46, 405–416 (2016)

Mustafa, G., Niu, Z., Yousif, A., Tarus, J.: Distribution based ensemble for class imbalance learning, pp. 5–10 (2015)

Galar, M., Fernandez, A., Barrenechea, E., Bustince, H., Herrera, F.: A review on ensembles for the class imbalance problem: bagging-, boosting-, and hybrid-based approaches. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 42(4), 463–484 (2012). https://doi.org/10.1109/TSMCC.2011.2161285

Chawla, N.V., Lazarevic, A., Hall, L.O., Bowyer, K.W.: SMOTEBoost: improving prediction of the minority class in boosting. In: Lavrac, N., Gamberger, D., Blockeel, H., Todorovski, L. (eds.) PKDD, Lecture Notes in Computer Science, vol. 2838, pp. 107–119. Springer (2003). http://dblp.uni-trier.de/db/conf/pkdd/pkdd2003.html#ChawlaLHB03

Guo, H., Viktor, H.L.: Learning from imbalanced data sets with boosting and data generation: the DataBoost-IM approach. SIGKDD Explor. 6(1), 30–39 (2004). https://doi.org/10.1145/1007730.1007736

Seiffert, C., Khoshgoftaar, T.M., Hulse, J.V., Napolitano, A.: RUSBoost: a hybrid approach to alleviating class imbalance. IEEE Trans. Syst. Man Cybern. Part A 40(1), 185–197 (2010)

Rayhan, F., et al.: CUSBoost: cluster-based under-sampling with boosting for imbalanced classification. CoRR (2017). arXiv:1712.04356

Lin, W.-C., Tsai, C.-F., Hu, Y.-H., Jhang, J.-S.: Clustering-based undersampling in class-imbalanced data. Inf. Sci. 409–410, 17–26 (2017). https://doi.org/10.1016/j.ins.2017.05.008

Lingchi, C., Xiaoheng, D., Hailan, S., Congxu, Z., Le, C.: DyCusBoost: AdaBoost-based imbalanced learning using dynamic clustering and undersampling, pp. 208–215 (2018)

Ge, J.-F., Luo, Y.-P.: A comprehensive study for asymmetric AdaBoost and its application in object detection. Acta Automatica Sinica 35(11), 1403–1409 (2009)

Fan, W., Stolfo, S.J., Zhang, J., Chan, P.K.: AdaCost: misclassification cost-sensitive boosting. In: Bratko, I., Dzeroski, S. (eds.) ICML, pp. 97–105. Morgan Kaufmann (1999). http://dblp.uni-trier.de/db/conf/icml/icml1999.html#FanSZC99

Domingos, P.M.: MetaCost: a general method for making classifiers cost-sensitive. In: Fayyad, U.M., Chaudhuri, S., Madigan, D. (eds.) KDD, pp. 155–164. ACM (1999). http://dblp.uni-trier.de/db/conf/kdd/kdd99.html#Domingos99

Zadrozny, B., Langford, J., Abe, N.: Cost-sensitive learning by cost-proportionate example weighting, p. 435. IEEE Computer Society (2003). http://dblp.uni-trier.de/db/conf/icdm/icdm2003.html#ZadroznyLA03

Yang, Y., Xiao, P., Cheng, Y., Liu, W., Huang, Z.: Ensemble strategy for hard classifying samples in class-imbalanced data set, pp. 170–175 (2018)

Chen, T., Guestrin, C.: XGBoost: a scalable tree boosting system. CoRR (2016). arXiv:1603.02754

Gong, J., Kim, H.: RHSBoost: improving classification performance in imbalance data. Comput. Stat. Data Anal. 111, 1–13 (2017). https://doi.org/10.1016/j.csda.2017.01.005

Lunardon, N., Menardi, G., Torelli, N.: ROSE: a package for binary imbalanced learning. R J. 6(1), 82–92 (2014)

Lu, W., Li, Z., Chu, J.: Adaptive ensemble undersampling-boost: a novel learning framework for imbalanced data. J. Syst. Softw. 132, 272–282 (2017). https://doi.org/10.1016/j.jss.2017.07.006

Tsai, C.-F., Lin, W.-C., Hu, Y.-H., Yao, G.-T.: Under-sampling class imbalanced datasets by combining clustering analysis and instance selection. Inf. Sci. 477, 47–54 (2019). https://doi.org/10.1016/j.ins.2018.10.029

Sun, L., Song, J., Hua, C., Shen, C., Song, M.: Value-aware resampling and loss for imbalanced classification. In: CSAE’18, pp. 1–6. ACM, New York (2018). https://doi.org/10.1145/3207677.3278084

Hofmann, T.: Unsupervised Learning from Dyadic Data, pp. 466–472. MIT Press (1998)

Sakai, Y., Iwata, K.: Extremal relations between Shannon entropy and \(\ell_\alpha\)-norm, pp. 428–432 (2016)

Blei, D.M.: Introduction to probabilistic topic models. Commun. ACM (2011). http://www.cs.princeton.edu/~blei/papers/Blei2011.pdf

Xiao, H., Stibor, T.: Efficient collapsed Gibbs sampling for latent Dirichlet allocation. J. Mach. Learn. Res. Proc. Track 13, 63–78 (2010)

Phan, X.-H., Nguyen, C.-T.: gibbslda (2008). http://gibbslda.sourceforge.net/

Blei, D.M.: lda-c (2003). http://www.cs.princeton.edu/~blei/lda-c/

Leães, A., Fernandes, P., Lopes, L., Assunção, J.: Classifying with AdaBoost.M1: the training error threshold myth, pp. 1–7 (2017). https://aaai.org/ocs/index.php/FLAIRS/FLAIRS17/paper/view/15498

He, H., Bai, Y., Garcia, E.A., Li, S.: ADASYN: adaptive synthetic sampling approach for imbalanced learning, pp. 1322–1328. IEEE (2008). http://dblp.uni-trier.de/db/conf/ijcnn/ijcnn2008.html#HeBGL08

Yen, S.-J., Lee, Y.-S.: Cluster-based under-sampling approaches for imbalanced data distributions. Expert Syst. Appl. 36, 5718–5727 (2009). https://doi.org/10.1016/j.eswa.2008.06.108

Hart, P.E.: The condensed nearest neighbor rule. IEEE Trans. Inf. Theory 14, 515–516 (1968)

Smith, M.R., Martinez, T., Giraud-Carrier, C.: An instance level analysis of data complexity. Mach. Learn. 95(2), 225–256 (2014). https://doi.org/10.1007/s10994-013-5422-z

Last, F., Douzas, G., Bação, F.: Oversampling for imbalanced learning based on k-means and SMOTE. CoRR (2017). arXiv:1711.00837

Zhang, J., Mani, I.: KNN Approach to Unbalanced Data Distributions: A Case Study Involving Information Extraction, pp. 1–7 (2003)

Kubat, M., Matwin, S.: Addressing the curse of imbalanced training sets: one-sided selection. In: Fourteenth International Conference on Machine Learning (1997)

Batista, G., Bazzan, A., Monard, M.-C.: Balancing training data for automated annotation of keywords: a case study, pp. 10–18 (2003)

Tomek, I.: Two modifications of CNN. IEEE Trans. Syst. Man Cybern. SMC-6(11), 769–772 (1976)

Zhao, W., et al.: A heuristic approach to determine an appropriate number of topics in topic modeling. BMC Bioinform. 16(Suppl 13), S8 (2015). https://doi.org/10.1186/1471-2105-16-S13-S8

Terragni, S., Fersini, E., Galuzzi, B. G., Tropeano, P., Candelieri, A.: OCTIS: comparing and optimizing topic models is simple!, pp. 263–270. Association for Computational Linguistics, Online (2021). https://aclanthology.org/2021.eacl-demos.31

Terragni, S., Fersini, E.: OCTIS 2.0: optimizing and comparing topic models in Italian is even simpler! In: Fersini, E., Passarotti, M., Patti, V. (eds.) Proceedings of the Eighth Italian Conference on Computational Linguistics, CLiC-it 2021, Milan, Italy, January 26–28, 2022, CEUR Workshop Proceedings, vol. 3033. CEUR-WS.org (2021). http://ceur-ws.org/Vol-3033/paper55.pdf

Lichman, M.: UCI Machine Learning Repository (2013). http://archive.ics.uci.edu/ml

Blagus, R., Lusa, L.: SMOTE for high-dimensional class-imbalanced data. BMC Bioinform. 14, 106 (2013)

Acknowledgements

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Santhiappan, S., Chelladurai, J. & Ravindran, B. TOMBoost: a topic modeling based boosting approach for learning with class imbalance. Int J Data Sci Anal 17, 389–409 (2024). https://doi.org/10.1007/s41060-022-00363-8

DOI: https://doi.org/10.1007/s41060-022-00363-8