Abstract

One of the first steps in many text-based social science studies is to retrieve documents that are relevant for an analysis from large corpora of otherwise irrelevant documents. The conventional approach in social science to address this retrieval task is to apply a set of keywords and to consider those documents to be relevant that contain at least one of the keywords. But the application of incomplete keyword lists has a high risk of drawing biased inferences. More complex and costly methods such as query expansion techniques, topic model-based classification rules, and active as well as passive supervised learning could have the potential to more accurately separate relevant from irrelevant documents and thereby reduce the potential size of bias. Yet, whether applying these more expensive approaches increases retrieval performance compared to keyword lists at all, and if so, by how much, is unclear as a comparison of these approaches is lacking. This study closes this gap by comparing these methods across three retrieval tasks associated with a data set of German tweets (Linder in SSRN, 2017. https://doi.org/10.2139/ssrn.3026393), the Social Bias Inference Corpus (SBIC) (Sap et al. in Social bias frames: reasoning about social and power implications of language. In: Jurafsky et al. (eds) Proceedings of the 58th annual meeting of the association for computational linguistics. Association for Computational Linguistics, p 5477–5490, 2020. https://doi.org/10.18653/v1/2020.aclmain.486), and the Reuters-21578 corpus (Lewis in Reuters-21578 (Distribution 1.0). [Data set], 1997. http://www.daviddlewis.com/resources/testcollections/reuters21578/). Results show that query expansion techniques and topic model-based classification rules in most studied settings tend to decrease rather than increase retrieval performance. Active supervised learning, however, if applied on a not too small set of labeled training instances (e.g. 1000 documents), reaches a substantially higher retrieval performance than keyword lists.

Similar content being viewed by others

Data availibility

The code that supports the findings of this study are available from https://doi.org/10.6084/m9.figshare.19699840.

Notes

A corpus is a set of documents. A document is the unit of observation. A document can be a very short to a very long text (e.g. a sentence, a speech, a newspaper article). Here, the term corpus refers to the set of documents a researcher has collected and from which he or she then seeks to retrieve the relevant documents.

Note that the vocabulary used in this study often makes use of the term retrieval. As this study focuses on contexts in which the task is to retrieve relevant documents from corpora of otherwise irrelevant documents, the usage of the term retrieval seems adequate. Yet, the task examined in this study is different from the task that is typically examined in document retrieval. Document retrieval is a subfield of information retrieval in which the task usually is to rank documents according to their relevance for an explicitly stated user query [74, p. 14, 16]. In this study, in contrast, the aim was to classify—rather than rank—documents as being relevant vs. not relevant. Moreover, not all of the approaches evaluated here require the query, that states the information need, to be expressed explicitly in the form of keywords or phrases.

More specifically: Selection biases occur (1) if the mechanism that selects observational units for an analysis from a larger population of units for which inferences are to be made is correlated with the units’ values on the dependent variable, (2) if the assignment of units to the values of the explanatory variables is correlated with the units’ values on the dependent variable, or (3) if the selection rule or the assignment rule are correlated with the size of the causal effect that units will experience [63, p. 115–116, 124–125, 138–139]. Here, the focus is on the first mentioned type of selection bias. If a study seeks to make descriptive rather than causal inferences, a selection bias is produced if the rule for selecting observational units for analysis is correlated with the variable of interest (rather than the dependent variable) [63, p. 135]. For the sake of simplicity, the following illustrations focus on studies whose goal is descriptive rather than causal inference. Accordingly, when referring to the definition of selection bias, here the expressions ‘the outcome variable’ or ‘the variable of interest’ rather than ‘the dependent variable’ are used.

Formal definitions of the performance metrics of recall, precision, and the \(F_1\)-Score are given in Appendix 1.

Such a comprehensive list of keywords implies low precision and thus would come with another problem: a large share of false positives. Nevertheless, a comprehensive list would imply perfect recall and thus would preclude selection bias due to false negatives.

There are several likely reasons for the problems human researchers encounter when trying to set up an extensive list of keywords. First, language is highly varied [39]. There are numerous ways to refer to the same entity—and entities also can be referred to indirectly without the usage of proper names or well-defined denominations [6, p. 167]. Especially if the entity of interest is abstract and/or not easily denominated, the universe of terms and expressions referring to the entity is likely to be large and not easily to be captured [6, p. 167]. Such entities are abundant in social science. Typical entities of interest, for example, are policies (e.g. the policies implemented to address the COVID-19 pandemic), concepts (e.g. European integration or homophobia), and occurrences (e.g. the 2015 European refugee crisis or the 2021 United States Capitol riot). A second likely reason for the human inability to come up with a comprehensive keyword list are inhibitory processes [13; 61, p. 974]. After a set of concepts has been retrieved from memory, inhibitory processes suppress the representation of related, non-retrieved concepts in memory and thereby reduce the probability of those concepts to be recovered [13]. One method that has the potential to alleviate this second aspect are query expansion methods.

If the elements of the term vectors are non-negative, e.g. because they indicate the (weighted) frequency with which a term occurs across the documents in the corpus, then the angle between the vectors will be in the range \([0^{\circ }, 90^{\circ }]\) and cosine similarity will be in the range [0, 1]. If, on the other hand, elements of term representation vectors can become negative, then the vectors can also point into opposing directions. In the extreme: If the vectors point into diametrically opposing directions, then \(cos(\theta ) = -1\).

Note that beside the similarity-based automatic query expansion approaches discussed so far, there are further expansion methods. Most prominently there are query language modeling and operations based on relevance feedback from the user or pseudo-relevant feedback [67; 74, p. 177–188; 4, p. 1709-1713].

The most likely terms are the terms with the highest occurrence probabilities, \(\beta _{ku}\), for a given topic k. The most exclusive terms refer to highly discriminating terms whose probability to occur is high for topic k but low for all or most other topics. Exclusivity can be measured as: \(exclusivity_{ku} = \beta _{ku}/\sum _{j=1}^K \beta _{ju}\) (see for example [105], p. 12).

A coherent topic referring to the entity of interest would have high occurrence probabilities for frequently co-occurring terms that refer to the entity [106, p. 1069; 105, p. 10]. It would be clearly about the entity of interest rather than being a fuzzy topic without a nameable content. An exclusive topic would solely refer to the entity of interest and would not refer to any other entities.

Note that research suggests that it is rather the number of training examples in the positive relevant class than the number of all documents in the training set that affects the amount of information provided to the learning method [132].

Beside these random resampling techniques mentioned here, there are also methods that perform oversampling or undersampling in an informed way; e.g. based on distance criteria (see [24, p. 23–24]).

Note that the outlined relationship between cost ratios and over- or undersampling rates only holds if the threshold at which the classifier considers an instance to fall into the positive rather than the negative class is at \(p = 0.5\) [41, p. 975]. Note furthermore that although it would be good practice for resampling rates to reflect an underlying distribution of misclassification costs as specified in the cost matrix, resampling with rates reflecting misclassification costs will not yield the same results as incorporating misclassification costs into the learning process [24, p. 36]. One reason, for example, is that in random undersampling instances are removed entirely [24, p. 36]. For information on the relationship between oversampling/undersampling, cost-sensitive learning, and domain adaptation see Kouw and Loog [64, p. 4–5, 7].

Ideally, a single instance is selected and labeled in each iteration [70, p. 4]. Yet, re-training a model often is costly and time-consuming. An economic alternative is batch-mode active learning [117, p. 35]. Here a batch of instances is selected and labeled in each iteration [117, p. 35]. When selecting a batch of instances, there is the question of which instances to select. Selecting the K most informative instances is one strategy that, however, ignores the homogeneity of the selected instances [117, p. 35]. Alternative approaches that seek to increase the heterogeneity among the selected instances have been developed (see [117], p. 35).

Note that in multi-class classification tasks it is less straight forward to operationalize uncertainty. Here one can distinguish between least confident sampling, margin sampling, and entropy-based sampling (for precise definitions, see [117], p. 12–13). Moreover, the usage of such a definition of uncertainty and informativeness only is possible for learning methods that return predicted probabilities [117, p. 12]. For methods that do not, other uncertainty-based sampling strategies have been developed (see [117], p. 14–15). With regard to SVMs, Tong and Koller [124] have introduced three theoretically motivated query strategies. In their Simple Margin strategy, the data point that is closest to the hyperplane is selected to be labeled next [124, p. 53–54].

The employed R packages are data.table [38], dplyr [138], facetscales [88], ggplot2 [136], ggridges [140], lsa [139], plot3D [120], quanteda [17], RcppParallel [1], rstudioapi [126], stm [105], stringr [137], text2vec [116], and xtable [31]. The used Python packages and libraries are Beautiful Soup [102], gdown [59], imbalanced-learn [68], matplotlib [56], NumPy [87], mittens [35], pandas [76], seaborn [77], scikit-learn [90], PyTorch [89], watermark [98], and HuggingFace’s Transformers [141]. If a GPU was used, an NVIDIA Tesla P100-PCIE-16GB was employed.

For a detailed elaboration about the exact composition of the SBIC see Sap et al. [110, p. 5480]

Note that each post was annotated by three independent coders and that the data shared by Sap et al. [110] lists each annotation separately. Here the SBIC is preprocessed such that the post is considered to be offensive toward a group category if at least on annotator indicated that a group falling into this category was targeted.

The documents are preprocessed by tokenization into unigrams, lowercasing, removing terms that occur in less than 5 documents or less than five times throughout the corpus, and applying a Boolean weighting on the entries of the document-feature matrix such that a 1 signals the occurrence of a term in a document and a 0 indicates the absence of the term in a document.

Note that the keyword lists comprising empirically highly predictive terms are not only applied on the corpora to evaluate the retrieval performance of keyword lists, but also form the basis for query expansion (see Section 4.2.2). The query expansion technique makes use of GloVe word embeddings [91] trained on the local corpora at hand and also makes use of externally obtained GloVe word embeddings trained on large global corpora. In the case of the locally trained word embeddings there is a learned word embedding for each predictive term. Thus, the set of extracted highly predictive terms can be directly used as starting terms for query expansion. In the case of the globally pretrained word embeddings, however, not all of the highly predictive terms have a corresponding global word embedding. Hence, for the globally pretrained embeddings the 50 most predictive terms for which a globally pretrained word embedding is available are extracted. If a predictive term has no corresponding global embedding, the set of extracted predictive terms is enlarged with the next most predictive term until there are 50 extracted terms. Consequently, in Tables 6, 7, and 8 in Appendix 7 for each corpus two lists of the most predictive features are shown. Moreover, for the evaluation of the initial keyword lists of 10 predictive keywords, the local keyword lists have to be used because the global keyword lists have been adapted for the purposes of query expansion on the global word embedding space.

The embeddings can be downloaded from https://nlp.stanford.edu/projects/glove/. GloVe embeddings here are used because they tend to be frequently employed in social science [107, p. 104].

The embeddings can be downloaded from https://deepset.ai/german-word-embeddings.

For example, in a topic model with \(K=15\) topics, there are 15 ways to select one relevant topic from 15 topics (namely: the first, the second, ..., and the 15th); and there are \(\left( {\begin{array}{c}15\\ 2\end{array}}\right) = 105\) ways of choosing two relevant topics from the set of 15 topics, and there are \(\left( {\begin{array}{c}15\\ 3\end{array}}\right) = 455\) ways to pick three topics from 15 topics.

Transfer learning with deep neural networks has sparked substantive enhancements in prediction performances across the field of NLP and beyond and now constitutes the foundation of modern NLP [22]. Yet this mode of learning also comes with limitations. One issue is that the models tend to learn the representational biases that are reflected in the texts on which they are pretrained on [22, p. 129–131]. For an introduction to transfer learning with Transformer-based language representation models see Wankmüller [134]. For a detailed discussion of the potentials and limitations of the usage of pretrained deep neural networks see Bommasani et al. [22].

A too high \(\xi\), however, at times may lead to a classification rule in which none of the documents is assigned to the positive relevant class—thereby producing an undefined value for precision and the \(F_1\)-Score (here visualized by 0).

Note that the x-axis denotes the number of unique labeled instances in set \(\mathcal {I}\). As in passive learning with random oversampling the documents from the relevant minority class in set \(\mathcal {I}\) are randomly resampled with replacement to then form the training set on which the model is trained, in passive learning the size of the training set is larger than the size of set \(\mathcal {I}\). Yet, the size of \(\mathcal {I}\) indicates the number of unique documents on which training is performed and—as only unique documents have to be annotated—it indicates the annotation costs.

There is a single outlier article that is as long as 3,797 tokens.

Note that to meet the input format required by BERT in single sequence text classification tasks, two additional special tokens, ‘[CLS]’ and ‘[SEP]’, have to be added [32, p. 4174].

References

Allaire, J. J., Francois, R., Ushey, K., Vandenbrouck, G., Geelnard, M., & Intel (2020). RcppParallel: Parallel programming tools for ‘Rcpp’ (Version 5.0.2). [R package]. CRAN. https://CRAN.R-project.org/package=RcppParallel.

ALMasri, M., Berrut, C., & Chevallet, J.-P. (2013). Wikipedia-based semantic query enrichment. In: Bennett, P. N., Gabrilovich, E., Kamps, J., & Karlgren, J. (Eds.), Proceedings of the sixth international workshop on exploiting semantic annotations in information retrieval (ESAIR ’13) (pp. 5–8). Association for Computing Machinery.

Arora, S., Ge, R., Halpern, Y., Mimno, D., Moitra, A., Sontag, D., Wu, Y., & Zhu, M. (2013). A practical algorithm for topic modeling with provable guarantees. In: Dasgupta, S., & McAllester, D. (Eds.), Proceedings of the 30th international conference on machine learning (pp. 280–288). Proceedings of Machine Learning Research.

Azad, H. K., & Deepak, A. (2019). Query expansion techniques for information retrieval: A survey. Information Processing and Management, 56(5), 1698–1735. https://doi.org/10.1016/j.ipm.2019.05.009

Azar, E. E. (2009). Conflict and Peace Data Bank (COPDAB), 1948-1978. [Data set]. Inter-University Consortium for Political and Social Research. https://doi.org/10.3886/ICPSR07767.v4.

Baden, C., Kligler-Vilenchik, N., & Yarchi, M. (2020). Hybrid content analysis: Toward a strategy for the theory-driven, computer-assisted classification of large text corpora. Communication Methods and Measures, 14(3), 165–183. https://doi.org/10.1080/19312458.2020.1803247

Baerg, N., & Lowe, W. (2020). A textual Taylor rule: Estimating central bank preferences combining topic and scaling methods. Political Science Research and Methods, 8(1), 106–122. https://doi.org/10.1017/psrm.2018.31

Bahdanau, D., Cho, K., & Bengio, Y. (2015). Neural machine translation by jointly learning to align and translate. In: Bengio, Y., & LeCun, Y. (Eds.), 3rd International conference on learning representations (ICLR 2015) (pp. 1–15).

Barberá, P. (2016). Less is more? How demographic sample weights can improve public opinion estimates based on twitter data. Manuscript. Retrieved June 4, 2021 from http://pablobarbera.com/static/less-is-more.pdf.

Barbieri, F., Anke, L. E., & Camacho-Collados, J. (2021). XLM-T: Multilingual language models in twitter for sentiment analysis and beyond. arXiv:2104.12250.

Bauer, P. C., Barberá, P., Ackermann, K., & Venetz, A. (2017). Is the left-right scale a valid measure of ideology? Political Behavior, 39(3), 553–583. https://doi.org/10.1007/s11109-016-9368-2

Baum, M., Cohen, D. K., & Zhukov, Y. M. (2018). Does rape culture predict rape? Evidence from U.S. newspapers, 2000–2013. Quarterly Journal of Political Science, 13(3), 263–289. https://doi.org/10.1561/100.00016124

Bäuml, K.-H. (2007). Making memories unavailable: The inhibitory power of retrieval. Journal of Psychology, 215(1), 4–11. https://doi.org/10.1027/0044-3409.215.1.4

Beauchamp, N. (2017). Predicting and interpolating state-level polls using Twitter textual data. American Journal of Political Science, 61(2), 490–503. https://doi.org/10.1111/ajps.12274

Bengio, Y., Ducharme, R., Vincent, P., & Janvin, C. (2003). A neural probabilistic language model. Journal of Machine Learning Research, 3, 1137–1155.

Benoit, K. (2020). Text as data: An overview. In: Curini, L., & Franzese, R. (Eds.), The SAGE handbook of research methods in political science and international relations (pp. 461–497). London. SAGE Publications. https://doi.org/10.4135/9781526486387.n29.

Benoit, K., Watanabe, K., Wang, H., Nulty, P., Obeng, A., Müller, S., & Matsuo, A. (2018). quanteda: An R package for the quantitative analysis of textual data. Journal of Open Source Software, 3(30), 774. https://doi.org/10.21105/joss.00774

Blei, D. M., & Lafferty, J. D. (2007). A correlated topic model of science. The Annals of Applied Statistics, 1(1), 17–35. https://doi.org/10.1214/07-AOAS114

Blei, D. M., Ng, A. Y., & Jordan, M. I. (2003). Latent Dirichlet allocation. Journal of Machine Learning Research, 3, 993–1022.

Blum, A., & Mitchell, T. (1998). Combining labeled and unlabeled data with co-training. In: Proceedings of the eleventh annual conference on computational learning theory (COLT’98) (pp. 92–100) New York, NY, USA. Association for Computing Machinery. https://doi.org/10.1145/279943.279962.

Bojanowski, P., Grave, E., Joulin, A., & Mikolov, T. (2017). Enriching word vectors with subword information. Transactions of the Association for Computational Linguistics, 5, 135–146. https://doi.org/10.1162/tacl_a_00051

Bommasani, R., Hudson, D. A., Adeli, E., Altman, R., Arora, S., von Arx, S., Bernstein, M. S., Bohg, J., Bosselut, A., Brunskill, E., Brynjolfsson, E., Buch, S., Card, D., Castellon, R., Chatterji, N. S., Chen, A. S., Creel, K., Davis, J. Q., Demszky, D., Donahue, C., Doumbouya, M., Durmus, E., Ermon, S., Etchemendy, J., Ethayarajh, K., Fei-Fei, L., Finn, C., Gale, T., Gillespie, L., Goel, K., Goodman, N. D., Grossman, S., Guha, N., Hashimoto, T., Henderson, P., Hewitt, J., Ho, D. E., Hong, J., Hsu, K., Huang, J., Icard, T., Jain, S., Jurafsky, D., Kalluri, P., Karamcheti, S., Keeling, G., Khani, F., Khattab, O., Koh, P. W., Krass, M. S., Krishna, R., & Kuditipudi, R., et al. (2021). On the opportunities and risks of foundation models. arXiv:2108.07258.

Boser, B. E., Guyon, I. M., & Vapnik, V. N. (1992). A training algorithm for optimal margin classifiers. In: Haussler, D. (Ed.), Proceedings of the fifth annual workshop on computational learning theory (COLT ’92) (pp. 144–152). Association for Computing Machinery.

Branco, P., Torgo, L., & Ribeiro, R. P. (2016). A survey of predictive modeling on imbalanced domains. ACM Computing Surveys, 49(2), 1–50. https://doi.org/10.1145/2907070

Brownlee, J. (2020). Cost-sensitive learning for imbalanced classification. Machine learning mastery. Retrieved June 9, 2021 from https://machinelearningmastery.com/cost-sensitive-learning-for-imbalanced-classification/.

Brownlee, J. (2021). Random oversampling and undersampling for imbalanced classification. Machine learning mastery. Retrieved June 8, 2021, from https://machinelearningmastery.com/random-oversampling-and-undersampling-for-imbalanced-classification/.

Burnap, P., Gibson, R., Sloan, L., Southern, R., & Williams, M. (2016). 140 characters to victory?: Using Twitter to predict the UK 2015 General Election. Electoral Studies, 41, 230–233. https://doi.org/10.1016/j.electstud.2015.11.017

Chawla, N. V., Bowyer, K. W., Hall, L. O., & Kegelmeyer, W. P. (2002). SMOTE: Synthetic minority over-sampling technique. Journal of Artificial Intelligence Research, 16(1), 321–357.

Conneau, A., Khandelwal, K., Goyal, N., Chaudhary, V., Wenzek, G., Guzmán, F., Grave, E., Ott, M., Zettlemoyer, L., & Stoyanov, V. (2020). Unsupervised cross-lingual representation learning at scale. In: Jurafsky, D., Chai, J., Schluter, N., & Tetreault, J. (Eds.), Proceedings of the 58th annual meeting of the association for computational linguistics (pp. 8440–8451). Stroudsburg, PA, USA. Association for Computational Linguistics. https://doi.org/10.18653/v1/2020.acl-main.747.

Cortes, C., & Vapnik, V. (1995). Support-vector networks. Machine Learning, 20(3), 273–297.

Dahl, D. B., Scott, D., Roosen, C., Magnusson, A., & Swinton, J. (2019). xtable: Export tables to LaTeX or HTML (version 1.8-4). [R package]. CRAN. https://cran.r-project.org/web/packages/xtable/index.html.

Devlin, J., Chang, M.-W., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of deep bidirectional Transformers for language understanding. In: Burstein, J., Doran, C., & Solorio, T. (Eds.), Proceedings of the 2019 conference of the north american chapter of the association for computational linguistics: human language technologies (pp. 4171–4186). Association for Computational Linguistics. https://doi.org/10.18653/v1/N19-1423.

Diaz, F., Mitra, B., & Craswell, N. (2016). Query expansion with locally-trained word embeddings. In: Erk, K. & Smith, N. A. (Eds.), Proceedings of the 54th annual meeting of the association for computational linguistics (pp. 367–377). Association for Computational Linguistics. https://doi.org/10.18653/v1/P16-1035.

Diermeier, D., Godbout, J.-F., Yu, B., & Kaufmann, S. (2011). Language and ideology in Congress. British Journal of Political Science, 42(1), 31–55. https://doi.org/10.1017/S0007123411000160

Dingwall, N., & Potts, C. (2018). Mittens: an extension of GloVe for learning domain-specialized representations. In: Proceedings of the 2018 conference of the North American chapter of the Association for Computational Linguistics: Human Language Technologies, Vol. 2 (Short Papers) (pp. 212–217). Association for Computational Linguistics. https://doi.org/10.18653/v1/N18-2034.

Dodge, J., Ilharco, G., Schwartz, R., Farhadi, A., Hajishirzi, H., & Smith, N. (2020). Fine-tuning pretrained language models: Weight initializations, data orders, and early stopping. arXiv:2002.06305v1 [cs.CL].

D’Orazio, V., Landis, S. T., Palmer, G., & Schrodt, P. (2014). Separating the wheat from the chaff: Applications of automated document classification using Support Vector Machines. Political Analysis, 22(2), 224–242. https://doi.org/10.1093/pan/mpt030

Dowle, M., & Srinivasan, A. (2020). data.table: Extension of ‘data.frame’ (Version 1.13.0). [R package]. CRAN. https://CRAN.R-project.org/package=data.table.

Durrell, M. (2008). Linguistic variable - linguistic variant. In: Ammon, U., Dittmar, N., Mattheier, K. J., & Trudgill, P. (Eds.), Sociolinguistics (pp. 195–200). De Gruyter Mouton. https://doi.org/10.1515/9783110141894.1.2.195.

Ein-Dor, L., Halfon, A., Gera, A., Shnarch, E., Dankin, L., Choshen, L., Danilevsky, M., Aharonov, R., Katz, Y., & Slonim, N. (2020). Active learning for BERT: An empirical study. In: Webber, B., Cohn, T., He, Y., & Liu, Y. (Eds.), Proceedings of the 2020 conference on empirical methods in natural language processing (EMNLP) (pp. 7949–7962). Association for Computational Linguistics. https://doi.org/10.18653/v1/2020.emnlp-main.638.

Elkan, C. (2001). The foundations of cost-sensitive learning. In: Proceedings of the 17th international joint conference on artificial intelligence (IJCAI ’01), (pp. 973–978). Morgan Kaufmann Publishers Inc.

Ennser-Jedenastik, L., & Meyer, T. M. (2018). The impact of party cues on manual coding of political texts. Political Science Research and Methods, 6(3), 625–633. https://doi.org/10.1017/psrm.2017.29

Erlich, A., Dantas, S. G., Bagozzi, B. E., Berliner, D., & Palmer-Rubin, B. (2021). Multi-label prediction for political text-as-data. Political analysis (pp. 1–18). https://doi.org/10.1017/pan.2021.15.

Ertekin, S., Huang, J., Bottou, L., & Giles, L. (2007). Learning on the border: Active learning in imbalanced data classification. In: Proceedings of the sixteenth ACM conference on information and knowledge management (CIKM ’07) (pp. 127–136). Association for Computing Machinery. https://doi.org/10.1145/1321440.1321461.

Eshima, S., Imai, K., & Sasaki, T. (2021). Keyword assisted topic models. arXiv:2004.05964v2 [cs.CL].

Firth, J. R. (1957). Studies in linguistic analysis. Blackwell, Publications of the Philological Society.

Fogel-Dror, Y., Shenhav, S. R., Sheafer, T., & Atteveldt, W. V. (2019). Role-based association of verbs, actions, and sentiments with entities in political discourse. Communication Methods and Measures, 13(2), 69–82. https://doi.org/10.1080/19312458.2018.1536973

Gessler, T. & Hunger, S. (2021). How the refugee crisis and radical right parties shape party competition on immigration. Political Science Research and Methods, 1–21. https://doi.org/10.1017/psrm.2021.64.

Google Colaboratory. (2020). Google colaboratory frequently asked questions. Google Colaboratory. Retrieved October 28, 2020, from https://research.google.com/colaboratory/faq.html.

Grimmer, J. (2013). Appropriators not position takers: The distorting effects of electoral incentives on Congressional representation. American Journal of Political Science, 57(3), 624–642. https://doi.org/10.1111/ajps.12000

Grün, B., & Hornik, K. (2011). topicmodels: An R package for fitting topic models. Journal of Statistical Software, 40(13), 1–30. https://doi.org/10.18637/jss.v040.i13

Hayes, A. F., & Krippendorff, K. (2007). Answering the call for a standard reliability measure for coding data. Communication Methods and Measures, 1(1), 77–89. https://doi.org/10.1080/19312450709336664

Howard, J., & Ruder, S. (2018). Universal language model fine-tuning for text classification. In: Gurevych, I., & Miyao, Y. (Eds.), Proceedings of the 56th annual meeting of the association for computational linguistics, pp. 328–339. Association for Computational Linguistics. https://doi.org/10.18653/v1/P18-1031.

Huang, Y., Giledereli, B., Köksal, A., Özgür, A., & Ozkirimli, E. (2021). Balancing methods for multi-label text classification with long-tailed class distribution. In Proceedings of the 2021 Conference on empirical methods in natural language processing (pp. 8153–8161). Association for Computational Linguistics. https://doi.org/10.18653/v1/2021.emnlp-main.643.

HuggingFace (2021). Dataset card for reuters21578. Retrieved May 19, 2021, from https://huggingface.co/datasets/reuters21578.

Hunter, J. D. (2007). Matplotlib: A 2D graphics environment. Computing in Science & Engineering, 9(3), 90–95. https://doi.org/10.1109/MCSE.2007.55

Jungherr, A., Schoen, H., & Jürgens, P. (2016). The mediation of politics through Twitter: An analysis of messages posted during the campaign for the German Federal Election 2013. Journal of Computer-Mediated Communication, 21(1), 50–68. https://doi.org/10.1111/jcc4.12143

Katagiri, A., & Min, E. (2019). The credibility of public and private signals: A document-based approach. American Political Science Review, 113(1), 156–172. https://doi.org/10.1017/S0003055418000643

Kentaro, W. (2020). gdown: Download a large file from Google Drive. [Python package]. GitHub. https://github.com/wkentaro/gdown.

Khan, J., & Lee, Y.-K. (2019). Lessa: A unified framework based on lexicons and semi-supervised learning approaches for textual sentiment classification. Applied Sciences, 9(24). https://doi.org/10.3390/app9245562.

King, G., Lam, P., & Roberts, M. E. (2017). Computer-assisted keyword and document set discovery from unstructured text. American Journal of Political Science, 61(4), 971–988. https://doi.org/10.1111/ajps.12291

King, G., Pan, J., & Roberts, M. E. (2013). How censorship in China allows government criticism but silences collective expression. American Political Science Review, 107(2), 326–343. https://doi.org/10.1017/S0003055413000014

King, K., Keohane, R. O., & Verba, S. (1994). Designing Social Inquiry: Scientific Inference in Qualitative Research. Princeton: Princeton University Press.

Kouw, W. M., & Loog, M. (2019). A review of domain adaptation without target labels. arXiv:1901.05335.

Krippendorff, K. (2013). Content analysis: An introduction to its methodology. Sage Publications, 3rd edition.

Kuzi, S., Shtok, A., & Kurland, O. (2016). Query expansion using word embeddings. In: Proceedings of the 25th ACM international on conference on information and knowledge management (CIKM ’16) (pp. 1929–1932). Association for Computing Machinery. https://doi.org/10.1145/2983323.2983876.

Lavrenko, V., & Croft, W. B. (2001). Relevance based language models. In: Proceedings of the 24th annual international ACM SIGIR conference on research and development in information retrieval (SIGIR ’01) (pp. 120–127). Association for Computing Machinery. https://doi.org/10.1145/383952.383972.

Lemaître, G., Nogueira, F., & Aridas, C. K. (2017). Imbalanced-learn: A Python toolbox to tackle the curse of imbalanced datasets in machine learning. Journal of Machine Learning Research, 18(17), 1–5.

Lewis, D. D. (1997). Reuters-21578 (Distribution 1.0). [Data set]. http://www.daviddlewis.com/resources/testcollections/reuters21578/.

Lewis, D. D., & Gale, W. A. (1994). A sequential algorithm for training text classifiers. In Croft, B. W. & van Rijsbergen, C. J. (Eds.), Proceedings of the 17th annual international ACM SIGIR conference on research and development in information retrieval (SIGIR ’94) (pp. 3–12). Springer.

Linder, F. (2017). Reducing bias in online text datasets: Query expansion and active learning for better data from keyword searches. SSRN. https://doi.org/10.2139/ssrn.3026393

Loshchilov, I., & Hutter, F. (2019). Decoupled weight decay regularization. In: 7th International conference on learning representations (ICLR 2019). OpenReview.net.

Maier, D., Waldherr, A., Miltner, P., Wiedemann, G., Niekler, A., Keinert, A., Pfetsch, B., Heyer, G., Reber, U., Häussler, T., Schmid-Petri, H., & Adam, S. (2018). Applying LDA topic modeling in communication research: Toward a valid and reliable methodology. Communication Methods and Measures, 12(2–3), 93–118. https://doi.org/10.1080/19312458.2018.1430754

Manning, C. D., Raghavan, P., & Schütze, H. (2008). Introduction to Information Retrieval. Cambridge University Press.

Manning, C. D., & Schütze, H. (1999). Foundations of statistical natural language processing. MIT Press, Cambridge. https://mitpress.mit.edu/books/foundations-statistical-natural-language-processing.

McKinney, W. (2010). Data structures for statistical computing in Python. In: van der Walt, S., & Millman, J. (Eds.), Proceedings of the 9th Python in science conference (SciPy 2010) (pp. 56–61). https://doi.org/10.25080/Majora-92bf1922-00a.

Michael Waskom and Team. (2020). Seaborn. [Python package]. Zenodo. https://zenodo.org/record/4379347.

Mikhaylov, S., Laver, M., & Benoit, K. R. (2012). Coder reliability and misclassification in the human coding of party manifestos. Political Analysis, 20(1), 78–91. https://doi.org/10.1093/pan/mpr047

Mikolov, T., Chen, K., Corrado, G., & Dean, J. (2013). Efficient estimation of word representations in vector space. arXiv:1301.3781v3 [cs.CL].

Mikolov, T., Yih, W.-T., & Zweig, G. (2013). Linguistic regularities in continuous space word representations. In: Vanderwende, L., Daumé III, H., & Kirchhoff, K. (Eds.), Proceedings of the 2013 conference of the North American chapter of the association for computational linguistics: Human language technologies (pp. 746–751). Association for Computational Linguistics.

Miller, B., Linder, F., & Mebane, W. R. (2020). Active learning approaches for labeling text: Review and assessment of the performance of active learning approaches. Political Analysis, 28(4), 532–551. https://doi.org/10.1017/pan.2020.4

Moore, W. H., & Siegel, D. A. (2013). A Mathematics Course for Political and Social Research. Princeton University Press.

Mosbach, M., Andriushchenko, M., & Klakow, D. (2021). On the stability of fine-tuning BERT: Misconceptions, explanations, and strong baselines. In: International conference on learning representations (ICLR 2021). OpenReview.net.

Muchlinski, D., Yang, X., Birch, S., Macdonald, C., & Ounis, I. (2021). We need to go deeper: Measuring electoral violence using Convolutional Neural Networks and social media. Political Science Research and Methods, 9(1), 122–139. https://doi.org/10.1017/psrm.2020.32

Münchener Digitalisierungszentrum der Bayerischen Staatsbibliothek (dbmdz). (2021). Model card for bert-base-german-uncased from dbmdz. Retrieved May 19, 2021, from https://huggingface.co/dbmdz/bert-base-german-uncased.

Neelakantan, A., Shankar, J., Passos, A., & McCallum, A. (2014). Efficient non-parametric estimation of multiple embeddings per word in vector space. In: Moschitti, A., Pang, B., & Daelemans, W. (Eds.), Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP) (pp. 1059–1069). Stroudsburg, PA, USA. Association for Computational Linguistics. https://doi.org/10.3115/v1/D14-1113.

Oliphant, T. E. (2006). A guide to NumPy. Trelgol Publishing USA.

Oller Moreno, S. (2021). facetscales: Facet grid with different scales per facet (Version 0.1.0.9000). [R package]. GitHub. https://github.com/zeehio/facetscales.

Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., Desmaison, A., Kopf, A., Yang, E., DeVito, Z., Raison, M., Tejani, A., Chilamkurthy, S., Steiner, B., Fang, L., Bai, J., & Chintala, S. (2019). PyTorch: An imperative style, high-performance deep learning library. In: H. Wallach, H. Larochelle, A. Beygelzimer, F. d’Alché Buc, E. Fox, & R. Garnett (Eds.), Advances in neural information processing systems 32 (pp. 8024–8035). Curran Associates Inc.

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., & Duchesnay, E. (2011). Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12, 2825–2830.

Pennington, J., Socher, R., & Manning, C. (2014). GloVe: Global vectors for word representation. In Moschitti, A., Pang, B., & Daelemans, W. (Eds.), Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP) (pp. 1532–1543). Association for Computational Linguistics. https://doi.org/10.3115/v1/D14-1162.

Phang, J., Févry, T., & Bowman, S. R. (2019). Sentence encoders on STILTs: Supplementary training on intermediate labeled-data tasks. arXiv:1811.01088v2 [cs.CL].

Pilehvar, M. T., & Camacho-Collados, J. (2020). Embeddings in natural language processing: theory and advances in vector representations of meaning. Morgan & Claypool Publishers. https://doi.org/10.2200/S01057ED1V01Y202009HLT047

Pilny, A., McAninch, K., Slone, A., & Moore, K. (2019). Using supervised machine learning in automated content analysis: An example using relational uncertainty. Communication Methods and Measures, 13(4), 287–304. https://doi.org/10.1080/19312458.2019.1650166

Puglisi, R., & Snyder, J. M. (2011). Newspaper coverage of political scandals. The Journal of Politics, 73(3), 931–950. https://doi.org/10.1017/s0022381611000569

Quinn, K. M., Monroe, B. L., Colaresi, M., Crespin, M. H., & Radev, D. R. (2010). How to analyze political attention with minimal assumptions and costs. American Journal of Political Science, 54(1), 209–228. https://doi.org/10.1111/j.1540-5907.2009.00427.x

R Core Team (2020). R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/.

Raschka, S. (2020). watermark. [Python package]. GitHub. https://github.com/rasbt/watermark.

Rauh, C., Bes, B. J., & Schoonvelde, M. (2020). Undermining, defusing or defending European integration? assessing public communication of European executives in times of EU politicisation. European Journal of Political Research, 59, 397–423. https://doi.org/10.1111/1475-6765.12350

Reda, A. A., Sinanoglu, S., & Abdalla, M. (2021). Mobilizing the masses: Measuring resource mobilization on twitter. Sociological Methods & Research, 1–40.

Reimers, N., & Gurevych, I. (2018). Why comparing single performance scores does not allow to draw conclusions about machine learning approaches. arXiv:1803.09578.

Richardson, L. (2020). Beautiful Soup 4. [Python library]. Crummy. https://www.crummy.com/software/BeautifulSoup/.

Roberts, M. E., Stewart, B. M., & Airoldi, E. M. (2016). A model of text for experimentation in the social sciences. Journal of the American Statistical Association, 111(515), 988–1003. https://doi.org/10.1080/01621459.2016.1141684

Roberts, M. E., Stewart, B. M., & Tingley, D. (2016). Navigating the local modes of big data: The case of topic models. In: Alvarez, R. M. (Ed.), Computational social science: discovery and prediction (pp. 51–97). Cambridge University Press.

Roberts, M. E., Stewart, B. M., & Tingley, D. (2019). stm: An R package for structural topic models. Journal of Statistical Software, 91(2), 1–40. https://doi.org/10.18637/jss.v091.i02.

Roberts, M. E., Stewart, B. M., Tingley, D., Lucas, C., Luis, J. L., Gadarian, S. K., Albertson, B., & Rand, D. G. (2014). Structural Topic Models for open-ended survey responses. American Journal of Political Science, 58(4), 1064–1082. https://doi.org/10.1111/ajps.12103

Rodriguez, P. L., & Spirling, A. (2022). Word embeddings: What works, what doesn’t, and how to tell the difference for applied research. The Journal of Politics, 84(1), 101–115. https://doi.org/10.1086/715162

Ruder, S. (2019). Neural transfer learning for natural language processing. PhD thesis, National University of Ireland, Galway.

Ruder, S. (2020). NLP-Progress. Retrieved June 21, 2021, from https://nlpprogress.com/english/text_classification.html.

Sap, M., Gabriel, S., Qin, L., Jurafsky, D., Smith, N. A., & Choi, Y. (2020). Social bias frames: Reasoning about social and power implications of language. In: Jurafsky, D., Chai, J., Schluter, N., & Tetreault, J. (Eds.), Proceedings of the 58th annual meeting of the association for computational linguistics (pp. 5477–5490). Association for Computational Linguistics. https://doi.org/10.18653/v1/2020.acl-main.486.

Schulze, P., Wiegrebe, S., Thurner, P. W., Heumann, C., Aßenmacher, M., & Wankmüller, S. (2021). Exploring topic-metadata relationships with the STM: A Bayesian approach. arXiv:2104.02496v1 [cs.CL].

Schütze, H. (1998). Automatic word sense discrimination. Computational Linguistics, 24(1), 97–123. https://aclanthology.org/J98-1004.

scikit-learn Developers. (2020). 1.4. Support Vector Machines. Retrieved November 23, 2020, from https://scikit-learn.org/stable/modules/svm.html.

scikit-learn Developers (2020). RBF SVM Parameters. Retrieved November 23, 2020, from https://scikit-learn.org/stable/auto_examples/svm/plot_rbf_parameters.html.

Sebők, M., & Kacsuk, Z. (2020). The multiclass classification of newspaper articles with machine learning: The hybrid binary snowball approach. Political Analysis, 1–14. https://doi.org/10.1017/pan.2020.27.

Selivanov, D., Bickel, M., & Wang, Q. (2020). text2vec: Modern text mining framework for R. [R package]. CRAN. https://CRAN.R-project.org/package=text2vec.

Settles, B. (2010). Active learning literature survey. Computer Sciences Technical Report 1648. University of Wisconsin–Madison. http://burrsettles.com/pub/settles.activelearning.pdf.

Silva, A., & Mendoza, M. (2020). Improving query expansion strategies with word embeddings. In: Proceedings of the ACM symposium on document engineering 2020 (DocEng ’20) (pp. 1–4). Association for Computing Machinery. https://doi.org/10.1145/3395027.3419601.

Socher, R., Perelygin, A., Wu, J., Chuang, J., Manning, C. D., Ng, A., & Potts, C. (2013). Recursive deep models for semantic compositionality over a sentiment treebank. In Yarowsky, D., Baldwin, T., Korhonen, A., Livescu, K., & Bethard, S. (Eds.), Proceedings of the 2013 conference on empirical methods in natural language processing (pp. 1631–1642). Association for Computational Linguistics.

Soetaert, K. (2019). plot3D: Plotting multi-dimensional data (Version 1.3). [R package]. CRAN. https://CRAN.R-project.org/package=plot3D.

Song, H., Tolochko, P., Eberl, J.-M., Eisele, O., Greussing, E., Heidenreich, T., Lind, F., Galyga, S., & Boomgaarden, H. G. (2020). In validations we trust? The impact of imperfect human annotations as a gold standard on the quality of validation of automated content analysis. Political Communication, 37(4), 550–572. https://doi.org/10.1080/10584609.2020.1723752

Stier, S., Bleier, A., Bonart, M., Mörsheim, F., Bohlouli, M., Nizhegorodov, M., Posch, L., Maier, J., Rothmund, T., & Staab, S. (2018). Systematically Monitoring Social Media: the Case of the German Federal Election 2017. GESIS - Leibniz-Institut für Sozialwissenschaften. https://doi.org/10.21241/ssoar.56149.

Sun, C., Qiu, X., Xu, Y., & Huang, X. (2019). How to fine-tune BERT for text classification? arXiv:1905.05583v3 [cs.CL].

Tong, S., & Koller, D. (2002). Support Vector Machine active learning with applications to text classification. Journal of Machine Learning Research, 2, 45–66. https://doi.org/10.1162/153244302760185243

Turney, P. D., & Pantel, P. (2010). From frequency to meaning: Vector space models of semantics. Journal of Artificial Intelligence Research, 37, 141–188. https://doi.org/10.1613/jair.2934

Ushey, K., Allaire, J., Wickham, H., & Ritchie, G. (2020). rstudioapi: Safely Access the RStudio API (Version 0.11). [R package]. CRAN. https://CRAN.R-project.org/package=rstudioapi.

Uyheng, J., & Carley, K. M. (2020). Bots and online hate during the COVID-19 pandemic: Case studies in the United States and the Philippines. Journal of Computational Social Science, 3, 445–468. https://doi.org/10.1007/s42001-020-00087-4

van Atteveldt, W., Sheafer, T., Shenhav, S. R., & Fogel-Dror, Y. (2017). Clause analysis: Using syntactic information to automatically extract source, subject, and predicate from texts with an application to the 2008–2009 Gaza War. Political Analysis, 25(2), 207–222. https://doi.org/10.1017/pan.2016.12

van Rijsbergen, C. J. (2000). Information retrieval—Session 1: Introduction to information retrieval. [Lecture notes]. Universität Duisburg Essen. https://www.is.inf.uni-due.de/courses/dortmund/lectures/ir_ws00-01/folien/keith_intro.ps.

Van Rossum, G., & Drake, F. L. (2009). Python 3 Reference Manual. CreateSpace.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I. (2017). Attention is all you need. In I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, & R. Garnett (Eds.), Advances in Neural Information Processing Systems 30 (pp. 5998–6008). Curran Associates Inc.

Wang, H. (2020). Logistic regression for massive data with rare events. In Daumé III, H., & Singh, A. (Eds.), Proceedings of the 37th international conference on machine learning (pp. 9829–9836). Proceedings of Machine Learning Research.

Wang, Y.-S. & Chang, Y. (2022). Toxicity detection with generative prompt-based inference. arXiv:2205.12390.

Wankmüller, S. (2021). Neural transfer learning with Transformers for social science text analysis. arXiv:2102.02111v1 [cs.CL].

Watanabe, K. (2021). Latent Semantic Scaling: A semisupervised text analysis technique for new domains and languages. Communication Methods and Measures, 15(2), 81–102. https://doi.org/10.1080/19312458.2020.1832976

Wickham, H. (2016). ggplot2: Elegant graphics for data analysis. Springer.

Wickham, H. (2019). stringr: Simple, consistent wrappers for common string operations (Version 1.4.0). [R package]. CRAN. https://CRAN.R-project.org/package=stringrCRAN.

Wickham, H., François, R., Henry, L., & Müller, K. (2021). dplyr: A grammar of data manipulation (version 1.0.6). [R package]. CRAN. https://CRAN.R-project.org/package=dplyr.

Wild, F. (2020). lsa: Latent semantic analysis (Version 0.73.2). [R package]. CRAN. https://CRAN.R-project.org/package=lsa.

Wilke, C. O. (2021). ggridges: Ridgeline plots in ’ggplot2’ (version 0.5.3). [R package]. CRAN. https://CRAN.R-project.org/package=ggridgesCRAN.

Wolf, T., Debut, L., Sanh, V., Chaumond, J., Delangue, C., Moi, A., Cistac, P., Rault, T., Louf, R., Funtowicz, M., Davison, J., Shleifer, S., von Platen, P., Ma, C., Jernite, Y., Plu, J., Xu, C., Scao, T. L., Gugger, S., Drame, M., Lhoest, Q., & Rush, A. M. (2020). HuggingFace’s transformers: state-of-the-art natural language processing. arXiv:1910.03771v5 [cs.CL].

Yarowsky, D. (1995). Unsupervised word sense disambiguation rivaling supervised methods. In: 33rd Annual meeting of the association for computational linguistics (pp. 189–196). Cambridge, Massachusetts, USA. Association for Computational Linguistics. https://doi.org/10.3115/981658.981684.

Zhang, H., & Pan, J. (2019). CASM: A deep-learning approach for identifying collective action events with text and image data from social media. Sociological Methodology, 49(1), 1–57. https://doi.org/10.1177/0081175019860244

Zhao, H., Phung, D., Huynh, V., Jin, Y., Du, L., & Buntine, W. (2021). Topic modelling meets deep neural networks: A survey. In: Zhou, Z.-H. (Ed.), Proceedings of the thirtieth international joint conference on artificial intelligence, IJCAI-21. (pp. 4713–4720). International Joint Conferences on Artificial Intelligence Organization. https://doi.org/10.24963/ijcai.2021/638.

Zhou, Z.-H., & Li, M. (2005). Tri-training: Exploiting unlabeled data using three classifiers. IEEE Transactions on Knowledge and Data Engineering, 17(11), 1529–1541. https://doi.org/10.1109/TKDE.2005.186.

Zhu, Y., Kiros, R., Zemel, R., Salakhutdinov, R., Urtasun, R., Torralba, A., & Fidler, S. (2015). Aligning books and movies: Towards story-like visual explanations by watching movies and reading books. In: Proceedings of the 2015 IEEE international conference on computer vision (ICCV ’15) (pp. 19–27). IEEE Computer Society. https://doi.org/10.1109/ICCV.2015.11.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: Imbalanced classification, precision, recall

Imbalanced classification problems are common in information retrieval tasks [74, p. 155]. They are characterized by an imbalance in the proportions made up by one vs. the other category. When retrieving relevant documents from large corpora typically only a small fraction of documents falls into the positive relevant category, whereas an overwhelming majority of documents is part of the negative irrelevant category [74, p. 155].

When evaluating the performance of a method in a situation of imbalance, the accuracy measure that gives the share of correctly classified documents is not adequate [74, p. 155]. The reason is that a method that would assign all documents to the negative irrelevant category would get a very high accuracy value [74, p. 155] Thus, evaluation metrics that allow for a refined view, such as precision and recall, should be employed [74, p. 155]. Precision and recall are defined as follows:

whereby TP, FP and FN are defined in Table 4. Precision and recall are in the range [0, 1]. However, if none of the documents is predicted to be positive, then \(TP + FP = 0\) and precision is undefined. If there are no truly positive documents in the corpus, then \(TP + FN = 0\) and recall is undefined. The higher precision and recall, the better.

Precision exclusively takes into account all documents that have been assigned to the positive relevant category by the classification method and informs about the share of truly positive documents among all documents that are predicted to fall into the positive category. Recall, on the other hand, exclusively focuses on the truly relevant documents and informs about the share of documents that has been identified as relevant among all truly relevant documents.

There is a trade-off between precision and recall [74, p. 156]. A keyword list comprising many terms or a classification algorithm that is lenient in considering documents to be relevant will likely identify many of the truly relevant documents (high recall). Yet, as the hurdle for being considered relevant is low, they will also classify many truly irrelevant documents into the relevant category (low precision). A keyword list consisting of few specific terms or a classification algorithm with a high threshold for assigning documents to the relevant class will likely miss out many relevant instances (low recall), but among those considered relevant many are likely to indeed be relevant (high precision).

The following trade-off between precision and recall is incorporated in the \(F_{\omega }\)-measure, which is the weighted harmonic mean of precision and recall [74, p. 156]:

The \(F_{\omega }\)-measure also is in the range [0, 1]. \(\omega\) is a real-valued factor balancing the importance of precision vs. recall [74, p. 156]. For \(\omega > 1\) recall is considered more important than precision and for \(\omega < 1\) precision is weighted more than recall [74, p. 156]. A very common choice for \(\omega\) is 1 [74, p. 156]. In this case, the \(F_1\)-measure (or synonymously: \(F_1\)-Score) is the harmonic mean between precision and recall [74, p. 156].

Appendix 2: Social science studies applying keyword lists

See Table 5.

Appendix 3: Topic model-based classification rule building procedure

See Fig. 10.

Building topic model-based classification rules. Classification rules can be built from any topic model that on the basis of a corpus comprising N documents estimates a latent topic structure characterized by two matrices: \(N \times K\) document-topic matrix \(\varvec{\Theta }\) and \(K \times U\) topic-term matrix \(\varvec{B}\). \(\beta _{ku}\) is the estimated probability for the uth term to occur given topic k. \(\theta _{ik}\) is the estimated share assigned to topic k in the ith document. The topic model-based classification rule procedure proceeds as follows: Step 1: Researchers inspect matrix \(\varvec{B}\), determine which topics are relevant and create \(K \times 1\) relevance matrix \(\varvec{C}\). Step 2: Matrix multiplication of \(\varvec{\Theta }\) with \(\varvec{C}\) yields the resulting vector \(\varvec{r}\). Step 3 (not shown): Documents with \(r_i >=\) threshold \(\xi \in [0,1]\) are retrieved

Appendix 4: Details on training local glove embeddings

In training, a symmetric context window size of six tokens on either side of the target feature as well as a decreasing weighting function is used; such that a token that is q tokens away from the target feature counts 1/q to the co-occurrence count [91]. After training, following the approach in Pennington et al. [91], the word embedding matrix and the context word embedding matrix are summed to yield the finally applied embedding matrix. Note that in their analysis of a large spectrum of settings for training word embeddings, Rodriguez and Spirling [107] found that the here used popular setting of using 300-dimensional embeddings with a symmetric window size of six tokens tends to be a setting that yields good performances whilst at the same time being cost-effective regarding the embedding dimensions and the context window size.

Appendix 5: Details on estimating CTMs

The CTM is estimated via the stm R-package [105] that originally is designed to estimate the Structural Topic Model (STM) [103]. The STM extends the LDA by allowing document-level variables to affect the topic proportions within a document (topical prevalence) or to affect the term probabilities of a topic (topical content) [103, p. 989]. If no document-level variables are specified (as is done here), the STM reduces to the CTM [103, p. 991]. In estimation, the approximate variational expectation-maximization (EM) algorithm as described in Roberts et al. [103, p. 992–993] is employed. This estimation procedure tends to be faster and tends to produce higher held-out log-likelihood values than the original variational approximation algorithm for the CTM presented in Blei and Lafferty [18] [105, p. 29–30]. The model is initialized via spectral initialization [3; 104, p. 82–85; 105, p. 11]. The model is considered to have converged if the relative change in the approximate lower bound on the marginal likelihood from one step to the next is smaller than 1e-04 [103, p. 992; 105, p. 10, 28].

Appendix 6: Details on text preprocessing and training settings for SVM and BERT

An SVM operates on a document-feature matrix, \(\varvec{X}\). In a document-feature matrix, each document is represented as a feature vector of length U: \(\varvec{x}_i = (x_{i1}, \dots , x_{iu}, \dots , x_{iU})\). The information contained in the vector’s entries, \(x_{iu}\), typically is based on the frequency with which each of the U textual features occurs in the ith document [125, p. 147]. Given the document feature vectors, \(\{\varvec{x}_i\}_{i=1}^N\), and corresponding binary class labels, \(\{y_i\}_{i=1}^N\), whereby \(y_i \in \{-1, +1\}\), an SVM tries to find a hyperplane, that separates the training documents as well as possible into the two classes [30].

To create the required vector representation for each document, here the following text preprocessing steps are applied: The documents are tokenized into unigram tokens. Punctuation, symbols, numbers, and URLs are removed. The tokens are lowercased and stemmed. Subsequently terms whose mean tf-idf value across all documents in which they occur belongs to the lowest 0.1\(\%\) (Twitter, SBIC) or 0.2\(\%\) (Reuters) of mean tf-idf values of all terms in the corpus are discarded. Also terms that occur in only one (Twitter) or two (SBIC, Reuters) documents are removed. Finally, a Boolean weighting scheme, in which only the absence (0) vs. presence (1) of a term in a corpus is recorded, is applied on the document-feature matrix. To determine the hyperparameter values for the SVMs, hyperparameter tuning via a grid search across sets of hyperparameter values is conducted in a stratified 5-fold cross-validation setting on one fold of the training data. A linear kernel and a Radial Basis Function (RBF) kernel are tried. Moreover, for the inverse regularization parameter \(\mathcal {C}\), that governs the trade-off between the slack variables and the training error, the values \(\{0.1, 1.0, 10.0, 100.0\}\) (linear) and \(\{0.1, 1.0, 10.0\}\) (RBF) are inspected. Additionally, for the RBF’s parameter \(\gamma\), that governs the training example’s radius of influence, the values \(\{0.001, 0.01, 0.1\}\) are evaluated.Footnote 27 The folds are stratified such that the share of instances falling into the relevant minority class is the same across all folds. In each cross-validation iteration, in the folds used for training, random oversampling of the minority class is conducted such that the number of relevant minority class examples increases by a factor of 5. Among the inspected hyperparameter settings, the setting that achieves the highest \(F_1\)-Score regarding the prediction of the relevant minority class and does not exhibit excessive overfitting is selected.

There are two limiting factors when applying BERT: First, due to memory limitations, BERT cannot process text sequences that are longer than 512 tokens [32, p. 4183]. This poses no problem for the Twitter corpus that has a maximum sequence length of 73 tokens. In the Reuters news corpus, however, whilst the largest share of articles is shorter than 512 tokens, there is a long tail of longer articles comprising up to around 1500 tokens.Footnote 28 Following the procedure by Sun et al. [123], Reuters news stories that exceed 512 tokens are reduced to the maximum accepted token length by keeping the first 128 and keeping the last 382 tokens whilst discarding the remaining tokens in the middle.Footnote 29 The maximum sequence length recorded for the SBIC is 354 tokens. In order to reduce the required memory capacities, the few posts that are longer than 250 tokens are shortened to 250 tokens by keeping the first 100 and the last 150 tokens.

The second limiting factor is that the prediction performance achieved by BERT after fine-tuning on the target task can vary considerably—even if the same training data set is used for fine-tuning and only the random seeds, that initialize the optimization process and set the order of the training data, differ ([32], p. 4176; [92], p. 5–7; [36]]. Especially when the training data set is small (e.g. smaller than 10,000 or 5000 documents), fine-tuning with BERT has been observed to yield unstable prediction performances [32, p. 4176; 92, p. 5–7]. Recently, Mosbach et al. [83] established that the variance in the prediction performance of BERT models, that have been fine-tuned on the same training data set with different seeds, to a large extent are likely due to vanishing gradients in the fine-tuning optimization process. Mosbach et al. [83, p. 5] also note that it is not that small training data sets per se yield unstable performances but rather that if small data sets are fine-tuned for the same number of epochs than larger data sets (typically for 3 epochs), then this implies that smaller data sets are fine-tuned for a substantively smaller number of training iterations—which in turn negatively affects the learning rate schedule and the generalization ability [83, p. 4–5]. Finally, Mosbach et al. [83, p. 2, 8–9] show that fine-tuning with a small learning rate (in the paper: 2e−05), with warmup, bias correction, and a large number of epochs (in the paper: 20) not only tends to increase prediction performances but also significantly decreases the performance instability in fine-tuning. Here, the advice of Mosbach et al. [83] is followed. For BERT, the AdamW algorithm [72] with bias correction, a warmup period lasting 10\(\%\) of the training steps, and a global learning rate of 2e− 05 is used. Training is conducted for 20 epochs. Dropout is set to 0.1. The batch size is set to 16.

Appendix 7: Most predictive terms

Note on Tables 6, 7 and 8: The keyword lists comprising empirically highly predictive terms are not only applied on the corpora to evaluate the retrieval performance of keyword lists, but also form the basis for query expansion (see Sect. 4.2.2). The query expansion technique makes use of GloVe word embeddings [91] trained on the local corpora at hand and also makes use of externally obtained GloVe word embeddings trained on large global corpora. In the case of the locally trained word embeddings there is a learned word embedding for each predictive term. Thus, the set of extracted highly predictive terms can be directly used as starting terms for query expansion. In the case of the globally pretrained word embeddings, however, not all of the highly predictive terms have a corresponding global word embedding. Hence, for the globally pretrained embeddings the 50 most predictive terms for which a globally pretrained word embedding is available are extracted. If a predictive term has no corresponding global embedding, the set of extracted predictive terms is enlarged with the next most predictive term until there are 50 extracted terms. In consequence, below for each corpus two lists of the most predictive features are shown.

Appendix 8: Recall and precision of keyword lists and query expansion

Recall and precision for retrieving relevant documents with keyword lists and global query expansion. This plot shows recall and precision scores resulting from the application of the keyword lists of 10 highly predictive terms as well as the evolution of the recall and precision scores across the query expansion procedure based on globally trained GloVe embeddings. For each of the sampled 100 keyword lists that then are expanded, one light blue line is plotted. The thick dark blue line gives the mean over the 100 lists

Recall and Precision for Retrieving Relevant Documents with Keyword Lists and Local Query Expansion. This plot shows recall and precision scores resulting from the application of the keyword lists of 10 highly predictive terms as well as the evolution of the recall and precision scores across the query expansion procedure based on locally trained GloVe embeddings. For each of the sampled 100 keyword lists that then are expanded, one light blue line is plotted. The thick dark blue line gives the mean over the 100 lists. Note that the strong increase in recall for some keyword lists in the Twitter data set is due to the fact that the textual feature with the highest cosine similarity to the highly predictive initial term ‘flüchtlinge’ (translation: ‘refugees’) is the colon ‘:’

Appendix 9: Mittens: F1-score, recall, and precision

See Fig. 13.

Retrieving relevant documents with query expansion based on Mittens embeddings. This plot shows the \(F_1\)-Scores, as well as the recall and precision values, resulting from the application of the keyword lists of 10 highly predictive terms as well as the evolution of the \(F_1\)-Scores, recall values, and precision values across the query expansion procedure based on Mittens embeddings. For each of the sampled 100 keyword lists that then are expanded, one light blue line is plotted. The thick dark blue line gives the mean over the 100 lists

Appendix 10: Terms with the highest probabilities and the highest FREX-Scores

Appendix 11: Recall and precision of topic model-based classification rules

See Fig. 14.

Recall and precision of topic model-based classification rules. The height of a bar indicates the recall values (left column) and precision values (right column) resulting from the application of a topic model-based classification rule. For each number of topics \(K \in \{5, 15, 30, 50, 70, 90, 110\}\), those two combinations out of all explored combinations regarding the question how many and which topics are considered relevant are shown that reach the highest \(F_1\)-Score for the given topic number. For each combination, recall and precision values for each threshold value \(\xi \in \{0.1, 0.3, 0.5, 0.7 \}\) is given. Classification rules that assign none of the documents to the positive class have a recall value of 0 and an undefined value for precision and the \(F_1\)-Score. Undefined values here are visualized by the value 0

Appendix 12: Recall and precision of active and passive supervised learning

See Fig. 15.

Recall and Precision of Active and Passive Supervised Learning. Recall values and precision values achieved on the set aside test set as the number of unique labeled documents in set \(\mathcal {I}\) increases from 250 to 1000. Passive supervised learning results are visualized by blue lines, active learning results are given in red. For each of the 10 (Twitter, SBIC) or 5 (Reuters) conducted iterations, one light colored line is plotted. The thick and dark blue and red lines give the means across the iterations. If a trained model assigns none of the documents to the positive relevant class, then it has a recall value of 0 and an undefined value for precision and the \(F_1\)-Score. Undefined values here are visualized by the value 0

Appendix 13: Comparing BERT and SVM for active and passive supervised learning

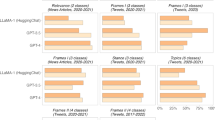

Comparing BERT and SVM for Active and Passive Supervised Learning I. \(F_1\)-Scores achieved on the set aside test set as the number of unique labeled documents in set \(\mathcal {I}\) increases from 250 to 1000. Active learning results are visualized in the left panels, passive learning results are given in the right panels. \(F_1\)-Scores of the SVMs are visualized by blue lines, BERT performances are given in red. For each of the 10 (Twitter, SBIC) or 5 (Reuters) conducted iterations, one light colored line is plotted. The thick and dark blue and red lines give the mean across the iterations. If a trained model assigns none of the documents to the positive relevant class, then it has a recall value of 0 and an undefined value for precision and the \(F_1\)-Score. Undefined values here are visualized by the value 0

Comparing BERT and SVM for active and passive supervised learning II. Distribution of the differences in the \(F_1\)-Scores achieved on the set aside test set as the number of unique labeled documents in set \(\mathcal {I}\) increases from one training step to the next by a batch of 50 documents. Boxplots visualizing the distribution of differences in \(F_1\)-Scores of the SVMs are presented in blue. \(F_1\)-Score differences for BERT are given in red. The mean is visualized by a star dot. The value of the mean as well as the standard deviation (SD) are given below the respective boxplots

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wankmüller, S. A comparison of approaches for imbalanced classification problems in the context of retrieving relevant documents for an analysis. J Comput Soc Sc 6, 91–163 (2023). https://doi.org/10.1007/s42001-022-00191-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s42001-022-00191-7