Abstract

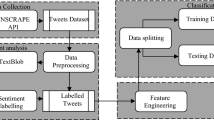

In this work, we compare the performance of a machine learning framework based on a support vector machine (SVM) with fastText embeddings against a deep learning framework that fine-tunes Large Language Models (LLMs) such as Bidirectional Encoder Representations from Transformers (BERT), DistilBERT, and Twitter roBERTa Base. The goal is to automate the classification of text data in order to analyze the country image of Mexico in selected data sources, where the country image is described using 18 different classes grounded in International Relations theory. Each model is trained on a data set of tweets from selected relevant Twitter accounts and news headlines from The New York Times, all of which were first classified manually. However, the data set suffers from imbalanced classes and a small number of samples. We therefore explore two text augmentation strategies: a gradual augmentation of the eight least represented classes and a uniform augmentation of the whole data set. We also study the impact of hashtags, user names, stopwords, and emojis as additional text features for the SVM model. The experiments indicate that the SVM reacts negatively to all the data augmentation proposals, while the deep learning framework shows small benefits from them. The best result, a weighted-average \(F_1\) score of 52.92%, is obtained by fine-tuning the Twitter roBERTa Base model without data augmentation.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

The authors would like to thank Universidad Iberoamericana Ciudad de México and Instituto de Investigación Aplicada y Tecnología for their support and for providing access to the Research Laboratory in Advanced Computer Technologies (LITAC).

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the “Instituto de Investigación Aplicada y Tecnología” and the “Universidad Iberoamericana Ciudad de México”.

Author information

Contributions

LNZ-M: writing original draft, investigation, methodology, programming, data visualization. JAG-O: writing review and editing, methodology, supervision. JEQ-I: writing review and editing, methodology, supervision. CVR: writing review and editing, investigation, supervision.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Authors’ Google Scholar URLs: Luis N. Zúñiga-Morales, Jorge Ángel González-Ordiano, J. Emilio Quiroz-Ibarra, César Villanueva Rivas.

Appendix: Classic framework train results

This appendix shows the results obtained during the training step of the Classic Framework in the data augmentation experiments. As shown in Table 4, the training results indicate overfitting in the SVM model as the amount of synthetic data increases. The worst case is observed in the AMG All experiment, where all training metrics are above 98% while the evaluation results remain below 46%, as shown in Table 2. This observation further highlights the negative impact of the proposed data augmentation scheme on the SVM model.
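The overfitting check described in this appendix can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names `weighted_f1` and `overfitting_gap` and the toy labels are assumptions introduced here for illustration. The sketch computes a weighted-average \(F_1\) score (each class's \(F_1\) weighted by its share of the true labels) and the difference between a training score and an evaluation score.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Weighted-average F1: per-class F1 weighted by class support in y_true.

    Hypothetical helper for illustration; not taken from the article.
    """
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for label, n_label in support.items():
        # True positives: samples of this class predicted as this class.
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        # False positives: samples of other classes predicted as this class.
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        # False negatives: samples of this class predicted as something else.
        fn = n_label - tp
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0
        score += (n_label / total) * f1
    return score


def overfitting_gap(train_score, eval_score):
    """Difference between training and evaluation scores (same metric, same scale)."""
    return train_score - eval_score


if __name__ == "__main__":
    # Toy labels, invented purely for illustration.
    y_true = ["a", "a", "a", "b"]
    y_pred = ["a", "a", "b", "b"]
    print(weighted_f1(y_true, y_pred))
    # Bounds quoted in the text: training above 0.98, evaluation below 0.46.
    print(overfitting_gap(0.98, 0.46))
```

Using the bounds quoted above (training metrics above 98%, evaluation results below 46%), the gap in the worst case is at least 52 percentage points, which is the kind of discrepancy that suggests the SVM is memorizing the augmented training data instead of generalizing.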

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zúñiga-Morales, L.N., González-Ordiano, J.Á., Quiroz-Ibarra, J.E. et al. Machine learning framework for country image analysis. J Comput Soc Sc 7, 523–547 (2024). https://doi.org/10.1007/s42001-023-00246-3

DOI: https://doi.org/10.1007/s42001-023-00246-3