Abstract

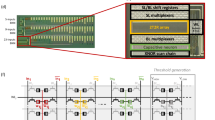

Resistive random access memory (ReRAM) has been shown to efficiently perform in-situ matrix-vector computations in convolutional neural network (CNN) processing. These computations are often conducted on multi-level cells (MLCs), which have limited precision and are therefore highly vulnerable to noise. The binarized neural network (BNN) is a hardware-friendly model that can dramatically reduce computation and storage overheads. However, XNOR, the key operation in BNNs, cannot be computed directly in-situ in ReRAM because of its nonlinear behavior. To enable true in-situ processing of BNNs in ReRAM, we modify the BNN algorithm so that XNOR, POPCOUNT, and POOL can be computed directly on ReRAM cells. We also propose the complementary resistive cell (CRC) design to conduct XNOR operations efficiently, and we optimize the pipeline design with decoupled buffer and computation stages. Our results show that our scheme, named ReBNN, improves system performance by \(25.36\times\) and energy efficiency by \(4.26\times\) compared with a conventional ReRAM-based accelerator, and achieves higher throughput than state-of-the-art BNN accelerators. The correctness of the modified algorithm is also validated.
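
For readers unfamiliar with why XNOR and POPCOUNT are central to BNNs, the following minimal Python sketch illustrates the standard identity: a dot product of two \(\{-1,+1\}\) vectors, stored as bits, equals \(2 \cdot \text{POPCOUNT}(\text{XNOR}) - n\). It also shows that XNOR of two bits can be written as a sum of products over complementary bit pairs, which is a general arithmetic fact and is not claimed here to be the CRC circuit or the modified algorithm of the paper. All function names are hypothetical.

```python
def binarized_dot(x_bits, w_bits):
    """Dot product of two {-1,+1} vectors that are encoded as {0,1} bits."""
    n = len(x_bits)
    # XNOR is 1 exactly where the two bits agree.
    xnor = [1 - (x ^ w) for x, w in zip(x_bits, w_bits)]
    popcount = sum(xnor)  # number of agreeing positions
    # Each agreement contributes +1, each disagreement contributes -1.
    return 2 * popcount - n


def reference_dot(x_bits, w_bits):
    """Same dot product computed directly on the {-1,+1} values."""
    return sum((2 * x - 1) * (2 * w - 1) for x, w in zip(x_bits, w_bits))


def complementary_popcount(x_bits, w_bits):
    """POPCOUNT of XNOR written as a plain multiply-accumulate.

    Assumption for illustration: each bit is paired with its complement,
    so XNOR(x, w) = x*w + (1 - x)*(1 - w) becomes a linear sum of products.
    """
    return sum(x * w + (1 - x) * (1 - w) for x, w in zip(x_bits, w_bits))


x = [1, 0, 1, 1]
w = [1, 1, 0, 1]
print(binarized_dot(x, w), reference_dot(x, w), complementary_popcount(x, w))
```

The third function hints at why a complementary encoding is attractive for crossbar-style hardware: once both a bit and its complement are available, the nonlinear XNOR reduces to the same multiply-accumulate form as an ordinary matrix-vector product.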

Acknowledgements

This work was supported in part by NSF 1910299, 1717657, DOE DE-SC0018064, AFRL FA8750-18-2-0057, NSF CCF-1657333, CCF-1717754, CNS-1717984, CCF-1750656, and CCF-1919289.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

About this article

Cite this article

Song, L., Wu, Y., Qian, X. et al. ReBNN: in-situ acceleration of binarized neural networks in ReRAM using complementary resistive cell. CCF Trans. HPC 1, 196–208 (2019). https://doi.org/10.1007/s42514-019-00014-8

DOI: https://doi.org/10.1007/s42514-019-00014-8