Abstract

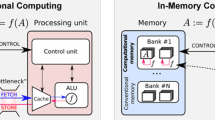

In recent years, many studies have proposed computing-in-memory architectures to address the von Neumann bottleneck. Most of these architectures can perform only a limited set of application-specific logic functions. A scheme that supports general-purpose computing, however, is more significant for the full realization of in-memory computing. This paper proposes GCIM, a reconfigurable computing-in-memory architecture for general-purpose computing based on STT-MRAM. GCIM can significantly reduce the energy consumed by data transfer and can efficiently process both fixed-point and floating-point calculations in parallel. To achieve reconfigurability, the STT-MRAM array is divided into four subarrays. With a dedicated array connector, the four subarrays can either operate independently at the same time or work together as a single array. The proposed architecture is evaluated using Cadence Virtuoso, and the simulation results show that it consumes less energy when performing fixed-point or floating-point operations.
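To make the reconfiguration idea concrete, the following is a minimal behavioural sketch, not the GCIM circuit. It assumes, purely for illustration, that each subarray handles an 8-bit slice of an operand and that, when the subarrays work as a whole array, the array connector forwards the carry from one subarray to the next. The slice width, the carry-forwarding role of the connector, and the names `subarray_add`, `split_mode` and `merged_mode` are all hypothetical.

```python
"""Toy model of a four-subarray reconfigurable adder.

HYPOTHETICAL: slice width, connector behaviour and all names are
illustrative assumptions, not taken from the GCIM paper.
"""
from typing import List, Tuple

SLICE_BITS = 8                      # assumed bit width handled by one subarray
MASK = (1 << SLICE_BITS) - 1
NUM_SUBARRAYS = 4                   # the array is split into four subarrays


def subarray_add(a: int, b: int, carry_in: int = 0) -> Tuple[int, int]:
    """Add two SLICE_BITS-wide values inside one subarray.

    Returns (sum truncated to SLICE_BITS, carry_out)."""
    total = a + b + carry_in
    return total & MASK, total >> SLICE_BITS


def split_mode(pairs: List[Tuple[int, int]]) -> List[int]:
    """Independent mode: every subarray adds its own operand pair in the
    same step; carries are not shared, so each result wraps modulo 2**8."""
    assert len(pairs) == NUM_SUBARRAYS
    return [subarray_add(a, b)[0] for a, b in pairs]


def merged_mode(a: int, b: int) -> int:
    """Whole-array mode: the (assumed) connector passes each subarray's
    carry to the next, so the four slices behave as one 32-bit adder."""
    result, carry = 0, 0
    for i in range(NUM_SUBARRAYS):
        sa = (a >> (i * SLICE_BITS)) & MASK   # slice of a held by subarray i
        sb = (b >> (i * SLICE_BITS)) & MASK   # slice of b held by subarray i
        s, carry = subarray_add(sa, sb, carry)
        result |= s << (i * SLICE_BITS)
    return result                              # final carry dropped: modulo 2**32


if __name__ == "__main__":
    print(split_mode([(200, 100), (1, 2), (255, 1), (10, 20)]))  # [44, 3, 0, 30]
    print(hex(merged_mode(0x00FFFFFF, 1)))                       # 0x1000000
```

The same four subarrays either produce four independent 8-bit results in one step or one 32-bit result whose carries ripple through the connector; this trade-off between parallelism and word width is the kind of flexibility the abstract attributes to the reconfigurable array.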

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 61701013, in part by the State Key Laboratory of Software Development Environment under Grant SKLSDE-2018ZX-07, in part by the National Key Technology Program of China under Grant 2017ZX01032101, in part by the State Key Laboratory of Computer Architecture under Grant CARCH201917, and in part by the 111 Talent Program under Grant B16001.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

About this article

Cite this article

Pan, Y., Jia, X., Cheng, Z. et al. An STT-MRAM based reconfigurable computing-in-memory architecture for general purpose computing. CCF Trans. HPC 2, 272–281 (2020). https://doi.org/10.1007/s42514-020-00038-5

DOI: https://doi.org/10.1007/s42514-020-00038-5