Abstract

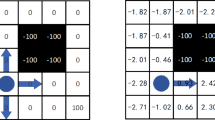

This study proposes a novel Q-learning-based algorithm for generating the shortest trajectory. A major issue in grid-world problems is generalizing across environments. Q-learning learns without prior knowledge of the system through trial-and-error interaction driven by rewards and penalties, and it stores every decision in a look-up table. The decision-making system trains the agent over a series of episodes. In this paper, we present novel algorithms for optimal trajectory analysis based on state-action pairs. Comparisons with other learning algorithms, in terms of trajectory efficiency versus number of episodes and prediction accuracy versus number of episodes, show that the proposed algorithm outperforms standard Q-learning. The approach can be applied to autonomous systems, computer vision, route optimization, the Internet of Things (IoT), and distributed systems.
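The abstract summarizes tabular Q-learning. An agent with no model of its environment learns from rewards and penalties, keeps its state-action values in a look-up table, and is trained over episodes. The sketch below illustrates that baseline on a small grid world and reads a trajectory off the learned table by following the highest-valued action from the start. It is a minimal illustration of standard Q-learning, not the authors' proposed algorithm. The grid layout, the reward values (-1 per move, -5 for bumping into an obstacle or the edge), the hyperparameters (`alpha`, `gamma`, `epsilon`, episode count) and the helper names `step`, `train` and `greedy_path` are all hypothetical choices made for illustration.

```python
import random

# Hypothetical 5x5 grid world (illustration only):
# S = start, G = goal, # = obstacle, . = free cell.
GRID = [
    "S...#",
    ".##.#",
    "...#.",
    "#.#..",
    "....G",
]
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right
START, GOAL = (0, 0), (4, 4)


def step(state, action):
    """Apply an action. Moves into obstacles or off the grid leave the agent in place."""
    r, c = state[0] + ACTIONS[action][0], state[1] + ACTIONS[action][1]
    if not (0 <= r < len(GRID) and 0 <= c < len(GRID[0])) or GRID[r][c] == "#":
        return state, -5.0, False  # assumed penalty for a blocked move
    nxt = (r, c)
    return nxt, -1.0, nxt == GOAL  # assumed -1 step cost favours shorter paths


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=200, seed=0):
    """Standard tabular Q-learning; the dict q is the look-up table of action values."""
    rng = random.Random(seed)
    q = {}  # state -> list of action values, lazily initialised to 0.0
    for _ in range(episodes):
        state = START
        for _ in range(max_steps):
            values = q.setdefault(state, [0.0] * len(ACTIONS))
            if rng.random() < epsilon:
                action = rng.randrange(len(ACTIONS))  # explore: random action
            else:
                action = max(range(len(ACTIONS)), key=values.__getitem__)  # exploit
            nxt, reward, done = step(state, action)
            # Q-learning target: reward plus discounted best value of the next state.
            next_best = 0.0 if done else max(q.setdefault(nxt, [0.0] * len(ACTIONS)))
            values[action] += alpha * (reward + gamma * next_best - values[action])
            state = nxt
            if done:
                break
    return q


def greedy_path(q, max_steps=50):
    """Follow the highest-valued action from START to read off the learned trajectory."""
    state, path = START, [START]
    for _ in range(max_steps):
        if state == GOAL:
            break
        values = q.get(state, [0.0] * len(ACTIONS))
        action = max(range(len(ACTIONS)), key=values.__getitem__)
        state, _, _ = step(state, action)
        path.append(state)
    return path


if __name__ == "__main__":
    q_table = train()
    path = greedy_path(q_table)
    print(len(path) - 1, "steps:", path)
```

All rewards in this sketch are non-positive and the table starts at zero, so the initial values are optimistic. That encourages the agent to try untested actions early. The per-move cost makes shorter routes score higher, so once the values have settled, the greedy read-out tends toward a shortest path (8 moves on this assumed grid).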


Funding

This study is not funded.

Author information

Authors and Affiliations

Contributions

VBS: Assistant Professor, MANIT, Bhopal, Madhya Pradesh, India. DKM: Assistant Professor, ASET, Amity University, Gwalior, Madhya Pradesh, India.

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the topical collection “Machine Intelligence and Smart Systems” guest edited by Manish Gupta and Shikha Agrawal.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kumar, M., Mishra, D.K. & Semwal, V.B. A Novel Algorithm for Optimal Trajectory Generation Using Q Learning. SN COMPUT. SCI. 4, 447 (2023). https://doi.org/10.1007/s42979-023-01876-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42979-023-01876-0