Abstract

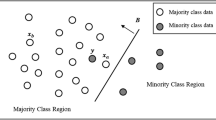

The class imbalance problem is prevalent in many classification tasks, such as disease identification using microarray data and network intrusion detection. In these tasks the class distribution is skewed towards one class, known as the majority class. Traditional classifiers may perform poorly in such cases because they tend to become biased towards the majority class. To address this problem, this paper proposes an intelligent undersampling technique. The method first groups the majority class samples into \(l\) clusters, for a chosen number \(l\), using the K-means clustering algorithm. The cluster centroids are selected to form the undersampled majority class set. A classifier is then trained on this undersampled dataset, which consists of the selected majority class samples and all the minority class samples. The trained model is used to predict the probability that each majority class sample belongs to the minority class. A Gaussian distribution is then constructed from these probabilities and used to select the top p-percent of samples from each cluster. The centroid of each cluster is recomputed from these samples only, and it becomes the new representative sample for that cluster in the dataset. The classifier is trained again on these samples together with the minority class samples, and thus improves iteratively. On some standard datasets, the proposed method performs better than most state-of-the-art methods.
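The loop described in the abstract can be sketched roughly as follows. This is a minimal illustration under several assumptions that the abstract does not settle, not the authors' implementation: the classifier is a small logistic regression trained by gradient descent, "top p-percent" is read as the samples whose predicted minority-class probability lies above the \(1 - p\) quantile of a Gaussian fitted to the probabilities within the cluster, and the number of rounds is fixed in advance. All function and parameter names (`kmeans`, `fit_logreg`, `iterative_cluster_undersample`, `n_rounds`, and so on) are hypothetical.

```python
import numpy as np
from statistics import NormalDist


def kmeans(X, k, rng, n_iter=50):
    """Plain Lloyd's K-means; returns (centroids, labels)."""
    centroids = X[rng.choice(len(X), size=k, replace=False)].copy()
    for _ in range(n_iter):
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):  # an empty cluster keeps its old centroid
                centroids[j] = X[labels == j].mean(axis=0)
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return centroids, dists.argmin(axis=1)


def predict_proba(w, X):
    """Probability of the minority class (label 1) under the logistic model."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    return 1.0 / (1.0 + np.exp(-np.clip(Xb @ w, -30.0, 30.0)))


def fit_logreg(X, y, lr=0.1, n_iter=500):
    """Assumed stand-in classifier: logistic regression via gradient descent."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        w -= lr * Xb.T @ (predict_proba(w, X) - y) / len(y)
    return w


def iterative_cluster_undersample(X_maj, X_min, n_clusters, p=0.2,
                                  n_rounds=3, seed=0):
    rng = np.random.default_rng(seed)
    # Step 1: cluster the majority class; centroids are the first representatives.
    reps, labels = kmeans(X_maj, n_clusters, rng)
    y = np.r_[np.zeros(len(reps)), np.ones(len(X_min))]
    for _ in range(n_rounds):
        # Step 2: train on the representatives plus all minority samples.
        w = fit_logreg(np.vstack([reps, X_min]), y)
        # Step 3: score every majority sample with its minority-class probability.
        proba = predict_proba(w, X_maj)
        for j in range(n_clusters):
            idx = np.flatnonzero(labels == j)
            if idx.size == 0:
                continue
            pj = proba[idx]
            mu, sigma = float(pj.mean()), float(pj.std())
            if sigma > 0:
                # Assumed reading of "top p-percent": Gaussian (1 - p) quantile.
                cut = NormalDist(mu, sigma).inv_cdf(1.0 - p)
                keep = idx[pj >= cut]
            else:
                keep = idx
            if keep.size == 0:  # fall back to the single highest-scoring sample
                keep = idx[[pj.argmax()]]
            # Step 4: the recomputed centroid becomes the cluster's new sample.
            reps[j] = X_maj[keep].mean(axis=0)
    # Final classifier trained on the last set of representatives.
    return reps, fit_logreg(np.vstack([reps, X_min]), y)


# Synthetic two-dimensional data, used only to exercise the sketch.
data_rng = np.random.default_rng(42)
X_maj = data_rng.normal(0.0, 1.0, size=(300, 2))
X_min = data_rng.normal(2.5, 0.5, size=(20, 2))
reps, w = iterative_cluster_undersample(X_maj, X_min, n_clusters=20)
print(reps.shape)
```

Setting `n_clusters` equal to the number of minority samples, as in the usage lines, yields a balanced training set; that choice is an assumption made for the example rather than something the abstract specifies.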

Ethics declarations

Conflict of Interests

On behalf of all authors, the corresponding author states that there is no conflict of interest.

About this article

Cite this article

Bhattacharya, R., De, R., Chakraborty, A. et al. Clustering Based Undersampling for Effective Learning from Imbalanced Data: An Iterative Approach. SN COMPUT. SCI. 5, 386 (2024). https://doi.org/10.1007/s42979-024-02717-4

DOI: https://doi.org/10.1007/s42979-024-02717-4