Abstract

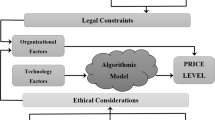

The growing use of artificial intelligence (A.I.) algorithms in businesses raises regulators' concerns about consumer protection. While pricing and recommendation algorithms have undeniable consumer-friendly effects, they can also harm consumers, for instance through the implementation of dark patterns: algorithms designed to restrict consumers' freedom of choice or to manipulate their decisions. Such practices are hardly new, but A.I. considerably increases their potential. Consumer protection runs up against several pitfalls. Sanctioning manipulation is particularly difficult because the resulting harm may be diffuse and hard to detect. At the same time, both ex-ante regulation and algorithmic transparency requirements may prove insufficient, if not counterproductive. Possible solutions lie, on the one hand, in counter-algorithms that consumers can use and, on the other hand, in the development of a compliance logic and, more particularly, of tools that allow companies to self-assess the risks induced by their algorithms. Such an approach echoes the one developed in corporate social and environmental responsibility. This contribution shows how the self-regulatory and compliance schemes used in these areas can inspire regulatory schemes for addressing the ethical risks of restricting and manipulating consumer choice.

Code availability

Not applicable.

Notes

Following Thaler [47] and Sunstein [44, 46], we can define sludge as ‘a viscous mixture’: excessive or unjustified frictions that make it difficult for consumers, investors, employees, students, patients, clients, small businesses, and many others to get what they want or to do as they wish.

A.I.-based recommendation tools may also deprive consumers of access to certain services, such as loans. Such refusals are sometimes based on social biases that algorithmic bias reflects and aggravates [11].

See, for instance, the cases of Tor and Anonabox. Sunstein [45] distinguishes between an architecture of control, in which the algorithm's proposals are based on the consumer's past choices, and an architecture of serendipity, in which the proposals made to consumers reflect only the average choices of the platform's users.
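The distinction drawn in this note can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that a consumer's history is a list of item labels and that both architectures rank items by simple choice frequency; the functions `recommend_control` and `recommend_serendipity` and the sample data are hypothetical, and real platforms rely on far richer models.

```python
from collections import Counter
from typing import Dict, List


def recommend_control(user_history: List[str], k: int = 3) -> List[str]:
    """Architecture of control (hypothetical sketch): rank items by how
    often *this* consumer chose them in the past."""
    counts = Counter(user_history)
    return [item for item, _ in counts.most_common(k)]


def recommend_serendipity(all_histories: Dict[str, List[str]], k: int = 3) -> List[str]:
    """Architecture of serendipity, as characterized in the note (hypothetical
    sketch): rank items by the choices of all platform users, ignoring the
    individual's own history. Ranking by total counts gives the same order
    as ranking by the per-user average, since the user count is constant."""
    counts: Counter = Counter()
    for history in all_histories.values():
        counts.update(history)
    return [item for item, _ in counts.most_common(k)]


# Hypothetical choice histories for three users.
histories = {
    "alice": ["jazz", "jazz", "blues", "jazz", "blues", "folk"],
    "bob": ["rock", "rock", "pop", "rock"],
    "carol": ["pop", "rock", "pop", "pop", "pop"],
}

print(recommend_control(histories["alice"], k=2))  # ['jazz', 'blues']
print(recommend_serendipity(histories, k=2))       # ['pop', 'rock']
```

For the same consumer, the first function reinforces her existing tastes, while the second exposes her to what the platform's users as a whole choose.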

Algorithmic tools such as Shadowbid allow consumers using marketplaces to state their personal reservation price; the tool “then purchases automatically when the price drops below this threshold” ([51], p. 589). The literature on algoactivism could also be drawn upon to compare consumers' possible counter-strategies with those of contractors who depend on platforms, such as drivers [25].
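The reservation-price mechanism described in this note can be sketched as a small consumer-side agent. This is not Shadowbid's implementation, which the source does not describe; the class `ReservationPriceAgent`, the `run` helper and the price feed are hypothetical, and the sketch assumes prices arrive as a simple sequence of observations.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class ReservationPriceAgent:
    """Hypothetical counter-algorithm: the consumer states a reservation
    price and the agent buys automatically once the price drops below it."""
    reservation_price: float
    purchased_at: Optional[float] = None

    def observe(self, price: float) -> bool:
        # Buy at most once, and only on a strict drop below the threshold.
        if self.purchased_at is None and price < self.reservation_price:
            self.purchased_at = price
            return True
        return False


def run(agent: ReservationPriceAgent, price_feed: Iterable[float]) -> Optional[float]:
    """Feed observed marketplace prices to the agent; return the purchase price, if any."""
    for price in price_feed:
        if agent.observe(price):
            break
    return agent.purchased_at


agent = ReservationPriceAgent(reservation_price=50.0)
print(run(agent, [62.0, 55.5, 50.0, 48.9, 45.0]))  # 48.9
```

The strict inequality mirrors "drops below": a price exactly equal to the reservation price does not trigger a purchase. Because the agent stops at the first qualifying price, it does not wait for the lower price of 45.0; a more elaborate agent could trade off waiting against the risk of the offer disappearing.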

As mentioned above, the European Commission's proposal establishes a continuum ranging from algorithmic systems that are prohibited insofar as they would exploit the vulnerabilities of specific groups, through systems involving high-stakes decisions, which are subject to ex-ante obligations, to systems involving less significant risks, which are subject to transparency obligations [50]. The algorithms that concern us fall into this third category. The E.U. Unfair Commercial Practices Directive already imposes conditions on their use, and the General Data Protection Regulation (GDPR) imposes transparency and explainability requirements on companies [24]. Under the A.I. proposal, only high-risk algorithms are required to set up a quality system for carrying out a lifecycle impact assessment.
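The three-tier continuum described in this note can be sketched as a simple decision function. This is a deliberately simplified reading of the note, not of the legal text: the two boolean criteria, the `RiskTier` enum, the `classify` function and the `OBLIGATIONS` table are hypothetical, and actual classification under the proposal depends on detailed legal criteria.

```python
from enum import Enum
from typing import Dict, List


class RiskTier(Enum):
    # Hypothetical labels mirroring the three categories named in the note.
    PROHIBITED = "prohibited"
    HIGH_RISK = "ex-ante obligations"
    LOWER_RISK = "transparency obligations"


def classify(exploits_group_vulnerabilities: bool, high_stakes_decision: bool) -> RiskTier:
    """Place a system on the continuum. Checks run from most to least severe,
    so a system meeting several criteria lands in the strictest tier."""
    if exploits_group_vulnerabilities:
        return RiskTier.PROHIBITED
    if high_stakes_decision:
        return RiskTier.HIGH_RISK
    return RiskTier.LOWER_RISK


# Illustrative obligations per tier, paraphrasing the note (not legal text).
OBLIGATIONS: Dict[RiskTier, List[str]] = {
    RiskTier.PROHIBITED: ["prohibited"],
    RiskTier.HIGH_RISK: ["ex-ante obligations", "quality system for lifecycle impact assessment"],
    RiskTier.LOWER_RISK: ["transparency obligations"],
}

# A consumer-facing pricing or recommendation algorithm, which the note places
# in the third category.
tier = classify(exploits_group_vulnerabilities=False, high_stakes_decision=False)
print(tier, OBLIGATIONS[tier])
```

The ordering of the checks encodes the continuum: prohibition takes precedence over ex-ante obligations, which in turn take precedence over mere transparency requirements.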

References

Acquisti, A., Brandimarte, L., Loewenstein, G.: Secrets and likes: The drive for privacy and the difficulty of achieving it in the digital age. J. Consum. Psychol. 30(4), 736–758 (2020)

Athey, S.: Beyond prediction: Using big data for policy problems. Science 355(6324), 483–485 (2017)

Agrawal, A., Gans, J., Goldfarb, A.: How AI Will Change Strategy: A Thought Experiment. Harvard Business Review. https://hbr.org/2017/10/how-ai-will-change-strategy-a-thought-experiment (2017)

Bakos, Y., Marotta-Wurgler, F., Trossen, D.R.: Does anyone read the fine print? Consumer attention to standard-form contracts. J. Legal Stud. 43, 1 (2014)

British Office for Artificial Intelligence: Understanding artificial intelligence ethics and safety: Understand how to use artificial intelligence ethically and safely. https://www.gov.uk/guidance/understanding-artificial-intelligence-ethics-and-safety (2019)

Calo, M.R.: Digital market manipulation. George Washington Law Rev. 82(4), 995–1051 (2014)

Canadian Treasury Board Secretariat: Directive on Automated Decision-Making. Policy on Service and Digital. https://www.tbs-sct.gc.ca/pol/doc-eng.aspx?id=32592 (2020)

Coglianese, C., Mendelson, E.: Meta-regulation and self-regulation. In: Cave, M., Baldwin, R., Lodge, M. (eds.) The Oxford Handbook of Regulation, pp. 146–168. Oxford University Press, Oxford (2010)

Colangelo, G., Maggiolino, M.: From fragile to smart consumers: Shifting paradigm for the digital era. Comput. Law Secur. Rev. 35(2), 173–181 (2019)

Contissa, G., Lagioia, F., Lippi, M., Micklitz, H.-W., Pałka, P., Sartor, G., Torroni, P.: Towards Consumer-Empowering Artificial Intelligence. In: Proceedings of the 27th International Joint Conference on Artificial Intelligence (IJCAI-18), pp. 5150–5157 (2018)

Citron, D.K., Pasquale, F.: The scored society: Due process for automated predictions. Washington Law Rev. 89, 1 (2014)

Cusumano, M.A., Gawer, A., Yoffie, D.B.: Can self-regulation save digital platforms? Ind. Corpor. Change (2021). https://doi.org/10.1093/icc/dtab052

E.U. Commission: Proposal for a Regulation laying down harmonized rules on artificial intelligence (Artificial Intelligence Act) (2021)

E.U. Commission: The Digital Services Act Package, https://digital-strategy.ec.europa.eu/en/policies/digital-services-act-package (2020)

Ezrachi, A., Stucke, M.E.: Digitalisation and its impact on innovation. R&I Paper Series, Working Paper 2020/07, European Commission, October. https://wbc-rti.info/object/document/20829/attach/KIBD20003ENN_en.pdf (2020)

Falco, G., Shneiderman, B., Badger, J., Carrier, R., Dahbura, A., Danks, D., Eling, M., Goodloe, A., Gupta, J., Hart, C., Jirotka, M., Johnson, H., LaPointe, C., Llorens, A., Mackworth, A., Maple, C., Pálsson, S., Pasquale, F., Winfield, A., Yeong, Z.: Governing A.I. safety through independent audits: Independent audit of A.I. systems serves as a pragmatic approach to an otherwise burdensome and unenforceable assurance challenge. Nature Machine Intelligence 3, 566–571, July. https://t.co/ksb7ZYozHi (2021)

Floridi, L.: Faultless responsibility: on the nature and allocation of moral responsibility for distributed moral actions. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. (2016). https://doi.org/10.1098/RSTA.2016.0112

Gal, M.: Algorithmic challenges to autonomous choice. Michigan Telecommun. Technol. Law Rev. 25(1), 59–104 (2018)

Gal, M., Elkin-Koren, N.: Algorithmic consumers. Harvard J. Law Technol. 30, 309 (2017)

Grafanaki, S.: Drowning in big data: Abundance of choice, scarcity of attention and the personalization trap, a case for regulation. Richmond J. Law Technol. 24(1), 1–66 (2017)

Gray, C.M., Kou, Y., Battles, B., Hoggatt, J., Toombs, A.: The Dark (Patterns) Side of UX Design. In: Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18), pp. 1–14. Association for Computing Machinery, New York. https://doi.org/10.1145/3173574.3174108 (2018)

Government of Canada: Algorithmic Impact Assessment, https://open.canada.ca/data/en/dataset/5423054a-093c-4239-85be-fa0b36ae0b2e/resource/7381144a-8e88-4d74-b83b-746c13de4093 (2020)

Jin, G.Z., Wagman, L.: Big data at the crossroads of antitrust and consumer protection. Information Economics and Policy. https://lwagman.org/BigDataAntitrustCP.pdf (2020)

Kaminski, M., Malgieri, G.: Algorithmic impact assessments under the GDPR: Producing multi-layered explanations. International Data Privacy Law. https://doi.org/10.1093/idpl/ipaa020 (2020)

Kellogg, K.C., Valentine, M.A., Christin, A.: Algorithms at work: the new contested terrain of control. Acad. Manag. Ann. 14(1), 366–410 (2020)

Kouloukoui, D., De Marcellis-Warin, N., Armellini, F., Warin, T., Andrade, T.E.: Factors influencing the perception of exposure to climate risks: evidence from the world’s largest carbon-intensive industries. J. Clean. Prod. (2021). https://doi.org/10.1016/j.jclepro.2021.127160

Lehagre, E.: L’évaluation d’impact algorithmique : un outil qui doit encore faire ses preuves [Algorithmic impact assessment: a tool that has yet to prove itself]. Rapport Etalab. https://www.etalab.gouv.fr/wp-content/uploads/2021/07/Rapport_EIA_ETALAB-.pdf (2021)

Luguri, J., Strahilevitz, L.: Shining a Light on Dark Patterns. Journal of Legal Analysis 43. University of Chicago Coase-Sandor Institute for Law & Economics Research Paper No. 879; University of Chicago Public Law Working Paper No. 719. Available at SSRN: https://ssrn.com/abstract=3431205 or https://doi.org/10.2139/ssrn.3431205 (2021)

Lippi, M., Contissa, G., Lagioia, F., Micklitz, H.-W., Pałka, P., Sartor, G., Torroni, P.: The force awakens: artificial intelligence for consumer law. J. Artif. Intell. Res. 67, 169–187 (2020)

Maitland, I.: The limits of self-regulation. Calif. Manage. Rev. 27(3), 135 (1985)

Marciano, A., Nicita, A., Ramello, G.B.: Big data and big techs: understanding the value of information in platform capitalism. Eur. J. Law Econ. (2020). https://doi.org/10.1007/s10657-020-09675-1

Marty, F., Warin, T.: Innovation in Digital Ecosystems: Challenges and Questions for Competition Policy. CIRANO Working Paper Series. https://cirano.qc.ca/fr/sommaires/2020s-10 (2020a)

Marty, F., Warin, T.: Keystone Players and Complementors: An Innovation Perspective. CIRANO Working Paper Series 2020s‑61. https://cirano.qc.ca/fr/sommaires/2020s-61 (2020b)

Mulligan, D.K., Regan, P., King, J.: The fertile dark matter of privacy takes on the dark patterns of surveillance. J. Consum. Psychol. 30(4), 767–773 (2020)

Obar, J., Oeldorf-Hirsch, A.: The Clickwrap: A Political Economic Mechanism for Manufacturing Consent on Social Media. Social Media + Society 4(3), 2056305118784770 (2018)

Pałka, P., Lippi, M.: Big data analytics, online terms of service, and privacy policies. In: Vogl, R. (ed.) Research Handbook on Big Data Law. Edward Elgar (2020)

Ranchordas, S.: Experimental Regulations for A.I.: Sandboxes for Morals and Mores, University of Groningen Faculty of Law Research Paper No. 7/2021, Available at SSRN: https://ssrn.com/abstract=3839744 or https://doi.org/10.2139/ssrn.3839744 (2021)

Rasch, A., Thöne, M., Wenzel, T.: Drip pricing and its regulation: Experimental evidence. J. Econ. Behav. Organ. 176, 353–370 (2020)

Smuha, N.: From a “race to A.I.” to a “race to A.I. regulation”: regulatory competition for artificial intelligence. Law Technol. 13, 1 (2021)

Smuha, N., Ahmed-Rengers, E., Harkens, A., Li, W., MacLaren, J., Piselli, R., Yeung, K.: How the E.U. can achieve legally trustworthy A.I.: a response to the European Commission’s proposal for an artificial intelligence act. LEADS working paper, University of Birmingham, August (2021)

Stephenson, A.: The pursuit of CSR and business ethics policies: Is it a source of competitive advantage for organizations? J. Am. Acad. Bus. 14(2), 251–262 (2009)

Stigler Center: Stigler committee on digital platforms final report. The University of Chicago, Chicago (2019)

Susser, D., Roessler, B., Nissenbaum, H.: Online manipulation: Hidden influences in a digital world. Georgetown Law Technol. Rev. 4(1), 2–45 (2020)

Sunstein, C.: Sludge and ordeal. Duke Law J. 68(8), 1843–1883 (2019)

Sunstein, C.: The Ethics of Nudging. Yale J. Regul. 32(2). https://digitalcommons.law.yale.edu/yjreg/vol32/iss2/6 (2015)

Sunstein, C.: Sludge Audits. Behav. Public Policy, 1–20 (2020)

Thaler, R.H.: Nudge, not sludge. Science 361(6401), 431 (2018)

Thelisson, E., Morin, J.H., Rochel, J.: A.I. governance: Digital responsibility as a building block—towards an index of digital responsibility. Delphi Interdiscip. Rev. Emerg. Technol. 2(4), 167–178 (2019)

U.S. Government Accountability Office: Artificial Intelligence: An Accountability Framework for Federal Agencies and Other Entities. GAO-21-519SP (2021)

Veale, M., Zuiderveen Borgesius, F.: Demystifying the Draft E.U. Artificial Intelligence Act. SocArXiv, July 6. https://doi.org/10.31235/osf.io/38p5f (2021)

Wagner, G., Eidenmüller, H.: Down by algorithms? Siphoning rents, exploiting biases, and shaping preferences: regulating the dark side of personalized transaction. Univ. Chicago Law Rev. 86, 581–609 (2019)

Warin, T., Leiter, D.: Homogenous goods markets: An empirical study of price dispersion on the internet. Int. J. Econ. Bus. Res. 4(5), 514–529 (2012). https://www.inderscienceonline.com/doi/abs/10.1504/IJEBR.2012.048776

Warin, T., Troadec, A.: Price strategies in a big data world. In: Encyclopedia of E-Commerce Development, Implementation, and Management, Chap. 46, pp. 625–638. IGI Global, March (2016)

Yeung, K.: Hypernudge: Big data as a mode of regulation by design. Inf. Commun. Soc. 20(1), 118–136 (2017)

Funding

Not applicable.

Ethics declarations

Conflicts of interest

No conflict of interest for this manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

de Marcellis-Warin, N., Marty, F., Thelisson, E. et al. Artificial intelligence and consumer manipulations: from consumer's counter algorithms to firm's self-regulation tools. AI Ethics 2, 259–268 (2022). https://doi.org/10.1007/s43681-022-00149-5

DOI: https://doi.org/10.1007/s43681-022-00149-5