Abstract

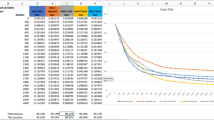

A model’s outcomes depend on its inputs: the training data used to build the model changes its output (a data-centric outcome), and the choice of hyperparameters also affects its output and performance (a model-centric outcome). When building classification models, data scientists generally treat model performance as the key metric and often focus on accuracy. However, model development should also consider metrics that assess performance in other ways, such as fairness. Fairness assessment is often overlooked during model development but should be part of a model’s full assessment before deployment. This research investigates the fairness–accuracy tradeoff that arises when only one hyperparameter, the activation function in the hidden layer of a neural network for binary classification, is changed, to assess how this single change alters the algorithm’s accuracy and fairness. Neural networks were used for this assessment because of their wide usage across domains, the limitations of current bias measurement methods, and the underlying challenges in their interpretability. Findings suggest that assessing both accuracy and fairness during model development provides value by mitigating potential negative effects for users and reducing organizational risk. No particular activation function was found to be fairer than another. Nevertheless, the notable differences observed in the fairness and accuracy measures could help developers deploy a model with high accuracy and robust fairness. Algorithm development should include a grid search for hyperparameter optimization that includes fairness along with performance measures such as accuracy. While the actual choice of hyperparameters may depend on the business context and dataset considered, an optimal development process should use both fairness and model performance metrics.
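The recommendation to score candidate configurations on fairness as well as accuracy can be illustrated with a minimal sketch. It is not the authors' code. It assumes demographic parity difference as the fairness measure (this excerpt does not name the metric that was used), and the labels, group memberships, and per-activation predictions are made-up toy values rather than results from the study. The helper names `score_grid` and `select` are hypothetical.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Score = Tuple[str, float, float]  # (candidate name, accuracy, parity gap)


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def demographic_parity_difference(y_pred: Sequence[int],
                                  group: Sequence[str]) -> float:
    """Largest gap in positive-prediction rate between groups (0 = parity)."""
    totals: Dict[str, int] = {}
    positives: Dict[str, int] = {}
    for p, g in zip(y_pred, group):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + p
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def score_grid(y_true: Sequence[int], group: Sequence[str],
               predictions: Dict[str, Sequence[int]]) -> List[Score]:
    """Score every candidate configuration on both metrics."""
    return [
        (name, accuracy(y_true, y_pred),
         demographic_parity_difference(y_pred, group))
        for name, y_pred in predictions.items()
    ]


def select(scores: List[Score], max_gap: float) -> Optional[Score]:
    """Most accurate candidate whose parity gap is at most max_gap, else None."""
    eligible = [s for s in scores if s[2] <= max_gap]
    return max(eligible, key=lambda s: s[1]) if eligible else None


# Toy, hand-written values for illustration only (NOT study results).
# In practice each prediction list would come from a network trained with
# the named hidden-layer activation function and evaluated on held-out data.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = {
    "relu":    [1, 0, 1, 1, 0, 0, 0, 0],
    "tanh":    [1, 0, 1, 0, 0, 1, 1, 0],
    "sigmoid": [1, 1, 1, 1, 0, 0, 0, 0],
}

scores = score_grid(y_true, group, predictions)
for name, acc, gap in scores:
    print(f"{name:8s} accuracy={acc:.3f} parity_gap={gap:.3f}")
print("selected:", select(scores, max_gap=0.1))
```

In this toy setup the most accurate candidate also has a large parity gap, so selecting on accuracy alone and selecting with a fairness threshold give different answers. The threshold `max_gap` is an illustrative choice; an appropriate value would depend on the business context and dataset.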

Data availability

The data and code are available in the GitHub repository listed in Appendix B and in the supplementary materials.

Acknowledgements

The authors confirm that the research was independent and was not funded or sponsored.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Appendices

Appendix A

1.1 Data sources

1. Adult dataset

Source: Dua, D. and Graff, C. (2019). UCI Machine Learning Repository. Available at: http://archive.ics.uci.edu/ml/datasets/Adult. Irvine, CA: University of California, School of Information and Computer Science [Accessed 01 Sept. 2022].

2. French dataset

Source: Mabilama, J. M. (2020). E-commerce - Users of a C2C fashion store. [online] Data.world. Available at: https://data.world/jfreex/e-commerce-users-of-a-french-c2c-fashion-store [Accessed 05 Jan. 2022].

Appendix B

Code and Data Repository: https://github.com/Michael-AI-ML/fairness

About this article

Cite this article

McCarthy, M.B., Narayanan, S. Fairness–accuracy tradeoff: activation function choice in a neural network. AI Ethics 3, 1423–1432 (2023). https://doi.org/10.1007/s43681-022-00250-9

DOI: https://doi.org/10.1007/s43681-022-00250-9