Abstract

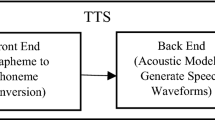

For any given mixed-language text, a multilingual synthesizer produces speech that is intelligible to human listeners. However, because speech data are usually collected from native speakers to avoid foreign accent, the synthesized speech switches speakers at language switching points. To overcome this, the multilingual speech corpus can be converted to a polyglot speech corpus using cross-lingual voice conversion, and a polyglot synthesizer can then be developed. Cross-lingual voice conversion is a technique that produces utterances in the target speaker’s voice from the source speaker’s utterances, irrespective of the language and text spoken by the source and target speakers. Conventional voice conversion techniques based on GMM tokenization suffer from degraded speech quality because the spectrum is oversmoothed by statistical averaging. The current work focuses on alleviating the oversmoothing effect in the GMM-based voice conversion technique by using (source) language-specific mixture weights in a multi-level GMM, followed by selective pole focusing in the unvoiced speech segments. Continuity between the frames of the converted speech is ensured by fifth-order mean filtering in the cepstral domain. In the current work, cross-lingual voice conversion is performed for four regional Indian languages, namely Tamil, Telugu, Malayalam, and Hindi, and for one foreign language, Indian English. The performance of the system is evaluated subjectively, using an ABX listening test for speaker identity and the mean opinion score for quality. Experimental results demonstrate that the proposed method effectively improves quality and intelligibility by mitigating the oversmoothing effect in the voice-converted speech. A hidden Markov model-based polyglot text-to-speech system is also developed using this converted speech corpus, making the system suitable for unrestricted vocabulary.
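The frame-smoothing step can be illustrated with a minimal sketch. It assumes that "fifth-order mean filtering" means a centered five-frame moving average applied independently to each cepstral coefficient along the time axis, with the window truncated at the utterance boundaries. The function name `mean_filter_frames`, the edge handling, and the toy input are illustrative assumptions and are not taken from the paper.

```python
def mean_filter_frames(frames, order=5):
    """Smooth each cepstral coefficient over time with a centered moving average.

    frames: list of frames, each a list of cepstral coefficients (equal lengths).
    order:  number of frames averaged (assumed odd). The window is truncated at
            the start and end of the utterance (an assumption, not the paper's
            stated edge handling).
    """
    if order < 1 or order % 2 == 0:
        raise ValueError("order must be a positive odd integer")
    half = order // 2
    n = len(frames)
    smoothed = []
    for t in range(n):
        # Frames inside the (possibly truncated) window centered on frame t.
        lo, hi = max(0, t - half), min(n, t + half + 1)
        window = frames[lo:hi]
        dim = len(frames[t])
        # Average every coefficient index across the frames in the window.
        smoothed.append([sum(f[d] for f in window) / len(window) for d in range(dim)])
    return smoothed


# Hypothetical toy input: one coefficient per frame with a single-frame spike.
toy = [[0.0], [0.0], [5.0], [0.0], [0.0]]
result = mean_filter_frames(toy)
```

On the toy input, the isolated spike in the middle frame is spread across its neighbours, which is the kind of frame-to-frame discontinuity such a filter is meant to suppress. A longer window would smooth more strongly at the cost of blurring genuine spectral transitions.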

Notes

Experiments were conducted with the number of mixture components varied in powers of two from 16 to 1024. The performance did not seem to improve beyond 128 components.


Additional information

The authors would like to thank the Department of Information Technology, Ministry of Communication and Technology, Government of India, for funding the project, “Development of Text-to-Speech synthesis for Indian Languages Phase II”, Ref. no. 11(7)/2011- HCC(TDIL).

Cite this article

Ramani, B., Actlin Jeeva, M.P., Vijayalakshmi, P. et al. A Multi-level GMM-Based Cross-Lingual Voice Conversion Using Language-Specific Mixture Weights for Polyglot Synthesis. Circuits Syst Signal Process 35, 1283–1311 (2016). https://doi.org/10.1007/s00034-015-0118-1
