Abstract

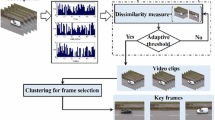

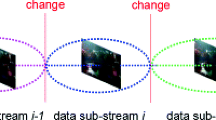

The volume of video recorded in real-life scenarios has grown rapidly in recent years, and with it the demand for ways to review such videos in a limited amount of time. In this paper, we focus on the automatic summarization of surveillance videos and present a new key-frame selection method for this task. We first introduce a symmetric dissimilarity measure based on the f-divergence for multiple change-point detection and use it to segment a given video sequence into a set of non-overlapping clips. Key frames are then extracted from the resulting clips by a standard clustering procedure to form the final video summary. Experiments on a wide range of test data show that the proposed method outperforms the state-of-the-art competitors considered, which suggests good potential for real-world applications.
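The pipeline outlined in the abstract (score change points with a symmetric divergence, cut the sequence into non-overlapping clips, pick key frames per clip) can be illustrated with a minimal sketch. This is not the authors' implementation, and everything concrete in it is an assumption made for illustration: each frame is reduced to one scalar feature, the f-divergence is instantiated as a histogram-based Kullback-Leibler divergence summed in both directions, and the clustering step is replaced by a single-centroid stand-in that picks the frame closest to the clip mean. The function names, `window`, `threshold`, and `bins` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def histogram(values: Sequence[float], bins: int, lo: float, hi: float,
              eps: float = 1e-6) -> List[float]:
    """Smoothed, normalized histogram of scalar values over [lo, hi]."""
    counts = [eps] * bins              # eps keeps every bin strictly positive
    width = (hi - lo) / bins or 1.0    # fall back to 1.0 if all values are equal
    for v in values:
        counts[min(int((v - lo) / width), bins - 1)] += 1.0
    total = sum(counts)
    return [c / total for c in counts]


def kl(p: Sequence[float], q: Sequence[float]) -> float:
    """Kullback-Leibler divergence, one member of the f-divergence family."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def symmetric_divergence(a: Sequence[float], b: Sequence[float],
                         bins: int = 8) -> float:
    """Symmetrized score D(P||Q) + D(Q||P) between two windows of features."""
    lo, hi = min(min(a), min(b)), max(max(a), max(b))
    p, q = histogram(a, bins, lo, hi), histogram(b, bins, lo, hi)
    return kl(p, q) + kl(q, p)


def detect_change_points(features: Sequence[float], window: int = 5,
                         threshold: float = 1.0) -> List[int]:
    """Indices where the divergence between the windows before and after
    the index is a local maximum above the (assumed) threshold."""
    n = len(features)
    scores = [0.0] * n
    for t in range(window, n - window + 1):
        scores[t] = symmetric_divergence(features[t - window:t],
                                         features[t:t + window])
    return [t for t in range(1, n - 1)
            if scores[t] > threshold
            and scores[t] >= scores[t - 1]
            and scores[t] > scores[t + 1]]


def split_into_clips(n_frames: int,
                     change_points: Sequence[int]) -> List[Tuple[int, int]]:
    """Non-overlapping [start, end) clips delimited by the change points."""
    bounds = [0] + list(change_points) + [n_frames]
    return list(zip(bounds[:-1], bounds[1:]))


def select_key_frames(features: Sequence[float],
                      change_points: Sequence[int]) -> List[int]:
    """One key frame per clip: the frame nearest the clip mean
    (a single-centroid stand-in for the clustering step)."""
    keys = []
    for start, end in split_into_clips(len(features), change_points):
        mean = sum(features[start:end]) / (end - start)
        keys.append(min(range(start, end),
                        key=lambda i: abs(features[i] - mean)))
    return keys
```

As a usage example, a synthetic feature sequence such as `[0.0] * 20 + [10.0] * 20` can be passed to `detect_change_points` and then to `select_key_frames` to obtain one key-frame index per detected clip. Summing the divergence in both directions makes the score independent of which window is treated as the reference, which is the role a symmetric strategy plays in this sketch.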

Notes

A group of ten domain volunteers was invited to first view the original videos used in the experiments and then jointly discuss and determine the ground-truth frames. For the selected ground-truth frames, what matters most is whether they cover all events and subjects of interest in the videos; we therefore did not provide the number or indices of these frames.

Acknowledgements

This work was financially supported in part by the National Natural Science Foundation of China (61403232, 61327003, 51775319), the Natural Science Foundation of Shandong Province, China (ZR2014FQ025), and the Young Scholars Program of Shandong University (YSPSDU, 2015WLJH30).

About this article

Cite this article

Gao, Z., Lu, G., Lyu, C. et al. Key-frame selection for automatic summarization of surveillance videos: a method of multiple change-point detection. Machine Vision and Applications 29, 1101–1117 (2018). https://doi.org/10.1007/s00138-018-0954-7

DOI: https://doi.org/10.1007/s00138-018-0954-7