Abstract

Accumulative representations provide a way to represent variable-length videos with constant-length features. In this study, we present aligned temporal accumulative features (ATAF), a skeleton heatmap-based feature for efficient representation and modeling of isolated sign language videos. Inspired by the movement-hold model in sign linguistics, we extract keyframes, align them using temporal transformer networks (TTNs), and extract descriptors using convolutional neural networks (CNNs). In the proposed approach, aligned keyframes increase the recognition power of accumulative features because linguistically significant parts of signs are represented uniquely. Because keyframes are detected from hand movement, they can differ from signer to signer. To overcome this challenge, we implement ATAF with two alignment approaches, alignment of sampled frames and alignment of keyframes, using both finger speed differences and hand joint heatmaps to perform end-to-end alignment during classification. Results demonstrate that the proposed method, in combination with 3D-CNNs, achieves state-of-the-art recognition performance on the public BosphorusSign22k (BSign22k) dataset.
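To make the idea of a constant-length accumulative representation concrete, the following is a minimal sketch, not the authors' implementation. It assumes the per-frame joint heatmaps are already available as a NumPy array of shape (frames, joints, height, width), and it collapses the time axis into a fixed number of temporal channels using triangular weights. The function name `accumulate_heatmaps`, the three-channel default, and the triangular weighting are illustrative assumptions; keyframe extraction and TTN-based alignment are not shown.

```python
import numpy as np


def accumulate_heatmaps(heatmaps: np.ndarray, num_channels: int = 3) -> np.ndarray:
    """Collapse (T, J, H, W) joint heatmaps into a (J, num_channels, H, W) array.

    Hypothetical helper: the weighting scheme is an assumption for illustration.
    """
    if num_channels < 2:
        raise ValueError("num_channels must be at least 2")
    num_frames = heatmaps.shape[0]
    # Normalised time in [0, 1] for every frame (a single frame maps to 0).
    t = np.linspace(0.0, 1.0, num_frames)
    # Centres of the temporal channels, evenly spaced over [0, 1].
    centres = np.linspace(0.0, 1.0, num_channels)
    width = 1.0 / (num_channels - 1)
    # Triangular weights: weights[c, k] is the contribution of frame k to channel c.
    weights = np.clip(1.0 - np.abs(t[None, :] - centres[:, None]) / width, 0.0, 1.0)
    # Weighted sum over time: (C, T) with (T, J, H, W) gives (C, J, H, W).
    accumulated = np.tensordot(weights, heatmaps, axes=(1, 0))
    # Divide by the total weight per channel so clip length does not change the scale.
    totals = np.maximum(weights.sum(axis=1), 1e-8)
    accumulated = accumulated / totals[:, None, None, None]
    # Put the joint axis first: (J, C, H, W).
    return accumulated.transpose(1, 0, 2, 3)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    short_clip = rng.random((5, 2, 4, 4))   # 5 frames
    long_clip = rng.random((40, 2, 4, 4))   # 40 frames
    # Both clips map to the same feature shape regardless of their length.
    print(accumulate_heatmaps(short_clip).shape)
    print(accumulate_heatmaps(long_clip).shape)
```

Because the output shape depends only on the number of joints, channels, and the heatmap resolution, clips of any length can be passed to the same downstream CNN. In this sketch the frames fed to the accumulation are simply all frames of the clip; the abstract describes accumulating aligned sampled frames or keyframes instead.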

References

Gökgöz, K.: Negation in turkish sign language: the syntax of nonmanual markers. Sign Language Linguistics 14(1), 49–75 (2011)

Kuehne, H., Jhuang, H., Garrote, E., Poggio, T., Serre, T.: Hmdb: a large video database for human motion recognition. Science 2, 2556–2563 (2011)

Soomro, K., Zamir, A.R., Shah, M.: Ucf101: a dataset of 101 human actions classes from videos in the wild. arXiv:1212.0402 (2012)

Kay, W. et al.: The kinetics human action video dataset. arXiv:1705.06950 (2017)

Carreira, J., Zisserman, A.: Quo vadis, action recognition? a new model and the kinetics dataset. IEEE 2, 4724–4733 (2017)

Jiang, S., et al.: Skeleton Aware Multi-modal Sign Language Recognition. Springer, Berlin (2021)

Choutas, V., Weinzaepfel, P., Revaud, J., Schmid, C.: Potion: pose motion representation for action recognition. Science 3, 7024–7033 (2018)

Tran, D., et al.: A closer look at spatiotemporal convolutions for action recognition. Science 3, 6450–6459 (2018)

Liddell, S.K., Johnson, R.E.: American sign language: the phonological base. Sign Language Stud. 64(1), 195–277 (1989)

Pitsikalis, V., Theodorakis, S., Vogler, C., Maragos, P.: Advances in phonetics-based sub-unit modeling for transcription alignment and sign language recognition. In: IEEE, pp. 1–6 (2011)

Cooper, H., Ong, E.-J., Pugeault, N., Bowden, R.: Sign language recognition using sub-units. J. Mach. Learn. Res. 13(1), 2205–2231 (2012)

Bowden, R., Windridge, D., Kadir, T., Zisserman, A., Brady, M.: A Linguistic Feature Vector for the Visual Interpretation of Sign Language, pp. 390–401. Springer, Berlin (2004)

Theodorakis, S., Pitsikalis, V., Maragos, P.: Dynamic-static unsupervised sequentiality, statistical subunits and lexicon for sign language recognition. Image Vis. Comput. 32(8), 533–549 (2014)

Tornay, S.: Explainable Phonology-based Approach for Sign Language Recognition and Assessment. Ph.D. thesis, EPFL (2021)

Borg, M., Camilleri, K.P.: Phonologically-Meaningful Subunits for Deep Learning-Based Sign Language Recognition, pp. 199–217. Springer, Berlin (2020)

Camgoz, N.C., Hadfield, S., Koller, O., Bowden, R.: End-to-end hand shape and continuous sign language recognition. Subunets 3, 7 (2017)

Tavella, F., Schlegel, V., Romeo, M., Galata, A., Cangelosi, A.: Wlasl-lex: a dataset for recognising phonological properties in american sign language. arXiv preprint arXiv:2203.06096 (2022)

Caselli, N.K., Sehyr, Z.S., Cohen-Goldberg, A.M., Emmorey, K.: Asl-lex: a lexical database of American sign language. Behav. Res. Methods 49(2), 784–801 (2017)

Gao, Z., Lu, G., Lyu, C., Yan, P.: Key-frame selection for automatic summarization of surveillance videos: a method of multiple change-point detection. Mach. Vis. Appl. 29(7), 1101–1117 (2018)

Xiong, W., Lee, C.-M., Ma, R.-H.: Automatic video data structuring through shot partitioning and key-frame computing. Mach. Vis. Appl. 10(2), 51–65 (1997)

Fanfani, M., Bellavia, F., Colombo, C.: Accurate keyframe selection and keypoint tracking for robust visual odometry. Mach. Vis. Appl. 27(6), 833–844 (2016)

Tang, H., Liu, H., Xiao, W., Sebe, N.: Fast and robust dynamic hand gesture recognition via key frames extraction and feature fusion. Neurocomputing 331, 424–433 (2019)

Mo, H., Yamagishi, F., Ide, I., Satoh, S., Sakauchi, M.: Key shot extraction and indexing in a news video archive. IEICE Tech. Rep. 105(118), 55–59 (2005)

Xu, W., Miao, Z., Yu, J., Ji, Q.: Action recognition and localization with spatial and temporal contexts. Neurocomputing 333, 351–363 (2019)

Yang, R., Sarkar, S.: Detecting coarticulation in sign language using conditional random fields. Science 2, 108–112 (2006)

Zhao, Z., Elgammal, A.M.: Information Theoretic Key Frame Selection for Action Recognition, pp. 1–10. Springer, Berlin (2008)

Carlsson, S., Sullivan, J.: Action Recognition by Shape Matching to Key Frames, vol. 1. Citeseer, London (2001)

Lu, G., Zhou, Y., Li, X., Yan, P.: Unsupervised, efficient and scalable key-frame selection for automatic summarization of surveillance videos. Multimedia Tools Appl. 76(5), 6309–6331 (2017)

Rodriguez, A., Laio, A.: Clustering by fast search and find of density peaks. Science 344(6191), 1492–1496 (2014)

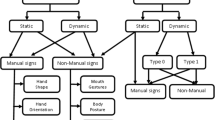

Elakkiya, R., Selvamani, K.: Extricating manual and non-manual features for subunit level medical sign modelling in automatic sign language classification and recognition. J. Med. Syst. 41(11), 1–13 (2017)

De Coster, M., Van Herreweghe, M., Dambre, J.: Sign language recognition with transformer networks. In: European Language Resources Association (ELRA), pp. 6018–6024 (2020)

Huang, S., Mao, C., Tao, J., Ye, Z.: A novel Chinese sign language recognition method based on keyframe-centered clips. IEEE Signal Process. Lett. 25(3), 442–446 (2018)

Pan, W., Zhang, X., Ye, Z.: Attention-based sign language recognition network utilizing keyframe sampling and skeletal features. IEEE Access 8, 215592–215602 (2020)

Albanie, S., et al.: Bsl-1k: Scaling Up Co-articulated Sign Language Recognition Using Mouthing Cues, pp. 35–53. Springer, Berlin (2020)

Berndt, D.J., Clifford, J.: Using Dynamic Time Warping to Find Patterns in Time Series, vol. 10, pp. 359–370. Springer, Seattle (1994)

Cuturi, M., Blondel, M.: Soft-dtw: a differentiable loss function for time-series. arXiv preprint arXiv:1703.01541 (2017)

Petitjean, F., Ketterlin, A., Gançarski, P.: A global averaging method for dynamic time warping, with applications to clustering. Pattern Recogn. 44(3), 678–693 (2011)

Zhou, F., Torre, F.: Canonical time warping for alignment of human behavior. Adv. Neural. Inf. Process. Syst. 22, 2286–2294 (2009)

Trigeorgis, G., Nicolaou, M.A., Zafeiriou, S., Schuller, B.W.: Deep canonical time warping. Science 2, 5110–5118 (2016)

Chang, C.-Y., Huang, D.-A., Sui, Y., Fei-Fei, L., Niebles, J.C.: D3tw: discriminative differentiable dynamic time warping for weakly supervised action alignment and segmentation. Science 6, 3546–3555 (2019)

Korbar, B., Tran, D., Torresani, L.: Scsampler: sampling salient clips from video for efficient action recognition. Science 6, 6232–6242 (2019)

Lohit, S., Wang, Q., Turaga, P.K.: Temporal transformer networks: Joint learning of invariant and discriminative time warping. CoRR abs/1906.05947. http://arxiv.org/abs/1906.05947 (2019)

Oh, J., Wang, J. , Wiens, J.: Learning to exploit invariances in clinical time-series data using sequence transformer networks. arXiv preprint arXiv:1808.06725 (2018)

Starner, T., Pentland, A.: Real-Time American Sign Language Recognition from Video Using Hidden Markov Models, pp. 227–243. Springer, Berlin (1997)

Özdemir, O., Camgöz, N.C., Akarun, L.: Isolated sign language recognition using improved dense trajectories. In: IEEE, pp. 1961–1964 (2016)

Camgöz, N.C. et al.: Bosphorussign: a Turkish sign language recognition corpus in health and finance domains (2016)

Ding, L., Martinez, A.M.: Modelling and recognition of the linguistic components in American sign language. Image Vis. Comput. 27(12), 1826–1844 (2009)

Theodorakis, S., Pitsikalis, V., Maragos, P.: Dynamic-static unsupervised sequentiality, statistical subunits and lexicon for sign language recognition. Image Vis. Comput. 32(8), 533–549 (2014)

Ong, E.-J., Koller, O., Pugeault, N., Bowden, R.: Sign spotting using hierarchical sequential patterns with temporal intervals, pp. 1923–1930 (2014)

Belgacem, S., Chatelain, C., Paquet, T.: Gesture sequence recognition with one shot learned crf/hmm hybrid model. Image Vis. Comput. 61, 12–21 (2017)

Rastgoo, R., Kiani, K., Escalera, S.: Sign language recognition: a deep survey. Expert Syst. Appl. 164, 113794 (2021)

Vaezi Joze, H.R., Koller, O.: MS-ASL: a large-scale data set and benchmark for understanding American Sign Language (2018)

Li, D., Rodriguez, C., Yu, X., Li, H.: Word-level deep sign language recognition from video: a new large-scale dataset and methods comparison, pp. 1459–1469 (2020)

Chai, X., Wang, H., Chen, X.: The devisign large vocabulary of chinese sign language database and baseline evaluations. Technical report VIPL-TR-14-SLR-001. Key Lab of Intelligent Information Processing of Chinese Academy of Sciences (CAS), Institute of Computing Technology, CAS (2014)

Neidle, C., Thangali, A., Sclaroff, S.: Challenges in development of the American sign language lexicon video dataset (asllvd) corpus (Citeseer, 2012)

Albanie, S. et al.: BSL-1K: scaling up co-articulated sign language recognition using mouthing cues (2020)

He, J., Liu, Z., Zhang, J.: Chinese sign language recognition based on trajectory and hand shape features. In: IEEE, pp. 1–4 (2016)

Özdemir, O., Kındıroğlu, A.A., Camgöz, N.C., Akarun, L.: Bosphorussign22k sign language recognition dataset. arXiv preprint arXiv:2004.01283 (2020)

Forster, J., Schmidt, C., Koller, O., Bellgardt, M., Ney, H.: Extensions of the sign language recognition and translation corpus rwth-phoenix-weather, pp. 1911–1916 (2014)

Zhang, J., Zhou, W., Xie, C., Pu, J., Li, H.: Chinese sign language recognition with adaptive hmm. In: IEEE, pp. 1–6 (2016)

Pu, J., Zhou, W., Li, H.: Iterative alignment network for continuous sign language recognition, pp. 4165–4174 (2019)

Donahue, J. et al.: Long-term recurrent convolutional networks for visual recognition and description, pp. 2625–2634 (2015)

Tran, D., Bourdev, L., Fergus, R., Torresani, L., Paluri, M.: Learning spatiotemporal features with 3d convolutional networks. In: IEEE, pp. 4489–4497 (2015)

Koller, O., Camgoz, N.C., Ney, H., Bowden, R.: Weakly supervised learning with multi-stream cnn-lstm-hmms to discover sequential parallelism in sign language videos. IEEE Trans. Pattern Anal. Mach. Intell. 6, 788 (2019)

Cao, Z., Hidalgo, G., Simon, T., Wei, S.-E., Sheikh, Y.: Openpose: realtime multi-person 2d pose estimation using part affinity fields. arXiv preprint arXiv:1812.08008 (2018)

Yan, S., Xiong, Y., Lin, D.: Spatial temporal graph convolutional networks for skeleton-based action recognition. arXiv preprint arXiv:1801.07455 (2018)

Cheng, K., Zhang, Y., Cao, C., Shi, L., Cheng, J.: Decoupling GCN with Dropgraph Module for Skeleton-based Action Recognition. Springer, Berlin (2021)

Zhu, W. et al.: Co-occurrence feature learning for skeleton based action recognition using regularized deep lstm networks. arXiv preprint arXiv:1603.07772 (2016)

Joze, H.R.V., & Koller, O.: Ms-asl: a large-scale data set and benchmark for understanding american sign language. arXiv preprint arXiv:1812.01053 (2018)

Asghari-Esfeden, S., Sznaier, M., Camps, O.: Dynamic motion representation for human action recognition, pp. 557–566 (2020)

Simonyan, K., Zisserman, A.: Two-Stream Convolutional Networks for Action Recognition in Videos, pp. 568–576. MIT Press, London (2014)

Sincan, O.M., Keles, H.Y.: Autsl: a large scale multi-modal Turkish sign language dataset and baseline methods. IEEE Access 8, 181340–181355 (2020)

Han, J., Shao, L., Xu, D., Shotton, J.: Enhanced computer vision with microsoft kinect sensor: a review. IEEE Trans. Cybern. 43(5), 1318–1334 (2013)

Paszke, A., et al.: Pytorch: an imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 32, 8024–8035 (2019)

Loshchilov, I., Hutter, F.: Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101 (2017)

Alp Kindiroglu, A., Ozdemir, O., Akarun, L.: Temporal accumulative features for sign language recognition (2019)

Gökçe, Ç., Özdemir, O., Kındıroğlu, A.A., Akarun, L.: Score-level multi cue fusion for sign language recognition, pp. 294–309, Springer (2020)

Moryossef, A. et al.: Evaluating the immediate applicability of pose estimation for sign language recognition, pp. 3434–3440 (2021)

Acknowledgements

This work was funded by the Turkish Ministry of Development under the TAM Project #2007K120610 and by TUBITAK Project #117E059. The numerical calculations reported in this paper were also performed at TUBITAK ULAKBIM, HPAGCC-TRUBA.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kındıroglu, A.A., Özdemir, O. & Akarun, L. Aligning accumulative representations for sign language recognition. Machine Vision and Applications 34, 12 (2023). https://doi.org/10.1007/s00138-022-01367-x

DOI: https://doi.org/10.1007/s00138-022-01367-x