Abstract

The linear regression model is widely applied to measure the relationship between a dependent variable and a set of independent variables. When the independent variables are related to each other, the model is said to present collinearity. If the relationship is between the intercept and at least one of the independent variables, the collinearity is nonessential, while if the relationship is between the independent variables (excluding the intercept), the collinearity is essential. The Stewart index allows the detection of both types of near multicollinearity. However, to the best of our knowledge, there are no established thresholds for this measure above which the multicollinearity should be considered worrying. Establishing such a threshold is the main goal of this paper, which presents a Monte Carlo simulation to relate this measure to the condition number. An additional goal is to extend the Stewart index so that it can be applied after estimation by ridge regression, which is widely used as an alternative to ordinary least squares (OLS) to estimate models with multicollinearity. This extension could also be applied to determine an appropriate value for the ridge factor.

Notes

A variable is standardized by subtracting its mean and dividing by the square root of n times its variance.
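The standardization described in this note can be sketched numerically as follows. This is a minimal illustration, assuming that the variance is the population variance (divisor n); the function name `standardize` and the sample data are hypothetical and not taken from the paper.

```python
import numpy as np

def standardize(x):
    """Subtract the mean and divide by sqrt(n * variance).

    Assumption: 'variance' is the population variance (divisor n),
    so n * variance equals the sum of squared deviations.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    centered = x - x.mean()
    return centered / np.sqrt(n * x.var())  # np.var uses ddof=0 by default

# Illustrative data only.
x = np.array([2.0, 4.0, 4.0, 5.0, 7.0])
z = standardize(x)
```

Under the stated assumption, the transformed variable has zero mean and unit Euclidean length, so the cross-product matrix of variables standardized in this way is their correlation matrix.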

To determine whether there is nonessential collinearity from the CV, it is necessary to check the thresholds provided by Salmerón et al. (2020c): \(CV (\mathbf {x}_{i}) < 0.1002506\), \(CV (\mathbf {x}_{i}) < 0.06674082\). In addition, VIF values higher than 10 should be obtained to verify that the collinearity is essential.

This will be the default value used throughout the work.

References

Belsley DA, Kuh E, Welsch RE (2005) Regression diagnostics: Identifying influential data and sources of collinearity, vol 571. John Wiley & Sons, London

García J, Salmerón R, García C, López Martín MdM (2016) Standardization of variables and collinearity diagnostic in ridge regression. International Statistical Review 84(2):245–266

Gorman J, Toman R (1970) Selection of variables for fitting equations to data. Technometrics 8:27–51

Hoerl A, Kennard R (1970a) Ridge regression: applications to nonorthogonal problems. Technometrics 12(1):69–82

Hoerl A, Kennard R (1970b) Ridge regression: biased estimation for nonorthogonal problems. Technometrics 12(1):55–67

Klein LR, Goldberger AS (1955) An economic model of the United States 1929–1952. North-Holland Publishing Company, Amsterdam

Marquardt DW (1970) Generalized inverses, ridge regression, biased linear estimation, and nonlinear estimation. Technometrics 12(3):591–612

Marquardt DW, Snee RD (1975) Ridge regression in practice. The American Statistician 29(1):3–20

McDonald GC (2010) Tracing ridge regression coefficients. Wiley Interdisciplinary Reviews: Computational Statistics 2(6):695–703

R Core Team (2019) R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria, https://www.R-project.org/

Sánchez AR, Gómez RS, García C (2019) The coefficient of determination in the ridge regression. Communications in Statistics - Simulation and Computation. https://doi.org/10.1080/03610918.2019.1649421

Salmerón R, García C, García J (2018a) Variance inflation factor and condition number in multiple linear regression. Journal of Statistical Computation and Simulation 88(12):2365–2384

Salmerón R, García J, García C, del Mar López M (2018b) Transformation of variables and the condition number in ridge estimation. Computational Statistics 33(3):1497–1524

Salmerón R, García C, García J (2020) Comment on “A note on collinearity diagnostics and centering” by Velilla (2018). The American Statistician 74(1):68–71

Salmerón R, García C, García J (2020a) Detection of near-multicollinearity through centered and noncentered regression. Mathematics 8(6):931–948

Salmerón R, García C, García J (2020b) A guide to using the R package “multicoll” for detecting multicollinearity. Computational Economics. https://doi.org/10.1007/s10614-019-09967-y

Salmerón R, Rodríguez A, García C (2020c) Diagnosis and quantification of the non-essential collinearity. Computational Statistics 35:647–666

Silva T, Ribeiro A (2018) A new accelerated algorithm for ill-conditioned ridge regression problems. Computational and Applied Mathematics 37:1941–1958

Stewart GW (1987) Collinearity and least squares regression. Statistical Science 2(1):68–84

Main properties of \(k_{i}^{2} (\lambda )\)

In this section the main properties of \(k_{i}^{2} (\lambda )\) are demonstrated.

Proposition 1

\(k_{i}^{2} (\lambda )\) is continuous at zero, that is, \(k_{i}^{2} (0) = k_{i}^{2}\).

Proof

This follows immediately from comparing expressions (7) and (14). It also follows from expression (16). \(\square \)

Proposition 2

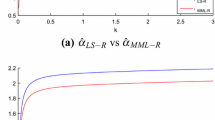

\(k_{i}^{2} (\lambda )\) decreases as a function of \(\lambda \).

Proof

To study the monotonicity, the decomposition used by McDonald (2010) is considered, where \(\mathbf {X}_{-i}^{t}\mathbf {X}_{-i} + \lambda \mathbf {I}_{p-1} = \varvec{\varGamma } \mathbf {D}_{\mu + \lambda } \varvec{\varGamma }^{t}\) with \(\mathbf {D}_{\mu + \lambda } = diag ((\mu _{1} + \lambda ), \dots , (\mu _{p-1} + \lambda ))\), \(\varvec{\varGamma }\) is the \((p-1) \times (p-1)\) orthogonal matrix of eigenvectors of the \((p-1) \times (p-1)\) matrix \(\mathbf {X}_{-i}^{t}\mathbf {X}_{-i}\), and \(\mu _{j}\), \(j = 1, \dots , p-1\), are the eigenvalues of this matrix. Taking into account that \(\varvec{\gamma } = \mathbf {X}_{-i}^{t}\mathbf {x}_{i}\) and \(\varvec{\alpha } =\varvec{\gamma }^{t}\varvec{\varGamma }\):

From this expression, it is verified that \(k_{i}^{2} (\lambda )\) decreases as a function of \(\lambda \) because \(\lambda \ge 0\) and \(\mu _{j} > 0\), and it is verified that:

\(\square \)
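The two displayed expressions referenced in the proof above are not reproduced in this copy. As a hedged reconstruction, suppose that expression (14) has the augmented-model form \(k_{i}^{2}(\lambda ) = (\mathbf {x}_{i}^{t}\mathbf {x}_{i} + \lambda ) / (\mathbf {x}_{i}^{t}\mathbf {x}_{i} + \lambda - \mathbf {x}_{i}^{t}\mathbf {X}_{-i}(\mathbf {X}_{-i}^{t}\mathbf {X}_{-i} + \lambda \mathbf {I}_{p-1})^{-1}\mathbf {X}_{-i}^{t}\mathbf {x}_{i})\); this form is an assumption and is not confirmed by the text available here. Substituting the spectral decomposition, and writing \(\alpha _{j}\) for the j-th component of \(\varvec{\alpha }\), the expression and its derivative would then plausibly read:

```latex
\[
k_{i}^{2}(\lambda) =
\frac{\mathbf{x}_{i}^{t}\mathbf{x}_{i} + \lambda}
     {\mathbf{x}_{i}^{t}\mathbf{x}_{i} + \lambda
      - \sum_{j=1}^{p-1} \frac{\alpha_{j}^{2}}{\mu_{j} + \lambda}},
\]
\[
\frac{\partial k_{i}^{2}(\lambda)}{\partial \lambda} =
- \frac{\sum_{j=1}^{p-1} \alpha_{j}^{2}\,
        \frac{\mathbf{x}_{i}^{t}\mathbf{x}_{i} + \mu_{j} + 2\lambda}
             {(\mu_{j} + \lambda)^{2}}}
       {\left( \mathbf{x}_{i}^{t}\mathbf{x}_{i} + \lambda
        - \sum_{j=1}^{p-1} \frac{\alpha_{j}^{2}}{\mu_{j} + \lambda} \right)^{2}}
\le 0 .
\]
```

Under this assumed form, every term in the numerator of the derivative is nonnegative when \(\lambda \ge 0\) and \(\mu _{j} > 0\), which is the sign argument the proof relies on. The same form tends to 1 as \(\lambda \rightarrow +\infty \).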

Proposition 3

\(k_{i}^{2} (\lambda ) \ge 1\), \(\forall \lambda \).

Proof

Suppose that:

Because the last condition is not possible since \(\lambda \ge 0\) and \(\mu _{j} > 0\), it can be concluded that the first supposition is not true, and consequently, \(k_{i}^{2} (\lambda ) \ge 1\), \(\forall \lambda .\) In addition, from expression (15), it is evident that \(\lim \nolimits _{\lambda \rightarrow +\infty } k_{i}^{2}(\lambda ) = 1\). \(\square \)
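Propositions 1 to 3 can be checked numerically on simulated data. The sketch below is only an illustration under stated assumptions: it takes \(k_{i}^{2}\) to be the squared norm of \(\mathbf {x}_{i}\) divided by the residual sum of squares of its auxiliary regression, and computes \(k_{i}^{2}(\lambda )\) as the same ratio on the augmented matrix. The function names `stewart_ols` and `stewart_ridge` and the simulated design are hypothetical and not taken from the paper.

```python
import numpy as np

def _norm_over_ssr(M, i):
    """Squared norm of column i over the residual sum of squares of
    its least-squares regression on the remaining columns of M."""
    x_i = M[:, i]
    rest = np.delete(M, i, axis=1)
    coef, *_ = np.linalg.lstsq(rest, x_i, rcond=None)
    resid = x_i - rest @ coef
    return (x_i @ x_i) / (resid @ resid)

def stewart_ols(X, i):
    """Assumed form of the Stewart index k_i^2 for column i of X."""
    return _norm_over_ssr(X, i)

def stewart_ridge(X, i, lam):
    """Assumed form of k_i^2(lam): the same ratio computed on the
    augmented matrix obtained by stacking sqrt(lam) * I_p under X."""
    p = X.shape[1]
    X_aug = np.vstack([X, np.sqrt(lam) * np.eye(p)])
    return _norm_over_ssr(X_aug, i)

# Simulated design (illustrative only): intercept plus two nearly
# collinear regressors with a mean far from zero.
rng = np.random.default_rng(0)
n = 50
z = rng.normal(size=n)
X = np.column_stack([np.ones(n), z + 10.0, z + 10.0 + 0.1 * rng.normal(size=n)])

lams = [0.0, 0.01, 0.1, 1.0, 10.0, 1e6]
values = [stewart_ridge(X, 1, lam) for lam in lams]

for lam, k2 in zip(lams, values):
    print(f"lambda = {lam:g}: k^2 = {k2:.6f}")
```

If the assumed forms match the paper's definitions, the printed values should start at the OLS index for \(\lambda = 0\), decrease as \(\lambda \) grows, never fall below 1, and approach 1 for very large \(\lambda \).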

Proposition 4

\(k_{i}^{2}(\lambda )\) is related to \(k_{i}^{2}\).

Proof

Starting from expression (14) and taking into account that the augmented model (4) is given as \(\widetilde{\mathbf {x}}_{i} = \widetilde{\mathbf {X}}_{-i} \varvec{\delta }+\mathbf {v}\), where \(\widetilde{\mathbf {X}} = \left( \begin{array}{c} \mathbf {X}\\ \sqrt{\lambda }\mathbf {I}_{p} \end{array}\right) \), it is obtained that:

and consequently:

Then, adding and subtracting the same quantity \(\mathbf {x}_{i}^{t}\mathbf {X}_{-i}(\mathbf {X}_{-i}^{t}\mathbf {X}_{-i})^{-1}\mathbf {X}_{-i}^{t}\mathbf {x}_{i}\), we obtain:

where \(SSR_{i}\) denotes the residual sum of squares of the auxiliary regression (8).

Then, because \(\mathbf {x}_{i}^{t} \mathbf {x}_{i} = SST_{i} + n \overline{\mathbf {x}}_{i}^{2}\), where \(SST_{i}\) denotes the total sum of squares of the auxiliary regression (8), we obtain:

\(\square \)
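The displayed steps of the proof above are not reproduced in this copy. The following is a hedged sketch of how the add-and-subtract argument could proceed, assuming that expression (7) is \(k_{i}^{2} = \mathbf {x}_{i}^{t}\mathbf {x}_{i} / SSR_{i}\) and that expression (14) is the same ratio for the augmented model. Both forms, and the shorthand \(\varDelta _{i}(\lambda )\), are assumptions introduced here for illustration and may differ from the paper's expression (16).

```latex
\[
k_{i}^{2}(\lambda) =
\frac{\mathbf{x}_{i}^{t}\mathbf{x}_{i} + \lambda}
     {\mathbf{x}_{i}^{t}\mathbf{x}_{i} + \lambda
      - \mathbf{x}_{i}^{t}\mathbf{X}_{-i}
        (\mathbf{X}_{-i}^{t}\mathbf{X}_{-i} + \lambda \mathbf{I}_{p-1})^{-1}
        \mathbf{X}_{-i}^{t}\mathbf{x}_{i}}
= \frac{\mathbf{x}_{i}^{t}\mathbf{x}_{i} + \lambda}
       {SSR_{i} + \lambda + \varDelta_{i}(\lambda)},
\]
\[
\varDelta_{i}(\lambda) =
\mathbf{x}_{i}^{t}\mathbf{X}_{-i}
\left[ (\mathbf{X}_{-i}^{t}\mathbf{X}_{-i})^{-1}
     - (\mathbf{X}_{-i}^{t}\mathbf{X}_{-i} + \lambda \mathbf{I}_{p-1})^{-1} \right]
\mathbf{X}_{-i}^{t}\mathbf{x}_{i}
= \sum_{j=1}^{p-1} \frac{\lambda\, \alpha_{j}^{2}}{\mu_{j}(\mu_{j} + \lambda)},
\]
\[
k_{i}^{2}(\lambda) =
\frac{SST_{i} + n \overline{\mathbf{x}}_{i}^{2} + \lambda}
     {SSR_{i} + \lambda + \varDelta_{i}(\lambda)}
= \frac{k_{i}^{2}\, SSR_{i} + \lambda}
       {SSR_{i} + \lambda + \varDelta_{i}(\lambda)} .
\]
```

Since \(\varDelta _{i}(0) = 0\), this form reduces to \(k_{i}^{2}\) at \(\lambda = 0\), in line with Proposition 1.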

Proposition 5

For standardized data, \(k_{i}^{2}(\lambda )\) coincides with \(VIF(i,\lambda )\).

Proof

On the one hand, expression (14) can be expressed as:

and, on the other hand, starting from the augmented model (4), for \(\widetilde{\mathbf {x}}_{i} = \widetilde{\mathbf {X}}_{-i} \varvec{\delta }+\mathbf {v}\), the following explained and total sums of squares are obtained:

Then, due to:

it can be concluded that expressions (17) and (18) coincide if \(\overline{\widetilde{\mathbf {x}}}_{i} = 0\). Because \(\overline{\widetilde{\mathbf {x}}}_{i} = \frac{n \overline{\mathbf {x}}_{i} + \sqrt{\lambda }}{n+p}\), for this mean to be equal to zero it is necessary that \(\overline{\mathbf {x}}_{i} = 0 = \lambda \); that is, the ridge regression coincides with OLS.

Alternatively, the matrix \(\widetilde{\mathbf {X}}\) can be standardized, since in that case, all the implied variables have a mean equal to zero. This possibility is in agreement with the conclusion presented in García et al. (2016), where it was established that standardized data must be used for a correct application of the VIF in ridge regression. \(\square \)
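The role of the mean of the augmented column in this proof can be illustrated numerically. The sketch below checks the stated formula for that mean and shows that an uncentered ratio (squared norm over residual sum of squares) and a centered ratio (total sum of squares over residual sum of squares) differ by exactly \((n+p)\) times the squared mean divided by the residual sum of squares. The simulated data and variable names are hypothetical, and the centered ratio is used here only as a stand-in for a VIF-type quantity; it is not claimed to be the paper's definition of \(VIF(i,\lambda )\).

```python
import numpy as np

# Simulated design (illustrative only): intercept plus two regressors.
rng = np.random.default_rng(1)
n, p, lam, i = 30, 3, 0.5, 1
X = np.column_stack([np.ones(n), rng.normal(5.0, 1.0, size=(n, p - 1))])

# Augmented matrix: sqrt(lam) * I_p stacked under X.
X_aug = np.vstack([X, np.sqrt(lam) * np.eye(p)])
x_aug = X_aug[:, i]
X_rest = np.delete(X_aug, i, axis=1)

# Residual sum of squares of the augmented auxiliary regression.
coef, *_ = np.linalg.lstsq(X_rest, x_aug, rcond=None)
ssr = np.sum((x_aug - X_rest @ coef) ** 2)

# Mean of the augmented column versus the formula (n * mean + sqrt(lam)) / (n + p).
mean_aug = x_aug.mean()
mean_formula = (n * X[:, i].mean() + np.sqrt(lam)) / (n + p)

uncentered = (x_aug @ x_aug) / ssr                # squared norm / SSR
centered = np.sum((x_aug - mean_aug) ** 2) / ssr  # total SS / SSR (assumed VIF-type ratio)
gap = (n + p) * mean_aug ** 2 / ssr

print(mean_aug, mean_formula)
print(uncentered - centered, gap)
```

The two ratios agree only when the augmented column has zero mean, which is why the proof turns to standardizing \(\widetilde{\mathbf {X}}\).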

Cite this article

Sánchez, A.R., Gómez, R.S. & García, C.G. Obtaining a threshold for the Stewart index and its extension to ridge regression. Comput Stat 36, 1011–1029 (2021). https://doi.org/10.1007/s00180-020-01047-2

DOI: https://doi.org/10.1007/s00180-020-01047-2