Abstract

Mechanisms for Nash implementation in the literature are fragile in the sense that they fail if just one or two players do not follow their equilibrium strategy. A mechanism is outcome-robust if its equilibrium outcome is not affected by any deviating minority of players. Is Nash implementation possible with outcome-robust mechanisms? I first show that in the standard environment, it is impossible to Nash-implement any nonconstant social choice rule with outcome-robust mechanisms even if a small number of players are partially honest. If simple transfers are used and if at least one player is partially honest, however, any social choice rule is Nash implementable using an outcome-robust mechanism. The mechanism presented in this paper makes no assumptions about how transfers enter players’ preferences except that transfers are valuable. Moreover, it has no transfers in equilibrium, arbitrarily small off-equilibrium transfers, and no integer or modulo games.

Notes

Following Dutta and Sen (2012), a partially honest individual is one who prefers a truthful message to an untruthful one whenever the outcome of the mechanism resulting from a truthful message is at least as preferred by that player as the outcome resulting from an untruthful message.

Integer or modulo games are types of “tail-chasing” constructs that have been consistently criticized in the literature (see Jackson 1992, 2001 for a discussion). The typical integer game gives dictatorial power to the player who reports the highest integer, and so it provides a profitable deviation to any player for whom the outcome is not the most preferred. Modulo games are similar but their strategy spaces are bounded through the use of modular arithmetic. Such constructs often have no Nash equilibria or lead to mixed strategy equilibria that are both plausible and undesirable. Because they inherently incentivize deviations, they are often added to mechanisms to overcome the main challenge with full implementation: undesirable equilibria. They have been criticized in the literature for being unnatural and complex and also for being an inappropriate setting to apply the Nash equilibrium solution concept. In cases where an integer game has no Nash equilibria, for example, it is clear that Nash equilibrium is not an appropriate solution concept for predicting how players will behave in the game. The exercise of prediction may itself even be problematic in such a setting because agents do not always have a well-defined best-response correspondence (see Jackson 1992 for more detail). Even though the use of integer and modulo games is typically confined to sufficiency proofs showing that implementation is possible for a large class of problems, a concern is whether their use in such cases is necessary and whether the implementation results are consequently dependent on their use. Although their use continues, the undesirability of integer or modulo games resulted in a long tradition of them being deliberately avoided whenever possible (e.g., Abreu and Matsushima 1992; Sjöström 1994; Jackson et al. 1994; Dutta and Sen 2012; Ben-Porath and Lipman 2012, and Kartik et al. 2014).
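The "tail-chasing" property of the typical integer game can be illustrated with a minimal sketch. The function names and the tie-breaking rule (lowest index wins) are assumptions made for illustration only; the note does not specify them.

```python
from typing import List


def integer_game_winner(reports: List[int]) -> int:
    """Return the index of the player who reports the highest integer.

    Ties go to the lowest index (an illustrative assumption, not from the paper).
    """
    return max(range(len(reports)), key=lambda i: (reports[i], -i))


def overbid(reports: List[int], player: int) -> List[int]:
    """Profile after `player` deviates to one more than the current maximum."""
    deviated = list(reports)
    deviated[player] = max(reports) + 1
    return deviated


reports = [3, 7, 5]
winner = integer_game_winner(reports)
# Every other player can seize dictatorial power by overbidding, so no
# profile is stable unless the current winner's choice suits everyone.
for player in range(len(reports)):
    if player != winner:
        assert integer_game_winner(overbid(reports, player)) == player
```

Because such an overbid is always available, a player who dislikes the current outcome always has a profitable deviation, which is the sense in which these constructs inherently incentivize deviations.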

Dutta and Sen (2012) show that the integer game is not needed if preferences are restricted to those that satisfy a separability requirement and if all agents are partially honest.

To ensure that no minority of players wishes to deviate from equilibria, a mechanism should not contain possibilities in which a group can deviate and obtain a total net positive transfer to the members of the group. The mechanism in this paper satisfies this condition.

For instance, the mechanism used in Tumennasan (2013) functions as intended only if all players play a limit logit quantal response equilibrium, and, in a similar way to the mechanism in Maskin (1999) (see Sect. 1.2), a deviation by just one player can lead to the worst outcome for all players. Given that social choice rules in Tumennasan (2013) are assumed to choose no worst alternatives, a worst outcome for all players would be a clear failure of the mechanism.

Eliaz (2002) studies a similar notion of robustness termed “fault-tolerance.” In Eliaz (2002), fault-tolerance is not defined as a property of the mechanism. Rather, it is an alternative notion of implementation defined along with a particular solution concept (k-fault-tolerant-Nash-equilibrium). In fault-tolerant implementation some Nash implementation properties are lost. In particular, the crucial requirement that all Nash equilibria of the mechanism lead to desirable outcomes is no longer maintained. I use a different term, “outcome-robustness,” to decouple the robustness of the mechanism from the particular notion of implementation and the solution concept used. In Eliaz (2002), k-fault-tolerant implementation directly implies some robustness properties of the mechanism used, but this is not true with other solution concepts—for instance, a mechanism that Nash implements a social choice rule may or may not be outcome-robust. Another difference between the two notions is that fault-tolerance allows the mechanism outcome to change as long as it remains in the set of outcomes chosen by the social choice rule; thus, fault-tolerance is weaker than outcome-robustness (see the discussion of weak-outcome-robustness in Sect. 3).

An alternate, but equivalent, formulation could assume that players have knowledge of a “state of the world” that is unknown to the designer, and that preferences are completely determined by the state of the world. In this formulation the set of truthful messages would depend on the state of the world; typically, a player’s message would be considered truthful if it includes a report of the true state of the world and untruthful if it contains a state of the world different from the true one. The paper can be re-written in this formulation with no change in the results.

It must then be possible to deduce which candidate is the highest skilled from a given preference profile of the members. In this environment, a candidate \(c_{i}\) is more skilled than candidate \(c_{j}\) if and only if at least \(n-1\) members rank candidate \(c_{i}\) higher than candidate \(c_{j}\). If \(c_{i}\) is more skilled than candidate \(c_{j}\) then all members, except possibly for the member with the same background as \(c_{j}\), will rank \(c_{i}\) higher than \(c_{j}\). Similarly, if at least \(n-1\) members rank a candidate \(c_{i}\) higher than a candidate \(c_{j}\), it must be that \(c_{i}\) is more skilled than \(c_{j}\). Hence, if the preference profile were known, an SCF could not only deduce the highest-skilled candidate, but it could also rank the candidates according to skill.
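As a rough sketch of this deduction, the following applies the "at least \(n-1\) members" test to a hypothetical three-member profile. The profile, the candidate labels, and the function names are assumptions for illustration; each ranking lists candidates from most to least preferred.

```python
from typing import List


def more_skilled(profile: List[List[str]], ci: str, cj: str) -> bool:
    """True if at least n-1 members rank ci above cj."""
    n = len(profile)
    votes = sum(1 for ranking in profile if ranking.index(ci) < ranking.index(cj))
    return votes >= n - 1


def skill_ranking(profile: List[List[str]]) -> List[str]:
    """Order candidates by how many rivals they beat under the n-1 test."""
    candidates = profile[0]
    wins = {
        c: sum(more_skilled(profile, c, d) for d in candidates if d != c)
        for c in candidates
    }
    return sorted(candidates, key=lambda c: -wins[c])


# Hypothetical profile: member k shares a background with candidate ck and
# ranks that candidate first; otherwise members rank by skill.
profile = [
    ["c1", "c2", "c3"],  # member 1
    ["c2", "c1", "c3"],  # member 2 favours c2
    ["c3", "c1", "c2"],  # member 3 favours c3
]
print(skill_ranking(profile))  # ['c1', 'c2', 'c3']
```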

For each player i, the set of truthful messages is determined by the mapping \(T_i\) that selects a subset of each player’s message space to be truthful. This allows, for instance, that a player’s message space include no truthful messages: a player i may be instructed to only choose between two messages (say, \(M_i = \{G_1,G_2\}\)) that determine which game is played by other players; player i’s message space in this case is allowed to have no intrinsic truth-value by defining \(T_i(\cdot )\) to always be empty. In addition, the mapping \(T_i\) can easily be extended to environments with more complex states of the world so that the truthfulness of players’ messages may depend on the preferences of subsets of players (such as a player’s own preference ordering) or on other environment-dependent states of the world.

In practice, the mapping \(c^*\) can be very simple to construct. For instance, if the outcomes in A can be enumerated, \(A=\{a_1,a_2,\ldots \}\), then \(c^*\) can simply pick the outcome with the smallest index in any subset of A. More generally, as long as the set of outcomes A is well-ordered (a set A is well-ordered if an ordering of A exists such that every subset of A contains a least element) then \(c^*\) can simply be the mapping selecting the least element in every subset of A. Any finite or countably infinite set can be well-ordered.
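A minimal sketch of such a selection rule for an enumerated outcome set follows. The function name `c_star` and the example outcomes are hypothetical, and the subset is assumed to be nonempty.

```python
from typing import Iterable, Sequence


def c_star(subset: Iterable[str], enumeration: Sequence[str]) -> str:
    """Pick the element of a nonempty `subset` with the smallest index in `enumeration`."""
    position = {outcome: k for k, outcome in enumerate(enumeration)}
    return min(subset, key=position.__getitem__)


A = ["a1", "a2", "a3", "a4"]      # an enumeration of the outcome set
print(c_star({"a3", "a2"}, A))     # a2
print(c_star({"a4"}, A))           # a4
```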

Player j, by reporting the same preference profile as player i but the same outcome as the rest of the players, identifies player i as the player who receives the negative transfer under case (b) of the transfers rule and also identifies the outcome and preference profile treated as the majority report.

For instance, if R is the true preference profile and outcome a is the top preferred outcome for all players at R, a message in which \(n-1\) players report (R, a, 1) and one player who is not partially honest reports \((R',a,1)\) is an equilibrium. At this message profile the outcome is a, which is the highest preferred outcome for all players so no player has an outcome-based incentive to deviate, and all partially honest players report the true preference profile so they have no honesty-based incentive to deviate. However, any player reporting (R, a, 1) can deviate to the integer game and obtain any outcome other than a, showing that the mechanism is not outcome-robust.

For example, suppose \(a^*\) is the socially desirable outcome and let preferences be as follows: the highest-preferred outcome for player 1 is \(a^*\) and for players 2 and 3 is b. Consider a message profile in which player 1 reports \(a^*\) for all \(K+1\) turns and players 2 and 3 report b for all K turns. Note that the outcome from this message profile is b with probability 1, and both players 2 and 3 receive a transfer of \(-\varepsilon \). If we have no knowledge of players’ utility functions, there is no guarantee that this message profile would not be an “undesirable” equilibrium in which outcome b is selected with probability 1. To see this consider whether any player has a profitable deviation. Player 1, who is partially honest, reports the truth and so has no honesty-based incentive to deviate, and the lottery selected by the mechanism is independent of player 1’s message in this case (because a majority agrees on outcome b regardless of how player 1 behaves) so there is no outcome-based incentive to deviate. In addition, player 1 cannot improve his/her transfer because player 1’s transfer is always zero. Consider next player \(i\in \{2,3\}\). The proof of the main theorem in Matsushima (2008) assumes that player i in this case has an incentive to deviate to reporting \(a^*\) at turn K. If player i deviates to reporting \(a^*\) at turn K he/she receives a more preferred transfer of 0, but the probability of b being selected is now \((K-1)/K\). Let \(u_i(a,t)\) denote player i’s utility from outcome a and transfer t when the socially desirable outcome is \(a^*\), where the preference for honesty is implicit in the utility function. The utility from reporting \(a^*\) at turn K is \((1/K)u_i(a^*,0) + ((K-1)/K)u_i(b,0)\). The utility from reporting b at turn K is \(u_i(b,-\varepsilon )\). To ensure that player i prefers to deviate it must be true that \(K > (u_i(b,0)-u_i(a^*,0))/(u_i(b,0)-u_i(b,-\varepsilon ))\). 
If this condition is not satisfied it is impossible to guarantee the outcome selected by the mechanism or even the uniqueness of the equilibrium. Thus, setting K sufficiently large requires knowledge of players’ utility functions.
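The dependence of K on utilities can be made concrete with a small numerical sketch. The utility values below are hypothetical and chosen only to show that a smaller fine requires a larger K; the function names are likewise assumptions.

```python
import math


def min_turns(u_b_0: float, u_astar_0: float, u_b_fine: float) -> int:
    """Smallest integer K with K > (u(b,0) - u(a*,0)) / (u(b,0) - u(b,-eps))."""
    gain_from_avoiding_fine = u_b_0 - u_b_fine
    if gain_from_avoiding_fine <= 0:
        raise ValueError("transfers must be valuable: u(b,0) > u(b,-eps)")
    threshold = (u_b_0 - u_astar_0) / gain_from_avoiding_fine
    return max(1, math.floor(threshold) + 1)


def prefers_to_deviate(K: int, u_b_0: float, u_astar_0: float, u_b_fine: float) -> bool:
    """Compare reporting a* at turn K (lottery, no fine) with reporting b (b for sure, fined)."""
    deviate = (1 / K) * u_astar_0 + ((K - 1) / K) * u_b_0
    return deviate > u_b_fine


# Hypothetical utilities: u(b,0) = 10, u(a*,0) = 0, and two possible fine sizes.
print(min_turns(10.0, 0.0, 9.5))    # 21
print(min_turns(10.0, 0.0, 9.75))   # 41
```

In this illustration, halving the utility cost of the fine roughly doubles the number of turns needed, so no fixed K works for every utility function.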

Player 1, who is assumed to be partially honest, can deviate and impose a fine on all other players because player 1’s report at turn \(K+1\) is taken to be the truth.

Because this section presents only impossibility results, there is no need to explicitly introduce a separate and stronger definition of weak-outcome-robustness to constrain how private outcomes are allowed to change off-equilibrium. However, such a definition may be desired if one wishes to design a weak-outcome-robust mechanism in a private outcomes environment in which weak-outcome-robustness is possible.

The set \(B_2\backslash \{j\}\) is of size \(n/2-1<n/2\) and \((m_{B_1},m'_j,m'_{B_2\backslash \{j\}})=(m_{B_1},m'_{B_2})\) is a minority-group deviation from \((m'_j,m_{-j})\) in which players in \(B_2\backslash \{j\}\) switch from following message profile m to following \(m'\).

References

Abraham I, Dolev D, Gonen R, Halpern J (2006) Distributed computing meets game theory: robust mechanisms for rational secret sharing and multiparty computation. In: Proceedings of the 25th annual ACM symposium on principles of distributed computing. ACM, New York, pp 53–62

Abreu D, Matsushima H (1992) Virtual implementation in iteratively undominated strategies: complete information. Econometrica 60:993–1008

Attiyeh G, Franciosi R, Isaac RM (2000) Experiments with the pivot process for providing public goods. Public Choice 102:93–112

Aumann RJ (1960) Acceptable points in games of perfect information. Pac J Math 10:381–417

Ben-Porath E, Lipman BL (2012) Implementation with partial provability. J Econ Theory 147:1689–1724

Bernheim BD, Peleg B, Whinston MD (1987) Coalition-proof Nash equilibria I. Concepts. J Econ Theory 42:1–12

Cabrales A, Charness G, Corchón LC (2003) An experiment on Nash implementation. J Econ Behav Organ 51:161–193

Chakravorti B (1991) Strategy space reduction for feasible implementation of Walrasian performance. Soc Choice Welf 8:235–245

Duggan J (2003) Nash implementation with a private good. Econ Theory 21:117–131

Dutta B, Sen A (2012) Nash implementation with partially honest individuals. Games Econ Behav 74:154–169

Dutta B, Sen A, Vohra R (1995) Nash implementation through elementary mechanisms in economic environments. Econ Des 1:173–203

Eliaz K (2002) Fault tolerant implementation. Rev Econ Stud 69:589–610

Halpern JY (2008) Beyond Nash equilibrium: solution concepts for the 21st century. In: Proceedings of the 27th ACM symposium on principles of distributed computing. ACM, New York, pp 1–10

Jackson MO (1992) Implementation in undominated strategies: a look at bounded mechanisms. Rev Econ Stud 59:757–775

Jackson MO (2001) A crash course in implementation theory. Soc Choice Welf 18:655–708

Jackson MO, Palfrey TR, Srivastava S (1994) Undominated Nash implementation in bounded mechanisms. Games Econ Behav 6:474–501

Kartik N, Tercieux O, Holden R (2014) Simple mechanisms and preferences for honesty. Games Econ Behav 83:284–290

Kawagoe T, Mori T (2001) Can the pivotal mechanism induce truth-telling? An experimental study. Public Choice 108:331–354

Maskin E (1999) Nash equilibrium and welfare optimality. Rev Econ Stud 66:23–38

Maskin E (2011) Commentary: Nash equilibrium and mechanism design. Games Econ Behav 71:9–11

Matsushima H (2008) Behavioral aspects of implementation theory. Econ Lett 100:161–164

Moore J, Repullo R (1988) Subgame perfect implementation. Econometrica 56:1191–1220

Ortner J (2015) Direct implementation with minimally honest individuals. Games Econ Behav 90:1–16

Reichelstein S, Reiter S (1988) Game forms with minimal message spaces. Econometrica 56:661–692

Renou L, Schlag KH (2011) Implementation in minimax regret equilibrium. Games Econ Behav 71:527–533

Saijo T (1988) Strategy space reduction in Maskin’s theorem: sufficient conditions for Nash implementation. Econometrica 56:693–700

Saijo T, Sjöström T, Yamato T (2007) Secure implementation. Theor Econ 2:203–229

Segal IR (2010) Nash implementation with little communication. Theor Econ 5:51–71

Sethi R (2007) Nash equilibrium. In: International Encyclopedia of the Social Sciences, 2nd edn. pp 540–542

Sjöström T (1994) Implementation in undominated Nash equilibria without integer games. Games Econ Behav 6:502–511

Tumennasan N (2013) To err is human: implementation in quantal response equilibria. Games Econ Behav 77:138–152

Additional information

This paper is based on the first chapter of my Ph.D. dissertation at the Department of Economics, University of Texas, 1 University Station, C3100, Austin, TX 78712, USA. That chapter is titled “Safety in Mechanism Design and Implementation Theory.” I am grateful to my advisers, Jason Abrevaya and Maxwell Stinchcombe, and my committee members, Marika Cabral, Laurent Mathevet, and Thomas Wiseman for their support, feedback, and encouragement. I thank those who have provided invaluable comments and feedback on this paper or previous versions of it: John Hatfield, Troy Kravitz, Justin Leroux, Jon Pogach, and Philipp Strack; seminar participants at UT-Austin and the FDIC Center for Financial Research; the attendees at the 2nd Texas Economics Theory Camp, 2013; and the participants at the Society of Social Choice and Welfare Conference, 2014.

Opinions expressed in this paper are those of the author and not necessarily those of the FDIC. This research was largely conducted while the author was affiliated with the University of Texas at Austin.

Appendix

One may conjecture from Theorems 2 and 4 that it is never possible to Nash implement a social choice rule using an outcome-robust mechanism without transfers. The following counterexample shows that such a conjecture would be false. There are some cases in which partial honesty can be relied on instead of transfers, but as Theorems 2 and 4 show, this is not true for the vast majority of interesting implementation problems.

Example 2

As in Example 1, let the set of outcomes be \(A=\{a,b,c\}\) and consider a social choice function given by \(F(R) = a\) and \(F(R')=b\), where there are three players with preference profiles R and \(R'\) given by:

| \(R_{1}\) | \(R_{2}\) | \(R_{3}\) | \(R'_{1}\) | \(R'_{2}\) | \(R'_{3}\) |
|---|---|---|---|---|---|
| a | b | c | a | b | c |
| b | a | a | b | a | b |
| c | c | b | c | c | a |

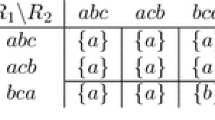

Suppose the designer is certain that players 1 and 2 are partially honest. Then the following outcome-robust mechanism Nash implements F: \(M_i=\{R,R'\}\) for all i, and, for any \(m\in M\), \(g(m)=a\) if at least two players report R and \(g(m)=b\) if at least two players report \(R'\). It is assumed in this case that \(T_i(R)=R\) and \(T_i(R')=R'\) for all i; that is, player i is reporting truthfully if he/she reports the accurate preference profile. This mechanism can be illustrated as follows (where player 1 picks the row, player 2 the column, and player 3 the matrix):

Player 3 reports \(R\):

| | \(R\) | \(R'\) |
|---|---|---|
| \(R\) | a | a |
| \(R'\) | a | b |

Player 3 reports \(R'\):

| | \(R\) | \(R'\) |
|---|---|---|
| \(R\) | a | b |
| \(R'\) | b | b |

Note that there are only two message profiles from which no minority of players (one player in this case) can change the outcome: \(m=(R,R,R)\) and \(m'=(R',R',R')\). The outcomes from those two profiles are \(g(m)=a\) and \(g(m')=b\). For the mechanism to be outcome-robust and Nash implement F, it must be the case that m is the only NE at R and \(m'\) is the only NE at \(R'\). It is clear that m is a NE at R and \(m'\) is a NE at \(R'\) because all players report the truth at both m and \(m'\) and no player can change the outcome by deviating. I will show next that no other NE exists under R or \(R'\) under the assumption that players 1 and 2 are partially honest.
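The claim that only the two unanimous profiles are immune to a single deviating player can be checked by brute force. The sketch below encodes the majority rule of Example 2; the function names are assumptions for illustration.

```python
from itertools import product

MESSAGES = ("R", "R'")


def g(m):
    """Majority rule of Example 2: a if at least two players report R, else b."""
    return "a" if m.count("R") >= 2 else "b"


def unilateral_deviations(m):
    """Yield (player index, deviated profile) for every single-player deviation."""
    for i in range(len(m)):
        for alt in MESSAGES:
            if alt != m[i]:
                yield i, m[:i] + (alt,) + m[i + 1:]


def outcome_is_robust(m):
    """True if no single player (a minority when n = 3) can change the outcome."""
    return all(g(d) == g(m) for _, d in unilateral_deviations(m))


robust = [m for m in product(MESSAGES, repeat=3) if outcome_is_robust(m)]
print(robust)  # [('R', 'R', 'R'), ("R'", "R'", "R'")]
```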

The following table lists all the possible message profiles in the mechanism. For each it lists one profitable deviation (of potentially several), if one exists, at R and \(R'\), along with the reasoning behind each listed deviation. Note that only \(m=(R,R,R)\) is an equilibrium at R (with no profitable deviation listed) and only \(m'=(R',R',R')\) is an equilibrium at \(R'\). Two message profiles have the reasoning in bold font; these two profiles are unique in that a profitable deviation exists only if one specific player is partially honest, and no player has an outcome- or honesty-based incentive to deviate otherwise. Thus, if outcome-robustness is required, this mechanism can only be used to Nash implement F if we are certain that players 1 and 2 are partially honest.

| Message | Outcome | Deviation at R | Reasoning | Deviation at \(R'\) | Reasoning |
|---|---|---|---|---|---|
| \((R,R,R')\) | a | \((R,R',R')\) | Player 2 prefers b | \((R,R',R')\) | Player 2 prefers b |
| \((R,R',R)\) | a | \((R,R,R)\) | **Player 2 is partially honest** | \((R,R',R')\) | Player 3 prefers b |
| \((R',R,R)\) | a | \((R',R',R)\) | Player 2 prefers b | \((R',R',R)\) | Player 2 prefers b |
| \((R',R',R)\) | b | \((R,R',R)\) | Player 1 prefers a | \((R,R',R)\) | Player 1 prefers a |
| \((R',R,R')\) | b | \((R,R,R')\) | Player 1 prefers a | \((R,R,R')\) | Player 1 prefers a |
| \((R,R',R')\) | b | \((R,R',R)\) | Player 3 prefers a | \((R',R',R')\) | **Player 1 is partially honest** |
| \((R',R',R')\) | b | \((R',R,R')\) | Player 2 is partially honest | | |
| \((R,R,R)\) | a | | | \((R',R,R)\) | Player 1 is partially honest |
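The deviation analysis can be reproduced with a short enumeration. The sketch assumes the Dutta and Sen (2012) notion of partial honesty: a partially honest player switches from an untruthful to a truthful message whenever the resulting outcome is at least as good for that player. All identifiers are hypothetical, and players are indexed from 0, so players 1 and 2 correspond to indices 0 and 1.

```python
from itertools import product

MESSAGES = ("R", "R'")
# Rankings from Example 2, best to worst, for players 1, 2, 3 in each state.
RANKINGS = {
    "R": [["a", "b", "c"], ["b", "a", "c"], ["c", "a", "b"]],
    "R'": [["a", "b", "c"], ["b", "a", "c"], ["c", "b", "a"]],
}


def g(m):
    """Majority rule: a if at least two players report R, else b."""
    return "a" if m.count("R") >= 2 else "b"


def profitable_deviation(m, state, honest):
    """Return (player, new profile, motive) for one profitable deviation, or None."""
    for i in range(3):
        rank = RANKINGS[state][i].index  # lower rank means more preferred
        for alt in MESSAGES:
            if alt == m[i]:
                continue
            d = m[:i] + (alt,) + m[i + 1:]
            if rank(g(d)) < rank(g(m)):
                return i, d, "outcome"
            # With two messages, alt == state means the current message is untruthful.
            if i in honest and alt == state and rank(g(d)) <= rank(g(m)):
                return i, d, "honesty"
    return None


def nash_equilibria(state, honest=frozenset({0, 1})):
    """All message profiles with no profitable unilateral deviation at `state`."""
    return [
        m for m in product(MESSAGES, repeat=3)
        if profitable_deviation(m, state, honest) is None
    ]


print(nash_equilibria("R"))    # [('R', 'R', 'R')]
print(nash_equilibria("R'"))   # [("R'", "R'", "R'")]
# If player 2 is not partially honest, (R, R', R) survives as an equilibrium at R.
print(nash_equilibria("R", honest={0}))  # [('R', 'R', 'R'), ('R', "R'", 'R')]
```

Under these assumptions, dropping the partial honesty of either player 1 or player 2 admits an extra equilibrium, which matches the two profiles singled out in the table.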

About this article

Cite this article

Shoukry, G.F.N. Outcome-robust mechanisms for Nash implementation. Soc Choice Welf 52, 497–526 (2019). https://doi.org/10.1007/s00355-018-1154-0

DOI: https://doi.org/10.1007/s00355-018-1154-0