Abstract

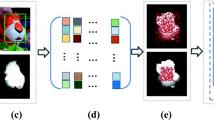

Obtaining accurate spatial information about moving objects is a crucial and difficult task in visual object tracking. In this study, a comprehensive spatial feature similarity (CSFS) strategy is proposed to compute the confidence level of target features. The strategy is used to determine the current position of the target among the candidates during tracking. Because it considers the spatial information and the appearance features of an object simultaneously, the CSFS strategy supports reliable decisions about the tracking position. Moreover, we propose an appearance-based object representation model, multi-independent features distribution fields (MIFDFs), which represents a target using spatial distribution fields built independently for multiple features. This representation preserves a large amount of the original spatial and feature data together. Experimental results show that the proposed method significantly reduces tracking drift in complex scenes. In particular, the proposed approach outperforms other techniques in tracking robustness and accuracy in some challenging situations.

Acknowledgments

This work was partially supported by the National Natural Science Foundation of China (Grant Nos. 61173107, 91320103), the National High Technology Research and Development Program (863) (Grant No. 2012AA01A301-01), the Research Foundation of Industry-Education-Research Cooperation among Guangdong Province, Ministry of Education and Ministry of Science & Technology, China (Grant No. 2011A091000027) and the Research Foundation of Industry-Education-Research Cooperation of Huizhou, Guangdong (Grant No. 2012C050012012).

Appendix

Section 4 showed that CSFS weakens the contribution of some pixels in the image patches concerned. The corresponding validation on pixels is also given in Fig. 5. As further evidence, the following tables list some specific pixel ratios to analyze the weakening effect.

In both Tables 5 and 6, the red values, computed using the PR relation and our method respectively, show no difference. They indicate that the pixel pairs differ very little in feature value within the participating patches, so these pixels are assigned to the same layer in the 3D models \(V\) and \(V'\). The green values indicate pixels that have been assigned to adjacent layers in the proposed 3D model; the tolerance on the difference in their feature values is defined by \(T_{nbins}\). The purple values change significantly between the given PR weight and the proposed weight because of the large differences within the pixel pairs. Similarly, pixel pairs whose feature values differ significantly should be assigned to distant layers, with 0 as their similarity value. In this study, however, we make their similarity very small (like the blue numbers in Tables 5 and 6) rather than 0, so that it suits the tracking process in Sect. 5. The colored values are representative of the black values, and the remaining values are not listed in the tables.
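The layer-based weighting described above can be illustrated with a minimal Python sketch. It is not the paper's implementation: the function names, the uniform binning of feature values, and the weights `adjacent` and `far` are hypothetical placeholders chosen only to show the three cases (same layer, adjacent layers, distant layers). The actual tolerance \(T_{nbins}\) and the weights are defined in the main text and are not reproduced here.

```python
def layer_index(value, nbins, max_value=256):
    """Map a feature value in [0, max_value) to one of nbins layers.

    Uniform binning is an assumption made for illustration only.
    """
    return min(int(value * nbins / max_value), nbins - 1)


def pair_similarity(a, b, nbins=16, adjacent=0.5, far=0.01):
    """Similarity of two pixel feature values based on their layer gap.

    The weights are hypothetical placeholders, not values from the paper:
      same layer      -> full similarity (1.0)
      adjacent layers -> reduced similarity (adjacent)
      distant layers  -> very small but non-zero similarity (far)
    """
    gap = abs(layer_index(a, nbins) - layer_index(b, nbins))
    if gap == 0:
        return 1.0
    if gap == 1:
        return adjacent
    return far


if __name__ == "__main__":
    print(pair_similarity(100, 101))  # same layer
    print(pair_similarity(100, 115))  # adjacent layers
    print(pair_similarity(10, 250))   # distant layers
```

Keeping the distant-layer weight small but non-zero mirrors the choice described in the text: such pixel pairs still contribute slightly instead of being discarded outright.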

Cite this article

Li, Z., Yu, X., Li, P. et al. Moving object tracking based on multi-independent features distribution fields with comprehensive spatial feature similarity. Vis Comput 31, 1633–1651 (2015). https://doi.org/10.1007/s00371-014-1044-0

DOI: https://doi.org/10.1007/s00371-014-1044-0