Abstract

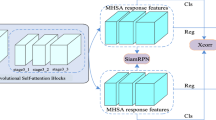

In unmanned aerial vehicle (UAV) object tracking, the target is often lost because of problems such as occlusion and fast motion. Building on the SiamRPN algorithm, this paper proposes a UAV object tracking algorithm that optimizes the network's spatial semantic information and channel feature information and strengthens the selection of bounding boxes. The traditional SiamRPN method does not consider long-range context information, and its calculation and selection of bounding boxes leave room for improvement. We therefore make three changes. (1) We design a convolutional attention module to enhance the weighting of feature spatial locations and feature channels. (2) We add a multi-spectral channel attention module to the search branch of the network to further address the network's long-range dependency problem and to better understand different UAV tracking scenes. (3) We use the distance intersection over union (DIoU) to predict the bounding box, so that an accurate predicted bounding box is regressed. Experimental results show that the algorithm is robust and accurate in many scenes.
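To make the distance intersection over union mentioned above concrete, the following is a minimal sketch of the standard DIoU definition (IoU minus the squared centre distance divided by the squared diagonal of the smallest enclosing box). It is not the authors' implementation: the `(x1, y1, x2, y2)` box layout and the names `iou`, `diou` and `diou_loss` are assumptions chosen for illustration, and the abstract does not say how the term is wired into the SiamRPN regression branch.

```python
from typing import Tuple

# Assumed box layout for this sketch: (x1, y1, x2, y2) with x1 < x2 and y1 < y2.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Plain intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def diou(a: Box, b: Box) -> float:
    """Distance-IoU: IoU minus the normalised squared centre distance."""
    # Squared distance between the two box centres.
    d2 = ((a[0] + a[2]) / 2 - (b[0] + b[2]) / 2) ** 2 \
        + ((a[1] + a[3]) / 2 - (b[1] + b[3]) / 2) ** 2
    # Squared diagonal of the smallest box that encloses both boxes.
    c2 = (max(a[2], b[2]) - min(a[0], b[0])) ** 2 \
        + (max(a[3], b[3]) - min(a[1], b[1])) ** 2
    penalty = d2 / c2 if c2 > 0 else 0.0
    return iou(a, b) - penalty


def diou_loss(pred: Box, target: Box) -> float:
    """Regression loss form: 0 for a perfect match, up to 2 for distant boxes."""
    return 1.0 - diou(pred, target)
```

Unlike plain IoU, which is 0 for every pair of non-overlapping boxes, the distance term still ranks a nearby miss above a distant one, which is why it can help when choosing among candidate bounding boxes.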


Funding

This research was supported by the Smart grid and intelligent control Nanchong city Key Laboratory Platform Construction (phase I), Grant/Award Number: 19SXHZ0011.

Ethics declarations

Conflicts of interest

The authors declare that they have no conflicts of interest.

About this article

Cite this article

Yang, S., Chen, H., Xu, F. et al. High-performance UAVs visual tracking based on siamese network. Vis Comput 38, 2107–2123 (2022). https://doi.org/10.1007/s00371-021-02271-7

DOI: https://doi.org/10.1007/s00371-021-02271-7