Abstract

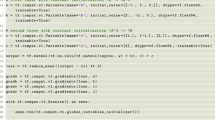

Recurrent neural networks have been used successfully for the analysis and prediction of temporal sequences. This paper is concerned with the convergence of a gradient-descent learning algorithm for training a fully recurrent neural network. In the literature, stochastic process theory has been used to establish probabilistic convergence results for the on-line gradient training algorithm, under the assumption that a very large number of training samples of the temporal sequences (in theory, infinitely many) are available. In this paper, we consider the case where only a limited number of training samples are available, so that a stochastic treatment of the problem is no longer appropriate. Instead, we use an off-line gradient training algorithm for the fully recurrent neural network and prove corresponding convergence results of a deterministic nature. The monotonicity of the error function over the iterations is also guaranteed. A numerical example is given to support the theoretical findings.
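To make the off-line (batch) setting concrete, the following is a minimal sketch rather than the algorithm analyzed in the paper, whose exact network model is not given in this abstract. It assumes a small fully recurrent network with tanh units, a squared error measured on unit 0, and a gradient computed by backpropagation through time; the names `forward`, `error_and_grad`, `train_offline`, and `make_samples`, the toy data, and all parameter values are hypothetical. The point it illustrates is that the gradient is accumulated over every training sequence before the weights are updated once, and that the error is recorded at each iteration so its behavior can be inspected.

```python
import numpy as np

def forward(W, U, xs):
    """Run the network over one input sequence xs (T x m); return states (T+1 x n)."""
    n = W.shape[0]
    hs = np.zeros((len(xs) + 1, n))              # assumed initial state h_0 = 0
    for t, x in enumerate(xs):
        hs[t + 1] = np.tanh(W @ hs[t] + U @ x)   # every unit feeds every unit
    return hs

def error_and_grad(W, U, samples):
    """Squared error summed over ALL samples, with its gradient (batch setting)."""
    n = W.shape[0]
    E = 0.0
    gW, gU = np.zeros_like(W), np.zeros_like(U)
    for xs, ds in samples:
        hs = forward(W, U, xs)
        delta_next = np.zeros(n)                 # dE/d(pre-activation) at step t+1
        for t in range(len(xs), 0, -1):          # backpropagation through time
            err = np.zeros(n)
            err[0] = hs[t, 0] - ds[t - 1]        # only unit 0 is compared to the target
            E += 0.5 * err[0] ** 2
            dh = err + W.T @ delta_next          # dE/dh_t
            delta = dh * (1.0 - hs[t] ** 2)      # tanh'(a) = 1 - tanh(a)^2
            gW += np.outer(delta, hs[t - 1])
            gU += np.outer(delta, xs[t - 1])
            delta_next = delta
    return E, gW, gU

def train_offline(samples, n=3, eta=0.01, iters=200, seed=0):
    """One weight update per pass over the whole (finite) training set."""
    rng = np.random.default_rng(seed)
    m = samples[0][0].shape[1]
    W = 0.1 * rng.standard_normal((n, n))
    U = 0.1 * rng.standard_normal((n, m))
    errors = []
    for _ in range(iters):
        E, gW, gU = error_and_grad(W, U, samples)
        errors.append(E)                         # error before this update
        W -= eta * gW
        U -= eta * gU
    return W, U, errors

def make_samples(J=4, T=6, seed=1):
    """Hypothetical toy data: J short sequences with an arbitrary smooth target."""
    rng = np.random.default_rng(seed)
    samples = []
    for _ in range(J):
        xs = rng.uniform(-1.0, 1.0, size=(T, 1))
        ds = 0.5 * np.tanh(xs[:, 0])
        samples.append((xs, ds))
    return samples

samples = make_samples()
W, U, errors = train_offline(samples)
print(errors[0], errors[-1])
```

Comparing consecutive entries of `errors` shows whether the error decreases at every iteration for the chosen learning rate `eta`. In this sketch the learning rate is simply picked small by hand; the conditions under which monotonicity is actually guaranteed are those established in the paper, not anything implied by this code.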

Acknowledgments

This work is partly supported by the National Natural Science Foundation of China (10471017).

Cite this article

Xu, D., Li, Z. & Wu, W. Convergence of gradient method for a fully recurrent neural network. Soft Comput 14, 245–250 (2010). https://doi.org/10.1007/s00500-009-0398-0

DOI: https://doi.org/10.1007/s00500-009-0398-0