Abstract

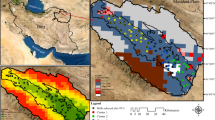

In this paper, a sequential intelligent methodology is implemented to estimate sea-ice thickness along the Labrador coast of Canada from spatio-temporal information provided by the Moderate Resolution Imaging Spectroradiometer (MODIS) and the Advanced Microwave Scanning Radiometer for EOS (AMSR-E) sensors. The proposed intelligent model comprises two separate sub-systems. In the first, clustering is performed to divide the studied region into a set of sub-regions based on a number of features. This learning system then serves as a distributor that dispatches the appropriate information to a set of estimation modules. Each estimation module uses a ridge randomized neural network to map a set of features to sea-ice thickness. The proposed modular intelligent system is well suited to the considered case study because the volume of collected spatio-temporal information is large. To validate the proposed technique, two different spatio-temporal databases are considered, which include remotely sensed brightness temperature data at two frequencies (low frequency, 6.9 GHz, and high frequency, 36.5 GHz) in addition to atmospheric and oceanic variables from validated forecasting models. To demonstrate numerically the accuracy and computational robustness of the designed sequential learning system, two sets of comparative tests are conducted. In the first phase, the emphasis is on evaluating the efficacy of the proposed modular framework with different clustering methods and with different types of estimators at the heart of the estimation modules. In the second phase, the modular estimator is compared with standard neural identifiers to quantify to what extent it increases the accuracy and robustness of the estimation.
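The two-stage structure described above (a clustering distributor that routes each sample to a per-region estimator) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: plain k-means stands in for the paper's differential-evolution-based distributor, and the names `ModularEstimator`, `RidgeRandomNet`, `kmeans` and `nearest`, the tanh activation, the hidden-layer size and the ridge penalty `lam` are all illustrative assumptions. Each module draws its hidden weights at random and keeps them fixed; only the output weights are learned, in closed form, as \(\boldsymbol{\beta}=(\mathbf{H}^{\top}\mathbf{H}+\lambda\mathbf{I})^{-1}\mathbf{H}^{\top}\mathbf{y}\).

```python
import numpy as np


def nearest(X, C):
    """Index of the closest centroid (squared Euclidean distance) for each row of X."""
    return np.argmin(((X[:, None, :] - C[None, :, :]) ** 2).sum(axis=-1), axis=1)


def kmeans(X, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means, used here only as a stand-in for the distributor."""
    rng = np.random.default_rng(seed)
    C = X[rng.choice(len(X), size=k, replace=False)].copy()
    for _ in range(n_iter):
        labels = nearest(X, C)
        for j in range(k):
            if np.any(labels == j):
                C[j] = X[labels == j].mean(axis=0)
    return C


class RidgeRandomNet:
    """One hidden layer with fixed random weights; only the output layer is
    trained, in closed form, by ridge (Tikhonov-regularized) least squares."""

    def __init__(self, n_hidden=50, lam=1e-2, seed=0):
        self.n_hidden, self.lam = n_hidden, lam
        self.rng = np.random.default_rng(seed)

    def _features(self, X):
        return np.tanh(X @ self.W + self.b)

    def fit(self, X, y):
        self.W = self.rng.normal(size=(X.shape[1], self.n_hidden))  # never trained
        self.b = self.rng.normal(size=self.n_hidden)
        H = self._features(X)
        # beta = (H^T H + lam * I)^{-1} H^T y
        A = H.T @ H + self.lam * np.eye(self.n_hidden)
        self.beta = np.linalg.solve(A, H.T @ y)
        return self

    def predict(self, X):
        return self._features(X) @ self.beta


class ModularEstimator:
    """Distributor (clustering) plus one ridge randomized network per cluster."""

    def __init__(self, k=3, n_hidden=50, lam=1e-2):
        self.k, self.n_hidden, self.lam = k, n_hidden, lam

    def fit(self, X, y):
        X, y = np.asarray(X, float), np.asarray(y, float)
        C = kmeans(X, self.k)
        # Keep only centroids that actually received training samples.
        self.C = C[np.unique(nearest(X, C))]
        labels = nearest(X, self.C)
        self.modules = [
            RidgeRandomNet(self.n_hidden, self.lam, seed=j).fit(X[labels == j], y[labels == j])
            for j in range(len(self.C))
        ]
        return self

    def predict(self, X):
        X = np.asarray(X, float)
        labels = nearest(X, self.C)          # dispatch each sample to its module
        out = np.empty(len(X))
        for j, net in enumerate(self.modules):
            mask = labels == j
            if mask.any():
                out[mask] = net.predict(X[mask])
        return out


# Usage on synthetic data (a placeholder for the MODIS/AMSR-E feature vectors).
rng = np.random.default_rng(1)
X = rng.uniform(-1, 1, size=(300, 2))
y = np.sin(3 * X[:, 0]) + X[:, 1]
model = ModularEstimator(k=3).fit(X, y)
rmse = np.sqrt(np.mean((model.predict(X) - y) ** 2))
```

Because each module needs only one linear solve over its own sub-region, training cost scales with the size of each cluster rather than the whole database, which is one plausible reading of why a modular design suits a large spatio-temporal data set.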



Acknowledgments

This work was a part of a project funded by the Natural Sciences and Engineering Research Council (NSERC) of Canada. Their support is gratefully acknowledged.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by V. Loia.

Appendices

Appendix 1

Algorithm 1. Golden sectioning local search

1. Set the lower and upper bounds of the search domain (\(a=-1\) and \(b=1\)).

2. Generate two intermediate values, \(F^{1}(i)\) and \(F^{2}(i)\), as follows:

   $$\begin{aligned} F^{1}(i)&=b-\frac{b-a}{\phi},\\ F^{2}(i)&=a+\frac{b-a}{\phi}, \end{aligned}$$

   where \(\phi=(1+\sqrt{5})/2\approx 1.618\) is the golden ratio.

3. Apply the mutation operator to obtain two different mutant solutions:

   $$\mathbf{v}^{j}(i)=\mathbf{s}_{1}(i)+F^{j}(i)\left(\mathbf{s}_{3}(i)-\mathbf{s}_{2}(i)\right),\quad j=1,2.$$

4. Apply the crossover mechanism to determine the final solutions:

   $$u_{k}^{j}(i)=\begin{cases} s_{k}^{j}(i) & \text{if } \text{rand}(0,1)<\text{CR}(i),\\ v_{k}^{j}(i) & \text{otherwise,} \end{cases}$$

   for \(j=1,2\) and \(k=1,\dots,d\), where \(d\) is the dimensionality of the optimization problem.

5. Evaluate the fitness of each of the two offspring.

6. If \(\mathrm{fitness}(\mathbf{u}^{1}(i))>\mathrm{fitness}(\mathbf{u}^{2}(i))\), then \(\mathbf{u}(i)=\mathbf{u}^{1}(i)\), \(F(i)=F^{1}(i)\) and \(b=F^{2}(i)\); otherwise, \(\mathbf{u}(i)=\mathbf{u}^{2}(i)\), \(F(i)=F^{2}(i)\) and \(a=F^{1}(i)\).

7. If the termination criteria are satisfied, stop; otherwise, return to Step 2.
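Algorithm 1 can be sketched in Python as below. This is a hedged illustration under several assumptions: fitness is maximized (as Step 6 implies), the vector written \(\mathbf{s}^{j}(i)\) in Step 4 is taken to be the target individual \(\mathbf{s}(i)\), and the termination criterion is a fixed iteration budget `n_iter`. The helper `de_trial` and all parameter names are hypothetical.

```python
import numpy as np

PHI = (1 + 5 ** 0.5) / 2  # golden ratio, about 1.618


def de_trial(s_target, s1, s2, s3, F, CR, rng):
    """Mutation v = s1 + F (s3 - s2) followed by the crossover of Step 4:
    component k comes from the target when rand < CR, otherwise from v."""
    v = s1 + F * (s3 - s2)
    keep_target = rng.random(s_target.shape) < CR
    return np.where(keep_target, s_target, v)


def golden_section_F(fitness, s_target, s1, s2, s3, CR, n_iter=20, seed=0):
    """Golden-section search for the scale factor F on [-1, 1] (maximizes fitness)."""
    rng = np.random.default_rng(seed)
    a, b = -1.0, 1.0                        # Step 1: search bounds
    u, F = None, None
    for _ in range(n_iter):                 # Step 7: fixed budget (assumed)
        F1 = b - (b - a) / PHI              # Step 2: interior points
        F2 = a + (b - a) / PHI
        u1 = de_trial(s_target, s1, s2, s3, F1, CR, rng)   # Steps 3-4
        u2 = de_trial(s_target, s1, s2, s3, F2, CR, rng)
        if fitness(u1) > fitness(u2):       # Steps 5-6: shrink the bracket
            u, F, b = u1, F1, F2
        else:
            u, F, a = u2, F2, F1
    return u, F


# Toy one-dimensional problem whose best offspring lies at 0.3.
s1, s2, s3 = np.zeros(1), np.zeros(1), np.ones(1)
u, F = golden_section_F(lambda x: -float(((x - 0.3) ** 2).sum()),
                        np.zeros(1), s1, s2, s3, CR=0.0)
```

With `CR=0.0` no target components are kept, so each offspring equals its mutant and the search simply brackets the scale factor that maximizes fitness.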

Appendix 2

Algorithm 2. Hill climbing local search

1. Define an initial step size \(h\) for the hill climb.

2. Produce three intermediate values, \(F^{1}(i)\), \(F^{2}(i)\) and \(F^{3}(i)\), as follows:

   $$\begin{aligned} F^{1}(i)&=F(i)-h,\\ F^{2}(i)&=F(i),\\ F^{3}(i)&=F(i)+h. \end{aligned}$$

3. Apply the mutation operator with the obtained scale factors to find the mutant solutions:

   $$\mathbf{v}^{j}(i)=\mathbf{s}_{1}(i)+F^{j}(i)\left(\mathbf{s}_{3}(i)-\mathbf{s}_{2}(i)\right),\quad j=1,2,3.$$

4. Apply the crossover mechanism to calculate the final solutions:

   $$u_{k}^{j}(i)=\begin{cases} s_{k}^{j}(i) & \text{if } \text{rand}(0,1)<\text{CR}(i),\\ v_{k}^{j}(i) & \text{otherwise,} \end{cases}$$

   for \(j=1,2,3\) and \(k=1,\dots,d\).

5. Calculate the fitness of each of the three offspring, and let \(F^{*}(i)\) be the scale factor whose offspring \(\mathbf{u}^{*}(i)\) yields the best fitness.

6. If \(F^{*}(i)=F(i)\), then \(h=h/2\); otherwise, \(\mathbf{u}(i)=\mathbf{u}^{*}(i)\) and \(F(i)=F^{*}(i)\).

7. If the termination criteria are satisfied, stop; otherwise, return to Step 2.
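A minimal Python sketch of Algorithm 2 follows, again assuming that fitness is maximized and that \(\mathbf{s}^{j}(i)\) in Step 4 denotes the target individual. The initial step `h=0.1` and the stopping rule (stop once `h` falls below `h_min`, or after `max_iter` passes) are illustrative assumptions, as are the names `hill_climb_F` and `de_trial`.

```python
import numpy as np


def de_trial(s_target, s1, s2, s3, F, CR, rng):
    """Mutation v = s1 + F (s3 - s2), then the crossover of Step 4:
    component k comes from the target when rand < CR, otherwise from v."""
    v = s1 + F * (s3 - s2)
    keep_target = rng.random(s_target.shape) < CR
    return np.where(keep_target, s_target, v)


def hill_climb_F(fitness, s_target, s1, s2, s3, F, CR,
                 h=0.1, h_min=1e-4, max_iter=100, seed=0):
    """Hill-climbing search for the scale factor F (maximizes fitness)."""
    rng = np.random.default_rng(seed)
    u = de_trial(s_target, s1, s2, s3, F, CR, rng)    # offspring for the starting F
    for _ in range(max_iter):
        if h < h_min:                                  # Step 7: assumed stopping rule
            break
        candidates = [F - h, F, F + h]                 # Step 2
        trials = [de_trial(s_target, s1, s2, s3, Fj, CR, rng)
                  for Fj in candidates]                # Steps 3-4
        best = int(np.argmax([fitness(t) for t in trials]))  # Step 5
        if best == 1:                                  # Step 6: F*(i) = F(i), halve h
            h /= 2
        else:                                          # otherwise move to F*(i)
            u, F = trials[best], candidates[best]
    return u, F


s1, s2, s3 = np.zeros(1), np.zeros(1), np.ones(1)
u, F = hill_climb_F(lambda x: -float(((x - 0.3) ** 2).sum()),
                    np.zeros(1), s1, s2, s3, F=0.5, CR=0.0)
```

Unlike the golden-section variant, this search needs a starting scale factor and can move outside any fixed interval; halving `h` only when no neighbour improves makes the step size adapt to the local shape of the fitness landscape.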

Appendix 3

Algorithm 3. Standard operators

1. Let \(F_{\ell}\) and \(F_{u}\) be the minimum and maximum scale factor values in Table 2, respectively. Update the scale factor using the following formula:

   $$F(i)=\begin{cases} F_{\ell}+F_{u}u_{1} & \text{if } u_{2}<\tau_{1},\\ F(i) & \text{otherwise,} \end{cases}$$

   where \(u_{1}\) and \(u_{2}\) are uniform random numbers in \([0,1]\) and \(\tau_{1}\) is the probability of updating \(F(i)\).

2. Update the crossover rate using the following formula:

   $$\text{CR}(i)=\begin{cases} u_{3} & \text{if } u_{4}<\tau_{2},\\ \text{CR}(i) & \text{otherwise,} \end{cases}$$

   where \(u_{3}\) and \(u_{4}\) are uniform random numbers in \([0,1]\) and \(\tau_{2}\) is the probability of updating \(\text{CR}(i)\).

3. Apply the mutation operator to find the mutant solution:

   $$\mathbf{v}(i)=\mathbf{s}_{1}(i)+F(i)\left(\mathbf{s}_{3}(i)-\mathbf{s}_{2}(i)\right).$$

4. Apply the crossover mechanism to calculate the final solution:

   $$u_{k}(i)=\begin{cases} s_{k}(i) & \text{if } \text{rand}(0,1)<\text{CR}(i),\\ v_{k}(i) & \text{otherwise,} \end{cases}$$

   for \(k=1,\dots,d\).
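The standard operators of Algorithm 3 follow a self-adaptive pattern similar to jDE, and can be sketched in Python as below. The default constants `F_l=0.1`, `F_u=0.9`, `tau1=0.1` and `tau2=0.1` are placeholders (settings often used with jDE), not the values of Table 2, and the function name `jde_step` is hypothetical.

```python
import numpy as np


def jde_step(s_target, s1, s2, s3, F, CR, rng,
             F_l=0.1, F_u=0.9, tau1=0.1, tau2=0.1):
    """One pass of the standard operators: self-adapt F and CR, then
    mutate and cross over. Default constants are placeholders."""
    if rng.random() < tau1:                 # Step 1: u2 < tau1
        F = F_l + F_u * rng.random()        #         F = F_l + F_u * u1
    if rng.random() < tau2:                 # Step 2: u4 < tau2
        CR = rng.random()                   #         CR = u3
    v = s1 + F * (s3 - s2)                  # Step 3: mutation
    keep_target = rng.random(s_target.shape) < CR   # Step 4: crossover
    u = np.where(keep_target, s_target, v)
    return u, F, CR


rng = np.random.default_rng(0)
F, CR = 0.5, 0.9
for _ in range(5):
    u, F, CR = jde_step(np.zeros(3), np.ones(3), np.zeros(3), np.ones(3), F, CR, rng)
```

Since \(F\) and \(\text{CR}\) travel with each individual and are only occasionally resampled, parameter values that produce surviving offspring tend to persist, which is the intended self-adaptation.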


About this article

Cite this article

Mozaffari, A., Scott, K.A., Chenouri, S. et al. A modular ridge randomized neural network with differential evolutionary distributor applied to the estimation of sea ice thickness. Soft Comput 21, 4635–4659 (2017). https://doi.org/10.1007/s00500-016-2074-5
