Abstract

Classifiers based on the relative transformation are effective at classifying noisy, sparse, and high-dimensional data. However, the relative transformation simply maps features from the original space to the relative space using Euclidean distances, and it ignores many other aspects of human perception. For example, to identify an object, humans may notice the differences among similar objects and adjust their recognition according to the densities of classes. To simulate these two human perceptions, this paper first modifies the cognitive gravity model with a new mass-estimation method and a Gaussian transformation, and then applies the modified model to redefine the relative transformation, denoted as CGRT. Subsequently, a new classifier is designed that uses CGRT to transform the original space into the relative space, in which classification is performed. Experiments on challenging benchmark datasets validate CGRT and the designed classifier.

References

Bergman TJ, Beehner JC, Cheney DL, Seyfarth RM (2003) Hierarchical classification by rank and kinship in baboons. Science 302(5648):1234–1236

Burges CJC (1998) A tutorial on support vector machines for pattern recognition. Data Mining Knowl Discov 2(2):121–167

Cai X, Wen G, Wei J, Li J, Yu Z (2014) Perceptual relativity-based semi-supervised dimensionality reduction algorithm. Appl Soft Comput 16:112–123

Chang C-C, Lin C-J (2011) LIBSVM—a library for support vector machines. ACM Trans Intell Syst Technol 2(3):1–27

Chen J, Yi Z (2014) Sparse representation for face recognition by discriminative low-rank matrix recovery. J Vis Commun Image Represent 25(5):763–773

Cover T, Hart P (1967) Nearest neighbor pattern classification. IEEE Trans Inf Theory 13(1):21–27

Desolneux A, Moisan L, Morel JM (2002) Computational gestalts and perception thresholds. J Physiol Paris 97(2–3):311–324

Feng Z, Yang M, Zhang L, Liu Y, Zhang D (2014) Joint discriminative dimensionality reduction and dictionary learning for face recognition. In: Proceedings of the international conference on computer vision and pattern recognition (CVPR), Columbus

He X, Cai D, Yan S, Zhang HJ (2005) Neighborhood preserving embedding. In: Proceedings of the international conference on computer vision (ICCV), Beijing, pp 1208–1213

He X, Niyogi P (2003) Locality preserving projections. In: Proceedings of the advances in neural information processing systems (NIPS), Vancouver, pp 585–591

Huang H, Li J, Liu J (2012) Enhanced semi-supervised local Fisher discriminant analysis for face recognition. Future Gener Comput Syst 28(1):244–253

Huang G, Song S, Gupta JND, Wu C (2013) A second order cone programming approach for semi-supervised learning. Pattern Recognit 46(12):3548–3558

ISOLET Data Set. http://archive.ics.uci.edu/ml/datasets/ISOLET

Wei J, Peng H (2008) Local and global preserving based semi-supervised dimensionality reduction method. J Softw 19(11):2833–3842

Jiang Z, Lin Z, Davis LS (2013) Label consistent K-SVD: learning a discriminative dictionary for recognition. IEEE Trans Pattern Anal Mach Intell 35(11):2651–2664

Mairal J, Bach F, Ponce J, Sapiro G (2009) Online dictionary learning for sparse coding. In: Proceedings of the international conference on machine learning (ICML), pp 689–696

Martinez A, Benavente R (1998) The AR face database. CVC technical report 24

Martinez AM, Kak AC (2001) PCA versus LDA. IEEE Trans Pattern Anal Mach Intell 23(2):228–233

Nasiri JA, Charkari NM, Mozafari K (2014) Energy-based model of least squares twin Support Vector Machines for human action recognition. Signal Process 104:248–257

Nene SA, Nayar SK, Murase H (1996) Columbia object image library (COIL-20). Technical report CUCS-005-96

Ogiela L, Ogiela MR (2011) Semantic analysis processes in advanced pattern understanding systems. In: Proceedings of the international conference on advanced science and technology, Jeju Island, pp 26–30

Ogiela L, Ogiela MR (2014) Cognitive systems for intelligent business information management in cognitive economy. Int J Inf Manag 34(6):751–760

Ogiela L, Ogiela MR (2014) Cognitive systems and bio-inspired computing in homeland security. J Netw Comput Appl 38:34–42

Pan Rotation Face Database. http://robotics.csie.ncku.edu.tw/

Peng L, Yang B, Chen Y, Abraham A (2009) Data gravitation based classification. Inf Sci 179(6):809–819

Samaria FS, Harter AC (1994) Parameterization of a stochastic model for human face identification. In: Proceedings of the IEEE workshop on applications of computer vision (ACV), Sarasota, pp 138–142

Shang F, Jiao LC, Liu Y (2013) Semi-supervised learning with nuclear norm regularization. Pattern Recognit 46(8):2323–2336

Sim T, Baker S, Bsat M (2002) The CMU pose, illumination, and expression (PIE) database. In: Proceedings of the IEEE international conference on automatic face and gesture recognition (AFGR), Washington, pp 46–51

Sujay Raghavendra N, Paresh Chandra D (2014) Support vector machine applications in the field of hydrology: a review. Appl Soft Comput 19:372–386

Wang Q, Yuen PC, Feng G (2013) Semi-supervised metric learning via topology preserving multiple semi-supervised assumptions. Pattern Recognit 46(9):2576–2578

Wen G, Jiang L, Wen J (2009) Local relative transformation with application to isometric embedding. Pattern Recognit Lett 30(3):203–211

Wen G (2009) Relative transformation-based neighborhood optimization for isometric embedding. Neurocomputing 72(4–6):1205–1213

Wen G, Jiang L, Wen J, Wei J, Yu Z (2012) Perceptual relativity-based local hyperplane classification. Neurocomputing 97(15):155–163

Wen G, Wei J, Wang J, Zhou T, Chen L (2013) Cognitive gravitation model for classification on small noisy data. Neurocomputing 118(22):245–252

Wen G, Jiang L (2011) Relative local mean classifier with optimized decision rule. In: Proceedings of the international conference on computational intelligence and security (CIS), Hainan, pp 477–481

Wen G, Wei J, Yu Z, Wen J, Jiang L (2011) Relative nearest neighbors for classification. In: Proceedings of the international conference on machine learning and cybernetics (ICMLC), Guilin, pp 773–778

Wright J, Yang AY, Ganesh A, Sastry SS, Ma Y (2009) Robust face recognition via sparse representation. IEEE Trans Pattern Anal Mach Intell 31(2):210–227

Yang M, Zhang L, Feng X, Zhang D (2011) Fisher discrimination dictionary learning for sparse representation. In: Proceedings of the international conference on computer vision (ICCV), Washington, pp 543–550

Zhao M, Zhang Z, Chow TWS (2012) Trace ratio criterion based generalized discriminative learning for semi-supervised dimensionality reduction. Pattern Recognit 45(4):1482–1499

Acknowledgments

This work was supported by the China National Science Foundation under Grants 60973083 and 61273363, and by the State Key Laboratory of Brain and Cognitive Science under Grant 08B12.

Ethics declarations

Conflict of interest

All authors declare that they have no conflicts of interest.

Additional information

Communicated by V. Loia.

Appendix 1

Proposition 1

I(x) decreases as \(f_d(x)\) increases.

Proof

Given the training samples, \(\sum \nolimits _{i=1}^n {f_d(x_i)} \) can be regarded as a constant, denoted by \(\eta \), i.e. \(\sum \nolimits _{i=1}^n {f_d(x_i)} =\eta \). Then \(\mathrm{d}I(x)/\mathrm{d}\left\| {x-x_i} \right\| _2\) can be computed as follows:

It can be seen from Eq. (16) that \(\mathrm{d}I(x)/\mathrm{d}\left\| {x-x_i} \right\| _2 >0\). Furthermore, for \(x_i\in N_t(x)\), where \(N_t(x)\) is the set of the t samples with the smallest Euclidean distances to x, \(\left\| {x-x_i} \right\| _2\) decreases as the density of x increases. Therefore, I(x) decreases as the density \(f_d(x)\) increases.

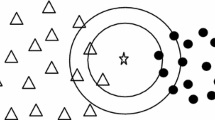

This proposition makes I(x) a good candidate for estimating the mass in the cognitive gravity model: if I(x) decreases as \(f_d(x)\) increases, the gravity between two samples also decreases as \(f_d(x)\) increases, so the goal of CGM (Wen et al. 2013) shown in Fig. 2 can be achieved. \(\square \)
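The key step in the proof of Proposition 1, that \(\left\| {x-x_i} \right\| _2\) for \(x_i\in N_t(x)\) shrinks as the density around x grows, can be checked numerically. The following is a minimal sketch using only the Python standard library; the helper `knn_distances` and the two toy point sets are hypothetical illustrations, and I(x) itself is not computed because its definition is not reproduced here.

```python
import math

def knn_distances(x, samples, t):
    """Return the sorted Euclidean distances from x to the t samples in N_t(x),
    i.e. its t nearest samples (x itself is excluded)."""
    dists = sorted(math.dist(x, s) for s in samples if s != x)
    return dists[:t]

x = (0.0, 0.0)
# Hypothetical toy data: the same 1-D layout around x at two densities.
dense = [(0.1 * i, 0.0) for i in range(-5, 6)]    # spacing 0.1 (high density)
sparse = [(1.0 * i, 0.0) for i in range(-5, 6)]   # spacing 1.0 (low density)

t = 4
dense_mean = sum(knn_distances(x, dense, t)) / t
sparse_mean = sum(knn_distances(x, sparse, t)) / t
print(f"mean distance to N_t(x): dense={dense_mean:.3f}, sparse={sparse_mean:.3f}")
```

With the same number of neighbors t, the denser configuration yields uniformly smaller distances, which is the monotone relation the proof relies on.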

Proposition 2

I(x) increases nonlinearly with n.

Proof

Redefine \(\sum \nolimits _{i=1}^n {f_d(x_i)} =z\), where z changes with n, and rewrite I(x) as:

\(\mathrm{d}I(x)/\mathrm{d}z\) can be computed as:

Clearly, \(\mathrm{d}I(x)/\mathrm{d}z>0\) and \(\mathrm{d}I(x)/\mathrm{d}z\) is not constant. Since z grows as training samples are added (each added term \(f_d(x_i)\) is a nonnegative density), I(x) increases nonlinearly with the number of training samples.

This proposition shows that I(x) is a poor estimate of the mass in the cognitive gravity model: if \(I(x_i)/I(x_j)\) changes nonlinearly with the number of training samples, so does \(\hat{F}(x_i,x_t)/\hat{F}(x_j,x_t)\), and the densities of samples in the relative space then also change nonlinearly with the number of training samples. \(\square \)

Proposition 3

Equation (9) is a good estimate of the mass of the cognitive gravity model.

Proof

Let \(X^{-}=\{x_1,x_2,\ldots ,x_n\}\) be an arbitrary dataset, and let \(X^{+}=\{x_1,x_2,\ldots ,x_n,x_{n+1},\ldots ,x_s\}\) consist of \(X^{-}\) together with \(\{x_{n+1},\ldots ,x_s\}\), where the samples \(\{x_{n+1},\ldots ,x_s\}\) are far from \(\{x_1,x_2,\ldots ,x_n\}\). We use \(x_i^{-} \) and \(x_i^{+} \) to denote the i-th sample of \(X^{-}\) and \(X^{+}\), respectively.

Because \(\{x_{n+1}^{+} ,\ldots ,x_s^{+} \}\) are far from \(\{x_1^{+} ,x_2^{+} ,\ldots ,x_n^{+} \}\), adding them leaves the densities of the first n samples essentially unchanged, i.e. \(f_d(x_i^{-} )\approx f_d(x_i^{+} ),i=1,2,\ldots ,n\). Hence, to prove that m(x) is correctly estimated by Eq. (9), it suffices to prove that \(m(x_i^{-} )\approx m(x_i^{+})\) and that \(m(x_i^{-})/m(x_j^{-} )\) is suitable.

We first prove that \(m(x_i^{-} )\approx m(x_i^{+} )\). From Eq. (9), \(m(x_i^{-} )\) and \(m(x_i^{+} )\) can be computed as:

According to Eq. (12), we have

where \(z^{-}=\sum \nolimits _{x_u^{-} \in X^{-}} {f_d^{\prime } (x_u^{-} )} \), \(z^{+}=\sum \nolimits _{x_u^{+} \in X^{+}} {f_d^{\prime } (x_u^{+} )} \), and \(\zeta \) is a constant. Substituting \(z^{-}\delta (I(x_i^{-} ))\approx z^{+}\delta (I(x_i^{+} ))\) and \(z^{-}\bar{I}(x_i^{-} )\approx z^{+}\bar{I}(x_i^{+} )+\zeta \) into Eq. (19), we have:

We further prove that \(m(x_i^{-} )/m(x_j^{-} )\) is suitable. It can be seen from Eq. (21) that, if \(\delta (m(x))=\delta (I(x^{+}))\) and \(\bar{m}(x)=\bar{I}(x^{+})\), then \(m(x_i^{-} )=m(x_i^{+} )=I(x_i^{+} )\), and hence \(m(x_i^{-} )/m(x_j^{-} )=I(x_i^{+} )/I(x_j^{+} )\); if \(\delta (m(x))=\delta (I(x^{-}))\) and \(\bar{m}(x)=\bar{I}(x^{-})\), then \(m(x_i^{+} )=m(x_i^{-} )=I(x_i^{-} )\), and hence \(m(x_i^{+} )/m(x_j^{+} )=I(x_i^{-} )/I(x_j^{-} )\). Similarly, if \(\delta (m(x))\) and \(\bar{m}(x)\) are set to the standard deviation and mean of the correctly estimated \(m(x_i),i=1,2,\ldots ,n\), then \(m(x_i^{-} )/m(x_j^{-} )\) is suitable.

The proof proceeds as follows. We first construct two datasets with different numbers of samples, in which the densities of the first n samples in dataset 1 equal those of the first n samples in dataset 2. We then prove that the masses of the first n samples in dataset 1 are nearly the same as those of the first n samples in dataset 2, which means that m(x) no longer changes with the number of training samples. Finally, we prove that the ratios \(m(x_i)/m(x_j)\) are suitable when m(x) is estimated by Eq. (9). These two properties of m(x) make Eq. (9) a good estimate of the mass of the cognitive gravity model. \(\square \)
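To make the mechanism of Proposition 3 concrete, the sketch below assumes that Eq. (9) has the standardize-then-rescale form \(m(x)=\bar{m}(x)+\delta (m(x))\,(I(x)-\bar{I}(x))/\delta (I(x))\). That form is an assumed reading, consistent with the special cases derived from Eq. (21) in the proof, not a quotation of Eq. (9). The sketch also models \(I(x_i^{+})\) as an affine function of \(I(x_i^{-})\), which reproduces the two relations substituted into Eq. (19), and, for simplicity, computes all statistics over the n shared samples only. The helper `mass_from_I`, the I values, and the constants `z_minus`, `z_plus`, and `zeta` are hypothetical.

```python
import statistics

def mass_from_I(I_values, target_mean, target_std):
    """Assumed form of Eq. (9): standardize I(x) with its own mean and
    (population) standard deviation, then rescale to the target statistics."""
    mu = statistics.fmean(I_values)
    sd = statistics.pstdev(I_values)
    return [target_mean + target_std * (v - mu) / sd for v in I_values]

# Hypothetical I(x) values of the n shared samples computed within X^-.
I_minus = [0.8, 1.1, 1.5, 2.0, 2.6]

# Hypothetical constants; I(x_i^+) is modelled as a*I(x_i^-) + b with
# a = z^-/z^+ > 0 and b = -zeta/z^+, which yields
# z^- * std(I^-) = z^+ * std(I^+) and z^- * mean(I^-) = z^+ * mean(I^+) + zeta.
z_minus, z_plus, zeta = 10.0, 14.0, 0.7
I_plus = [(z_minus / z_plus) * v - zeta / z_plus for v in I_minus]

# The same target statistics are used for both datasets.
m_minus = mass_from_I(I_minus, target_mean=1.0, target_std=0.5)
m_plus = mass_from_I(I_plus, target_mean=1.0, target_std=0.5)

for i, (a, b) in enumerate(zip(m_minus, m_plus), start=1):
    print(f"x_{i}: m(x^-)={a:.4f}  m(x^+)={b:.4f}")
```

Because standardized scores are unchanged by a positive affine map, the masses of the shared samples coincide, which is the property \(m(x_i^{-})\approx m(x_i^{+})\) established in the proof. Passing the mean and standard deviation of `I_plus` as the targets returns `I_plus` itself, matching the first special case derived from Eq. (21).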

Proposition 4

\(\bar{m}(x)\) and \(\delta (m(x))\) can be correctly computed by Eqs. (10) and (11).

Proof

Before explaining why \(\bar{m}(x)\) and \(\delta (m(x))\) can be correctly computed by Eqs. (10) and (11), we first give, in Eq. (22), another way to estimate m(x) for datasets whose samples are evenly distributed. Because all densities are the same in such a dataset, only the influences from the higher-order groups need to be considered, so m(x) can be correctly computed by Eq. (22) with a suitable value of k.

where \(x^k\) is the \(k^\mathrm{th}\) nearest neighbor of x.

Fig. 13 Two cases in which m(x) cannot be correctly estimated by Eq. (22)

However, Eq. (22) cannot estimate the correct m(x) for datasets whose samples are arbitrarily distributed, as illustrated in Fig. 13a. Figure 13 shows that \(m(x_A)\) equals \(m(x_B)\) when \(m(x_A)\) is estimated by \(\left\| {x_A-x_C} \right\| _2 \) and \(m(x_B)\) by \(\left\| {x_B-x_D} \right\| _2 \), although \(m(x_A)\) should be larger than \(m(x_B)\). Nevertheless, Eq. (22) can still be used to estimate \(\bar{m}(x)\) and \(\delta (m(x))\) correctly.

For \(\bar{m}(x)\): if samples are evenly distributed around \(x_A\) and \(x_B\), m(x) is correctly estimated by Eq. (22), and hence \(\bar{m}(x)\) is correctly estimated by Eq. (10). If instead the samples are arbitrarily distributed around \(x_A\) and \(x_B\), as illustrated in Fig. 13, \(m(x_A)\) is underestimated by \(\left\| {x_A-x_C} \right\| _2 \) in Fig. 13a and \(m(x_B)\) is overestimated by \(\left\| {x_B-x_D} \right\| _2 \) in Fig. 13b. Statistically, these two cases are equally likely, so the under- and overestimates cancel on average and \(\bar{m}(x)\) can still be estimated by Eq. (10).

For \(\delta (m(x))\): if samples are evenly distributed around \(x_A\) and \(x_B\), m(x) is correctly estimated by Eq. (22), and hence \(\delta (m(x))\) can be estimated by Eq. (11). If instead the samples are arbitrarily distributed around \(x_A\) and \(x_B\), as illustrated in Fig. 13, the larger \(m(x_A)\) is underestimated by \(\left\| {x_A-x_C} \right\| _2 \) and the smaller \(m(x_B)\) is overestimated by \(\left\| {x_B-x_D} \right\| _2 \). This pulls the estimates toward each other, so \(\delta (m(x))\) is only slightly smaller than its ideal value. The shortfall is corrected by multiplying by a factor \(\lambda >1\), as in Eq. (11). For most datasets, \(\lambda \) can be set to 1.5, since the experimental results vary little for \(1.3<\lambda <1.7\).

This proposition gives a way to correctly estimate \(\bar{m}(x)\) and \(\delta (m(x))\) when \(m(x_i),i=1,2,\ldots ,n\) are unknown; these estimates can then be used to compute m(x) by Eq. (9). \(\square \)
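Proposition 4 can be sketched in code under explicit assumptions, since the bodies of Eqs. (10), (11), and (22) are not reproduced in this appendix: Eq. (22) is taken to be the Euclidean distance from x to its k-th nearest neighbor \(x^k\), Eq. (10) the mean of these per-sample estimates, and Eq. (11) \(\lambda \) times their population standard deviation. The function names `kth_neighbor_distance` and `mass_statistics` and the toy point set are hypothetical; \(\lambda =1.5\) follows the value suggested in the text.

```python
import math
import statistics

def kth_neighbor_distance(i, samples, k):
    """Assumed form of Eq. (22): Euclidean distance from samples[i] to its
    k-th nearest neighbor x^k among the other samples."""
    x = samples[i]
    dists = sorted(math.dist(x, s) for j, s in enumerate(samples) if j != i)
    return dists[k - 1]

def mass_statistics(samples, k, lam=1.5):
    """Assumed forms of Eqs. (10) and (11): the mean of the Eq. (22) estimates,
    and lam times their population standard deviation (lam > 1 compensates
    for the shrinkage of the spread described in the proof)."""
    estimates = [kth_neighbor_distance(i, samples, k) for i in range(len(samples))]
    return statistics.fmean(estimates), lam * statistics.pstdev(estimates)

# Hypothetical toy data: six points on a line with unit spacing.
points = [(float(i), 0.0) for i in range(6)]
m_bar, delta_m = mass_statistics(points, k=2, lam=1.5)
print(f"mean of m(x): {m_bar:.4f}, delta(m(x)): {delta_m:.4f}")
```

Using `statistics.stdev` (the sample standard deviation) instead of `statistics.pstdev` is an equally plausible reading of Eq. (11); for a fixed number of samples n it only rescales \(\delta (m(x))\) by the constant \(\sqrt{n/(n-1)}\).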

About this article

Cite this article

Sun, Y., Wen, G. Cognitive gravitation model-based relative transformation for classification. Soft Comput 21, 5425–5441 (2017). https://doi.org/10.1007/s00500-016-2131-0

DOI: https://doi.org/10.1007/s00500-016-2131-0